Test-infra: run_after_success behavior when testing both the parent and child job needs fixing

If you /test both a parent and child job, you trigger both immediately if there is a previous run of the parent job. I'm not sure what we want to do here, but I think this is going to need discussion before we can rely on run_after_success / shared builds. At the very least we have a problem with the status being inaccurate:

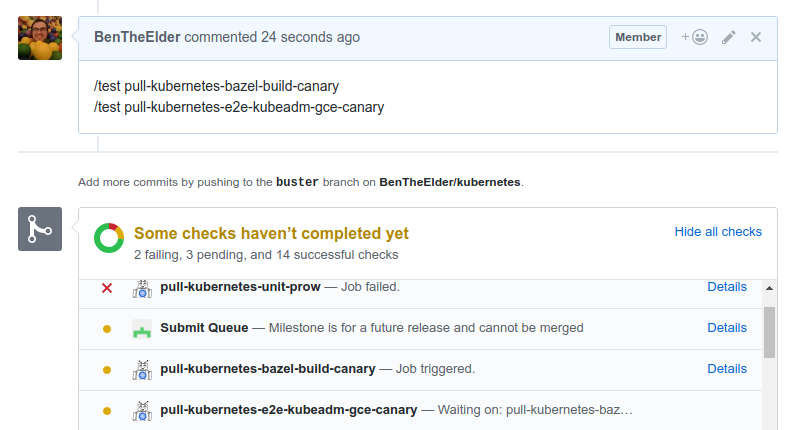

comment & status:

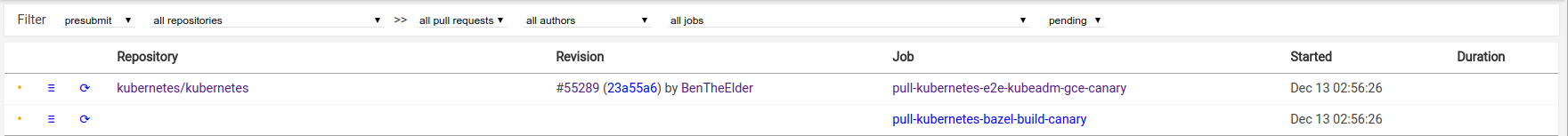

deck:

comment & log links:

/area prow

/kind bug

/cc @cjwagner @mindprince @krzyzacy @spiffxp

xref: https://github.com/kubernetes/test-infra/issues/5436#issuecomment-351255948, https://github.com/kubernetes/test-infra/pull/5832#issuecomment-351230605

All 19 comments

From the kubeadm canary:

W1213 02:57:17.319] Getting shared build location from: gs://kubernetes-jenkins/shared-results/master:38e33513126e2090b578531b7c3919348bf6b167,55289:23a55a6e3f921f26620c1d6fd7d887fdf9394f8c/bazel-build-location.txt

W1213 02:57:17.345] Read GCS Path: gs://kubernetes-release-dev/bazel/v1.10.0-alpha.0.865+f6921f775e62e5

Since we're using the PULL_REFS to key where the shared builds are at, I think this will fall back to doing a local build if you push a new commit and then /test child as well, which is not ideal :confused:

Currently report.Report only handles reporting one context at a time. In order to handle this case correctly it will need to be be able to look up the state of child jobs and the results must be from the current sync loop in case the child was just triggered. This will require a bit of a refactor to the controllers, but it shouldn't be too ugly.

if you push a new commit and then /test child as well

I'm not sure what the desired behavior is in terms of triggering the jobs in this scenario. Is RunAfterSuccess intended to be a possible trigger of the job or the only way to trigger the job? RunIfChanged jobs can be triggered by a changed file or a /test command.

I'm not sure what the desired behavior is in terms of triggering the jobs in this scenario.

For my use case I think the desired behavior is that pushing a commit invalidates child jobs, but I think the desired behavior is an open question. @sebastienvas, @yutongz are you still using run_after_success? If so what would your desired behavior be?

pushing a commit invalidates child jobs

I'm not certain what you mean by this. Do you mean that child jobs cannot be manually triggered when a new commit is pushed and the parent job has not yet succeeded? Or in other words, child jobs cannot be manually triggered unless their parent job has already succeeded?

Yeah, I think run_after_success jobs would ideally require the parent to be in a success state to trigger, and that the success state should be cleared from the whole chain if the parent job is cleared (EG after a push).

In particular if we want to ensure that shared builds are viable, we need to make sure that the status of the child jobs is genuinely for the current commit, which can be dodged if you trigger a child job before the parent is triggered since it actually ran against the build form the last commit.

I'm not sure about other use cases though, or how reasonable this is from an implementation perspective. I think it makes sense but I'm definitely fishing for thoughts on this.

In particular if we want to ensure that shared builds are viable, we need to make sure that the status of the child jobs is genuinely for the current commit, which can be dodged if you trigger a child job before the parent is triggered since it actually ran against the build form the last commit.

I don't think this is currently possible because the child job would report a status for the old commit sha not the current sha that the parent is running against.

We may not be able to allow manually triggering child jobs at all if we go this route. If we allow the child job to be manually retriggered after the parent job has passed, the parent could be retriggered and fail while the child succeeds. Is this an acceptable state to be in as far as shared builds go?

I don't think this is currently possible because the child job would report a status for the old commit sha not the current sha that the parent is running against.

We may not be able to allow manually triggering child jobs at all if we go this route. If we allow the child job to be manually retriggered after the parent job has passed, the parent could be retriggered and fail while the child succeeds. Is this an acceptable state to be in as far as shared builds go?

Can't we solve this by just canceling the jobs when you push a new commit?

When a new commit is pushed:

- cancel currently running jobs

- clear the status for all jobs

?

If a new commit is pushed duplicate termination should handle everything. I'm referring to manually triggered reruns of a job on the same commit.

Can't we terminate those too?

You should be able to manually rerun once a commit has green for the parent

job. We could check the parent status when manually triggering child jobs?

On Wed, Dec 13, 2017, 13:55 Cole Wagner notifications@github.com wrote:

If a new commit is pushed duplicate termination should handle everything.

I'm referring to manually triggered reruns of a job on the same commit.—

You are receiving this because you were assigned.

Reply to this email directly, view it on GitHub

https://github.com/kubernetes/test-infra/issues/5922#issuecomment-351537525,

or mute the thread

https://github.com/notifications/unsubscribe-auth/AA4BqzCvCsy7NLGRrh8dLPB1PTfxR4XQks5tAEfEgaJpZM4Q_8es

.

It looks like we actually do terminate dupes for new jobs on the same commit. We just consider a job a duplicate if it has the same job name, org, repo, and number.

You should be able to manually rerun once a commit has green for the parent

job. We could check the parent status when manually triggering child jobs?

I'm thinking of the case where we get a /test child rerun command, we check that the parent has succeeded (it has), and then trigger a rerun of the child job. Now that the child job is running the parent could be retriggered with /test parent and the parent could fail. Is this problematic for shared builds?

I'm thinking of the case where we get a /test child rerun command, we check that the parent has succeeded (it has), and then trigger a rerun of the child job. Now that the child job is running the parent could be retriggered with /test parent and the parent could fail. Is this problematic for shared builds?

Not particularly as long as it's the same commit. If the build job is flaky or has non-reproducible output then someone needs to fix the build output. Not sure about other use cases.

Ok, this seems safe for our use case then because if the parent was running on a new commit, the child results would not be reported to the status context for the current commit on the PR.

Not sure about other use cases.

/shrug

If we come across another use case that doesn't want to allow certain child jobs to be manually triggered at all we can make it possible to prevent jobs from being manually triggered. Perhaps just allowing the rerun command regex to be empty and using that to indicate no manual triggers are allowed.

I spoke to @sebastienvas about this and we seem to be on the same page (istio is using run_after_success to have a first step verify / upload artifacts then an e2e).

It also looks like openshift does not currently use run_after_success, I doubt anyone else is.

@munnerz?

Following up: we checked with other users and we're quite sure nobody besides kubernetes and istio uses run_after_success currently.

We're punting on this for the moment but would definitely like to get back to this so we can reduce unnecessary load on the prow bulid cluster

/priority important-soon

Issues go stale after 90d of inactivity.

Mark the issue as fresh with /remove-lifecycle stale.

Stale issues rot after an additional 30d of inactivity and eventually close.

If this issue is safe to close now please do so with /close.

Send feedback to sig-testing, kubernetes/test-infra and/or fejta.

/lifecycle stale

Stale issues rot after 30d of inactivity.

Mark the issue as fresh with /remove-lifecycle rotten.

Rotten issues close after an additional 30d of inactivity.

If this issue is safe to close now please do so with /close.

Send feedback to sig-testing, kubernetes/test-infra and/or fejta.

/lifecycle rotten

/remove-lifecycle stale

Rotten issues close after 30d of inactivity.

Reopen the issue with /reopen.

Mark the issue as fresh with /remove-lifecycle rotten.

Send feedback to sig-testing, kubernetes/test-infra and/or fejta.

/close