Three.js: InstancedMesh uses an incorrect normal matrix

As discussed here.

The vertex normal should be transformed by the normal matrix computed from the instanceMatrix.

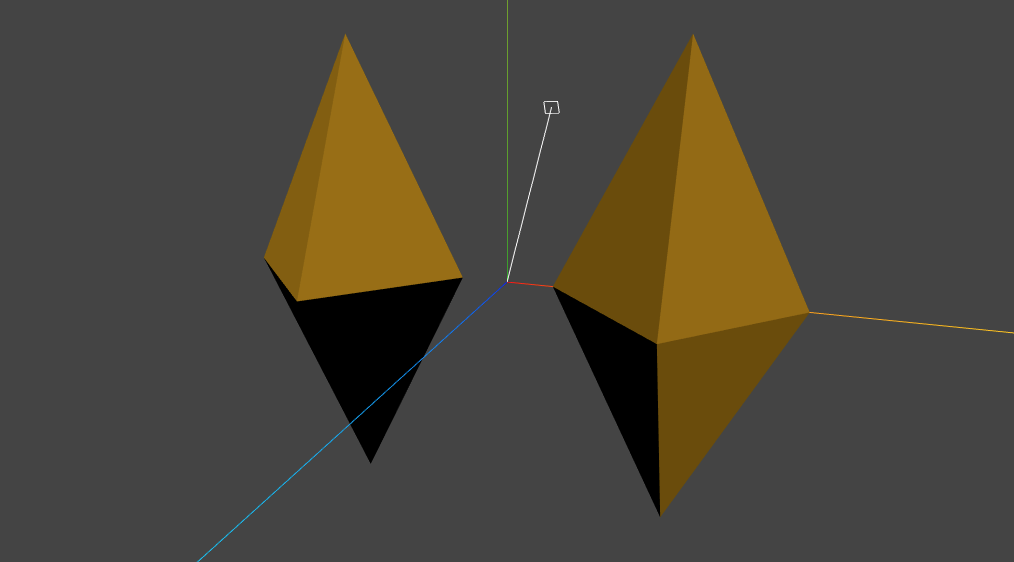

In this image, the mesh on the left is an InstancedMesh having a single instance. The image on the right is a non-instanced Mesh. They should have identical shading.

Three.js version

- [ x ] r113 dev

- [ x ] r112

All 6 comments

Ah, so the problem is not that instancedMesh.scale.y = 2 doesn't work but that one of the instance has a non-uniform scale.

Would supporting non-uniform scaling per instance have a big performance penalty?

FWIW normal matrix isn't the only way to transform normals, assuming the object matrix uses rotation and (non-uniform) scale there's an alternative formulation.

I'm going to use column vectors below, so the vertex transform is T*R*S*v with a decomposed matrix. The canonical formulation for normal matrix suggests using NM = inverse(transpose(R*S)).

inverse(transpose(R*S)) = inverse(transpose(S) * transpose(R)) = inverse(transpose(R)) * inverse(transpose(S)), where R is a rotation matrix and S is a diagonal matrix with scale values for each axis.

inverse(transpose(R)) = R, and transpose(S) = S, so the above is equal to R * inverse(S), which is equal to R * S * inverse(S) * inverse(S).

Thus it's sufficient to pre-transform the object-space normal using inverse(S)^2 - since S is a diagonal, if you know the scale values this just involves dividing the normal by scale^2.

You can recover the scale values from the combined R*S matrix by measuring the length of basis vectors.

This shader code illustrates the construction:

vec3 RX = modelViewMatrix[0].xyz;

vec3 RY = modelViewMatrix[1].xyz;

vec3 RZ = modelViewMatrix[2].xyz;

vec3 transformedNormal = objectNormal;

transformedNormal /= vec3(dot(RX, RX), dot(RY, RY), dot(RZ, RZ));

transformedNormal = (modelViewMatrix * vec4(transformedNormal, 0.0)).xyz;

transformedNormal = normalize(transformedNormal);

The cost of this correction is three dot products and a vector division, which seems reasonable. If both instance matrix and object matrix can carry non-uniform scale then I think you will need to run this code twice.

Something like this could be used as a generic normal transform function (assuming a normalization step is ran after this):

vec3 transformNormal(vec3 v, mat3 m)

{

return m * (v / vec3(dot(m[0], m[0]), dot(m[1], m[1]), dot(m[2], m[2])));

}

edit The above assumes that the transform matrix can be decomposed into R*S, which isn't true of an arbitrary sequence of rotation-scale transforms, but is probably true for instance matrix transform - so I'm assuming this can be combined with using normalMatrix for handling the general scene graph transform. So this might be useful not as a replacement for existing normalMatrix, but purely as a way to correct instanceMatrix transformation in the shader.

@zeux The API that @mrdoob selected for InstancedMesh does not involve setting a position, quaternion, and scale per instance. Instead, the user specifies the matrix transform directly.

Consequently, the transform can be any affine matrix. Your solution helps with non-uniform scale, but as you know, it does not work in general.

That being said, I'm not sure @mrdoob would support adding a per-instance normal-matrix anyway -- unless user demand requires it.

So, instead, I'd like to implement the following change, as you suggested. It is still limiting, but I think it is OK for now.

#ifdef USE_INSTANCING

// this is in lieu of a per-instance normal-matrix

// shear transforms in the instance matrix are not supported

mat3 m = mat3( instanceMatrix );

transformedNormal /= vec3( dot( m[ 0 ], m[ 0 ] ), dot( m[ 1 ], m[ 1 ] ), dot( m[ 2 ], m[ 2 ] ) );

transformedNormal = m * transformedNormal;

#endif

Does that seem reasonable to you?

Does that seem reasonable to you?

Yeah, I think this is a reasonable compromise. I would expect in general per-instance transforms to be decomposable into TRS; notably, glTF extension that is being discussed for standardization right now (https://github.com/KhronosGroup/glTF/pull/1691) uses decomposed transforms as well.

Adding support for position, quaternion and scale is certainly a possibility. I just didn't know if it was really needed at the time.

We also had this glsl code:

vec3 applyTRS( vec3 position, vec3 translation, vec4 quaternion, vec3 scale ) {

position *= scale;

position += 2.0 * cross( quaternion.xyz, cross( quaternion.xyz, position ) + quaternion.w * position );

return position + translation;

}

But I was not sure about the performance implications, so ended up starting with a matrix per instance and wait for more use cases.

For the current InstancedMesh API, I think the fix proposed in this thread is reasonable.

Most helpful comment

FWIW normal matrix isn't the only way to transform normals, assuming the object matrix uses rotation and (non-uniform) scale there's an alternative formulation.

I'm going to use column vectors below, so the vertex transform is

T*R*S*vwith a decomposed matrix. The canonical formulation for normal matrix suggests usingNM = inverse(transpose(R*S)).inverse(transpose(R*S)) = inverse(transpose(S) * transpose(R)) = inverse(transpose(R)) * inverse(transpose(S)), where R is a rotation matrix and S is a diagonal matrix with scale values for each axis.inverse(transpose(R)) = R, andtranspose(S) = S, so the above is equal toR * inverse(S), which is equal toR * S * inverse(S) * inverse(S).Thus it's sufficient to pre-transform the object-space normal using

inverse(S)^2- since S is a diagonal, if you know the scale values this just involves dividing the normal by scale^2.You can recover the scale values from the combined R*S matrix by measuring the length of basis vectors.

This shader code illustrates the construction:

The cost of this correction is three dot products and a vector division, which seems reasonable. If both instance matrix and object matrix can carry non-uniform scale then I think you will need to run this code twice.

Something like this could be used as a generic normal transform function (assuming a normalization step is ran after this):

edit The above assumes that the transform matrix can be decomposed into R*S, which isn't true of an arbitrary sequence of rotation-scale transforms, but is probably true for instance matrix transform - so I'm assuming this can be combined with using normalMatrix for handling the general scene graph transform. So this might be useful not as a replacement for existing normalMatrix, but purely as a way to correct instanceMatrix transformation in the shader.