Hello,

I installed Kong via Homebrew. When I call [GET] localhost:8000, everything looks good:

`{"message": "no Route matched with those values"}`

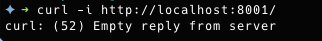

But when I call the Kong Admin API with [GET] localhost:8001, 0.0.0.0:8001, or 127.0.0.1:8001, Kong does not respond.
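Here is a minimal Python sketch for checking both listeners from the same machine. It assumes the default `proxy_listen` (8000) and `admin_listen` (8001) ports shown in the startup log below, and the helper name `probe` is hypothetical. An HTTP error status still proves that a worker answered; the proxy's 404 above is the expected reply when no Route matches. A timeout or a reset connection is what a crashing worker looks like from the client side.

```python
import http.client
import json
import urllib.error
import urllib.request


def _parse(raw: bytes):
    """Decode a JSON body, or return None if it is not JSON."""
    try:
        return json.loads(raw)
    except ValueError:
        return None


def probe(url: str, timeout: float = 3.0):
    """GET `url`; return (status, parsed_body) or ("error", reason)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, _parse(resp.read())
    except urllib.error.HTTPError as err:
        # A 4xx/5xx status still means a worker handled the request,
        # e.g. the proxy's 404 "no Route matched with those values".
        return err.code, _parse(err.read())
    except (OSError, http.client.HTTPException) as err:
        # Refused, timed-out, or reset connections end up here
        # (URLError is itself a subclass of OSError).
        return "error", str(err)
```

On a healthy node, `probe("http://127.0.0.1:8001/")` should return status 200 with the node information that the Admin API serves at `/`.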

Additional Details & Logs

- Kong version: 1.1.0

- Kong debug-level startup logs (`$ kong start --vv`):

2019/04/04 10:04:08 [verbose] Kong: 1.1.0

2019/04/04 10:04:08 [debug] ngx_lua: 10013

2019/04/04 10:04:08 [debug] nginx: 1013006

2019/04/04 10:04:08 [debug] Lua: LuaJIT 2.1.0-beta3

2019/04/04 10:04:08 [verbose] reading config file at /etc/kong/kong.conf

2019/04/04 10:04:08 [debug] reading environment variables

2019/04/04 10:04:08 [debug] admin_access_log = "logs/admin_access.log"

2019/04/04 10:04:08 [debug] admin_error_log = "logs/error.log"

2019/04/04 10:04:08 [debug] admin_listen = {"0.0.0.0:8001"}

2019/04/04 10:04:08 [debug] anonymous_reports = true

2019/04/04 10:04:08 [debug] cassandra_consistency = "ONE"

2019/04/04 10:04:08 [debug] cassandra_contact_points = {"127.0.0.1"}

2019/04/04 10:04:08 [debug] cassandra_data_centers = {"dc1:2","dc2:3"}

2019/04/04 10:04:08 [debug] cassandra_keyspace = "kong"

2019/04/04 10:04:08 [debug] cassandra_lb_policy = "RequestRoundRobin"

2019/04/04 10:04:08 [debug] cassandra_port = 9042

2019/04/04 10:04:08 [debug] cassandra_repl_factor = 1

2019/04/04 10:04:08 [debug] cassandra_repl_strategy = "SimpleStrategy"

2019/04/04 10:04:08 [debug] cassandra_schema_consensus_timeout = 10000

2019/04/04 10:04:08 [debug] cassandra_ssl = false

2019/04/04 10:04:08 [debug] cassandra_ssl_verify = false

2019/04/04 10:04:08 [debug] cassandra_timeout = 5000

2019/04/04 10:04:08 [debug] cassandra_username = "kong"

2019/04/04 10:04:08 [debug] client_body_buffer_size = "8k"

2019/04/04 10:04:08 [debug] client_max_body_size = "0"

2019/04/04 10:04:08 [debug] client_ssl = false

2019/04/04 10:04:08 [debug] database = "postgres"

2019/04/04 10:04:08 [debug] db_cache_ttl = 0

2019/04/04 10:04:08 [debug] db_resurrect_ttl = 30

2019/04/04 10:04:08 [debug] db_update_frequency = 5

2019/04/04 10:04:08 [debug] db_update_propagation = 0

2019/04/04 10:04:08 [debug] dns_error_ttl = 1

2019/04/04 10:04:08 [debug] dns_hostsfile = "/etc/hosts"

2019/04/04 10:04:08 [debug] dns_no_sync = false

2019/04/04 10:04:08 [debug] dns_not_found_ttl = 30

2019/04/04 10:04:08 [debug] dns_order = {"LAST","SRV","A","CNAME"}

2019/04/04 10:04:08 [debug] dns_resolver = {}

2019/04/04 10:04:08 [debug] dns_stale_ttl = 4

2019/04/04 10:04:08 [debug] error_default_type = "text/plain"

2019/04/04 10:04:08 [debug] headers = {"server_tokens","latency_tokens"}

2019/04/04 10:04:08 [debug] log_level = "notice"

2019/04/04 10:04:08 [debug] lua_package_cpath = ""

2019/04/04 10:04:08 [debug] lua_package_path = "./?.lua;./?/init.lua;"

2019/04/04 10:04:08 [debug] lua_socket_pool_size = 30

2019/04/04 10:04:08 [debug] lua_ssl_verify_depth = 1

2019/04/04 10:04:08 [debug] mem_cache_size = "128m"

2019/04/04 10:04:08 [debug] nginx_admin_directives = {}

2019/04/04 10:04:08 [debug] nginx_daemon = "on"

2019/04/04 10:04:08 [debug] nginx_http_directives = {}

2019/04/04 10:04:08 [debug] nginx_optimizations = true

2019/04/04 10:04:08 [debug] nginx_proxy_directives = {}

2019/04/04 10:04:08 [debug] nginx_sproxy_directives = {}

2019/04/04 10:04:08 [debug] nginx_stream_directives = {}

2019/04/04 10:04:08 [debug] nginx_user = "nobody nobody"

2019/04/04 10:04:08 [debug] nginx_worker_processes = "auto"

2019/04/04 10:04:08 [debug] origins = {}

2019/04/04 10:04:08 [debug] pg_database = "kong_gateway"

2019/04/04 10:04:08 [debug] pg_host = "127.0.0.1"

2019/04/04 10:04:08 [debug] pg_port = 5432

2019/04/04 10:04:08 [debug] pg_ssl = false

2019/04/04 10:04:08 [debug] pg_ssl_verify = false

2019/04/04 10:04:08 [debug] pg_timeout = 5000

2019/04/04 10:04:08 [debug] pg_user = "kong"

2019/04/04 10:04:08 [debug] plugins = {"bundled"}

2019/04/04 10:04:08 [debug] prefix = "/usr/local/opt/kong/"

2019/04/04 10:04:08 [debug] proxy_access_log = "logs/access.log"

2019/04/04 10:04:08 [debug] proxy_error_log = "logs/error.log"

2019/04/04 10:04:08 [debug] proxy_listen = {"0.0.0.0:8000","0.0.0.0:8443 ssl"}

2019/04/04 10:04:08 [debug] real_ip_header = "X-Real-IP"

2019/04/04 10:04:08 [debug] real_ip_recursive = "off"

2019/04/04 10:04:08 [debug] ssl_cipher_suite = "modern"

2019/04/04 10:04:08 [debug] ssl_ciphers = "ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256"

2019/04/04 10:04:08 [debug] stream_listen = {"off"}

2019/04/04 10:04:08 [debug] trusted_ips = {}

2019/04/04 10:04:08 [debug] upstream_keepalive = 60

2019/04/04 10:04:08 [verbose] prefix in use: /usr/local/opt/kong

2019/04/04 10:04:08 [debug] loading subsystems migrations...

2019/04/04 10:04:08 [verbose] retrieving database schema state...

2019/04/04 10:04:08 [verbose] schema state retrieved

2019/04/04 10:04:08 [verbose] preparing nginx prefix directory at /usr/local/opt/kong

2019/04/04 10:04:08 [verbose] SSL enabled, no custom certificate set: using default certificate

2019/04/04 10:04:08 [verbose] default SSL certificate found at /usr/local/opt/kong/ssl/kong-default.crt

2019/04/04 10:04:08 [debug] searching for OpenResty 'nginx' executable

2019/04/04 10:04:08 [debug] /usr/local/openresty/nginx/sbin/nginx -v: 'sh: /usr/local/openresty/nginx/sbin/nginx: No such file or directory'

2019/04/04 10:04:08 [debug] OpenResty 'nginx' executable not found at /usr/local/openresty/nginx/sbin/nginx

2019/04/04 10:04:08 [debug] /opt/openresty/nginx/sbin/nginx -v: 'sh: /opt/openresty/nginx/sbin/nginx: No such file or directory'

2019/04/04 10:04:08 [debug] OpenResty 'nginx' executable not found at /opt/openresty/nginx/sbin/nginx

2019/04/04 10:04:08 [debug] nginx -v: 'nginx version: openresty/1.13.6.2'

2019/04/04 10:04:08 [debug] found OpenResty 'nginx' executable at nginx

2019/04/04 10:04:08 [debug] testing nginx configuration: KONG_NGINX_CONF_CHECK=true nginx -t -p /usr/local/opt/kong -c nginx.conf

2019/04/04 10:04:08 [debug] searching for OpenResty 'nginx' executable

2019/04/04 10:04:08 [debug] /usr/local/openresty/nginx/sbin/nginx -v: 'sh: /usr/local/openresty/nginx/sbin/nginx: No such file or directory'

2019/04/04 10:04:08 [debug] OpenResty 'nginx' executable not found at /usr/local/openresty/nginx/sbin/nginx

2019/04/04 10:04:09 [debug] /opt/openresty/nginx/sbin/nginx -v: 'sh: /opt/openresty/nginx/sbin/nginx: No such file or directory'

2019/04/04 10:04:09 [debug] OpenResty 'nginx' executable not found at /opt/openresty/nginx/sbin/nginx

2019/04/04 10:04:09 [debug] nginx -v: 'nginx version: openresty/1.13.6.2'

2019/04/04 10:04:09 [debug] found OpenResty 'nginx' executable at nginx

2019/04/04 10:04:09 [debug] sending signal to pid at: /usr/local/opt/kong/pids/nginx.pid

2019/04/04 10:04:09 [debug] kill -0 `cat /usr/local/opt/kong/pids/nginx.pid` >/dev/null 2>&1

2019/04/04 10:04:09 [debug] starting nginx: nginx -p /usr/local/opt/kong -c nginx.conf

2019/04/04 10:04:09 [debug] nginx started

2019/04/04 10:04:09 [info] Kong started

- Kong error logs (`tail -f kong/logs/error.log`):

2019/04/04 10:10:08 [notice] 99933#0: signal 20 (SIGCHLD) received from 99934

2019/04/04 10:10:08 [alert] 99933#0: worker process 99934 exited on signal 11

2019/04/04 10:10:08 [notice] 99933#0: start worker process 1577

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received from 99936

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received from 99940

2019/04/04 10:10:08 [notice] 99933#0: signal 20 (SIGCHLD) received from 99938

2019/04/04 10:10:08 [alert] 99933#0: worker process 99938 exited on signal 11

2019/04/04 10:10:08 [notice] 99933#0: start worker process 1581

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received from 99937

2019/04/04 10:10:08 [notice] 99933#0: signal 20 (SIGCHLD) received from 99937

2019/04/04 10:10:08 [alert] 99933#0: worker process 99937 exited on signal 11

2019/04/04 10:10:08 [notice] 99933#0: start worker process 1583

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received from 1581

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received from 1581

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:08 [notice] 99933#0: signal 20 (SIGCHLD) received from 99940

2019/04/04 10:10:08 [alert] 99933#0: worker process 99940 exited on signal 11

2019/04/04 10:10:08 [notice] 99933#0: start worker process 1585

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received from 99941

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received from 1583

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received from 1583

2019/04/04 10:10:08 [notice] 99933#0: signal 23 (SIGIO) received from 1583

2019/04/04 10:10:09 [notice] 99933#0: signal 20 (SIGCHLD) received from 1585

2019/04/04 10:10:09 [alert] 99933#0: worker process 1585 exited on signal 11

2019/04/04 10:10:09 [notice] 99933#0: start worker process 1589

2019/04/04 10:10:09 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:09 [notice] 99933#0: signal 23 (SIGIO) received from 1581

2019/04/04 10:10:09 [notice] 99933#0: signal 23 (SIGIO) received from 1581

2019/04/04 10:10:14 [notice] 99933#0: signal 20 (SIGCHLD) received from 1583

2019/04/04 10:10:14 [alert] 99933#0: worker process 1583 exited on signal 11

2019/04/04 10:10:14 [notice] 99933#0: start worker process 1608

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received from 99941

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received from 1589

2019/04/04 10:10:14 [notice] 99933#0: signal 20 (SIGCHLD) received from 1589

2019/04/04 10:10:14 [alert] 99933#0: worker process 1589 exited on signal 11

2019/04/04 10:10:14 [notice] 99933#0: start worker process 1610

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received from 99941

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received from 1608

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received from 1608

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received from 1608

2019/04/04 10:10:14 [notice] 99933#0: signal 20 (SIGCHLD) received from 1581

2019/04/04 10:10:14 [alert] 99933#0: worker process 1581 exited on signal 11

2019/04/04 10:10:14 [notice] 99933#0: start worker process 1612

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received from 1608

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received from 1610

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received from 1610

2019/04/04 10:10:14 [notice] 99933#0: signal 23 (SIGIO) received from 1610

- Kong configuration (output of `GET` on the Admin API): not available, because the Admin API does not respond

- Operating system: macOS Mojave 10.14.3
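The error log shows the nginx master (PID 99933) repeatedly respawning workers that "exited on signal 11", i.e. SIGSEGV. This is consistent with the Admin API never answering. The following is a small Python sketch for tallying such exits from a log like `kong/logs/error.log`. It is not part of Kong, and `crashed_workers` is a hypothetical helper.

```python
import re
import signal
from collections import Counter

# nginx master-process alert, e.g.
# 2019/04/04 10:10:08 [alert] 99933#0: worker process 99934 exited on signal 11
WORKER_EXIT = re.compile(r"worker process (\d+) exited on signal (\d+)")


def crashed_workers(lines):
    """Return ([(pid, signal_name), ...], Counter of signal names)."""
    crashes = []
    for line in lines:
        match = WORKER_EXIT.search(line)
        if not match:
            continue
        pid, signum = int(match.group(1)), int(match.group(2))
        try:
            name = signal.Signals(signum).name  # 11 -> "SIGSEGV"
        except ValueError:
            name = f"signal {signum}"
        crashes.append((pid, name))
    return crashes, Counter(name for _, name in crashes)
```

To use it, pass an open file handle on the error log to `crashed_workers` and print the returned counter. The counter shows how many workers died with each signal.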


I can reproduce the problem by running:

➜ kong git:(next) bin/busted -v spec/03-plugins/01-tcp-log/01-tcp-log_spec.lua

0 successes / 0 failures / 2 errors / 0 pending : 2.299433 seconds

Error → spec/03-plugins/01-tcp-log/01-tcp-log_spec.lua @ 12

Plugin: tcp-log (log) [#postgres] lazy_setup

spec/03-plugins/01-tcp-log/01-tcp-log_spec.lua:46: sh: line 1: 33572 Segmentation fault: 11 nginx -p /Users/thomaslutz/Documents/kong/servroot -c nginx.conf > /tmp/lua_B0J0te 2> /tmp/lua_eOheu8

Error: ./kong/cmd/start.lua:50:

Run with --v (verbose) or --vv (debug) for more details

stack traceback:

spec/03-plugins/01-tcp-log/01-tcp-log_spec.lua:46: in function <spec/03-plugins/01-tcp-log/01-tcp-log_spec.lua:12>

Error → spec/03-plugins/01-tcp-log/01-tcp-log_spec.lua @ 12

Plugin: tcp-log (log) [#cassandra] lazy_setup

spec/03-plugins/01-tcp-log/01-tcp-log_spec.lua:46: 2019/04/07 13:11:08 [warn] You are using Cassandra but your 'db_update_propagation' setting is set to '0' (default). Due to the distributed nature of Cassandra, you should increase this value.

sh: line 1: 33626 Segmentation fault: 11 nginx -p /Users/thomaslutz/Documents/kong/servroot -c nginx.conf > /tmp/lua_zpJGGk 2> /tmp/lua_cPwADl

Error: ./kong/cmd/start.lua:50: nginx: [warn] [lua] log.lua:68: log(): You are using Cassandra but your 'db_update_propagation' setting is set to '0' (default). Due to the distributed nature of Cassandra, you should increase this value.

Run with --v (verbose) or --vv (debug) for more details

stack traceback:

spec/03-plugins/01-tcp-log/01-tcp-log_spec.lua:46: in function <spec/03-plugins/01-tcp-log/01-tcp-log_spec.lua:12>

Further investigation pointed to a problem with the `dns_hostsfile` setting in the test configuration:

➜ kong git:(next) grep dns_hostsfile spec/kong_tests.conf

dns_hostsfile = spec/fixtures/hosts

OpenResty does not find this file at startup, because its path is not prefixed the way other variables are (as seen in the autogenerated `.kong_env`).

Does anybody with more Kong experience have pointers on how this should be fixed? I'm not sure why this only seems to be a problem on macOS.

➜ kong git:(next) KONG_DATABASE='postgres' KONG_PLUGINS='bundled,dummy,cache,rewriter,error-handler-log,error-generator,error-generator-last,short-circuit,short-circuit-last' KONG_LUA_PACKAGE_PATH='./spec/fixtures/custom_plugins/?.lua;./spec/fixtures/custom_plugins/?.lua' KONG_NGINX_CONF='spec/fixtures/custom_nginx.template' bin/kong start --conf spec/kong_tests.conf --nginx-conf spec/fixtures/custom_nginx.template -vv | grep dns_hostsfile

sh: line 1: 33978 Segmentation fault: 11 nginx -p /Users/thomaslutz/Documents/kong/servroot -c nginx.conf > /tmp/lua_gZcCpx 2> /tmp/lua_5rJe7e

Error:

./kong/cmd/start.lua:61: ./kong/cmd/start.lua:50:

stack traceback:

[C]: in function 'error'

./kong/cmd/start.lua:61: in function 'cmd_exec'

./kong/cmd/init.lua:88: in function <./kong/cmd/init.lua:88>

[C]: in function 'xpcall'

./kong/cmd/init.lua:88: in function <./kong/cmd/init.lua:45>

bin/kong:7: in function 'file_gen'

init_worker_by_lua:51: in function <init_worker_by_lua:49>

[C]: in function 'xpcall'

init_worker_by_lua:58: in function <init_worker_by_lua:56>

2019/04/07 13:18:02 [debug] dns_hostsfile = "spec/fixtures/hosts"

This leaves the servroot folder in place for further investigation.

I'm not sure why I thought `dns_hostsfile` was the problem; according to the debug logs it is handled fine. When I run `nginx -p /Users/thomaslutz/Documents/kong/servroot -c nginx.conf` manually, I get the segmentation fault, and the error.log in servroot ends with:

2019/04/08 16:21:35 [debug] 41651#0: [lua] plugins.lua:209: load_plugin(): Loading plugin: correlation-id

2019/04/08 16:21:35 [debug] 41651#0: [lua] plugins.lua:209: load_plugin(): Loading plugin: pre-function

2019/04/08 16:21:35 [debug] 41651#0: [lua] plugins.lua:209: load_plugin(): Loading plugin: cors
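To reproduce the crash outside busted, the same nginx command can be run from a script that distinguishes a normal exit from death by a signal. This is a minimal Python sketch. It relies on `subprocess` reporting a negative return code `-N` when the child was killed by signal N, which holds on POSIX only. The helper name `run_and_classify` is hypothetical.

```python
import signal
import subprocess


def run_and_classify(cmd):
    """Run `cmd`; return (how it ended, captured stderr)."""
    proc = subprocess.run(cmd, capture_output=True, text=True)
    if proc.returncode < 0:
        # On POSIX, -N means the child was terminated by signal N
        # (-11 is the SIGSEGV the shell reports as "Segmentation fault: 11").
        return f"killed by {signal.Signals(-proc.returncode).name}", proc.stderr
    return f"exited with status {proc.returncode}", proc.stderr
```

With the servroot from this report, the call is `run_and_classify(["nginx", "-p", "/Users/thomaslutz/Documents/kong/servroot", "-c", "nginx.conf"])`. It would be expected to report `killed by SIGSEGV` while the bug is present.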

Debugging with lldb:

➜ kong git:(next) lldb nginx

(lldb) target create "nginx"

Traceback (most recent call last):

File "<input>", line 1, in <module>

File "/usr/local/Cellar/python@2/2.7.16/Frameworks/Python.framework/Versions/2.7/lib/python2.7/copy.py", line 52, in <module>

import weakref

File "/usr/local/Cellar/python@2/2.7.16/Frameworks/Python.framework/Versions/2.7/lib/python2.7/weakref.py", line 14, in <module>

from _weakref import (

ImportError: cannot import name _remove_dead_weakref

Current executable set to 'nginx' (x86_64).

(lldb) r -p /Users/thomaslutz/Documents/kong/servroot -c nginx.conf

Process 45960 launched: '/usr/local/bin/nginx' (x86_64)

Process 45960 stopped

* thread #1, queue = 'com.apple.main-thread', stop reason = EXC_BAD_ACCESS (code=EXC_I386_GPFLT)

frame #0: 0x000000000e5d8e11 libcrypto.1.0.0.dylib`doall_util_fn + 65

libcrypto.1.0.0.dylib`doall_util_fn:

-> 0xe5d8e11 <+65>: movq (%r15), %rdi

0xe5d8e14 <+68>: movq 0x8(%r15), %r15

0xe5d8e18 <+72>: testl %ebx, %ebx

0xe5d8e1a <+74>: je 0xe5d8e24 ; <+84>

Target 0: (nginx) stopped.

(lldb) bt

* thread #1, queue = 'com.apple.main-thread', stop reason = EXC_BAD_ACCESS (code=EXC_I386_GPFLT)

* frame #0: 0x000000000e5d8e11 libcrypto.1.0.0.dylib`doall_util_fn + 65

frame #1: 0x000000000e512220 libssl.1.0.0.dylib`SSL_CTX_flush_sessions + 100

frame #2: 0x000000000e50bc40 libssl.1.0.0.dylib`SSL_CTX_free + 94

frame #3: 0x000000000e4c57d7 _openssl.so`sx__gc + 39

frame #4: 0x0000000000012a7a libluajit-5.1.2.dylib`lj_BC_FUNCC + 52

frame #5: 0x0000000000014d32 libluajit-5.1.2.dylib`gc_call_finalizer + 99

frame #6: 0x0000000000015218 libluajit-5.1.2.dylib`gc_onestep + 811

frame #7: 0x0000000000014e88 libluajit-5.1.2.dylib`lj_gc_step + 67

frame #8: 0x0000000000025b24 libluajit-5.1.2.dylib`expr_table + 1286

frame #9: 0x0000000000024c40 libluajit-5.1.2.dylib`expr_binop + 284

frame #10: 0x000000000002580c libluajit-5.1.2.dylib`expr_table + 494

frame #11: 0x0000000000024c40 libluajit-5.1.2.dylib`expr_binop + 284

frame #12: 0x0000000000026c1c libluajit-5.1.2.dylib`expr_list + 17

frame #13: 0x000000000002396a libluajit-5.1.2.dylib`parse_chunk + 2295

frame #14: 0x0000000000022eed libluajit-5.1.2.dylib`lj_parse + 202

frame #15: 0x000000000002870c libluajit-5.1.2.dylib`cpparser + 84

frame #16: 0x0000000000012de9 libluajit-5.1.2.dylib`lj_vm_cpcall + 74

frame #17: 0x0000000000028686 libluajit-5.1.2.dylib`lua_loadx + 80

frame #18: 0x00000000000287f2 libluajit-5.1.2.dylib`luaL_loadfilex + 132

frame #19: 0x000000000005ad1e libluajit-5.1.2.dylib`lj_cf_package_loader_lua + 56

frame #20: 0x0000000000012a7a libluajit-5.1.2.dylib`lj_BC_FUNCC + 52

frame #21: 0x000000000005b23c libluajit-5.1.2.dylib`lj_cf_package_require + 369

frame #22: 0x0000000000012a7a libluajit-5.1.2.dylib`lj_BC_FUNCC + 52

frame #23: 0x000000000005b28c libluajit-5.1.2.dylib`lj_cf_package_require + 449

frame #24: 0x0000000000012a7a libluajit-5.1.2.dylib`lj_BC_FUNCC + 52

frame #25: 0x0000000000021121 libluajit-5.1.2.dylib`lua_pcall + 94

frame #26: 0x00000001000b3328 nginx`ngx_http_lua_do_call + 90

frame #27: 0x00000001000c39e3 nginx`ngx_http_lua_init_by_inline + 62

frame #28: 0x00000001000c0ce7 nginx`ngx_http_lua_shared_memory_init + 157

frame #29: 0x0000000100012b36 nginx`ngx_init_cycle + 3246

frame #30: 0x0000000100002fe3 nginx`main + 2215

frame #31: 0x00007fff7284f3d5 libdyld.dylib`start + 1

frame #32: 0x00007fff7284f3d5 libdyld.dylib`start + 1
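Read bottom-up, the backtrace shows where the crash happens. While `init_by_lua` code is being loaded via `require`, the LuaJIT parser triggers a GC step. The GC then runs a finalizer (`sx__gc` in `_openssl.so`), which calls `SSL_CTX_free`, and libcrypto faults in `doall_util_fn`.

The sketch below condenses an lldb `bt` dump into an outermost-first list of module/function names, collapsing consecutive duplicate frames. `call_chain` is a hypothetical Python helper, not an lldb feature.

```python
import re

# One lldb frame line, e.g.:
# frame #2: 0x000000000e50bc40 libssl.1.0.0.dylib`SSL_CTX_free + 94
FRAME = re.compile(r"frame #(\d+): 0x[0-9a-fA-F]+ ([^`\s]+)`(\S+)")


def call_chain(bt_text: str):
    """Return 'module`function' entries, outermost frame first, collapsing
    consecutive duplicates such as the two libdyld.dylib`start frames."""
    frames = []
    for line in bt_text.splitlines():
        match = FRAME.search(line)
        if match:
            frames.append((int(match.group(1)), f"{match.group(2)}`{match.group(3)}"))
    chain = []
    # Highest frame number is the outermost caller.
    for _, name in sorted(frames, reverse=True):
        if not chain or chain[-1] != name:
            chain.append(name)
    return chain
```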

Hi, thanks for reporting this. I have started investigating.

I can reproduce @inarli's issue, and I am trying to isolate the problem.

@kikito Any luck identifying the problem yet? I'm not sure how to proceed with my lldb findings, although I have found some loosely related nginx/OpenResty segfault reports where the SSL context was at fault.

Hello guys,

We were able to reproduce the problem and trace it to the latest PCRE (8.43) that Homebrew ships. The PCRE 8.43 JIT compiler has a compatibility issue with macOS Mojave that causes normal requests to coredump.

This problem is not specific to Kong itself: any request to NGINX can coredump under this configuration. We are still working out a proper fix.
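This hedged Python sketch checks whether a machine has the PCRE release identified in this thread as problematic. It assumes the `pcre-config` script installed by the PCRE 8.x formula is on `PATH`. It only inspects the installed library, not which PCRE a given OpenResty build is actually linked against. `AFFECTED` and the helper names are hypothetical.

```python
import shutil
import subprocess

# Per this thread: Homebrew's PCRE 8.43 JIT crashes NGINX on macOS Mojave.
AFFECTED = {(8, 43)}


def parse_version(text: str):
    """Turn `pcre-config --version` output such as '8.43' into (8, 43)."""
    major, minor = text.strip().split(".")[:2]
    return int(major), int(minor)


def installed_pcre_version():
    """Return the installed PCRE version, or None if pcre-config is missing."""
    exe = shutil.which("pcre-config")
    if exe is None:
        return None
    out = subprocess.run([exe, "--version"], capture_output=True, text=True, check=True)
    return parse_version(out.stdout)


def is_affected(version) -> bool:
    """True if `version` is one of the releases flagged above."""
    return version in AFFECTED
```

Once the OpenResty patch mentioned later in the thread is installed, PCRE 8.43 itself is no longer a problem. This check therefore only matters for unpatched builds.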

In the meantime, you can work around the problem by manually downgrading the formula to PCRE 8.42 and reinstalling PCRE:

$ brew edit pcre

# replace content with https://github.com/Homebrew/homebrew-core/blob/839adfb30f52a6e93d30d4fe2a8eb5f53f20dc4e/Formula/pcre.rb and save file

$ brew remove pcre

$ brew reinstall pcre -s

The problem should go away after this.

Let me know if it works for you!

@dndx Will try that! Have you created an issue at https://github.com/Homebrew/homebrew-core/issues yet?

I can confirm that Kong installed via Homebrew works fine after downgrading PCRE to 8.42.

Confirmed as well: downgrading to PCRE 8.42 works. The Admin interface responds correctly.

Hello guys,

We have provided a long-term fix in https://github.com/Kong/openresty-patches/pull/39, so this issue should now be fixed. You can revert the changes to PCRE's formula and reinstall Kong together with PCRE; the problem should no longer occur!

Appreciate the feedback as well!

@dndx It works if PCRE, OpenResty, and Kong are reinstalled. The first two are the important ones, since the patches were for OpenResty. Thanks! 👍
