Kong: Kong 2.0.5 -> 2.1.4 Migrations C* issues still possible.

Summary

Thought we were out of the woods here, but I just tested a 2.0.5 to 2.1.4 DB upgrade against a clone of our prod keyspace (decided dry runs of these migrations are best done with real keyspace data too) and things went sideways again. I captured logs of each migrations execution, from the up through having to run finish multiple times to reach completion, detailed below:

First the Kong migrations up:

/ $ kong migrations up --db-timeout 120 --vv

2020/09/28 17:17:57 [verbose] Kong: 2.1.4

2020/09/28 17:17:57 [debug] ngx_lua: 10015

2020/09/28 17:17:57 [debug] nginx: 1015008

2020/09/28 17:17:57 [debug] Lua: LuaJIT 2.1.0-beta3

2020/09/28 17:17:57 [verbose] no config file found at /etc/kong/kong.conf

2020/09/28 17:17:57 [verbose] no config file found at /etc/kong.conf

2020/09/28 17:17:57 [verbose] no config file, skip loading

2020/09/28 17:17:57 [debug] reading environment variables

2020/09/28 17:17:57 [debug] KONG_PLUGINS ENV found with "kong-siteminder-auth,kong-kafka-log,stargate-waf-error-log,mtls,stargate-oidc-token-revoke,kong-tx-debugger,kong-plugin-oauth,zipkin,kong-error-log,kong-oidc-implicit-token,kong-response-size-limiting,request-transformer,kong-service-virtualization,kong-cluster-drain,kong-upstream-jwt,kong-splunk-log,kong-spec-expose,kong-path-based-routing,kong-oidc-multi-idp,correlation-id,oauth2,statsd,jwt,rate-limiting,acl,request-size-limiting,request-termination,cors"

2020/09/28 17:17:57 [debug] KONG_ADMIN_LISTEN ENV found with "0.0.0.0:8001 deferred reuseport"

2020/09/28 17:17:57 [debug] KONG_PROXY_ACCESS_LOG ENV found with "off"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_USERNAME ENV found with "kongdba"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_PASSWORD ENV found with "******"

2020/09/28 17:17:57 [debug] KONG_PROXY_LISTEN ENV found with "0.0.0.0:8000, 0.0.0.0:8443 ssl http2 deferred reuseport"

2020/09/28 17:17:57 [debug] KONG_DNS_NO_SYNC ENV found with "off"

2020/09/28 17:17:57 [debug] KONG_DB_UPDATE_PROPAGATION ENV found with "5"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_PORT ENV found with "9042"

2020/09/28 17:17:57 [debug] KONG_HEADERS ENV found with "latency_tokens"

2020/09/28 17:17:57 [debug] KONG_DNS_STALE_TTL ENV found with "4"

2020/09/28 17:17:57 [debug] KONG_WAF_DEBUG_LEVEL ENV found with "0"

2020/09/28 17:17:57 [debug] KONG_WAF_PARANOIA_LEVEL ENV found with "1"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_REFRESH_FREQUENCY ENV found with "0"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_CONTACT_POINTS ENV found with "server05503,server05505,server05507,server05502,server05504,server05506"

2020/09/28 17:17:57 [debug] KONG_DB_CACHE_WARMUP_ENTITIES ENV found with "services,plugins,consumers"

2020/09/28 17:17:57 [debug] KONG_NGINX_HTTP_SSL_PROTOCOLS ENV found with "TLSv1.2 TLSv1.3"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_LOCAL_DATACENTER ENV found with "DC1"

2020/09/28 17:17:57 [debug] KONG_UPSTREAM_KEEPALIVE_IDLE_TIMEOUT ENV found with "30"

2020/09/28 17:17:57 [debug] KONG_DB_CACHE_TTL ENV found with "0"

2020/09/28 17:17:57 [debug] KONG_PG_SSL ENV found with "off"

2020/09/28 17:17:57 [debug] KONG_WAF_REQUEST_NO_FILE_SIZE_LIMIT ENV found with "50000000"

2020/09/28 17:17:57 [debug] KONG_WAF_PCRE_MATCH_LIMIT_RECURSION ENV found with "10000"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_SCHEMA_CONSENSUS_TIMEOUT ENV found with "30000"

2020/09/28 17:17:57 [debug] KONG_LOG_LEVEL ENV found with "notice"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_TIMEOUT ENV found with "5000"

2020/09/28 17:17:57 [debug] KONG_NGINX_MAIN_WORKER_PROCESSES ENV found with "6"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_KEYSPACE ENV found with "kong_prod2"

2020/09/28 17:17:57 [debug] KONG_WAF ENV found with "off"

2020/09/28 17:17:57 [debug] KONG_ERROR_DEFAULT_TYPE ENV found with "text/plain"

2020/09/28 17:17:57 [debug] KONG_UPSTREAM_KEEPALIVE_POOL_SIZE ENV found with "400"

2020/09/28 17:17:57 [debug] KONG_WORKER_CONSISTENCY ENV found with "eventual"

2020/09/28 17:17:57 [debug] KONG_CLIENT_SSL ENV found with "off"

2020/09/28 17:17:57 [debug] KONG_TRUSTED_IPS ENV found with "0.0.0.0/0,::/0"

2020/09/28 17:17:57 [debug] KONG_SSL_CERT_KEY ENV found with "/usr/local/kong/ssl/kongprivatekey.key"

2020/09/28 17:17:57 [debug] KONG_MEM_CACHE_SIZE ENV found with "1024m"

2020/09/28 17:17:57 [debug] KONG_NGINX_PROXY_REAL_IP_HEADER ENV found with "X-Forwarded-For"

2020/09/28 17:17:57 [debug] KONG_DB_UPDATE_FREQUENCY ENV found with "5"

2020/09/28 17:17:57 [debug] KONG_DNS_ORDER ENV found with "LAST,SRV,A,CNAME"

2020/09/28 17:17:57 [debug] KONG_DNS_ERROR_TTL ENV found with "1"

2020/09/28 17:17:57 [debug] KONG_DATABASE ENV found with "cassandra"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_DATA_CENTERS ENV found with "DC1:3,DC2:3"

2020/09/28 17:17:57 [debug] KONG_WORKER_STATE_UPDATE_FREQUENCY ENV found with "5"

2020/09/28 17:17:57 [debug] KONG_LUA_SSL_VERIFY_DEPTH ENV found with "3"

2020/09/28 17:17:57 [debug] KONG_LUA_SOCKET_POOL_SIZE ENV found with "30"

2020/09/28 17:17:57 [debug] KONG_UPSTREAM_KEEPALIVE_MAX_REQUESTS ENV found with "50000"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_CONSISTENCY ENV found with "LOCAL_QUORUM"

2020/09/28 17:17:57 [debug] KONG_CLIENT_MAX_BODY_SIZE ENV found with "50m"

2020/09/28 17:17:57 [debug] KONG_ADMIN_ERROR_LOG ENV found with "/dev/stderr"

2020/09/28 17:17:57 [debug] KONG_DNS_NOT_FOUND_TTL ENV found with "30"

2020/09/28 17:17:57 [debug] KONG_PROXY_ERROR_LOG ENV found with "/dev/stderr"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_REPL_STRATEGY ENV found with "NetworkTopologyStrategy"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_SSL_VERIFY ENV found with "on"

2020/09/28 17:17:57 [debug] KONG_ADMIN_ACCESS_LOG ENV found with "off"

2020/09/28 17:17:57 [debug] KONG_DNS_HOSTSFILE ENV found with "/etc/hosts"

2020/09/28 17:17:57 [debug] KONG_WAF_REQUEST_FILE_SIZE_LIMIT ENV found with "50000000"

2020/09/28 17:17:57 [debug] KONG_SSL_CERT ENV found with "/usr/local/kong/ssl/kongcert.crt"

2020/09/28 17:17:57 [debug] KONG_NGINX_PROXY_REAL_IP_RECURSIVE ENV found with "on"

2020/09/28 17:17:57 [debug] KONG_SSL_CIPHER_SUITE ENV found with "intermediate"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_SSL ENV found with "on"

2020/09/28 17:17:57 [debug] KONG_ANONYMOUS_REPORTS ENV found with "off"

2020/09/28 17:17:57 [debug] KONG_WAF_MODE ENV found with "On"

2020/09/28 17:17:57 [debug] KONG_CLIENT_BODY_BUFFER_SIZE ENV found with "50m"

2020/09/28 17:17:57 [debug] KONG_WAF_PCRE_MATCH_LIMIT ENV found with "10000"

2020/09/28 17:17:57 [debug] KONG_LUA_SSL_TRUSTED_CERTIFICATE ENV found with "/usr/local/kong/ssl/kongcert.pem"

2020/09/28 17:17:57 [debug] KONG_CASSANDRA_LB_POLICY ENV found with "RequestDCAwareRoundRobin"

2020/09/28 17:17:57 [debug] KONG_WAF_AUDIT ENV found with "RelevantOnly"

2020/09/28 17:17:57 [debug] admin_access_log = "off"

2020/09/28 17:17:57 [debug] admin_error_log = "/dev/stderr"

2020/09/28 17:17:57 [debug] admin_listen = {"0.0.0.0:8001 deferred reuseport"}

2020/09/28 17:17:57 [debug] anonymous_reports = false

2020/09/28 17:17:57 [debug] cassandra_consistency = "LOCAL_QUORUM"

2020/09/28 17:17:57 [debug] cassandra_contact_points = {"apsrp05503","apsrp05505","apsrp05507","apsrp05502","apsrp05504","apsrp05506"}

2020/09/28 17:17:57 [debug] cassandra_data_centers = {"DC1:3","DC2:3"}

2020/09/28 17:17:57 [debug] cassandra_keyspace = "kong_prod2"

2020/09/28 17:17:57 [debug] cassandra_lb_policy = "RequestDCAwareRoundRobin"

2020/09/28 17:17:57 [debug] cassandra_local_datacenter = "DC1"

2020/09/28 17:17:57 [debug] cassandra_password = "******"

2020/09/28 17:17:57 [debug] cassandra_port = 9042

2020/09/28 17:17:57 [debug] cassandra_read_consistency = "LOCAL_QUORUM"

2020/09/28 17:17:57 [debug] cassandra_refresh_frequency = 0

2020/09/28 17:17:57 [debug] cassandra_repl_factor = 1

2020/09/28 17:17:57 [debug] cassandra_repl_strategy = "NetworkTopologyStrategy"

2020/09/28 17:17:57 [debug] cassandra_schema_consensus_timeout = 30000

2020/09/28 17:17:57 [debug] cassandra_ssl = true

2020/09/28 17:17:57 [debug] cassandra_ssl_verify = true

2020/09/28 17:17:57 [debug] cassandra_timeout = 5000

2020/09/28 17:17:57 [debug] cassandra_username = "kongdba"

2020/09/28 17:17:57 [debug] cassandra_write_consistency = "LOCAL_QUORUM"

2020/09/28 17:17:57 [debug] client_body_buffer_size = "50m"

2020/09/28 17:17:57 [debug] client_max_body_size = "50m"

2020/09/28 17:17:57 [debug] client_ssl = false

2020/09/28 17:17:57 [debug] cluster_control_plane = "127.0.0.1:8005"

2020/09/28 17:17:57 [debug] cluster_listen = {"0.0.0.0:8005"}

2020/09/28 17:17:57 [debug] cluster_mtls = "shared"

2020/09/28 17:17:57 [debug] database = "cassandra"

2020/09/28 17:17:57 [debug] db_cache_ttl = 0

2020/09/28 17:17:57 [debug] db_cache_warmup_entities = {"services","plugins","consumers"}

2020/09/28 17:17:57 [debug] db_resurrect_ttl = 30

2020/09/28 17:17:57 [debug] db_update_frequency = 5

2020/09/28 17:17:57 [debug] db_update_propagation = 5

2020/09/28 17:17:57 [debug] dns_error_ttl = 1

2020/09/28 17:17:57 [debug] dns_hostsfile = "/etc/hosts"

2020/09/28 17:17:57 [debug] dns_no_sync = false

2020/09/28 17:17:57 [debug] dns_not_found_ttl = 30

2020/09/28 17:17:57 [debug] dns_order = {"LAST","SRV","A","CNAME"}

2020/09/28 17:17:57 [debug] dns_resolver = {}

2020/09/28 17:17:57 [debug] dns_stale_ttl = 4

2020/09/28 17:17:57 [debug] error_default_type = "text/plain"

2020/09/28 17:17:57 [debug] go_plugins_dir = "off"

2020/09/28 17:17:57 [debug] go_pluginserver_exe = "/usr/local/bin/go-pluginserver"

2020/09/28 17:17:57 [debug] headers = {"latency_tokens"}

2020/09/28 17:17:57 [debug] host_ports = {}

2020/09/28 17:17:57 [debug] kic = false

2020/09/28 17:17:57 [debug] log_level = "notice"

2020/09/28 17:17:57 [debug] lua_package_cpath = ""

2020/09/28 17:17:57 [debug] lua_package_path = "./?.lua;./?/init.lua;"

2020/09/28 17:17:57 [debug] lua_socket_pool_size = 30

2020/09/28 17:17:57 [debug] lua_ssl_trusted_certificate = "/usr/local/kong/ssl/kongcert.pem"

2020/09/28 17:17:57 [debug] lua_ssl_verify_depth = 3

2020/09/28 17:17:57 [debug] mem_cache_size = "1024m"

2020/09/28 17:17:57 [debug] nginx_admin_directives = {}

2020/09/28 17:17:57 [debug] nginx_daemon = "on"

2020/09/28 17:17:57 [debug] nginx_events_directives = {{name="multi_accept",value="on"},{name="worker_connections",value="auto"}}

2020/09/28 17:17:57 [debug] nginx_events_multi_accept = "on"

2020/09/28 17:17:57 [debug] nginx_events_worker_connections = "auto"

2020/09/28 17:17:57 [debug] nginx_http_client_body_buffer_size = "50m"

2020/09/28 17:17:57 [debug] nginx_http_client_max_body_size = "50m"

2020/09/28 17:17:57 [debug] nginx_http_directives = {{name="client_max_body_size",value="50m"},{name="ssl_prefer_server_ciphers",value="off"},{name="client_body_buffer_size",value="50m"},{name="ssl_protocols",value="TLSv1.2 TLSv1.3"},{name="ssl_session_timeout",value="1d"},{name="ssl_session_tickets",value="on"}}

2020/09/28 17:17:57 [debug] nginx_http_ssl_prefer_server_ciphers = "off"

2020/09/28 17:17:57 [debug] nginx_http_ssl_protocols = "TLSv1.2 TLSv1.3"

2020/09/28 17:17:57 [debug] nginx_http_ssl_session_tickets = "on"

2020/09/28 17:17:57 [debug] nginx_http_ssl_session_timeout = "1d"

2020/09/28 17:17:57 [debug] nginx_http_status_directives = {}

2020/09/28 17:17:57 [debug] nginx_http_upstream_directives = {{name="keepalive_requests",value="100"},{name="keepalive_timeout",value="60s"},{name="keepalive",value="60"}}

2020/09/28 17:17:57 [debug] nginx_http_upstream_keepalive = "60"

2020/09/28 17:17:57 [debug] nginx_http_upstream_keepalive_requests = "100"

2020/09/28 17:17:57 [debug] nginx_http_upstream_keepalive_timeout = "60s"

2020/09/28 17:17:57 [debug] nginx_main_daemon = "on"

2020/09/28 17:17:57 [debug] nginx_main_directives = {{name="daemon",value="on"},{name="worker_rlimit_nofile",value="auto"},{name="worker_processes",value="6"}}

2020/09/28 17:17:57 [debug] nginx_main_worker_processes = "6"

2020/09/28 17:17:57 [debug] nginx_main_worker_rlimit_nofile = "auto"

2020/09/28 17:17:57 [debug] nginx_optimizations = true

2020/09/28 17:17:57 [debug] nginx_proxy_directives = {{name="real_ip_header",value="X-Forwarded-For"},{name="real_ip_recursive",value="on"}}

2020/09/28 17:17:57 [debug] nginx_proxy_real_ip_header = "X-Forwarded-For"

2020/09/28 17:17:57 [debug] nginx_proxy_real_ip_recursive = "on"

2020/09/28 17:17:57 [debug] nginx_sproxy_directives = {}

2020/09/28 17:17:57 [debug] nginx_status_directives = {}

2020/09/28 17:17:57 [debug] nginx_stream_directives = {{name="ssl_session_tickets",value="on"},{name="ssl_protocols",value="TLSv1.2 TLSv1.3"},{name="ssl_session_timeout",value="1d"},{name="ssl_prefer_server_ciphers",value="off"}}

2020/09/28 17:17:57 [debug] nginx_stream_ssl_prefer_server_ciphers = "off"

2020/09/28 17:17:57 [debug] nginx_stream_ssl_protocols = "TLSv1.2 TLSv1.3"

2020/09/28 17:17:57 [debug] nginx_stream_ssl_session_tickets = "on"

2020/09/28 17:17:57 [debug] nginx_stream_ssl_session_timeout = "1d"

2020/09/28 17:17:57 [debug] nginx_supstream_directives = {}

2020/09/28 17:17:57 [debug] nginx_upstream_directives = {{name="keepalive_requests",value="100"},{name="keepalive_timeout",value="60s"},{name="keepalive",value="60"}}

2020/09/28 17:17:57 [debug] nginx_upstream_keepalive = "60"

2020/09/28 17:17:57 [debug] nginx_upstream_keepalive_requests = "100"

2020/09/28 17:17:57 [debug] nginx_upstream_keepalive_timeout = "60s"

2020/09/28 17:17:57 [debug] nginx_worker_processes = "auto"

2020/09/28 17:17:57 [debug] pg_database = "kong"

2020/09/28 17:17:57 [debug] pg_host = "127.0.0.1"

2020/09/28 17:17:57 [debug] pg_max_concurrent_queries = 0

2020/09/28 17:17:57 [debug] pg_port = 5432

2020/09/28 17:17:57 [debug] pg_ro_ssl = false

2020/09/28 17:17:57 [debug] pg_ro_ssl_verify = false

2020/09/28 17:17:57 [debug] pg_semaphore_timeout = 60000

2020/09/28 17:17:57 [debug] pg_ssl = false

2020/09/28 17:17:57 [debug] pg_ssl_verify = false

2020/09/28 17:17:57 [debug] pg_timeout = 5000

2020/09/28 17:17:57 [debug] pg_user = "kong"

2020/09/28 17:17:57 [debug] plugins = {"kong-siteminder-auth","kong-kafka-log","stargate-waf-error-log","mtls","stargate-oidc-token-revoke","kong-tx-debugger","kong-plugin-oauth","zipkin","kong-error-log","kong-oidc-implicit-token","kong-response-size-limiting","request-transformer","kong-service-virtualization","kong-cluster-drain","kong-upstream-jwt","kong-splunk-log","kong-spec-expose","kong-path-based-routing","kong-oidc-multi-idp","correlation-id","oauth2","statsd","jwt","rate-limiting","acl","request-size-limiting","request-termination","cors"}

2020/09/28 17:17:57 [debug] port_maps = {}

2020/09/28 17:17:57 [debug] prefix = "/usr/local/kong/"

2020/09/28 17:17:57 [debug] proxy_access_log = "off"

2020/09/28 17:17:57 [debug] proxy_error_log = "/dev/stderr"

2020/09/28 17:17:57 [debug] proxy_listen = {"0.0.0.0:8000","0.0.0.0:8443 ssl http2 deferred reuseport"}

2020/09/28 17:17:57 [debug] real_ip_header = "X-Real-IP"

2020/09/28 17:17:57 [debug] real_ip_recursive = "off"

2020/09/28 17:17:57 [debug] role = "traditional"

2020/09/28 17:17:57 [debug] ssl_cert = "/usr/local/kong/ssl/kongcert.crt"

2020/09/28 17:17:57 [debug] ssl_cert_key = "/usr/local/kong/ssl/kongprivatekey.key"

2020/09/28 17:17:57 [debug] ssl_cipher_suite = "intermediate"

2020/09/28 17:17:57 [debug] ssl_ciphers = "ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384"

2020/09/28 17:17:57 [debug] ssl_prefer_server_ciphers = "on"

2020/09/28 17:17:57 [debug] ssl_protocols = "TLSv1.1 TLSv1.2 TLSv1.3"

2020/09/28 17:17:57 [debug] ssl_session_tickets = "on"

2020/09/28 17:17:57 [debug] ssl_session_timeout = "1d"

2020/09/28 17:17:57 [debug] status_access_log = "off"

2020/09/28 17:17:57 [debug] status_error_log = "logs/status_error.log"

2020/09/28 17:17:57 [debug] status_listen = {"off"}

2020/09/28 17:17:57 [debug] stream_listen = {"off"}

2020/09/28 17:17:57 [debug] trusted_ips = {"0.0.0.0/0","::/0"}

2020/09/28 17:17:57 [debug] upstream_keepalive = 60

2020/09/28 17:17:57 [debug] upstream_keepalive_idle_timeout = 30

2020/09/28 17:17:57 [debug] upstream_keepalive_max_requests = 50000

2020/09/28 17:17:57 [debug] upstream_keepalive_pool_size = 400

2020/09/28 17:17:57 [debug] waf = "off"

2020/09/28 17:17:57 [debug] waf_audit = "RelevantOnly"

2020/09/28 17:17:57 [debug] waf_debug_level = "0"

2020/09/28 17:17:57 [debug] waf_mode = "On"

2020/09/28 17:17:57 [debug] waf_paranoia_level = "1"

2020/09/28 17:17:57 [debug] waf_pcre_match_limit = "10000"

2020/09/28 17:17:57 [debug] waf_pcre_match_limit_recursion = "10000"

2020/09/28 17:17:57 [debug] waf_request_file_size_limit = "50000000"

2020/09/28 17:17:57 [debug] waf_request_no_file_size_limit = "50000000"

2020/09/28 17:17:57 [debug] worker_consistency = "eventual"

2020/09/28 17:17:57 [debug] worker_state_update_frequency = 5

2020/09/28 17:17:57 [verbose] prefix in use: /usr/local/kong

2020/09/28 17:17:57 [debug] resolved Cassandra contact point 'server05503' to: 10.204.90.234

2020/09/28 17:17:57 [debug] resolved Cassandra contact point 'server05505' to: 10.204.90.235

2020/09/28 17:17:57 [debug] resolved Cassandra contact point 'server05507' to: 10.86.173.32

2020/09/28 17:17:57 [debug] resolved Cassandra contact point 'server05502' to: 10.106.184.179

2020/09/28 17:17:57 [debug] resolved Cassandra contact point 'server05504' to: 10.106.184.193

2020/09/28 17:17:57 [debug] resolved Cassandra contact point 'server05506' to: 10.87.52.252

2020/09/28 17:17:58 [debug] loading subsystems migrations...

2020/09/28 17:17:58 [verbose] retrieving keyspace schema state...

2020/09/28 17:17:58 [verbose] schema state retrieved

2020/09/28 17:17:58 [debug] loading subsystems migrations...

2020/09/28 17:17:58 [verbose] retrieving keyspace schema state...

2020/09/28 17:17:58 [verbose] schema state retrieved

2020/09/28 17:17:58 [debug] migrations to run:

core: 009_200_to_210, 010_210_to_211, 011_212_to_213

jwt: 003_200_to_210

acl: 003_200_to_210, 004_212_to_213

rate-limiting: 004_200_to_210

oauth2: 004_200_to_210, 005_210_to_211

2020/09/28 17:17:58 [info] migrating core on keyspace 'kong_prod2'...

2020/09/28 17:17:58 [debug] running migration: 009_200_to_210

2020/09/28 17:18:02 [info] core migrated up to: 009_200_to_210 (pending)

2020/09/28 17:18:02 [debug] running migration: 010_210_to_211

2020/09/28 17:18:02 [info] core migrated up to: 010_210_to_211 (pending)

2020/09/28 17:18:02 [debug] running migration: 011_212_to_213

2020/09/28 17:18:02 [info] core migrated up to: 011_212_to_213 (executed)

2020/09/28 17:18:02 [info] migrating jwt on keyspace 'kong_prod2'...

2020/09/28 17:18:02 [debug] running migration: 003_200_to_210

2020/09/28 17:18:02 [info] jwt migrated up to: 003_200_to_210 (pending)

2020/09/28 17:18:02 [info] migrating acl on keyspace 'kong_prod2'...

2020/09/28 17:18:02 [debug] running migration: 003_200_to_210

2020/09/28 17:18:02 [info] acl migrated up to: 003_200_to_210 (pending)

2020/09/28 17:18:02 [debug] running migration: 004_212_to_213

2020/09/28 17:18:02 [info] acl migrated up to: 004_212_to_213 (pending)

2020/09/28 17:18:02 [info] migrating rate-limiting on keyspace 'kong_prod2'...

2020/09/28 17:18:02 [debug] running migration: 004_200_to_210

2020/09/28 17:18:02 [info] rate-limiting migrated up to: 004_200_to_210 (executed)

2020/09/28 17:18:02 [info] migrating oauth2 on keyspace 'kong_prod2'...

2020/09/28 17:18:02 [debug] running migration: 004_200_to_210

2020/09/28 17:18:03 [info] oauth2 migrated up to: 004_200_to_210 (pending)

2020/09/28 17:18:03 [debug] running migration: 005_210_to_211

2020/09/28 17:18:03 [info] oauth2 migrated up to: 005_210_to_211 (pending)

2020/09/28 17:18:03 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:18:09 [verbose] Cassandra schema consensus: reached

2020/09/28 17:18:09 [info] 9 migrations processed

2020/09/28 17:18:09 [info] 2 executed

2020/09/28 17:18:09 [info] 7 pending

2020/09/28 17:18:09 [debug] loading subsystems migrations...

2020/09/28 17:18:09 [verbose] retrieving keyspace schema state...

2020/09/28 17:18:09 [verbose] schema state retrieved

2020/09/28 17:18:09 [info]

Database has pending migrations; run 'kong migrations finish' when ready
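The summary counts in the log above (9 processed, 2 executed, 7 pending) can be cross-checked against the individual "migrated up to" lines. Below is a minimal Python sketch of that check; the regular expression and the `tally` helper are my own illustration, not part of Kong, and the sample is just four lines copied from the log.

```python
import re
from collections import Counter

# Matches lines such as:
#   2020/09/28 17:18:02 [info] core migrated up to: 009_200_to_210 (pending)
# The pattern is an assumption based on the log format shown in this report.
MIGRATED = re.compile(r"\[info\] (\S+) migrated up to: (\S+) \((pending|executed)\)")


def tally(log_text):
    """Count migrations by the state reported on each 'migrated up to' line."""
    counts = Counter()
    for line in log_text.splitlines():
        match = MIGRATED.search(line)
        if match:
            counts[match.group(3)] += 1
    return counts


# Four lines copied from the 'kong migrations up' log above.
sample = """\
2020/09/28 17:18:02 [info] core migrated up to: 009_200_to_210 (pending)
2020/09/28 17:18:02 [info] core migrated up to: 010_210_to_211 (pending)
2020/09/28 17:18:02 [info] core migrated up to: 011_212_to_213 (executed)
2020/09/28 17:18:02 [info] rate-limiting migrated up to: 004_200_to_210 (executed)
"""

counts = tally(sample)
print(counts["pending"], counts["executed"])
```

Fed the full up log instead of the sample, the same count should agree with the "2 executed" and "7 pending" summary lines.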

So far so good, right? Well, it's after the up that the finish starts to see problems (env variable dump removed from the verbose output for brevity):

/ $ kong migrations finish --db-timeout 120 --vv

2020/09/28 17:19:57 [debug] loading subsystems migrations...

2020/09/28 17:19:57 [verbose] retrieving keyspace schema state...

2020/09/28 17:19:57 [verbose] schema state retrieved

2020/09/28 17:19:57 [debug] loading subsystems migrations...

2020/09/28 17:19:57 [verbose] retrieving keyspace schema state...

2020/09/28 17:19:58 [verbose] schema state retrieved

2020/09/28 17:19:58 [debug] pending migrations to finish:

core: 009_200_to_210, 010_210_to_211

jwt: 003_200_to_210

acl: 003_200_to_210, 004_212_to_213

oauth2: 004_200_to_210, 005_210_to_211

2020/09/28 17:19:58 [info] migrating core on keyspace 'kong_prod2'...

2020/09/28 17:19:58 [debug] running migration: 009_200_to_210

2020/09/28 17:20:12 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:20:12 [verbose] Cassandra schema consensus: reached

2020/09/28 17:20:12 [info] core migrated up to: 009_200_to_210 (executed)

2020/09/28 17:20:12 [debug] running migration: 010_210_to_211

2020/09/28 17:20:12 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:20:12 [verbose] Cassandra schema consensus: reached

2020/09/28 17:20:12 [info] core migrated up to: 010_210_to_211 (executed)

2020/09/28 17:20:12 [info] migrating jwt on keyspace 'kong_prod2'...

2020/09/28 17:20:12 [debug] running migration: 003_200_to_210

2020/09/28 17:20:14 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:20:14 [verbose] Cassandra schema consensus: not reached

Error:

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:161: [Cassandra error] cluster_mutex callback threw an error: ...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:142: [Cassandra error] failed to wait for schema consensus: [Read failure] Operation failed - received 0 responses and 1 failures

stack traceback:

[C]: in function 'assert'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:142: in function <...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:126>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/db/init.lua:364: in function </usr/local/kong/luarocks/share/lua/5.1/kong/db/init.lua:314>

[C]: in function 'pcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/concurrency.lua:45: in function 'cluster_mutex'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:126: in function 'finish'

...ocal/kong/luarocks/share/lua/5.1/kong/cmd/migrations.lua:184: in function 'cmd_exec'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:45>

/usr/bin/kong:9: in function 'file_gen'

init_worker_by_lua:50: in function <init_worker_by_lua:48>

[C]: in function 'xpcall'

init_worker_by_lua:57: in function <init_worker_by_lua:55>

stack traceback:

[C]: in function 'error'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:161: in function 'finish'

...ocal/kong/luarocks/share/lua/5.1/kong/cmd/migrations.lua:184: in function 'cmd_exec'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:45>

/usr/bin/kong:9: in function 'file_gen'

init_worker_by_lua:50: in function <init_worker_by_lua:48>

[C]: in function 'xpcall'

init_worker_by_lua:57: in function <init_worker_by_lua:55>

Second run of finish to try again:

2020/09/28 17:22:14 [debug] loading subsystems migrations...

2020/09/28 17:22:14 [verbose] retrieving keyspace schema state...

2020/09/28 17:22:14 [verbose] schema state retrieved

2020/09/28 17:22:15 [debug] loading subsystems migrations...

2020/09/28 17:22:15 [verbose] retrieving keyspace schema state...

2020/09/28 17:22:15 [verbose] schema state retrieved

2020/09/28 17:22:15 [debug] pending migrations to finish:

acl: 003_200_to_210, 004_212_to_213

oauth2: 004_200_to_210, 005_210_to_211

2020/09/28 17:22:15 [info] migrating acl on keyspace 'kong_prod2'...

2020/09/28 17:22:15 [debug] running migration: 003_200_to_210

2020/09/28 17:22:28 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:22:28 [verbose] Cassandra schema consensus: not reached

Error:

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:161: [Cassandra error] cluster_mutex callback threw an error: ...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:142: [Cassandra error] failed to wait for schema consensus: [Read failure] Operation failed - received 0 responses and 1 failures

stack traceback:

[C]: in function 'assert'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:142: in function <...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:126>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/db/init.lua:364: in function </usr/local/kong/luarocks/share/lua/5.1/kong/db/init.lua:314>

[C]: in function 'pcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/concurrency.lua:45: in function 'cluster_mutex'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:126: in function 'finish'

...ocal/kong/luarocks/share/lua/5.1/kong/cmd/migrations.lua:184: in function 'cmd_exec'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:45>

/usr/bin/kong:9: in function 'file_gen'

init_worker_by_lua:50: in function <init_worker_by_lua:48>

[C]: in function 'xpcall'

init_worker_by_lua:57: in function <init_worker_by_lua:55>

stack traceback:

[C]: in function 'error'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:161: in function 'finish'

...ocal/kong/luarocks/share/lua/5.1/kong/cmd/migrations.lua:184: in function 'cmd_exec'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:45>

/usr/bin/kong:9: in function 'file_gen'

init_worker_by_lua:50: in function <init_worker_by_lua:48>

[C]: in function 'xpcall'

init_worker_by_lua:57: in function <init_worker_by_lua:55>

Third finish:

2020/09/28 17:23:28 [verbose] schema state retrieved

2020/09/28 17:23:28 [debug] loading subsystems migrations...

2020/09/28 17:23:28 [verbose] retrieving keyspace schema state...

2020/09/28 17:23:29 [verbose] schema state retrieved

2020/09/28 17:23:29 [debug] pending migrations to finish:

acl: 004_212_to_213

oauth2: 004_200_to_210, 005_210_to_211

2020/09/28 17:23:29 [info] migrating acl on keyspace 'kong_prod2'...

2020/09/28 17:23:29 [debug] running migration: 004_212_to_213

2020/09/28 17:23:29 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:23:29 [verbose] Cassandra schema consensus: not reached

Error:

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:161: [Cassandra error] cluster_mutex callback threw an error: ...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:142: [Cassandra error] failed to wait for schema consensus: [Read failure] Operation failed - received 0 responses and 1 failures

stack traceback:

[C]: in function 'assert'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:142: in function <...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:126>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/db/init.lua:364: in function </usr/local/kong/luarocks/share/lua/5.1/kong/db/init.lua:314>

[C]: in function 'pcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/concurrency.lua:45: in function 'cluster_mutex'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:126: in function 'finish'

...ocal/kong/luarocks/share/lua/5.1/kong/cmd/migrations.lua:184: in function 'cmd_exec'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:45>

/usr/bin/kong:9: in function 'file_gen'

init_worker_by_lua:50: in function <init_worker_by_lua:48>

[C]: in function 'xpcall'

init_worker_by_lua:57: in function <init_worker_by_lua:55>

stack traceback:

[C]: in function 'error'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:161: in function 'finish'

...ocal/kong/luarocks/share/lua/5.1/kong/cmd/migrations.lua:184: in function 'cmd_exec'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:45>

/usr/bin/kong:9: in function 'file_gen'

init_worker_by_lua:50: in function <init_worker_by_lua:48>

[C]: in function 'xpcall'

init_worker_by_lua:57: in function <init_worker_by_lua:55>

Fourth finish:

2020/09/28 17:24:15 [debug] loading subsystems migrations...

2020/09/28 17:24:15 [verbose] retrieving keyspace schema state...

2020/09/28 17:24:15 [verbose] schema state retrieved

2020/09/28 17:24:15 [debug] loading subsystems migrations...

2020/09/28 17:24:15 [verbose] retrieving keyspace schema state...

2020/09/28 17:24:16 [verbose] schema state retrieved

2020/09/28 17:24:16 [debug] pending migrations to finish:

oauth2: 004_200_to_210, 005_210_to_211

2020/09/28 17:24:16 [info] migrating oauth2 on keyspace 'kong_prod2'...

2020/09/28 17:24:16 [debug] running migration: 004_200_to_210

2020/09/28 17:24:18 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:24:18 [verbose] Cassandra schema consensus: reached

2020/09/28 17:24:18 [info] oauth2 migrated up to: 004_200_to_210 (executed)

2020/09/28 17:24:18 [debug] running migration: 005_210_to_211

2020/09/28 17:24:18 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:24:18 [verbose] Cassandra schema consensus: reached

2020/09/28 17:24:18 [info] oauth2 migrated up to: 005_210_to_211 (executed)

2020/09/28 17:24:18 [info] 2 migrations processed

2020/09/28 17:24:18 [info] 2 executed

2020/09/28 17:24:18 [debug] loading subsystems migrations...

2020/09/28 17:24:18 [verbose] retrieving keyspace schema state...

2020/09/28 17:24:18 [verbose] schema state retrieved

2020/09/28 17:24:18 [info] No pending migrations to finish

Lastly, I confirmed via list and finish again that there was nothing left to do:

2020/09/28 17:25:35 [debug] loading subsystems migrations...

2020/09/28 17:25:35 [verbose] retrieving keyspace schema state...

2020/09/28 17:25:35 [verbose] schema state retrieved

2020/09/28 17:25:35 [info] Executed migrations:

core: 000_base, 003_100_to_110, 004_110_to_120, 005_120_to_130, 006_130_to_140, 007_140_to_150, 008_150_to_200, 009_200_to_210, 010_210_to_211, 011_212_to_213

jwt: 000_base_jwt, 002_130_to_140, 003_200_to_210

acl: 000_base_acl, 002_130_to_140, 003_200_to_210, 004_212_to_213

rate-limiting: 000_base_rate_limiting, 003_10_to_112, 004_200_to_210

oauth2: 000_base_oauth2, 003_130_to_140, 004_200_to_210, 005_210_to_211

Re-entrancy still looks like a problem with the workspace tables, and it likely correlates with how many times I re-executed the finish command to get through this upgrade, since finish failed a few times in a row and seemed to progress one table at a time with each failure:

Also find it interesting how I set the C* schema consensus timeouts long but it bounces back instantly saying consensus not reached? Ex:

2020/09/28 17:23:29 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:23:29 [verbose] Cassandra schema consensus: not reached

cc @bungle @locao @hishamhm

Edit:

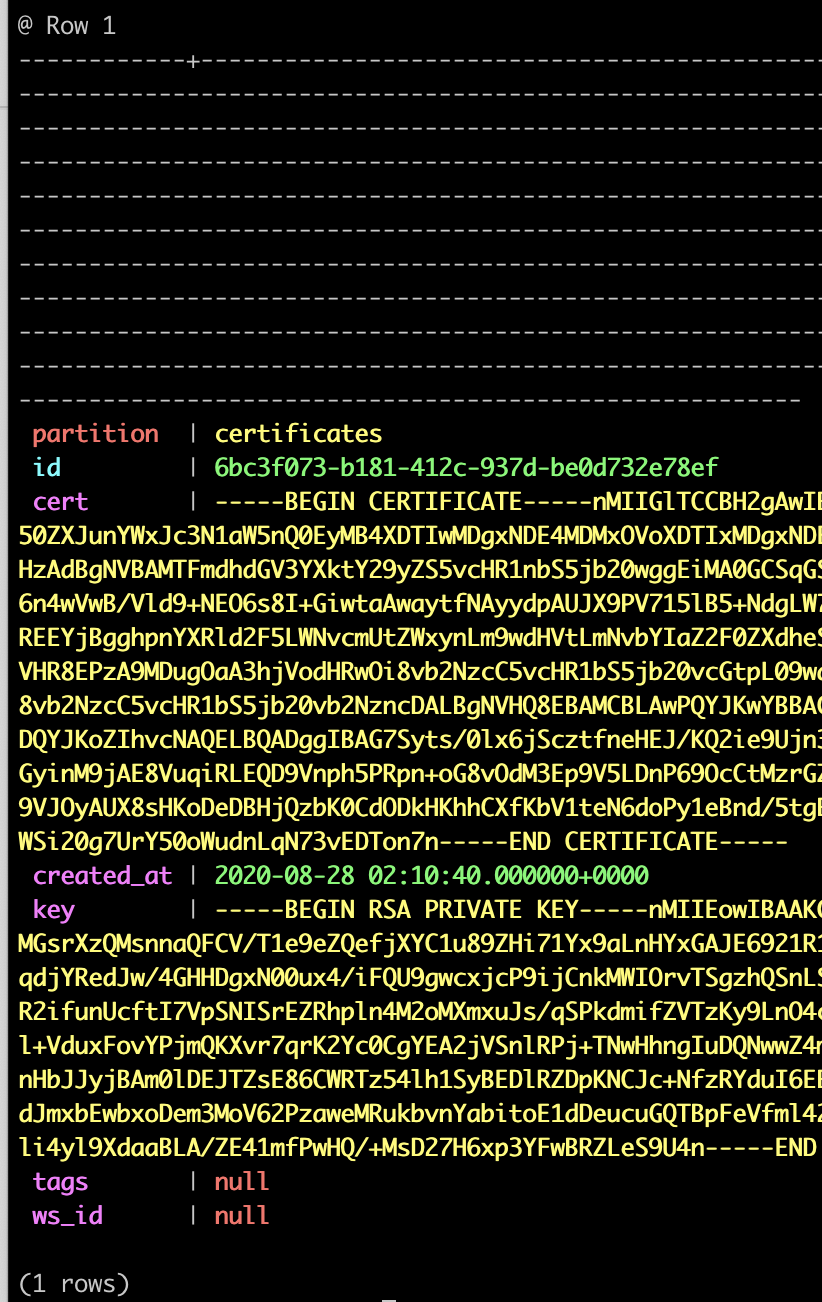

Yeah I see some tables like oauth2_credentials using ws_id: 38321a07-1d68-4aa8-b01f-8d64d5bf665a

and jwt_secrets using ws_id: 8f1d01e1-0609-4bda-a021-a01e619d1590

Additional Details & Logs

- Kong version 2.1.4

All 81 comments

Thank you for the detailed report as always! We'll be looking into it.

Yeah, I think it would have worked fine if the finish had not died while waiting for schema consensus and had continued processing. But it looks like that can't always be the case for us, so it can't be assumed in code. I tried the same thing in another of our prod environments and the same errors happened there too. I also checked the C* nodes for schema mismatches or cluster health problems, since this may point to C* issues alongside Kong not handling every situation (such as restarting a migrations finish because the first execution didn't work out), but nothing shows up there either.

root@server05502:/root

# nodetool describecluster

Cluster Information:

Name: Stargate

Snitch: org.apache.cassandra.locator.GossipingPropertyFileSnitch

DynamicEndPointSnitch: enabled

Partitioner: org.apache.cassandra.dht.Murmur3Partitioner

Schema versions:

12b6ca53-8e84-3a66-8793-7ce091b5dcca: [10.204.90.234, 10.86.173.32, 10.204.90.235, 10.106.184.179, 10.106.184.193, 10.87.52.252]

root@server05502:/root

# nodetool status

Datacenter: DC1

===============

Status=Up/Down

|/ State=Normal/Leaving/Joining/Moving

-- Address Load Tokens Owns (effective) Host ID Rack

UN 10.204.90.234 113.89 MiB 256 100.0% 5be9bdf7-1337-42ac-8177-341044a4758b RACK2

UN 10.86.173.32 117.4 MiB 256 100.0% 0f8d693c-4d99-453b-826e-6e5c68538037 RACK6

UN 10.204.90.235 335.54 MiB 256 100.0% 8e3192a7-e60c-41fb-8ca6-ce809440d982 RACK4

Datacenter: DC2

===============

Status=Up/Down

|/ State=Normal/Leaving/Joining/Moving

-- Address Load Tokens Owns (effective) Host ID Rack

UN 10.106.184.179 117.48 MiB 256 100.0% 33747998-70fd-42c4-87cb-c7c49a182c3e RACK1

UN 10.87.52.252 104.64 MiB 256 100.0% 74a60b30-c83c-4aa8-b3ae-7ccb16fb817f RACK5

UN 10.106.184.193 107.06 MiB 256 100.0% 61320d76-aa12-43f0-9684-b1413e57c90d RACK3

So that wasn't any help. I wonder if there are any analytic health checks I can run on the cloned keyspace itself to check for weirdness or mismatches too.

Edit - Ran the same commands on another node in the other DC and they checked out fine too. 😢 So no smoking gun there for why C* could be the culprit for some of this. Regardless, I hope Kong makes finish safe to execute multiple times, so that all the tables still end up using one ws_id and stay in sync.

It certainly feels like some kind of eventual consistency issue. We now insert the workspace only after doing a read-before-write (AFAIK), but perhaps that read returns nil while the earlier write is still propagating, making us write again. And as the default ws id can be different, we cannot just do an update.

Is it not possible to add logic to the finish command so that if the workspaces table already has a default record, that record is used to continue processing? Or is the reservation that this could break other enterprise use cases?

Or maybe thats exactly what you meant here?

> We now insert the workspace only after doing a read-before-write (AFAIK), but perhaps that read returns nil while the earlier write is still propagating

But I imagine independent executions of the finish command don't keep up with checking if there was already a ws generated, it probably just generates a fresh one and drops it in based on behavior I have seen?

Maybe the fact that we do full replication with local quorum r/w, along with all our Kong C* settings, plays a role in this problem, but we do so because it's a must to ensure the OAuth2.0 bearer token plugin functions correctly for us.

To debug the behavior even if you can't reproduce this against your own localhost C* cluster:

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:161: [Cassandra error] cluster_mutex callback threw an error: ...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:142: [Cassandra error] failed to wait for schema consensus: [Read failure] Operation failed - received 0 responses and 1 failures

I imagine you can set manual break points in the code to reproduce the re-entrant injection of ws ids when finish runs multiple times and stops at a certain point in its execution, as happened for us above.

> Or maybe thats exactly what you meant here?

Yes, that's what we try to do. During finish, we first try to read the default workspace value to use it, and if we get nothing, we create it. Looks like the SELECT is returning an empty response.
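The read-then-create step described above can be sketched as a toy simulation. This is not Kong's migration code: `FlakyWorkspaceStore`, `finish_once` and `simulate` are hypothetical names, and the "read that misses" is simply forced by a counter to mimic a SELECT that comes back empty even though a default workspace already exists.

```python
import uuid


class FlakyWorkspaceStore:
    """Toy stand-in for the workspaces table (hypothetical, not Kong code)."""

    def __init__(self, reads_that_miss):
        self.rows = []                      # default workspace ids written so far
        self.reads_that_miss = reads_that_miss

    def select_default(self):
        # Simulate a SELECT that returns nothing although a row may exist.
        if self.reads_that_miss > 0:
            self.reads_that_miss -= 1
            return None
        return self.rows[0] if self.rows else None

    def insert_default(self):
        ws_id = str(uuid.uuid4())
        self.rows.append(ws_id)
        return ws_id


def finish_once(store):
    """Read-before-write: reuse the default workspace, or create one."""
    ws_id = store.select_default()
    if ws_id is None:
        ws_id = store.insert_default()
    return ws_id


def simulate(runs, reads_that_miss):
    """Run finish `runs` times; return the ws ids used and the stored rows."""
    store = FlakyWorkspaceStore(reads_that_miss)
    used = [finish_once(store) for _ in range(runs)]
    return used, store.rows


if __name__ == "__main__":
    used, rows = simulate(runs=4, reads_that_miss=3)
    print(len(rows), "default workspaces after 4 finish runs")
```

Every run whose read misses creates a fresh default workspace, so tables migrated in different runs can end up stamped with different ws_id values, which matches the symptom reported here.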

> Maybe the fact that we do full replication with local quorum r/w, along with all our Kong C* settings, plays a role in this problem, but we do so because it's a must to ensure the OAuth2.0 bearer token plugin functions correctly for us.

If anything, that should have brought _stronger_ consistency, right?

Puzzling.

I'm wondering if chasing after this isn't a more fruitful avenue:

> Also find it interesting how I set the C* schema consensus timeouts long but it bounces back instantly saying consensus not reached? Ex:
>
> 2020/09/28 17:23:29 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...
>
> 2020/09/28 17:23:29 [verbose] Cassandra schema consensus: not reached

Hmm, if it were an eventual consistency problem, though, the time between finish executions should have been enough for the write to settle :/ ... Pretty sure I could wait 10 mins between each finish run and it would still result in new ws_ids being entered, meaning that first select must always be failing somehow for us... odd.

And yeah, one obvious red flag to me is that we set the schema consensus timeout huge but it does not seem to be honored. Either it snaps back right away due to an underlying error (that's not printed, with no details given), or it simply won't wait for the given time and the wait is derived from some other value, either a default or hardcoded.

I believe that it fails here:

https://github.com/thibaultcha/lua-cassandra/blob/master/lib/resty/cassandra/cluster.lua#L755-L764

Which means either of these fail:

https://github.com/thibaultcha/lua-cassandra/blob/master/lib/resty/cassandra/cluster.lua#L726-L730

It is busy looping, but it seems like it fails quite quick on either of those queries.

As a workaround, one thing we could do is try to reach schema consensus more than once. But as you said, even if you waited you could still get the issue. I am also thinking that Cassandra indexes could lie, or that some of the queries are not executed at the right consistency level. Nodes could also go down while migrating, but you got it 4 times in a row, so...

Can you execute those queries:

SELECT schema_version FROM system.local

-- AND

SELECT schema_version FROM system.peers

and share the data when this happens (or is about to happen)?

Feels like this has been discussed on several occasions:

https://github.com/Kong/kong/issues/4229

https://github.com/Kong/kong/issues/4228

https://discuss.konghq.com/t/kong-migrations-against-a-cassandra-cluster-fails/2660

I left them here just as pointers.

@jeremyjpj0916, could it be a tombstone related issue:

https://stackoverflow.com/a/37119894

Okay, it might be that we are talking about two different issues:

1. duplicated default workspaces

2. waiting for schema consensus fails

For 1: duplicated default workspaces:

We might want to ensure that these:

https://github.com/Kong/kong/blob/master/kong/db/migrations/operations/200_to_210.lua#L24

https://github.com/Kong/kong/blob/master/kong/db/migrations/operations/200_to_210.lua#L43

https://github.com/Kong/kong/blob/master/kong/db/migrations/operations/212_to_213.lua#L24

https://github.com/Kong/kong/blob/master/kong/db/migrations/operations/212_to_213.lua#L43

run at a stricter consistency level. At the moment we are not sure whether changing the consistency level fixes it, but it is a good guess.
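As background for the consistency-level guess, here is a small sketch of the usual overlap rule: a read is only guaranteed to see an acknowledged write when the number of replicas that acknowledged the write plus the number consulted by the read exceeds the replica count. The helper names are hypothetical, and the replication factor of 3 per DC is an assumption inferred from the `nodetool status` output earlier in the thread (three nodes per DC, each owning 100%). It makes no claim about which consistency levels Kong's migrations actually use.

```python
def quorum(replicas: int) -> int:
    """Smallest majority of `replicas`."""
    return replicas // 2 + 1


def read_sees_write(replicas: int, write_acks: int, read_acks: int) -> bool:
    """True when every read set must overlap every acknowledged write set."""
    return write_acks + read_acks > replicas


# Assumption: 3 replicas per DC, 2 DCs (not confirmed by the thread).
RF_PER_DC = 3
TOTAL_REPLICAS = 2 * RF_PER_DC

if __name__ == "__main__":
    q = quorum(RF_PER_DC)
    # Quorum write and quorum read against the same DC: guaranteed overlap.
    print("same DC, quorum r/w:", read_sees_write(RF_PER_DC, q, q))
    # Quorum write, read from a single replica: no guarantee.
    print("same DC, read ONE:", read_sees_write(RF_PER_DC, q, 1))
    # Local quorum write in one DC, local quorum read in the other: no guarantee.
    print("cross DC, local quorum r/w:", read_sees_write(TOTAL_REPLICAS, q, q))
```

If the write and the later read both go through a local quorum in the same DC, the rule says the read should see the row, which is why the empty SELECT is puzzling; the cases without a guarantee are the ones a stricter consistency level would rule out.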

For 2: waiting for schema consensus fails:

It feels like it is failing here:

https://github.com/Kong/kong/blob/master/kong/db/init.lua#L577

And Cassandra implements it here:

https://github.com/Kong/kong/blob/master/kong/db/strategies/cassandra/connector.lua#L396-L415

For some reason it fails sometimes with:

[Read failure] Operation failed - received 0 responses and 1 failures

This error message is coming from Cassandra itself, and is forwarded from here:

https://github.com/thibaultcha/lua-cassandra/blob/master/lib/resty/cassandra/cluster.lua#L726-L730

At the moment we don't fully understand why this error happens (tombstones?), but it seems to happen in a rather non-fatal place. We could retry more, e.g. keep trying for the full max_schema_consensus_wait (120000ms timeout), and not fail on the first [Read failure].
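The retry idea can be sketched as follows. This is an illustration only, not the lua-cassandra implementation and not the contents of the branch mentioned below: `wait_for_schema_consensus`, `fetch_local` and `fetch_peers` are hypothetical names, with the two fetch callables standing in for the `system.local` and `system.peers` queries. The point is that a failed query is remembered and retried until the deadline instead of aborting the wait on the first error.

```python
import time


def schema_versions_agree(local_version, peer_versions):
    """Consensus means every peer reports the coordinator's schema version."""
    return all(version == local_version for version in peer_versions)


def wait_for_schema_consensus(fetch_local, fetch_peers, timeout_s,
                              interval_s=0.5,
                              clock=time.monotonic, sleep=time.sleep):
    """Poll until versions agree or the deadline passes.

    Returns (reached, last_error). A query error does not end the wait;
    it is kept and reported only if the deadline is hit.
    """
    deadline = clock() + timeout_s
    last_error = None
    while True:
        try:
            if schema_versions_agree(fetch_local(), fetch_peers()):
                return True, None
            last_error = None          # queries worked, versions just differ
        except Exception as exc:       # e.g. a transient read failure
            last_error = exc
        if clock() >= deadline:
            return False, last_error
        sleep(interval_s)


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky_local():
        # Fail twice, then answer, to mimic a transient [Read failure].
        attempts["n"] += 1
        if attempts["n"] <= 2:
            raise RuntimeError("[Read failure] simulated")
        return "12b6ca53"

    reached, err = wait_for_schema_consensus(
        flaky_local, lambda: ["12b6ca53"] * 5, timeout_s=5, interval_s=0.01)
    print(reached, err, attempts["n"])
```

With this shape, a 120000ms timeout is actually spent retrying, and the error is surfaced only if the cluster never answers within that window.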

@jeremyjpj0916 there is now #6411. Would it be too much to ask you to try that and report back whether it has any effect on your environment? That branch does not have much more than that compared to the latest 2.1.4, as can be seen here: https://github.com/Kong/kong/compare/2.1.4...fix/cassandra-migrations

Just tried the two commands on one of the prod nodes where I had been testing these migrations on a cloned keyspace. Outside the context of how Kong uses the C* Lua client lib tibo wrote, I'm not sure this helps; it just shows these commands do generally work in a vacuum:

> Can you execute those queries:
>
> SELECT schema_version FROM system.local
>
> -- AND
>
> SELECT schema_version FROM system.peers

root@server05502:/root

# cqlsh --ssl

Connected to Stargate at 127.0.0.1:9042.

[cqlsh 5.0.1 | Cassandra 3.11.2 | CQL spec 3.4.4 | Native protocol v4]

Use HELP for help.

****@cqlsh> SELECT schema_version FROM system.local;

schema_version

--------------------------------------

12b6ca53-8e84-3a66-8793-7ce091b5dcca

(1 rows)

****@cqlsh> SELECT schema_version FROM system.peers;

schema_version

--------------------------------------

12b6ca53-8e84-3a66-8793-7ce091b5dcca

12b6ca53-8e84-3a66-8793-7ce091b5dcca

12b6ca53-8e84-3a66-8793-7ce091b5dcca

12b6ca53-8e84-3a66-8793-7ce091b5dcca

12b6ca53-8e84-3a66-8793-7ce091b5dcca

(5 rows)

****@cqlsh>

As for tombstones I remember increasing the tombstone fail threshold higher too. Also making the gc policy more aggressive than the default:

ALTER TABLE kong_stage3.ratelimiting_metrics WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.locks WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.oauth2_tokens WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.locks WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.snis WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.plugins WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.targets WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.consumers WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.upstreams WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.schema_meta WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.response_ratelimiting_metrics WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.tags WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.acls WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.cluster_ca WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.cluster_events WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.jwt_secrets WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.apis WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.basicauth_credentials WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.keyauth_credentials WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.oauth2_credentials WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.certificates WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.services WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.oauth2_authorization_codes WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.routes WITH GC_GRACE_SECONDS = 3600;
ALTER TABLE kong_stage3.hmacauth_credentials WITH GC_GRACE_SECONDS = 3600;

Which is 1 hr vs the default 10 days for GC grace. I have forgotten if the default_time_to_live field impacts anything as well with the gc grace set before then though. Are you familiar? I may need to google around on that if its not optimal currently too.

Ex:

Then the fail threshold was set to:

# When executing a scan, within or across a partition, we need to keep the

# tombstones seen in memory so we can return them to the coordinator, which

# will use them to make sure other replicas also know about the deleted rows.

# With workloads that generate a lot of tombstones, this can cause performance

# problems and even exaust the server heap.

# (http://www.datastax.com/dev/blog/cassandra-anti-patterns-queues-and-queue-like-datasets)

# Adjust the thresholds here if you understand the dangers and want to

# scan more tombstones anyway. These thresholds may also be adjusted at runtime

# using the StorageService mbean.

tombstone_warn_threshold: 10000

tombstone_failure_threshold: 500000

vs the default of 100,000

What would the migrations be querying each time that would cause that, though? Does the cluster_events table get hit each time? I know the oauth2_tokens table (probably our largest table in terms of records) probably does not get hit on every migrations finish command, especially after it has been passed through in the list. Could the oauth2_tokens table reaching millions of tombstones within an hour be causing it? Or the cluster_events table, for that matter? Unsure... I'm not sure how I could tune it further unless we set gc grace to 0, which is not recommended in C* cluster environments: I have heard that if a node goes down you start getting "ghost" records, since proper consensus can't be achieved via tombstone info. Maybe on a table like oauth2_tokens or cluster_events that matters less, because the rows have timestamp values that help dictate their validity to Kong, so maybe 0 isn't so bad for some of the more intensely written tables.

@bungle More than happy to try your latest PR today, won't have results likely till later this evening so in about 10 or so hours due to some restrictions on when I can touch some of our hosts for this testing. Will get that PR of files dropped in so the modified Kong will be ready to go then though. Fingers crossed it yields some positive success.

Appreciate the work you put in trying to resolve this scenario.

Also did do a few queries against our live C* db:

****@cqlsh:kong_prod> select count(*) from cluster_events;

count

-------

87271

(1 rows)

Warnings :

Aggregation query used without partition key

Read 100 live rows and 89643 tombstone cells for query SELECT * FROM kong_prod.cluster_events LIMIT 100 (see tombstone_warn_threshold)

Lotta tombstones here for sure.
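To put that warning next to the thresholds quoted earlier in the thread, here is a small sketch. `classify_tombstones` is a hypothetical helper, not a Cassandra API; it assumes a read warns once it scans more tombstones than `tombstone_warn_threshold` and is aborted once it scans more than `tombstone_failure_threshold`. The stock values used for comparison are Cassandra's documented defaults of 1000 and 100000.

```python
def classify_tombstones(count, warn_threshold, failure_threshold):
    """Return 'ok', 'warn' or 'fail' for a scan that read `count` tombstones."""
    if count > failure_threshold:
        return "fail"
    if count > warn_threshold:
        return "warn"
    return "ok"


if __name__ == "__main__":
    scanned = 89643  # tombstone cells reported for the LIMIT 100 query above

    # Thresholds as configured on this cluster (from the cassandra.yaml excerpt).
    print("configured:", classify_tombstones(scanned, 10000, 500000))

    # Stock defaults, for comparison.
    print("defaults:  ", classify_tombstones(scanned, 1000, 100000))
    print("share of default failure threshold:", scanned / 100000)
```

Under the raised thresholds this query only warns, but at the stock failure threshold it would already be close to the limit, so a tombstone-related read failure on a table like this is at least plausible on a cluster with default settings.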

As for querying the oauth2_tokens table from one of the nodes via cqlsh... uhh thats probably not good:

****@cqlsh:kong_prod> select count(*) from oauth2_tokens;

OperationTimedOut: errors={'127.0.0.1': 'Client request timeout. See Session.execute[_async](timeout)'}, last_host=127.0.0.1

Need a longer timeout just to figure things out I suppose.

Honestly pondering whether not tombstoning cluster_events and oauth2_tokens at all is the play... since that kind of data should not really need strict consensus (a little mismatched data or temporary ghost records is okay, right?) since Kong can see timestamps and valid tokens etc. cluster_events may be more important, but I am not sure... pretty sure most of those events are 1 to 1 with oauth token related invalidations.

yep a little select confirms most of those entries too:

*****@cqlsh:kong_prod> select * from cluster_events limit 10;

channel | at | node_id | id | data | nbf

---------------+---------------------------------+--------------------------------------+--------------------------------------+--------------------------------------------------------+---------------------------------

invalidations | 2020-10-01 19:44:10.123000+0000 | 3ec49684-1e0b-45ac-a13b-33135cc09164 | 6de48d36-18cd-41e9-b431-6f24f0a507f0 | oauth2_tokens:XXX | 2020-10-01 19:44:15.111000+0000

invalidations | 2020-10-01 19:44:10.153000+0000 | 3ec49684-1e0b-45ac-a13b-33135cc09164 | c683d31f-c001-4c45-80ba-47b95780f3f4 | oauth2_tokens:XXX | 2020-10-01 19:44:15.152000+0000

invalidations | 2020-10-01 19:44:10.167000+0000 | 7dccf201-351a-402e-a33f-f1f291063f87 | 407b95f7-05eb-47f8-bc25-689c3065b9bf | oauth2_tokens:XXX | 2020-10-01 19:44:15.167000+0000

invalidations | 2020-10-01 19:44:10.223000+0000 | 21881b66-c99a-470c-ab76-76dcd7fbbc90 | 86180cd0-07ea-43a0-9189-bc63452a817d | oauth2_tokens:XXX | 2020-10-01 19:44:15.222000+0000

invalidations | 2020-10-01 19:44:10.230000+0000 | 7456814f-9464-4602-9a1e-7e2b55e4c4b2 | 1aa7f89d-a98c-4a3b-a62f-cbe8458d2b12 | oauth2_tokens:XXX | 2020-10-01 19:44:15.229000+0000

invalidations | 2020-10-01 19:44:10.256000+0000 | 21881b66-c99a-470c-ab76-76dcd7fbbc90 | 6b5c3f94-db6b-4103-95c1-95b4f1de2700 | oauth2_tokens:XXX | 2020-10-01 19:44:15.244000+0000

..... continued ...

Probably not totally related, but the large amount of invalidation events due to oauth2 does start to clog up the cluster events table in general... :

I would think oauth2 client credential grant type tokens don't even need an "invalidation" event, really ever? They are generated and valid for a given ttl, unless you could revoke them early (is that even a thing in the oauth2 CC pattern? Does Kong support revoking client credential bearer tokens? We personally have never used such a feature). I think nodes can query the tokens table and know what's valid/invalid based on the token generation timestamps in the table? Isn't it just a /token endpoint that produces valid tokens given the right client_id, client_secret and bearer type for oauth2 CC?

Not super familiar either but nodetool cfstats details on that given oauth2_tokens table looks like this in one prod env:

Table: oauth2_tokens

SSTable count: 4

Space used (live): 153678228

Space used (total): 153678228

Space used by snapshots (total): 0

Off heap memory used (total): 2694448

SSTable Compression Ratio: 0.5890186627210159

Number of partitions (estimate): 1614912

Memtable cell count: 5138

Memtable data size: 1268839

Memtable off heap memory used: 0

Memtable switch count: 1288

Local read count: 24577751

Local read latency: 0.067 ms

Local write count: 53152217

Local write latency: 0.109 ms

Pending flushes: 0

Percent repaired: 70.18

Bloom filter false positives: 1

Bloom filter false ratio: 0.00000

Bloom filter space used: 2307288

Bloom filter off heap memory used: 2307256

Index summary off heap memory used: 363776

Compression metadata off heap memory used: 23416

Compacted partition minimum bytes: 43

Compacted partition maximum bytes: 179

Compacted partition mean bytes: 128

Average live cells per slice (last five minutes): 1.0

Maximum live cells per slice (last five minutes): 1

Average tombstones per slice (last five minutes): 1.984081041968162

Maximum tombstones per slice (last five minutes): 4

Dropped Mutations: 2052

This is mostly just informational to get an idea of what its like running things like oauth2 plugin in a well used environment.

@jeremyjpj0916 good feedback as always. I will create cards for us to improve the oauth2 situation. We have two lines in code:

kong.db.oauth2_authorization_codes:delete({ id = auth_code.id })

and

kong.db.oauth2_tokens:delete({ id = token.id })

There are a couple of updates too. The invalidation event is for nodes to clear caches. But let's see if there is a better way.

@bungle

Files all prepped, will be cloning the keyspace again in a few hrs, I noticed you refactored all the core migrations.

Would the plugin migrations need a refactor too, such as these from my initial migration logs:

jwt: 003_200_to_210

acl: 003_200_to_210, 004_212_to_213

oauth2: 004_200_to_210, 005_210_to_211

To gain the benefits you are hoping will occur on the core migration executions?

Such as the connector:query() -> coordinator:execute() ?

Asking this because we saw in my first finish execution the core migrations seemed to have gone off without problem, but it was subsequent individual plugin migrations finish calls that seemed to get stuck 1 by 1:

For reference again:

2020/09/28 17:19:58 [debug] pending migrations to finish:

core: 009_200_to_210, 010_210_to_211

jwt: 003_200_to_210

acl: 003_200_to_210, 004_212_to_213

oauth2: 004_200_to_210, 005_210_to_211

2020/09/28 17:19:58 [info] migrating core on keyspace 'kong_prod2'...

2020/09/28 17:19:58 [debug] running migration: 009_200_to_210

2020/09/28 17:20:12 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:20:12 [verbose] Cassandra schema consensus: reached

2020/09/28 17:20:12 [info] core migrated up to: 009_200_to_210 (executed)

2020/09/28 17:20:12 [debug] running migration: 010_210_to_211

2020/09/28 17:20:12 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:20:12 [verbose] Cassandra schema consensus: reached

2020/09/28 17:20:12 [info] core migrated up to: 010_210_to_211 (executed)

2020/09/28 17:20:12 [info] migrating jwt on keyspace 'kong_prod2'...

2020/09/28 17:20:12 [debug] running migration: 003_200_to_210

2020/09/28 17:20:14 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/09/28 17:20:14 [verbose] Cassandra schema consensus: not reached

Error:

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:161: [Cassandra error] cluster_mutex callback threw an error: ...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:142: [Cassandra error] failed to wait for schema consensus: [Read failure] Operation failed - received 0 responses and 1 failures

stack traceback:

[C]: in function 'assert'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:142: in function <...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:126>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/db/init.lua:364: in function </usr/local/kong/luarocks/share/lua/5.1/kong/db/init.lua:314>

[C]: in function 'pcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/concurrency.lua:45: in function 'cluster_mutex'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:126: in function 'finish'

...ocal/kong/luarocks/share/lua/5.1/kong/cmd/migrations.lua:184: in function 'cmd_exec'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:45>

/usr/bin/kong:9: in function 'file_gen'

init_worker_by_lua:50: in function <init_worker_by_lua:48>

[C]: in function 'xpcall'

init_worker_by_lua:57: in function <init_worker_by_lua:55>

stack traceback:

[C]: in function 'error'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:161: in function 'finish'

...ocal/kong/luarocks/share/lua/5.1/kong/cmd/migrations.lua:184: in function 'cmd_exec'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:45>

/usr/bin/kong:9: in function 'file_gen'

init_worker_by_lua:50: in function <init_worker_by_lua:48>

[C]: in function 'xpcall'

init_worker_by_lua:57: in function <init_worker_by_lua:55>

Also worth noting some odd behavior when cloning the keyspaces: I run a script that executes some commands, and the only table that throws an error is the oauth2 creds table:

error: Unknown column redirect_uri during deserialization

-- StackTrace --

java.lang.RuntimeException: Unknown column redirect_uri during deserialization

at org.apache.cassandra.db.SerializationHeader$Component.toHeader(SerializationHeader.java:321)

at org.apache.cassandra.io.sstable.format.SSTableReader.open(SSTableReader.java:511)

at org.apache.cassandra.io.sstable.format.SSTableReader.open(SSTableReader.java:385)

at org.apache.cassandra.db.ColumnFamilyStore.loadNewSSTables(ColumnFamilyStore.java:771)

at org.apache.cassandra.db.ColumnFamilyStore.loadNewSSTables(ColumnFamilyStore.java:709)

at org.apache.cassandra.service.StorageService.loadNewSSTables(StorageService.java:5110)

at sun.reflect.GeneratedMethodAccessor519.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at sun.reflect.misc.Trampoline.invoke(MethodUtil.java:71)

at sun.reflect.GeneratedMethodAccessor3.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at sun.reflect.misc.MethodUtil.invoke(MethodUtil.java:275)

at com.sun.jmx.mbeanserver.StandardMBeanIntrospector.invokeM2(StandardMBeanIntrospector.java:112)

at com.sun.jmx.mbeanserver.StandardMBeanIntrospector.invokeM2(StandardMBeanIntrospector.java:46)

at com.sun.jmx.mbeanserver.MBeanIntrospector.invokeM(MBeanIntrospector.java:237)

at com.sun.jmx.mbeanserver.PerInterface.invoke(PerInterface.java:138)

at com.sun.jmx.mbeanserver.MBeanSupport.invoke(MBeanSupport.java:252)

at com.sun.jmx.interceptor.DefaultMBeanServerInterceptor.invoke(DefaultMBeanServerInterceptor.java:819)

at com.sun.jmx.mbeanserver.JmxMBeanServer.invoke(JmxMBeanServer.java:801)

at javax.management.remote.rmi.RMIConnectionImpl.doOperation(RMIConnectionImpl.java:1468)

at javax.management.remote.rmi.RMIConnectionImpl.access$300(RMIConnectionImpl.java:76)

at javax.management.remote.rmi.RMIConnectionImpl$PrivilegedOperation.run(RMIConnectionImpl.java:1309)

at javax.management.remote.rmi.RMIConnectionImpl.doPrivilegedOperation(RMIConnectionImpl.java:1401)

at javax.management.remote.rmi.RMIConnectionImpl.invoke(RMIConnectionImpl.java:829)

at sun.reflect.GeneratedMethodAccessor547.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at sun.rmi.server.UnicastServerRef.dispatch(UnicastServerRef.java:357)

at sun.rmi.transport.Transport$1.run(Transport.java:200)

at sun.rmi.transport.Transport$1.run(Transport.java:197)

at java.security.AccessController.doPrivileged(Native Method)

at sun.rmi.transport.Transport.serviceCall(Transport.java:196)

at sun.rmi.transport.tcp.TCPTransport.handleMessages(TCPTransport.java:573)

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run0(TCPTransport.java:834)

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.lambda$run$0(TCPTransport.java:688)

at java.security.AccessController.doPrivileged(Native Method)

at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run(TCPTransport.java:687)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

So the oauth2 creds don't refresh right away when running the command below (the error above is what happens when I execute it):

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} oauth2_credentials"

So I follow up with a:

Original shell script doing keyspace cloning I am referring to:

#!/bin/bash

cloneKeyspace()

{

local username="${1}"

local start_host="${2}"

local hostnames="${3}"

local keyspace_source="${4}"

local keyspace_destination="${5}"

if ssh ${username}@${start_host} "echo 2>&1"; then

ssh ${username}@${start_host} "cqlsh --ssl -e 'DROP KEYSPACE ${keyspace_destination}'"

fi

sleep 10

for hostname in $(echo $hostnames | sed "s/,/ /g")

do

if ssh ${username}@${hostname} "echo 2>&1"; then

ssh ${username}@${hostname} "rm -rf /data/cassandra/data/${keyspace_destination}"

ssh ${username}@${hostname} "nodetool clearsnapshot -t copy"

fi

done

if ssh ${username}@${start_host} "echo 2>&1"; then

ssh ${username}@${start_host} "cqlsh --ssl -e 'DESCRIBE KEYSPACE ${keyspace_source}' > ${keyspace_source}.txt"

ssh ${username}@${start_host} "sed -i 's/${keyspace_source}/${keyspace_destination}/g' ${keyspace_source}.txt"

ssh ${username}@${start_host} "cqlsh --ssl -f '${keyspace_source}.txt'"

ssh ${username}@${start_host} "rm ${keyspace_source}.txt"

echo "Success on Keyspace Schema clone: "${start_host}

else

echo "Failed on Keyspace Schema clone: "${start_host}

fi

for hostname in $(echo $hostnames | sed "s/,/ /g")

do

if ssh ${username}@${hostname} "echo 2>&1"; then

ssh ${username}@${hostname} "nodetool snapshot -t copy ${keyspace_source}"

echo "Success on Cassandra snapshot: "${hostname} ${keyspace_source}

sleep 20

# Move each table's snapshot files into the matching destination table directory.

for table in acls apis ca_certificates certificates cluster_events consumers jwt_secrets keyauth_credentials locks oauth2_authorization_codes oauth2_credentials oauth2_tokens plugins ratelimiting_metrics routes schema_meta services snis tags targets upstreams workspaces

do

ssh ${username}@${hostname} "mv /data/cassandra/data/${keyspace_source}/${table}-*/snapshots/copy/* /data/cassandra/data/${keyspace_destination}/${table}-*/"

done

ssh ${username}@${hostname} "nodetool clearsnapshot -t copy"

echo "Success on Cassandra mv of snapshot files to destination: ${keyspace_destination}"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} ratelimiting_metrics"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} oauth2_tokens"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} locks"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} snis"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} plugins"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} targets"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} consumers"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} upstreams"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} schema_meta"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} tags"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} acls"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} cluster_events"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} workspaces"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} jwt_secrets"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} apis"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} keyauth_credentials"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} oauth2_credentials"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} certificates"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} services"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} ca_certificates"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} oauth2_authorization_codes"

ssh ${username}@${hostname} "nodetool refresh ${keyspace_destination} routes"

echo "Success on Cassandra nodetool refresh on destination keyspace: ${keyspace_destination}"

else

echo "Failed on Cassandra snapshot: ${hostname}"

fi

done

if ssh ${username}@${start_host} "echo 2>&1"; then

ssh ${username}@${start_host} "nodetool repair -full ${keyspace_destination}"

echo "Success on Keyspace repair: ${start_host} ${keyspace_destination}"

else

echo "Failed on Keyspace repair: ${start_host} ${keyspace_destination}"

fi

echo "Script processing completed!"

}

cloneKeyspace "${1}" "${2}" "${3}" "${4}" "${5}"
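The per-table mv and nodetool refresh lines in the script repeat one command per table, so they could be driven from a single table list. Below is a minimal sketch of that idea, not the script I actually ran: the tables array and the print_clone_commands helper are hypothetical names, the table list is taken from the nodetool refresh lines above, and the function only prints the commands (a dry run) instead of sending them over ssh.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints the per-table commands instead of executing them.
# Table names are taken from the nodetool refresh lines in the script above.
tables=(
  acls apis ca_certificates certificates cluster_events consumers
  jwt_secrets keyauth_credentials locks oauth2_authorization_codes
  oauth2_credentials oauth2_tokens plugins ratelimiting_metrics routes
  schema_meta services snis tags targets upstreams workspaces
)

# print_clone_commands SRC_KEYSPACE DEST_KEYSPACE (hypothetical helper)
print_clone_commands() {
  local src="$1" dst="$2" table
  # Move each table's snapshot files into the destination keyspace directory.
  for table in "${tables[@]}"; do
    printf 'mv /data/cassandra/data/%s/%s-*/snapshots/copy/* /data/cassandra/data/%s/%s-*/\n' \
      "$src" "$table" "$dst" "$table"
  done
  # Drop the snapshot once its files have been moved.
  printf 'nodetool clearsnapshot -t copy\n'
  # Ask Cassandra to load the newly placed SSTables for each table.
  for table in "${tables[@]}"; do
    printf 'nodetool refresh %s %s\n' "$dst" "$table"
  done
}

print_clone_commands kong_src kong_desto
```

Each printed line could then be passed to ssh the same way the script does, which keeps the table list in one place if tables get added in a later Kong version.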

So, to get the creds to import and show up properly when I query the table afterward, I do:

***@cqlsh> COPY kong_src.oauth2_credentials TO 'oauth2_credentials.dat';

Using 1 child processes

Starting copy of kong_desto.oauth2_credentials with columns [id, client_id, client_secret, client_type, consumer_id, created_at, hash_secret, name, redirect_uris, tags].

Processed: 81 rows; Rate: 46 rows/s; Avg. rate: 23 rows/s

81 rows exported to 1 files in 3.563 seconds.

****@cqlsh> COPY kong_desto.oauth2_credentials FROM 'oauth2_credentials.dat';
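One way to sanity check this export/import round trip would be to compare the row counts that cqlsh prints for each COPY. The sketch below only pulls the leading count out of a summary line like the "81 rows exported" one above. copy_row_count is a hypothetical helper name, and the import summary is assumed to follow the same "N rows imported ..." shape, which is not shown in my output here.

```shell
#!/usr/bin/env bash
# copy_row_count (hypothetical helper): read cqlsh COPY output on stdin and
# print the leading row count from the summary line, for example
# "81 rows exported to 1 files in 3.563 seconds."
# Assumption: the import summary starts with "N rows imported" in the same way.
copy_row_count() {
  sed -nE 's/^([0-9]+) rows (exported|imported).*/\1/p'
}

exported="$(printf '%s\n' '81 rows exported to 1 files in 3.563 seconds.' | copy_row_count)"
echo "exported rows: ${exported}"
# prints: exported rows: 81
```

If the count captured from the COPY FROM run differs from the exported one, that would point at rows being skipped during import rather than at the migrations.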

Welp, trying it out now as is:

First the migrations up (which so far has never given me grief):

kong migrations up --db-timeout 120 --vv

2020/10/02 02:39:50 [debug] loading subsystems migrations...

2020/10/02 02:39:50 [verbose] retrieving keyspace schema state...

2020/10/02 02:39:50 [verbose] schema state retrieved

2020/10/02 02:39:50 [debug] loading subsystems migrations...

2020/10/02 02:39:50 [verbose] retrieving keyspace schema state...

2020/10/02 02:39:50 [verbose] schema state retrieved

2020/10/02 02:39:50 [debug] migrations to run:

core: 009_200_to_210, 010_210_to_211, 011_212_to_213

jwt: 003_200_to_210

acl: 003_200_to_210, 004_212_to_213

rate-limiting: 004_200_to_210

oauth2: 004_200_to_210, 005_210_to_211

2020/10/02 02:39:51 [info] migrating core on keyspace 'kong_prod2'...

2020/10/02 02:39:51 [debug] running migration: 009_200_to_210

2020/10/02 02:39:53 [info] core migrated up to: 009_200_to_210 (pending)

2020/10/02 02:39:53 [debug] running migration: 010_210_to_211

2020/10/02 02:39:53 [info] core migrated up to: 010_210_to_211 (pending)

2020/10/02 02:39:53 [debug] running migration: 011_212_to_213

2020/10/02 02:39:53 [info] core migrated up to: 011_212_to_213 (executed)

2020/10/02 02:39:53 [info] migrating jwt on keyspace 'kong_prod2'...

2020/10/02 02:39:53 [debug] running migration: 003_200_to_210

2020/10/02 02:39:54 [info] jwt migrated up to: 003_200_to_210 (pending)

2020/10/02 02:39:54 [info] migrating acl on keyspace 'kong_prod2'...

2020/10/02 02:39:54 [debug] running migration: 003_200_to_210

2020/10/02 02:39:54 [info] acl migrated up to: 003_200_to_210 (pending)

2020/10/02 02:39:54 [debug] running migration: 004_212_to_213

2020/10/02 02:39:54 [info] acl migrated up to: 004_212_to_213 (pending)

2020/10/02 02:39:54 [info] migrating rate-limiting on keyspace 'kong_prod2'...

2020/10/02 02:39:54 [debug] running migration: 004_200_to_210

2020/10/02 02:39:54 [info] rate-limiting migrated up to: 004_200_to_210 (executed)

2020/10/02 02:39:54 [info] migrating oauth2 on keyspace 'kong_prod2'...

2020/10/02 02:39:54 [debug] running migration: 004_200_to_210

2020/10/02 02:39:56 [info] oauth2 migrated up to: 004_200_to_210 (pending)

2020/10/02 02:39:56 [debug] running migration: 005_210_to_211

2020/10/02 02:39:56 [info] oauth2 migrated up to: 005_210_to_211 (pending)

2020/10/02 02:39:56 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/10/02 02:39:59 [verbose] Cassandra schema consensus: reached in 3049ms

2020/10/02 02:39:59 [info] 9 migrations processed

2020/10/02 02:39:59 [info] 2 executed

2020/10/02 02:39:59 [info] 7 pending

2020/10/02 02:39:59 [debug] loading subsystems migrations...

2020/10/02 02:39:59 [verbose] retrieving keyspace schema state...

2020/10/02 02:39:59 [verbose] schema state retrieved

2020/10/02 02:39:59 [info]

Database has pending migrations; run 'kong migrations finish' when ready
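For comparing runs, the pending/executed totals can be recomputed from the "migrated up to" lines instead of trusting the summary at the end. This is a small sketch under the assumption that the log lines keep the format shown above; tally_migrations is a hypothetical helper name, not part of Kong.

```shell
#!/usr/bin/env bash
# tally_migrations (hypothetical helper): read kong migrations log lines on
# stdin and count how many "migrated up to" lines ended as (pending) versus
# (executed).
tally_migrations() {
  awk '
    /migrated up to: .*\(pending\)/  { pending++ }
    /migrated up to: .*\(executed\)/ { executed++ }
    END { printf "executed=%d pending=%d\n", executed, pending }
  '
}

# Three lines copied from the log above as sample input.
tally_migrations <<'EOF'
2020/10/02 02:39:53 [info] core migrated up to: 009_200_to_210 (pending)
2020/10/02 02:39:53 [info] core migrated up to: 011_212_to_213 (executed)
2020/10/02 02:39:54 [info] jwt migrated up to: 003_200_to_210 (pending)
EOF
# prints: executed=1 pending=2
```

Fed the full log from this run, it should agree with the 2 executed and 7 pending that Kong reports.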

Welp, migrations finish ran into problems again. The good news is that the 120 second timeout seems to have been respected, but the nodes just never reached schema consensus within it. Look at how fast the core migrations reached consensus too, hah (3ms), while the jwt one just hangs :/ :

kong migrations finish --db-timeout 120 --vv

The environment variables are the same as in my original post, so I'm cutting those out for brevity.

2020/10/02 02:42:24 [debug] loading subsystems migrations...

2020/10/02 02:42:24 [verbose] retrieving keyspace schema state...

2020/10/02 02:42:24 [verbose] schema state retrieved

2020/10/02 02:42:24 [debug] loading subsystems migrations...

2020/10/02 02:42:24 [verbose] retrieving keyspace schema state...

2020/10/02 02:42:25 [verbose] schema state retrieved

2020/10/02 02:42:25 [debug] pending migrations to finish:

core: 009_200_to_210, 010_210_to_211

jwt: 003_200_to_210

acl: 003_200_to_210, 004_212_to_213

oauth2: 004_200_to_210, 005_210_to_211

2020/10/02 02:42:25 [info] migrating core on keyspace 'kong_prod2'...

2020/10/02 02:42:25 [debug] running migration: 009_200_to_210

2020/10/02 02:42:37 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/10/02 02:42:37 [verbose] Cassandra schema consensus: reached in 3ms

2020/10/02 02:42:37 [info] core migrated up to: 009_200_to_210 (executed)

2020/10/02 02:42:37 [debug] running migration: 010_210_to_211

2020/10/02 02:42:37 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/10/02 02:42:37 [verbose] Cassandra schema consensus: reached in 3ms

2020/10/02 02:42:37 [info] core migrated up to: 010_210_to_211 (executed)

2020/10/02 02:42:37 [info] migrating jwt on keyspace 'kong_prod2'...

2020/10/02 02:42:37 [debug] running migration: 003_200_to_210

2020/10/02 02:42:38 [verbose] waiting for Cassandra schema consensus (120000ms timeout)...

2020/10/02 02:44:38 [verbose] Cassandra schema consensus: not reached in 120095ms

Error:

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:161: [Cassandra error] cluster_mutex callback threw an error: ...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:142: [Cassandra error] failed to wait for schema consensus: [Read failure] Operation failed - received 0 responses and 1 failures

stack traceback:

[C]: in function 'assert'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:142: in function <...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:126>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/db/init.lua:364: in function </usr/local/kong/luarocks/share/lua/5.1/kong/db/init.lua:314>

[C]: in function 'pcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/concurrency.lua:45: in function 'cluster_mutex'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:126: in function 'finish'

...ocal/kong/luarocks/share/lua/5.1/kong/cmd/migrations.lua:184: in function 'cmd_exec'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88>

[C]: in function 'xpcall'

/usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:88: in function </usr/local/kong/luarocks/share/lua/5.1/kong/cmd/init.lua:45>

/usr/bin/kong:9: in function 'file_gen'

init_worker_by_lua:50: in function <init_worker_by_lua:48>

[C]: in function 'xpcall'

init_worker_by_lua:57: in function <init_worker_by_lua:55>

stack traceback:

[C]: in function 'error'

...ong/luarocks/share/lua/5.1/kong/cmd/utils/migrations.lua:161: in function 'finish'