Istio currently does not support StatefulSets. There are already many related issues, so here is an umbrella issue.

#10053 #1277 #10490 #10586 #9666

Anyone can add missing issues below.


We should build listeners and clusters for each StatefulSet pod, like outbound|9042||cassandra-0.cassandra.default.svc.cluster.local, not just outbound|9042||cassandra.default.svc.cluster.local.

https://kubernetes.io/docs/tutorials/stateful-application/cassandra/
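Here is a minimal Python sketch of the naming scheme this proposal implies. It is illustrative only and is not Pilot code. It assumes the default cluster.local DNS domain and the Kubernetes convention that a StatefulSet pod behind a headless Service gets the DNS name <statefulset>-<ordinal>.<service>.<namespace>.svc.<domain>. The helper names are hypothetical.

```python
def pod_fqdns(statefulset: str, service: str, namespace: str, replicas: int,
              domain: str = "cluster.local") -> list[str]:
    """Stable DNS names a headless Service gives each StatefulSet pod."""
    return [f"{statefulset}-{i}.{service}.{namespace}.svc.{domain}"
            for i in range(replicas)]


def outbound_cluster_name(host: str, port: int, subset: str = "") -> str:
    """Cluster name in Istio's outbound|<port>|<subset>|<host> form."""
    return f"outbound|{port}|{subset}|{host}"


if __name__ == "__main__":
    # Cassandra tutorial layout: StatefulSet "cassandra", headless Service "cassandra".
    for host in pod_fqdns("cassandra", "cassandra", "default", replicas=3):
        print(outbound_cluster_name(host, 9042))
    # prints outbound|9042||cassandra-0.cassandra.default.svc.cluster.local,
    # then the same for cassandra-1 and cassandra-2
```

Each replica adds one cluster per port. The number of clusters therefore grows as replicas × ports for every StatefulSet in the mesh.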

AFAIK we do support StatefulSets, using original dst, with the restriction that StatefulSets can't share a port.

Cassandra: it is not clear what is wrong. I tried it once and the Envoy config looked right, but it didn't work. We need to repro again and get tcpdumps.

We can't build n.cassandra.... clusters - too many and we would need too many listeners as well.

On-demand LDS may allow more flexibility and sharing the port - but I think dedicated port is a reasonable option until we have that.
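The following toy Python model illustrates the port-sharing restriction described above. It assumes, as the comment implies, that each headless Service port is served through a single wildcard listener on that port. Under that assumption, two headless Services exposing the same port collide. The service names in the example (other-db in particular) are hypothetical.

```python
from collections import defaultdict


def shared_port_conflicts(headless_ports: dict[str, list[int]]) -> dict[int, list[str]]:
    """Return ports claimed by more than one headless Service.

    Toy model: every headless-Service port maps to a single wildcard
    listener on that port, so a port can belong to only one such Service.
    """
    owners: dict[int, list[str]] = defaultdict(list)
    for service, ports in headless_ports.items():
        for port in set(ports):  # ignore duplicates within one Service
            owners[port].append(service)
    return {port: sorted(svcs) for port, svcs in owners.items() if len(svcs) > 1}


if __name__ == "__main__":
    print(shared_port_conflicts({
        "cassandra": [9042, 7000],
        "zookeeper": [2181, 2888, 3888],
        "other-db": [9042],  # hypothetical second service on 9042
    }))
    # prints {9042: ['cassandra', 'other-db']}
```

With a dedicated port per StatefulSet the function returns an empty dict. That is the "dedicated port" option suggested above.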

@costinm I have tried with ZooKeeper and Kafka. I am facing a similar issue because we use a headless service for inter-pod communication. What should the workaround be? I have tried this solution: https://github.com/istio/istio/issues/7495, but the ZooKeeper pods still cannot talk to each other. Istio resolves zookeeper.zookeeper.svc.cluster.local instead of zookeeper-0.zookeeper.svc.cluster.local.

Cassandra cannot complete its handshake even without mTLS.

FYI, #10053 is not caused by the StatefulSet. After changing the Cassandra listen_address to localhost, everything now works, with or without mTLS.

AFAIK, StatefulSets work fine as of today (1.0.x, 1.1).

After change the cassandra listen_address to localhost, everything now work, with or without mTLS.

Does this method also work for other statefulset applications, like Zookeeper?

@asifdxtreme

@hzxuzhonghu I am trying StatefulSets with a simple TCP server. Do I have to create multiple DestinationRules, one for each host? Any idea which steps I should follow? Does it even work?

Sorry, I don't understand you.

@hzxuzhonghu Sorry for being vague. My question was: is StatefulSet functionality fully supported in Istio? I am seeing conflicting docs, and when I try a TCP service I am not able to make it work. So my questions are:

- Are there any docs that explain how to configure a stateful service?
- Some issues mention that we have to create destination services for each stateful service node. Is that how it will work?

Is stateful set functionality fully supported in Istio?

I think no. At least this https://github.com/istio/istio/issues/10659#issuecomment-449935064 is not implemented.

Are there any docs that explain how to configure stateful service?

No

Some issues mention that we have to create destination services for each of the stateful service node - Is that how it will work?

Do you mean DestinationRule? If so, I can hardly think how to configure it. But I think we can create a ServiceEntry for the StatefulSet pods as a workaround.

Yes, I meant ‘DestinationRule’. OK, I tried creating a ‘ServiceEntry’ too, but let me look at that as well.

@hzxuzhonghu I tried a StatefulSet with three nodes running a simple TCP service and found the following:

- Pilot is creating an upstream cluster with ORIGINAL_DST LB for each of the three nodes deployed as StatefulSet pods.
- However, Pilot is creating only one egress listener, and it points to a regular EDS cluster.

So if a request lands on this egress listener, it goes to any of the nodes.

I think, as you mentioned in #10659 (comment), we need three egress listeners, each pointing to one of the ORIGINAL_DST clusters. Is that what you are thinking too?

How did you get an ORIGINAL_DST cluster? With ServiceEntry.Resolution=NONE?

I think as you mentioned #10659 (comment) we need three egress listeners pointing to each of the ORIGINAL_DST clusters. Is that what you are also thinking?

Yes, I mean we need three listeners if the service uses the TCP protocol, one per pod, like:

{
  "name": "xxxxxxx_8000",
  "address": {
    "socketAddress": {
      "address": "xxxxxxx", // pod0 ip
      "portValue": 8000
    }
  },
  ...
}
{
  "name": "xxxxxx2_8000",
  "address": {
    "socketAddress": {
      "address": "xxxxxxx2", // pod2 ip
      "portValue": 8000
    }
  },
  ...
}
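The per-pod listeners sketched above could be generated along these lines. This is a hypothetical Python helper, not Pilot's implementation. It only fills in the name and socket address shown in the JSON, using Istio's <ip>_<port> listener naming. It leaves out the filter chain that would route to each pod's ORIGINAL_DST cluster. The pod IPs are made up.

```python
import json


def per_pod_tcp_listeners(pod_ips: list[str], port: int) -> list[dict]:
    """One listener stub per pod IP, mirroring the JSON above.

    Only the name and socket address are filled in; a real listener would also
    need a filter chain pointing at that pod's ORIGINAL_DST cluster.
    """
    return [
        {
            "name": f"{ip}_{port}",
            "address": {"socketAddress": {"address": ip, "portValue": port}},
        }
        for ip in pod_ips
    ]


if __name__ == "__main__":
    # Hypothetical pod IPs for a three-replica StatefulSet.
    ips = ["10.244.3.60", "10.244.4.55", "10.244.5.12"]
    print(json.dumps(per_pod_tcp_listeners(ips, 8000), indent=2))
```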

@hzxuzhonghu I just deployed a stateful TCP service with the following Service object:

apiVersion: v1
kind: Service
metadata:
  name: istio-tcp-stateful
  labels:
    app: istio-tcp-stateful
    type: LoadBalancer
spec:
  type: ClusterIP
  clusterIP: None
  selector:
    app: istio-tcp-stateful
  ports:
  - port: 9000
    name: tcp
    protocol: TCP
---
apiVersion: apps/v1beta1
kind: StatefulSet
metadata:
  name: istio-tcp-stateful
spec:
  serviceName: istio-tcp-stateful
  replicas: 3
  template:
    metadata:
      labels:
        app: istio-tcp-stateful
        version: v1
    spec:
      containers:
      - name: tcp-echo-server
        image: ops0-artifactrepo1-0-prd.data.sfdc.net/docker-sam/rama.rao/ramaraochavali/tcp-echo-server:latest
        args: [ "9000", "one" ] # prefix: one
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9000

With the above definition:

- It generated one ingress listener and one inbound cluster, which is as expected.
- It generated one ORIGINAL_DST cluster for outbound traffic. Not sure if this is expected:

{
  "version_info": "2019-04-11T09:30:43Z/70",
  "cluster": {
    "name": "outbound|9000||istio-tcp-stateful.default.svc.cluster.local",
    "type": "ORIGINAL_DST",
    "connect_timeout": "1s",
    "lb_policy": "ORIGINAL_DST_LB",
    "circuit_breakers": {
      "thresholds": [
        {
          "max_retries": 1024
        }
      ]
    }
  },
  "last_updated": "2019-04-11T09:30:51.987Z"
}

- It also generated one egress listener, which is definitely an issue, as mentioned above.

Did you see anything different in your tests?

I tried a stateful gRPC service and it generated the following cluster of type EDS. Should it not generate as many clusters as there are nodes?

{
  "version_info": "2019-04-12T07:22:04Z/25",
  "cluster": {
    "name": "outbound|7443||istio-test.default.svc.cluster.local",
    "type": "EDS",
    "eds_cluster_config": {
      "eds_config": {
        "ads": {}
      },
      "service_name": "outbound|7443||istio-test.default.svc.cluster.local"
    },
    "connect_timeout": "1s",
    "circuit_breakers": {
      "thresholds": [
        {
          "max_retries": 1024
        }
      ]
    },
    "http2_protocol_options": {
      "max_concurrent_streams": 1073741824
    }
  },

Is there any update to this?

I've tried to attach Istio to RabbitMQ: with mTLS it failed, without it succeeded.

I've tried adding a ServiceEntry, but it just ignored the TLS and worked without it.

I'm reading mixed posts about it. What's the verdict?

i've tried to attach istio to RabbitMQ - with MTLS it failed, without - it succeeded.

Can you share your RabbitMQ service definition and how you attached Istio and how you are accessing individual node without mTLS? Even that is not working for me - I did not test RabbitMQ specifically but a simple TCP service. I want to understand where is the gap.

@hzxuzhonghu Did you try http/gRPC stateful service? What do you think about https://github.com/istio/istio/issues/10659#issuecomment-482520125? If we create only one EDS cluster like regular services - how can we access individual node? WDYT?


Hi, so eventually I managed to set up mTLS successfully for RabbitMQ, which means it can be done with any StatefulSet.

The main gotchas are:

- Register each stateful pod and its ports as a ServiceEntry.
- If a pod communicates using its pod IP, that's a big no: it should use localhost / 127.0.0.1. If that's not possible, you need to exclude this port from mTLS.
- Note that headless services do not participate in mTLS communication.
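The first gotcha can be scripted. The minimal Python sketch below assumes a StatefulSet whose pods are reachable through a headless Service. It builds a MESH_INTERNAL ServiceEntry listing one host per pod and prints it as JSON, which kubectl apply also accepts. The example values follow the hello test later in this thread and use protocol HTTP. The function name and the "hello-pods" entry name are hypothetical.

```python
import json


def per_pod_service_entry(name: str, statefulset: str, headless_service: str,
                          namespace: str, replicas: int,
                          ports: list[tuple[int, str, str]]) -> dict:
    """MESH_INTERNAL ServiceEntry with one host per StatefulSet pod.

    ports holds (number, name, protocol) tuples, e.g. (80, "http-port", "HTTP").
    """
    hosts = [f"{statefulset}-{i}.{headless_service}.{namespace}.svc.cluster.local"
             for i in range(replicas)]
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "ServiceEntry",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "hosts": hosts,
            "location": "MESH_INTERNAL",
            "ports": [{"number": n, "name": pname, "protocol": proto}
                      for n, pname, proto in ports],
            "resolution": "NONE",
        },
    }


if __name__ == "__main__":
    # "hello-pods" is a hypothetical ServiceEntry name.
    se = per_pod_service_entry("hello-pods", "hello", "hello", "hello",
                               replicas=2, ports=[(80, "http-port", "HTTP")])
    # Pipe into "kubectl apply -f -"; kubectl accepts JSON as well as YAML.
    print(json.dumps(se, indent=2))
```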

I've written a full article and attached working Helm charts with Istio and mTLS support:

https://github.com/arielb135/RabbitMQ-with-istio-MTLS

Register each stateful pod and its ports as a ServiceEntry

I did try registering each stateful pod as a ServiceEntry. But it did not create the necessary clusters/listeners in Istio 1.1.2. Which version of Istio did you try this with?


I'm using Istio 1.0.

Can you please describe your errors? Does RabbitMQ crash with the epmd error? If so, you'll have to exclude this port from mTLS for the headless service, as I state in my article.

For example:

apiVersion: authentication.istio.io/v1alpha1
kind: Policy
metadata:
  name: rabbitmq-disable-mtls
  namespace: rabbitns
spec:
  targets:
  - name: rabbitmq-headless
    ports:
    - number: 4369

Another option is to change the epmd code to use localhost / 127.0.0.1 instead of the local IP, but I guess it's not worth it.

I am trying a simple TCP and HTTP service, not RabbitMQ (sorry for the confusion: I was thinking any TCP service should work similarly to RabbitMQ, which is why I asked for the config). I am creating a ServiceEntry as shown below:

apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: istio-http1-stateful-service-entry
  namespace: default
spec:
  hosts:
  - istio-http1-stateful-0.istio-http1-stateful.default.svc.cluster.local
  - istio-http1-stateful-1.istio-http1-stateful.default.svc.cluster.local
  - istio-http1-stateful-2.istio-http1-stateful.default.svc.cluster.local
  location: MESH_INTERNAL
  ports:
  - number: 7024
    name: http-port
    protocol: TCP
  resolution: NONE

and I am creating the Service as shown below:

apiVersion: v1
kind: Service
metadata:
  name: istio-http1-stateful
  labels:
    app: istio-http1-stateful
spec:
  ports:
  - name: http-port
    port: 7024
  selector:
    app: istio-http1-stateful
---
apiVersion: apps/v1beta1
kind: StatefulSet
metadata:
  name: istio-http1-stateful
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: istio-http1-stateful
        version: v1
    spec:
      containers:
      - name: istio-http1-stateful
        image: h1-test-server:latest
        args:
        - "0.0.0.0:7024"
        imagePullPolicy: Always
        ports:
        - containerPort: 7024

I am not using mTLS. With this, I expect clusters to be created for the ServiceEntry, but they are not. Could it be an Istio version difference?


What error do you get?

Also, is this image accessible from a public repository? I can try to run the same configuration myself and let you know.

I do not get any error. It just does not create the required clusters/listeners. The image is not publicly accessible, but it is a simple hello-world HTTP app. You can use strm/helloworld-http (https://hub.docker.com/r/strm/helloworld-http) instead. Thanks for trying.


I think you're missing the headless service, which is responsible for giving the pods their unique IPs.

You've created a regular service that gives all pods one DNS name to be redirected to.

Could you try creating a headless service as well (clusterIP: None)?

apiVersion: v1
kind: Service
metadata:
  name: istio-http1-stateful-headless
  labels:
    app: istio-http1-stateful
spec:
  clusterIP: None
  ports:
  - name: http-port
    port: 7024
  selector:
    app: istio-http1-stateful

Then create the ServiceEntry according to the headless service, if I'm not mistaken:

apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: istio-http1-stateful-service-entry
  namespace: default
spec:
  hosts:
  - istio-http1-stateful-0.istio-http1-stateful-headless.default.svc.cluster.local
  - istio-http1-stateful-1.istio-http1-stateful-headless.default.svc.cluster.local
  - istio-http1-stateful-2.istio-http1-stateful-headless.default.svc.cluster.local
  location: MESH_INTERNAL
  ports:
  - number: 7024
    name: http-port
    protocol: TCP
  resolution: NONE

https://kubernetes.io/docs/concepts/services-networking/service/#headless-services

I've simplified the names and tested:

apiVersion: v1
kind: Service
metadata:
  name: hello
  namespace: hello
  labels:
    app: hello
spec:
  clusterIP: None
  ports:
  - name: http-port
    port: 80
  selector:
    app: hello
---
apiVersion: apps/v1beta1
kind: StatefulSet
metadata:
  name: hello
  namespace: hello
spec:
  serviceName: "hello"
  replicas: 2
  template:
    metadata:
      labels:
        app: hello
        version: v1
    spec:
      containers:
      - name: hello
        image: strm/helloworld-http
        imagePullPolicy: Always
        ports:
        - containerPort: 80
---
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: istio-http1-stateful-service-entry
  namespace: hello
spec:
  hosts:
  - hello-0.hello.hello.svc.cluster.local
  - hello-1.hello.hello.svc.cluster.local
  location: MESH_INTERNAL
  ports:
  - number: 80
    name: http-port
    protocol: TCP
  resolution: NONE

When running istioctl proxy-status:

NAME            CDS      LDS      EDS             RDS      PILOT                          VERSION
hello-0.hello   SYNCED   SYNCED   SYNCED (50%)    SYNCED   istio-pilot-6b7ccdc9d-w9vcc    1.1.0
hello-1.hello   SYNCED   SYNCED   SYNCED (50%)    SYNCED   istio-pilot-6b7ccdc9d-w9vcc    1.1.0

OK, thank you. I also tested with the actual names. The proxy status says:

istio-http1-stateful-0.default   SYNCED   SYNCED   SYNCED (50%)   SYNCED   istio-pilot-c9575bd7d-n6g7m   1.1.2
istio-http1-stateful-1.default   SYNCED   SYNCED   SYNCED (50%)   SYNCED   istio-pilot-c9575bd7d-n6g7m   1.1.2
istio-http1-stateful-2.default   SYNCED   SYNCED   SYNCED (50%)   SYNCED   istio-pilot-c9575bd7d-n6g7m   1.1.2

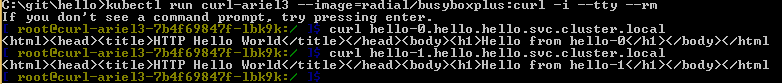

But I am still not able to hit an individual stateful pod from one of the containers with curl hello-0.hello.hello.svc.cluster.local:7024. Were you able to hit individual pods and get a response?

If possible, can you please share the output of

istioctl proxy-config clusters hello-0.hello ?

BTW, for the headless service I only see one inbound and one outbound (ORIGINAL_DST) cluster. I do not see anything being created for the ServiceEntry. I expect some clusters to be created from the ServiceEntry definition so that traffic can actually be routed to individual nodes.

I also tried the simple example that creates a ServiceEntry, as commented by @howardjohn here: https://github.com/istio/istio/issues/13008#issuecomment-483890625. I do not see an outbound cluster being created like the one shown there.


Maybe you have a mismatch in the names somewhere. I couldn't access it with your naming pattern either, but with hello everything works.

I've removed the output for other pods, but here is the proxy-config output:

SERVICE FQDN                                           PORT   SUBSET     DIRECTION  TYPE
BlackHoleCluster                                       -      -          -          <nil>
PassthroughCluster                                     -      -          -          &{ORIGINAL_DST}
details.default.svc.cluster.local                      9080   -          outbound   &{EDS}
hello-0.hello.hello.svc.cluster.local                  80     -          outbound   &{ORIGINAL_DST}
hello-1.hello.hello.svc.cluster.local                  80     -          outbound   &{ORIGINAL_DST}
hello.hello.svc.cluster.local                          80     -          outbound   &{ORIGINAL_DST}
hello.hello.svc.cluster.local                          80     http-port  inbound    <nil>
istio-citadel.istio-system.svc.cluster.local           8060   -          outbound   &{EDS}
istio-citadel.istio-system.svc.cluster.local           15014  -          outbound   &{EDS}
istio-egressgateway.istio-system.svc.cluster.local     80     -          outbound   &{EDS}
istio-egressgateway.istio-system.svc.cluster.local     443    -          outbound   &{EDS}
istio-egressgateway.istio-system.svc.cluster.local     15443  -          outbound   &{EDS}
istio-galley.istio-system.svc.cluster.local            443    -          outbound   &{EDS}
istio-galley.istio-system.svc.cluster.local            9901   -          outbound   &{EDS}
istio-galley.istio-system.svc.cluster.local            15014  -          outbound   &{EDS}
istio-ingressgateway.istio-system.svc.cluster.local    80     -          outbound   &{EDS}
istio-ingressgateway.istio-system.svc.cluster.local    443    -          outbound   &{EDS}
istio-ingressgateway.istio-system.svc.cluster.local    15020  -          outbound   &{EDS}
istio-ingressgateway.istio-system.svc.cluster.local    15029  -          outbound   &{EDS}
istio-ingressgateway.istio-system.svc.cluster.local    15030  -          outbound   &{EDS}
istio-ingressgateway.istio-system.svc.cluster.local    15031  -          outbound   &{EDS}
istio-ingressgateway.istio-system.svc.cluster.local    15032  -          outbound   &{EDS}
istio-ingressgateway.istio-system.svc.cluster.local    15443  -          outbound   &{EDS}
istio-ingressgateway.istio-system.svc.cluster.local    31400  -          outbound   &{EDS}
istio-pilot.istio-system.svc.cluster.local             8080   -          outbound   &{EDS}
istio-pilot.istio-system.svc.cluster.local             15010  -          outbound   &{EDS}
istio-pilot.istio-system.svc.cluster.local             15011  -          outbound   &{EDS}
istio-pilot.istio-system.svc.cluster.local             15014  -          outbound   &{EDS}
istio-policy.istio-system.svc.cluster.local            9091   -          outbound   &{EDS}
istio-policy.istio-system.svc.cluster.local            15004  -          outbound   &{EDS}
istio-policy.istio-system.svc.cluster.local            15014  -          outbound   &{EDS}
istio-sidecar-injector.istio-system.svc.cluster.local  443    -          outbound   &{EDS}
istio-telemetry.istio-system.svc.cluster.local         9091   -          outbound   &{EDS}
istio-telemetry.istio-system.svc.cluster.local         15004  -          outbound   &{EDS}
istio-telemetry.istio-system.svc.cluster.local         15014  -          outbound   &{EDS}
istio-telemetry.istio-system.svc.cluster.local         42422  -          outbound   &{EDS}
jaeger-collector.istio-system.svc.cluster.local        14267  -          outbound   &{EDS}
jaeger-collector.istio-system.svc.cluster.local        14268  -          outbound   &{EDS}
jaeger-query.istio-system.svc.cluster.local            16686  -          outbound   &{EDS}
kiali.istio-system.svc.cluster.local                   20001  -          outbound   &{EDS}
kubernetes.default.svc.cluster.local                   443    -          outbound   &{EDS}

Ah, that is interesting. In your deployment I see the clusters I was expecting to see:

hello-0.hello.hello.svc.cluster.local   80   -           outbound   &{ORIGINAL_DST}
hello-1.hello.hello.svc.cluster.local   80   -           outbound   &{ORIGINAL_DST}
hello.hello.svc.cluster.local           80   -           outbound   &{ORIGINAL_DST}
hello.hello.svc.cluster.local           80   http-port   inbound    <nil>

I will change the naming pattern and try. Will let you know.


Anyway, I deployed exactly this.

Don't forget to label the hello namespace:

kubectl label namespace hello istio-injection=enabled

apiVersion: v1
kind: Service
metadata:
  name: hello
  namespace: hello
  labels:
    app: hello
spec:
  clusterIP: None
  ports:
  - name: http-port
    port: 80
  selector:
    app: hello
---
apiVersion: apps/v1beta1
kind: StatefulSet
metadata:
  name: hello
  namespace: hello
spec:
  serviceName: "hello"
  replicas: 2
  template:
    metadata:
      labels:
        app: hello
        version: v1
    spec:
      containers:
      - name: hello
        image: strm/helloworld-http
        imagePullPolicy: Always
        ports:
        - containerPort: 80
---
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: istio-http1-stateful-service-entry
  namespace: hello
spec:
  hosts:
  - hello-0.hello.hello.svc.cluster.local
  - hello-1.hello.hello.svc.cluster.local
  location: MESH_INTERNAL
  ports:
  - number: 80
    name: http-port
    protocol: TCP
  resolution: NONE

@arielb135 With the simplified names, I was also able to make it work. Thanks for your help. I will try to figure out what the problem is with the original names. One of the differences is that I was trying in the default namespace; not sure if it matters.


Sure, I doubt the default namespace matters, as long as you're able to inject the sidecar there.

Good luck! If you solve it, update this thread so future readers can also solve the naming problem.

@ramaraochavali any news on that issue? Were you able to make it work?

@cscetbon do you mean stateful sets in general? If yes, I was able to make it work as documented https://github.com/istio/istio/issues/10659#issuecomment-484470101. I was able to work with the original complex names as well.

Another example: https://github.com/istio/tools/pull/127

@ramaraochavali, @howardjohn so it seems one of the keys is to have that ServiceEntry, which means we need to update it each time we add a node. Is there an order in which this should be done? Will there be some temporary disruption between the newly added node and the others?

By node I assume you mean node of your application, not Kubernetes node right?

If so I think you can update the ServiceEntry first -- this should be no risk. Say we add hello-3 and we only have 0, 1, and 2. Any requests to hello-3 will still fail, just like if you did a request to hello-3 without the serviceentry. Then once hello-3 does come up, the requests should be sent to hello-3 and it should work again. In theory you could just make hello-{0..100} but I haven't tested this and it would come at a performance hit (mostly in terms of pilot/time to config sync, but maybe a tiny amount in the request path as well if Envoy has to do more processing to find the right match. I think this only happens on routes though, not clusters, so probably not an issue.)
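The ordering suggested above (publish the new host first, then scale) can be written down as a small plan. This is a hypothetical Python sketch. It assumes the pods are listed individually in the ServiceEntry and uses the hello example. It deliberately covers only scaling up, which is the case discussed here.

```python
def scale_up_plan(statefulset: str, service: str, namespace: str,
                  current: int, target: int) -> list[tuple[str, object]]:
    """Ordered steps for growing a StatefulSet whose pods are listed in a ServiceEntry.

    Step 1 publishes the new hosts (harmless while the pods do not exist yet);
    step 2 raises the replica count.
    """
    if target <= current:
        raise ValueError("this sketch only covers scaling up")
    hosts = [f"{statefulset}-{i}.{service}.{namespace}.svc.cluster.local"
             for i in range(target)]
    return [("update ServiceEntry hosts", hosts),
            ("scale StatefulSet replicas", target)]


if __name__ == "__main__":
    # hello-0..hello-2 exist; add hello-3.
    for step, arg in scale_up_plan("hello", "hello", "hello", current=3, target=4):
        print(step, arg)
```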

@howardjohn yes, I meant pods (sorry, I had Cassandra in mind and was thinking about Cassandra nodes). Okay, good. I'll test it as soon as I can and see if I can make it work with my StatefulSets as well.

It seems we can also use wildcards:

apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
...
spec:
  hosts:
  - "*.hello.hello.svc.cluster.local"
  location: MESH_INTERNAL
  ...
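You can check whether a wildcard host covers a given pod with a simple suffix rule. The Python sketch below assumes Istio treats a leading * in a host as matching any name that ends with the rest of the pattern. Under that assumption, the wildcard covers every current and future pod, but not the Service's own name.

```python
def host_matches(pattern: str, host: str) -> bool:
    """Assumed wildcard rule: '*.a.b' matches any host ending in '.a.b'."""
    if pattern.startswith("*"):
        return host.endswith(pattern[1:])
    return host == pattern


if __name__ == "__main__":
    pattern = "*.hello.hello.svc.cluster.local"
    for host in ("hello-0.hello.hello.svc.cluster.local",
                 "hello-7.hello.hello.svc.cluster.local",  # a future replica
                 "hello.hello.svc.cluster.local"):         # the Service itself
        print(host, host_matches(pattern, host))
    # prints True, True, False for the three hosts
```

Under this rule the ServiceEntry does not need editing when the StatefulSet scales.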

Do your responses mean that this ticket should be closed? Or is it open so that Istio creates that missing ServiceEntry on the fly, instead of us having to create it manually?

Let's not close this for now: ideally we shouldn't need a ServiceEntry for this. Good idea on the wildcards, I'll have to try that out.

This PR https://github.com/istio/istio/pull/13970/files seems to solve many of the problems we see with Kafka, Cassandra, etc.

+1 for making Istio create service entries to avoid manual creation.

@arielb135 I've tested your sample at https://github.com/istio/istio/issues/10659#issuecomment-484130321. It works except when mTLS is enabled:

kdp hello|egrep -i ' POD_NAME:|TLS'
MUTUAL_TLS
POD_NAME: hello-0 (v1:metadata.name)
MUTUAL_TLS
POD_NAME: hello-1 (v1:metadata.name)

I get errors when trying to curl both servers:

root@hello-0:/www# curl hello-1.hello.hello.svc.cluster.local
upstream connect error or disconnect/reset before headers. reset reason: connection termination
root@hello-0:/www# ^C
root@hello-0:/www# curl hello-0.hello.hello.svc.cluster.local
curl: (56) Recv failure: Connection reset by peer

I have the same config as you had:

istioctl proxy-status|egrep 'NAME|hello'
NAME            CDS      LDS      EDS             RDS      PILOT                          VERSION
hello-0.hello   SYNCED   SYNCED   SYNCED (50%)    SYNCED   istio-pilot-d9559549b-rwxvw    1.1.3
hello-1.hello   SYNCED   SYNCED   SYNCED (50%)    SYNCED   istio-pilot-d9559549b-rwxvw    1.1.3

istioctl authn tls-check hello-0.hello.svc.cluster.local|egrep 'STATUS|hello'
HOST:PORT                                  STATUS   SERVER   CLIENT   AUTHN POLICY   DESTINATION RULE
hello-0.hello.hello.svc.cluster.local:80   OK       mTLS     mTLS     default/       default/istio-system
hello-1.hello.hello.svc.cluster.local:80   OK       mTLS     mTLS     default/       default/istio-system
hello.hello.svc.cluster.local:80           OK       mTLS     mTLS     default/       default/istio-system

k get service hello
NAME    TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
hello   ClusterIP   None         <none>        80/TCP    4h49m

k describe service hello
Name:              hello
Namespace:         hello
Labels:            app=hello
Annotations:       kubectl.kubernetes.io/last-applied-configuration:
                     {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"hello"},"name":"hello","namespace":"hello"},"spec":{"clu...
Selector:          app=hello
Type:              ClusterIP
IP:                None
Port:              http-port  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.3.60:80,10.244.4.55:80
Session Affinity:  None
Events:            <none>

Any idea what could be wrong?

@howardjohn can you elaborate on why the PR you pasted helps? It's going to take me some time to digest it.

@howardjohn, @arielb135 👆?

The PR above helps because you can turn off proxying just for communication within the headless service. Other apps calling the service still get the benefits of Istio. There is no need to deal with the proxy for internal communication, where it probably brings fewer benefits.

Sorry, I'm not very familiar with mTLS, so I'm not sure what is going wrong in your case. Maybe you need a DestinationRule that specifies mTLS for the headless-service hosts you added with the ServiceEntry.


Hey @cscetbon, did you create the appropriate Policy and DestinationRules? (A DestinationRule is what tells a client to use mTLS.)

https://github.com/arielb135/RabbitMQ-with-istio-MTLS#issue-2---mutual-tls-for-pod-ip-communication

Try something like:

apiVersion: v1
kind: List
items:
- apiVersion: networking.istio.io/v1alpha3
  kind: DestinationRule
  metadata:
    labels:
      app: hello
    name: mtls-per-pod
    namespace: hello
  spec:
    host: '*.hello.hello.svc.cluster.local'
    trafficPolicy:
      tls:
        mode: ISTIO_MUTUAL

- About the dynamic creation of ServiceEntries: for me it's pretty easy, as I'm using Helm to deploy my applications, so my script is pretty simple. Example:

- About the wildcard ServiceEntry: it should indeed work. I don't remember whether I had issues with it, but it's worth a try.

@arielb135, it does not work for me. Here is the deployment file I use https://pastebin.com/raw/3XnSz7L3

root@hello-0:/www# curl hello-1.hello.hello.svc.cluster.local

upstream connect error or disconnect/reset before headers. reset reason: connection

root@hello-0:/www# curl hello-0.hello.hello.svc.cluster.local

curl: (56) Recv failure: Connection reset by peer

It's interesting to note the difference here ^

At https://aspenmesh.io/2019/03/running-stateless-apps-with-service-mesh-kubernetes-cassandra-with-istio-mtls-enabled/ they suggest a command to check whether mTLS is enabled. I ran it but got nothing back. I don't know if it helps to find the issue:

istioctl authn tls-check hello.hello.svc.cluster.local

Error: nothing to output

I've updated the previous comment as I also added a Policy. It did not help

@howardjohn, @arielb135, if one of you can help with the previous issue. Everything is accessible through the pastebin link and that should make it easier for you to see what I'm doing wrong. I'm kinda blocked by this issue. Thanks

the pr above helps because you can turn off proxying for just communication within the headless service

@howardjohn can you give a short description of what it fixes and how it helps in our case?


I've reproduced your issue; it's actually really weird.

I will have time to investigate it around next week (a million things going on at work), and I had trouble installing curl on the hello pod. Meanwhile, can you try switching the StatefulSet to a regular Deployment and see whether it works? (Even curl a pod by its generated domain name / IP.)

@arielb135 I've updated the pastebin link in the previous comment to use a docker image that has curl already available to make it easier. I'm gonna try what you proposed and hope you'll have some time to help figure out the issue.

I successfully deployed a golang GRPC service as a statefulset (istio-injected) with both headless and clusterIP services for two separate ports respectively. I used a wildcard: "*.servicename.ns.svc.cluster.local" in the service entry, which, confirmed, does work, and I did not find the ServiceEntry step to be prohibitively inconvenient, as it will not require any further editing upon scaling.

I worked from the example manifests in this thread; a more comprehensive section in the documentation may be needed.

To be clear, users trying the examples above should change the ServiceEntry protocol to HTTP:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: istio-http1-stateful-service-entry
  namespace: hello
spec:
  hosts:
  - hello-0.hello.hello.svc.cluster.local
  - hello-1.hello.hello.svc.cluster.local
  location: MESH_INTERNAL
  ports:
  - number: 80
    name: http-port
    protocol: HTTP
  resolution: NONE
```

Correct, @hzxuzhonghu. I was able to fix it by using the HTTP protocol, thanks to @andrewjjenkins.

When hostnames are used in a ServiceEntry, Istio needs to extract the host from the Host header of each request, which is why the HTTP protocol is needed.
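To make the Host-header point concrete, the Go sketch below parses the start of an HTTP/1.1 request with the standard library's `http.ReadRequest` and pulls out the host, then shows that an opaque binary payload yields no host at all. It illustrates the idea only; it is not how Envoy parses traffic, and `hostFromHTTP` is a hypothetical helper. For gRPC, which runs over HTTP/2, the equivalent field is the `:authority` pseudo-header, which would explain why declaring the port as GRPC can work as well.

```go
package main

import (
	"bufio"
	"fmt"
	"net/http"
	"strings"
)

// hostFromHTTP parses the beginning of an HTTP/1.1 request and returns its
// Host header: the piece of information a proxy can use to pick a per-host route.
func hostFromHTTP(raw string) (string, error) {
	req, err := http.ReadRequest(bufio.NewReader(strings.NewReader(raw)))
	if err != nil {
		return "", err
	}
	return req.Host, nil
}

func main() {
	httpReq := "GET /healthz HTTP/1.1\r\nHost: hello-1.hello.hello.svc.cluster.local\r\n\r\n"
	host, err := hostFromHTTP(httpReq)
	fmt.Println(host, err)

	// An opaque TCP payload (arbitrary binary bytes here) carries no such
	// header, so parsing it as HTTP fails and only the destination IP:port is left.
	_, err = hostFromHTTP("\x00\x00\x00\x0b\x00\x01\x02\x03")
	fmt.Println("opaque payload rejected:", err != nil)
}
```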

In my case I specified the GRPC protocol in my ServiceEntry, and it works as well.

Cool, happy to see the proposed wildcard works!

Problems have also been reported with Elasticsearch, which also uses a StatefulSet: https://github.com/istio/istio/issues/14662

I can confirm I have also seen this with Elasticsearch.

I propose adding a component that watches StatefulSets and creates the related ServiceEntries for them, so native Kubernetes StatefulSets can be deployed transparently.

It should watch StatefulSets and their associated services (to acquire the ports), and create a ServiceEntry if one does not already exist. It could be a controller in Pilot or a standalone process.

@hzxuzhonghu It can't always be a ServiceEntry. If the protocol is supported, you can use one; in other cases you can't, and you have to use a headless service.

If the protocol is supported you can but in other cases you can't and have to use a Headless Service.

I don't understand this well; can you elaborate? My understanding is that Istio already generates a listener for a headless service.

@hzxuzhonghu you're correct. I'm just saying that in some cases, a Cassandra cluster for example, it does not make sense to create a ServiceEntry, because IPs can't be extracted automatically and we would have to update the ServiceEntry each time a pod's IP changes.

I'm not familiar with Cassandra, but why would the ServiceEntry need updating when a pod IP changes? Can't Cassandra nodes use the pod DNS names to talk to each other?

Because Istio can't extract hostnames from Cassandra traffic the way it can from HTTP requests. So if you use a ServiceEntry, you can't use DNS names and have to use IP addresses, but then you run into problems when the IPs change. That's what I was talking about.
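The argument here is that, for opaque TCP, the proxy sees no hostname in the byte stream, so the only key left is the destination address. The Go sketch below models that with a hypothetical routing table keyed by IP:port and built from a snapshot of pod IPs (`10.244.1.15` is taken from the Cassandra logs later in this thread; `10.244.2.7` is made up). Once a pod is rescheduled with a new IP, the stale entry no longer matches. This only illustrates the commenter's point about static addresses; it does not model how Istio itself handles headless services.

```go
package main

import "fmt"

// addrRoutes is a hypothetical routing table for opaque TCP: with no
// hostname in the stream, the only available key is the destination IP:port.
type addrRoutes map[string]string

// lookup returns the host recorded for ip:port, if any.
func (r addrRoutes) lookup(ip string, port int) (string, bool) {
	host, ok := r[fmt.Sprintf("%s:%d", ip, port)]
	return host, ok
}

func main() {
	// Snapshot of pod IPs taken when a static configuration was written.
	routes := addrRoutes{
		"10.244.1.15:7000": "cassandra-0.cassandra.cassandra-e2e.svc.cluster.local",
	}
	fmt.Println(routes.lookup("10.244.1.15", 7000))

	// After cassandra-0 is rescheduled it gets a new IP (illustrative value);
	// the stale entry no longer matches until the table is rewritten.
	fmt.Println(routes.lookup("10.244.2.7", 7000))
}
```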

- hello-0.hello.hello.svc.cluster.local

- hello-1.hello.hello.svc.cluster.local

These DNS names are resolved by kube-dns; am I missing something?

Yes, but I'm talking about what happens when the Istio proxies receive the network packets. The best way is to try it with an unsupported protocol and see for yourself. I got this information from an Istio developer.

StatefulSets work fine as long as the corresponding headless service (clusterIP: None) contains the port information. No ServiceEntries or anything else are needed, and Istio mTLS can be enabled or disabled as usual. The only caveat is that StatefulSets should avoid sharing ports.
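The port caveat can be checked mechanically before deploying. The Go sketch below takes a hypothetical list of headless Services with their declared ports and reports every port declared by more than one of them. The comment does not say whether the restriction applies per namespace or mesh-wide, so the sketch simply checks whatever list it is given; `headlessService` and `sharedPorts` are illustrative names.

```go
package main

import (
	"fmt"
	"sort"
)

// headlessService is a hypothetical summary of a StatefulSet's governing
// (clusterIP: None) Service and the ports it declares.
type headlessService struct {
	Name  string
	Ports []int
}

// sharedPorts returns each port declared by more than one headless service,
// mapped to the sorted names of the services that declare it.
func sharedPorts(svcs []headlessService) map[int][]string {
	owners := map[int][]string{}
	for _, s := range svcs {
		seen := map[int]bool{}
		for _, p := range s.Ports {
			if !seen[p] { // a port listed twice on the same service is not a conflict
				seen[p] = true
				owners[p] = append(owners[p], s.Name)
			}
		}
	}
	conflicts := map[int][]string{}
	for p, names := range owners {
		if len(names) > 1 {
			sort.Strings(names)
			conflicts[p] = names
		}
	}
	return conflicts
}

func main() {
	svcs := []headlessService{
		{Name: "cassandra-a", Ports: []int{7000, 9042}},
		{Name: "cassandra-b", Ports: []int{7000, 9042}},
		{Name: "zk", Ports: []int{2181, 2888, 3888}},
	}
	fmt.Println(sharedPorts(svcs)) // prints map[7000:[cassandra-a cassandra-b] 9042:[cassandra-a cassandra-b]]
}
```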

@mbanikazemi another caveat is that you cannot currently use mTLS if a Pod in a StatefulSet wants to connect to its own PodIP (https://github.com/istio/istio/issues/12551). This is common in many Kafka configurations.

@andrewjjenkins @arielb135 @esnible @howardjohn I am confused by using ServiceEntries with hostnames for TCP protocols like the ones used by RabbitMQ. For a TCP protocol the original hostname is not known, so how could the ServiceEntry in https://github.com/arielb135/RabbitMQ-with-istio-MTLS be useful? How will Envoy use the rabbitmq-n.rabbitmq-discovery.rabbitns.svc.cluster.local hosts for an AMQP port?

@vadimeisenbergibm for TCP, hostnames are only used for DestinationRules/VirtualServices as far as I know, not by Envoy directly.

Now that we support listeners for headless service instances and have split inbound and outbound listeners, I think this has been fixed. @rshriram @lambdai could we close this?

yep

/close

If anyone has some other issue, feel free to reopen it.

I think you should reopen this umbrella issue. I tried 1.3.3, and Cassandra nodes still can't connect to their own POD_IP, which prevents the cluster from working. I was expecting the split between inbound and outbound listeners to solve that, but it doesn't, assuming that change is included in 1.3.3.

```
$ helm ls istio
NAME        REVISION  UPDATED                   STATUS    CHART             APP VERSION  NAMESPACE
istio       1         Wed Oct 16 22:57:36 2019  DEPLOYED  istio-1.3.3       1.3.3        istio-system
istio-init  1         Wed Oct 16 22:52:19 2019  DEPLOYED  istio-init-1.3.3  1.3.3        istio-system

$ kl --tail=10 cassandra-1 cassandra
INFO 03:13:18 Cannot handshake version with cassandra-0.cassandra.cassandra-e2e.svc.cluster.local/10.244.1.15
INFO 03:13:18 Handshaking version with cassandra-0.cassandra.cassandra-e2e.svc.cluster.local/10.244.1.15
```

@hzxuzhonghu Do you have a link to the relevant documentation or changelog?

conversation killer ._. @hzxuzhonghu

@hzxuzhonghu That is awesome. Let me ask something different: what should we change? Right now we are using the RabbitMQ example that has been circulating in various issues.

- Do we still need specific entries for each pod in a statefulset?

- What do we do when we have a headless and a clusterIP for a statefulset

- Is there any special step we need to take when using mTLS?

Do we still need specific entries for each pod in a statefulset?

NO

What do we do when we have a headless and a clusterIP for a statefulset

Clients can access it by service name, like a normal service. For peer-to-peer communication, you need to specify the pod DNS name.

Is there any special step we need to take when using mTLS?

For some apps you need to take care. See https://istio.io/faq/security/#mysql-with-mtls

@howardjohn it seems you missed my comment https://github.com/istio/istio/issues/10659#issuecomment-542979434

@arielb135 I've read your article and still can't make Cassandra work by disabling mTLS on the gossip ports. I'm currently trying to disable mTLS for all the ports it uses (and will eventually encrypt only the client connections). Here's what I use; if you can help, that would be more than appreciated: https://pastebin.com/raw/pgDSH0RN. I can't understand why the nodes still can't communicate. I can see that my DestinationRule is applied:

```
{
  "name": "outbound|7001||cassandra.cassandra-e2e.svc.cluster.local",
  "type": "ORIGINAL_DST",
  ....
  "metadata": {
    "filterMetadata": {
      "istio": {
        "config": "/apis/networking/v1alpha3/namespaces/cassandra-e2e/destination-rule/tls-only-native-port"
  ....
```

We can also see that mTLS is disabled for the service:

```
HOST:PORT                                       STATUS  SERVER   CLIENT   AUTHN POLICY                       DESTINATION RULE
cassandra.cassandra-e2e.svc.cluster.local:7000  OK      DISABLE  DISABLE  cassandra-e2e/policy-disable-mtls  cassandra-e2e/tls-only-native-port
cassandra.cassandra-e2e.svc.cluster.local:7001  OK      DISABLE  DISABLE  cassandra-e2e/policy-disable-mtls  cassandra-e2e/tls-only-native-port
cassandra.cassandra-e2e.svc.cluster.local:7199  OK      DISABLE  DISABLE  cassandra-e2e/policy-disable-mtls  cassandra-e2e/tls-only-native-port
cassandra.cassandra-e2e.svc.cluster.local:9042  OK      DISABLE  DISABLE  cassandra-e2e/policy-disable-mtls  cassandra-e2e/tls-only-native-port
```

Can confirm this is still a problem in 1.4

Is there any workaround for this issue?

Reopening because this problem is still being reported.

If the maintainers feel this is mostly solved please create a new issue for the parts that aren't solved for that activity. We can't keep ignoring feedback regarding stateful sets.

https://github.com/istio/istio/pull/19992/files fixes the headless service problem. Now the actual problem is with stateful apps. Any workaround would be helpful.

We are facing an issue when trying mTLS with ZooKeeper.

Below is a summary of what we did:

- A default mTLS policy in STRICT mode in the client/server namespace (both are in a single namespace).

- A default destination rule with a *.local wildcard host and ISTIO_MUTUAL mode in the same client/server namespace.

- global.mtls is disabled.

- ZooKeeper quorumListenOnAllIPs=true, so that ZooKeeper listens on all IPs.

- No virtual services or gateways created.

- Excluded ports 2888 and 3888 from Envoy sidecar interception:

```yaml
traffic.sidecar.istio.io/includeInboundPorts: "2181"
traffic.sidecar.istio.io/excludeInboundPorts: "2888,3888"
traffic.sidecar.istio.io/excludeOutboundIPRanges: "0.0.0.0/0"
traffic.sidecar.istio.io/includeOutboundIPRanges: ""
```

With these settings, only client port 2181 is exposed via Envoy, with mTLS.

We run a single ZooKeeper replica.
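The inbound annotations above can be read as a small decision rule. The Go sketch below is a simplified model of that rule, written as an assumption rather than taken from Istio's iptables setup code: a port listed in `excludeInboundPorts` is never redirected, `"*"` in `includeInboundPorts` redirects every other port, and otherwise only the listed ports are redirected. Under that model only 2181 goes through the sidecar, matching the description above, while the quorum ports 2888 and 3888 bypass it. `parsePorts` and `interceptsInbound` are hypothetical helpers.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parsePorts turns an annotation value such as "2888,3888" into a set,
// skipping empty or non-numeric entries.
func parsePorts(v string) map[int]bool {
	set := map[int]bool{}
	for _, f := range strings.Split(v, ",") {
		if p, err := strconv.Atoi(strings.TrimSpace(f)); err == nil {
			set[p] = true
		}
	}
	return set
}

// interceptsInbound is a simplified, assumed model of whether inbound traffic
// to a port is redirected to the sidecar, given the include/exclude annotations.
func interceptsInbound(include, exclude string, port int) bool {
	if parsePorts(exclude)[port] {
		return false
	}
	if strings.TrimSpace(include) == "*" {
		return true
	}
	return parsePorts(include)[port]
}

func main() {
	include, exclude := "2181", "2888,3888"
	for _, p := range []int{2181, 2888, 3888} {
		fmt.Printf("port %d intercepted: %v\n", p, interceptsInbound(include, exclude, p))
	}
}
```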

To test this, we introduced another pod as a client, with the ZooKeeper shell libraries and mTLS enabled.

When the ZooKeeper shell is run from the client pod against the ZooKeeper pod FQDN (zk-zkist-0.zk-zkist-headless.istiotest.svc.cluster.local), we see the following warnings and errors:

```
WARN Session 0x0 for server zk-zkist-0.zk-zkist-headless.istiotest.svc.cluster.local/x.x.x.x:2181, unexpected error, closing socket connection and attempting reconnect (org.apache.zookeeper.ClientCnxn)
java.io.IOException: Packet len352518400 is out of range!
    at org.apache.zookeeper.ClientCnxnSocket.readLength(ClientCnxnSocket.java:113)
    at org.apache.zookeeper.ClientCnxnSocketNIO.doIO(ClientCnxnSocketNIO.java:79)
    at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:366)
    at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1141)
Exception in thread "main" org.apache.zookeeper.KeeperException$ConnectionLossException: KeeperErrorCode = ConnectionLoss for /
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:102)
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:54)
    at org.apache.zookeeper.ZooKeeper.getChildren(ZooKeeper.java:1541)
    at org.apache.zookeeper.ZooKeeper.getChildren(ZooKeeper.java:1569)
    at org.apache.zookeeper.ZooKeeperMain.processZKCmd(ZooKeeperMain.java:732)
    at org.apache.zookeeper.ZooKeeperMain.processCmd(ZooKeeperMain.java:600)
    at org.apache.zookeeper.ZooKeeperMain.executeLine(ZooKeeperMain.java:372)
    at org.apache.zookeeper.ZooKeeperMain.run(ZooKeeperMain.java:358)
    at org.apache.zookeeper.ZooKeeperMain.main(ZooKeeperMain.java:291)
```
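One detail in this trace may be a useful clue. The ZooKeeper client reads a 4-byte big-endian length before each packet, and 352518400 is `0x15030100`. Read as the start of a TLS record header, that is content type 21 (alert) followed by record version 3.1, the on-the-wire value for TLS 1.0. The Go sketch below decodes the number that way; `describeLength` is a hypothetical helper. The interpretation is an inference not confirmed in this thread: it suggests the client received a TLS alert on a connection it treated as plaintext, which points at a TLS/plaintext mismatch somewhere on the path rather than at ZooKeeper itself.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// tlsContentTypes maps the first byte of a TLS record to its content type.
var tlsContentTypes = map[byte]string{
	20: "change_cipher_spec",
	21: "alert",
	22: "handshake",
	23: "application_data",
}

// describeLength reinterprets a 4-byte big-endian length prefix (how the
// ZooKeeper client frames packets) as the first bytes of a TLS record header.
func describeLength(n uint32) string {
	var b [4]byte
	binary.BigEndian.PutUint32(b[:], n)
	kind, ok := tlsContentTypes[b[0]]
	if !ok || b[1] != 3 { // SSL 3.0 and every TLS version use major version 3
		return fmt.Sprintf("% x: does not look like a TLS record", b[:])
	}
	return fmt.Sprintf("% x: TLS %s record, version %d.%d", b[:], kind, b[1], b[2])
}

func main() {
	// The length from "java.io.IOException: Packet len352518400 is out of range!"
	fmt.Println(describeLength(352518400)) // prints 15 03 01 00: TLS alert record, version 3.1
}
```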

ZooKeeper logs the following:

```
{"type":"log", "host":"zk-zkist-0", "level":"WARN", "neid":"zookeeper-902a7179214b4780bf0189fceb111b59", "system":"zookeeper", "time":"2020-01-30T07:19:31.766Z", "timezone":"UTC", "log":{"message":"NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181 - org.apache.zookeeper.server.NIOServerCnxn - Unable to read additional data from client sessionid 0x0, likely client has closed socket"}}
{"type":"log", "host":"zk-zkist-0", "level":"INFO", "neid":"zookeeper-902a7179214b4780bf0189fceb111b59", "system":"zookeeper", "time":"2020-01-30T07:19:31.766Z", "timezone":"UTC", "log":{"message":"NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181 - org.apache.zookeeper.server.NIOServerCnxn - Closed socket connection for client /127.0.0.1:47750 (no session established for client)"}}
```

Note: the ZooKeeper process is up; we confirmed this by running the same command from within the ZooKeeper container itself, where it works fine.

Also, when we delete the mTLS policy, things work fine even from the client pod.

The issue occurs only when mTLS is enabled.

Are there any obvious issues in the configuration above?

@kaushiksrinivas From your test client, is DNS resolution happening for zk-zkist-0.zk-zkist-headless.istiotest.svc.cluster.local?

It is happening: I can see the warning logs in the ZooKeeper server as soon as I run my client commands, and it works fine when mTLS is disabled. I even tried adding a ServiceEntry with this FQDN, and it still does not work.

```
telnet zk-zkist-0.zk-zkist-headless.istiotest.svc.cluster.local 2181
Trying 192.168.1.61...
Connected to zk-zkist-0.zk-zkist-headless.istiotest.svc.cluster.local.
```

Edit: I've since been able to get this to work, but I'm preserving the text below in case it's helpful for anyone else. I ended up needing to label the grpcs services as tcp instead of http or grpc. This still feels like a bug on Istio's/Envoy's end, but it could just be an incompatibility with my current application requirements (i.e., if I were using Istio mTLS, or grpc instead of grpcs, maybe it would work).
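Istio selects the protocol for a Service port from its name, following the `<protocol>[-<suffix>]` convention, so a name beginning with `grpcs` is not recognized as gRPC. The Go sketch below is a simplified version of that lookup covering only some of the recognized prefixes; the list and `protocolFromPortName` are illustrative, not Istio's code. A plausible reason `tcp` works for grpcs is that the application already wraps the stream in TLS, so the sidecar cannot parse the encrypted bytes as HTTP/2 and has to proxy them as opaque TCP (`tls` is another prefix intended for such traffic).

```go
package main

import (
	"fmt"
	"strings"
)

// knownProtocols lists some of the protocol prefixes Istio recognizes in
// Service port names (not exhaustive). Longer prefixes come first so that
// "grpc-web" is not mistaken for "grpc" and "http2" is not mistaken for "http".
var knownProtocols = []string{"grpc-web", "grpc", "http2", "https", "http", "tcp", "tls", "mongo", "mysql", "redis"}

// protocolFromPortName applies the "<protocol>[-<suffix>]" naming convention.
// It returns "" for names it does not recognize; what Istio does in that case
// depends on the version.
func protocolFromPortName(name string) string {
	lower := strings.ToLower(name)
	for _, p := range knownProtocols {
		if lower == p || strings.HasPrefix(lower, p+"-") {
			return p
		}
	}
	return ""
}

func main() {
	for _, n := range []string{"http-port", "grpc-peer", "grpcs-peer", "tcp-grpcs", "client"} {
		fmt.Printf("%-12s -> %q\n", n, protocolFromPortName(n))
	}
}
```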

I am working on integrating sidecars into an istioctl installation using the default profile in PERMISSIVE mode. I am hitting issues once I try to communicate with a handful of StatefulSet services (specifically, Hyperledger Fabric nodes). Communication works before sidecar injection is turned on.

These nodes are configured with one-way grpcs. I've seen a lot of independent problems with directly configured TLS in permissive mode, StatefulSets, and grpc. Are there any instructions/debugging tips on how to narrow down where my problem might lie?

If it helps, APGGroeiFabriek/PIVT is my reference implementation (using single node raft samples as a starting point). I've made local modifications to implement a headless service for both orderer and peer. I'm happy to try and provide a fork with relevant changes to narrow down issues, but will take some time to detangle them from internal assumptions.

@howardjohn @hzxuzhonghu and other contributors,

This seems to be a common area of difficulty that people face in Istio: deploying and configuring StatefulSets.

Is there any active development happening to make this easier?

We really need to make some improvements in this space.

Links that I have found with related issues:

https://github.com/istio/istio/pull/20231

https://github.com/istio/istio/issues/19280

https://github.com/istio/istio/issues/14743

https://github.com/istio/istio/issues/10053

https://github.com/istio/istio/issues/1277

https://github.com/istio/istio/issues/10586

https://github.com/istio/istio/issues/9666

https://github.com/istio/istio/issues/10490

https://github.com/istio/istio/issues/7495

https://github.com/istio/istio/issues/21964

Is there a summary of Istio's support for StatefulSets, or a doc page describing what is included and what is missing? What about things like blue/green deployments?

@hzxuzhonghu @enis I doubt whether Istio really supports StatefulSet applications yet.

Recently I deployed a MariaDB Galera cluster using the Bitnami Helm chart and ran into some problems.

- When only one Helm release of mariadb-galera-cluster is installed in the Kubernetes cluster, it starts (all the pods are running), but data sync does not work well, especially when I delete one of the StatefulSet pods and wait for it to come back up. The database then stops working with the error:

```
ERROR 1047 (08S01): WSREP has not yet prepared node for application use.
```

My steps are as follows:

Step 1: install the mariadb-galera-cluster using Helm, which gives 3 pods in my namespace lcf:

```
# kubectl get po -nlcf
NAME                       READY   STATUS    RESTARTS   AGE
lcf7162-mariadb-galera-0   2/2     Running   0          4h54m
lcf7162-mariadb-galera-1   2/2     Running   0          4h6m
lcf7162-mariadb-galera-2   2/2     Running   1          4h16m
```

Step 2: exec into lcf7162-mariadb-galera-0, create a database and a table, and insert some data.

Step 3: check the data inserted in step 2 from the other two pods. The data syncs (the first time it was out of sync; the second time it was in sync).

Step 4: delete lcf7162-mariadb-galera-1 and wait for it to be Running. Meanwhile, in lcf7162-mariadb-galera-2, the database created in step 2 is not visible.

Step 5: once lcf7162-mariadb-galera-1 is Running, exec into it: the database created in step 2 is not visible there either.

Step 6: exec into lcf7162-mariadb-galera-2 and create a database: the pod crashes.

Step 7: after lcf7162-mariadb-galera-2 is Running again, the mariadb-galera-cluster does not work properly.

- When I use Helm to install mariadb-galera-cluster more than once, the later releases do not start properly (some of the pods go into CrashLoopBackOff). When I check the istio-proxy logs, I see some routes that do not belong to the release itself, which seems very strange.

```
# kubectl version
Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.3", GitCommit:"2e7996e3e2712684bc73f0dec0200d64eec7fe40", GitTreeState:"clean", BuildDate:"2020-05-20T12:52:00Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.3", GitCommit:"2e7996e3e2712684bc73f0dec0200d64eec7fe40", GitTreeState:"clean", BuildDate:"2020-05-20T12:43:34Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}

# istioctl version
client version: 1.6.4
control plane version: 1.6.4
data plane version: 1.6.4 (15 proxies)
```

Does anyone else have a similar problem?

@hzxuzhonghu @enis When I do steps 1 to 7 in a namespace without Istio, there is no database sync problem, so I suspect Istio may not support this StatefulSet app.

@rshriram

🚧 This issue or pull request has been closed due to not having had activity from an Istio team member since 2020-01-23. If you feel this issue or pull request deserves attention, please reopen the issue. Please see this wiki page for more information. Thank you for your contributions.

_Created by the issue and PR lifecycle manager_.

Hey, I had trouble with a Zookeeper StatefulSet configuration when I applied mesh-wide strict mTLS. This fixed it.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  labels:
    app: zk
  name: zk
spec:
  host: zk-service-name
  trafficPolicy:
    tls:
      mode: ISTIO_MUTUAL
```
