Ingress-nginx: traffic imbalance issue with lua balancer / default ingress-nginx load balancing

Is this a request for help? (If yes, you should use our troubleshooting guide and community support channels, see https://kubernetes.io/docs/tasks/debug-application-cluster/troubleshooting/.):

What keywords did you search in NGINX Ingress controller issues before filing this one? (If you have found any duplicates, you should instead reply there.):

Is this a BUG REPORT or FEATURE REQUEST? (choose one):

Bug

NGINX Ingress controller version:

0.23.0

Kubernetes version (use kubectl version):

1.11.6

Environment:

- Cloud provider or hardware configuration:

- OS (e.g. from /etc/os-release): Debian GNU/Linux 9 (stretch) (kops image)

- Kernel (e.g. uname -a): Linux ip-172-18-76-224 4.9.0-7-amd64 #1 SMP Debian 4.9.110-3+deb9u2 (2018-08-13) x86_64 GNU/Linux

- Install tools: Kops

- Others:

What happened:

On our production cluster we found a severe traffic imbalance with the Lua balancer when a service has many pods. For example, with 50 pods only the top 20% of pods were getting traffic, and those pods then suffered probe failures and CrashLoopBackOff restarts.

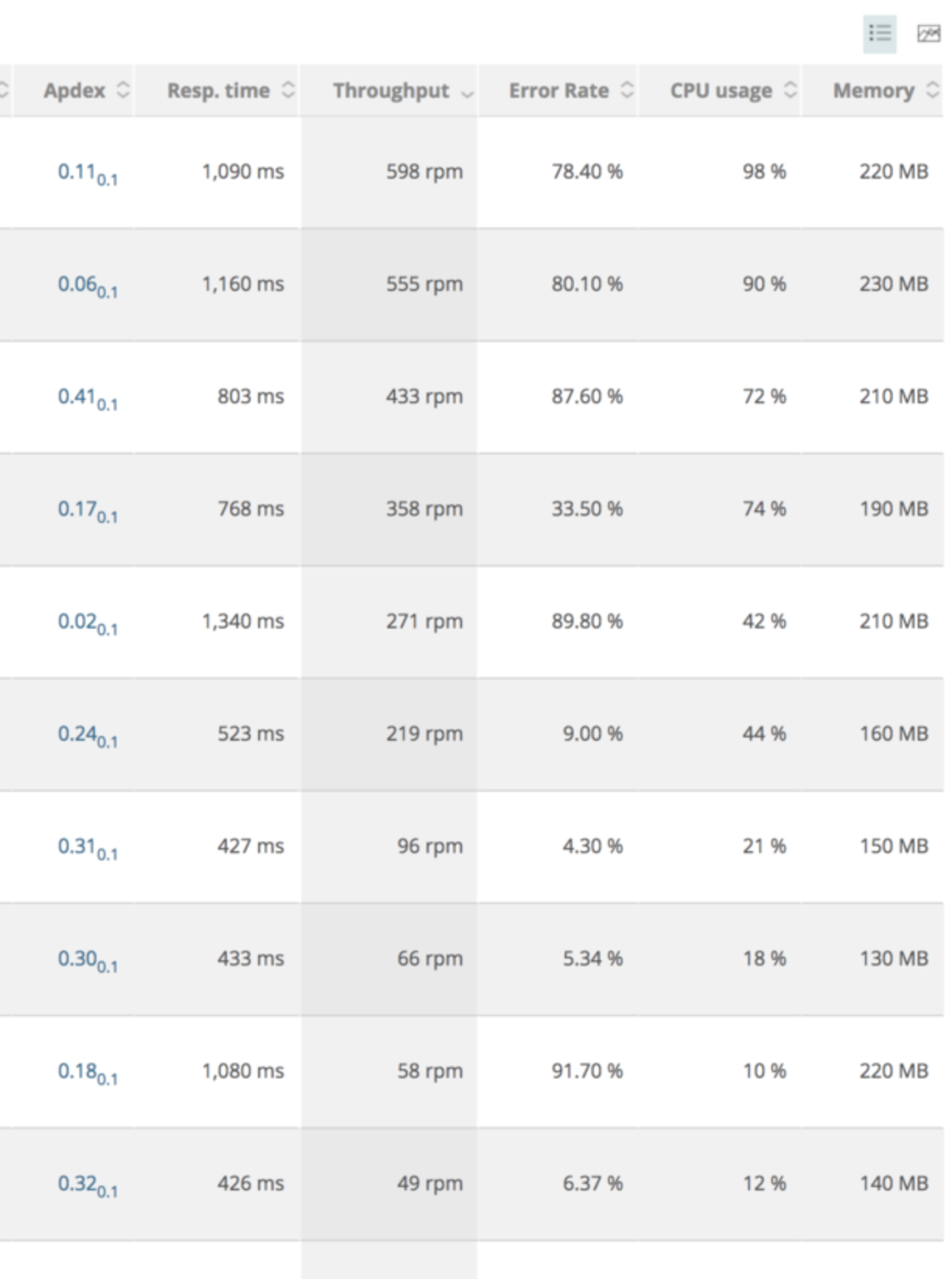

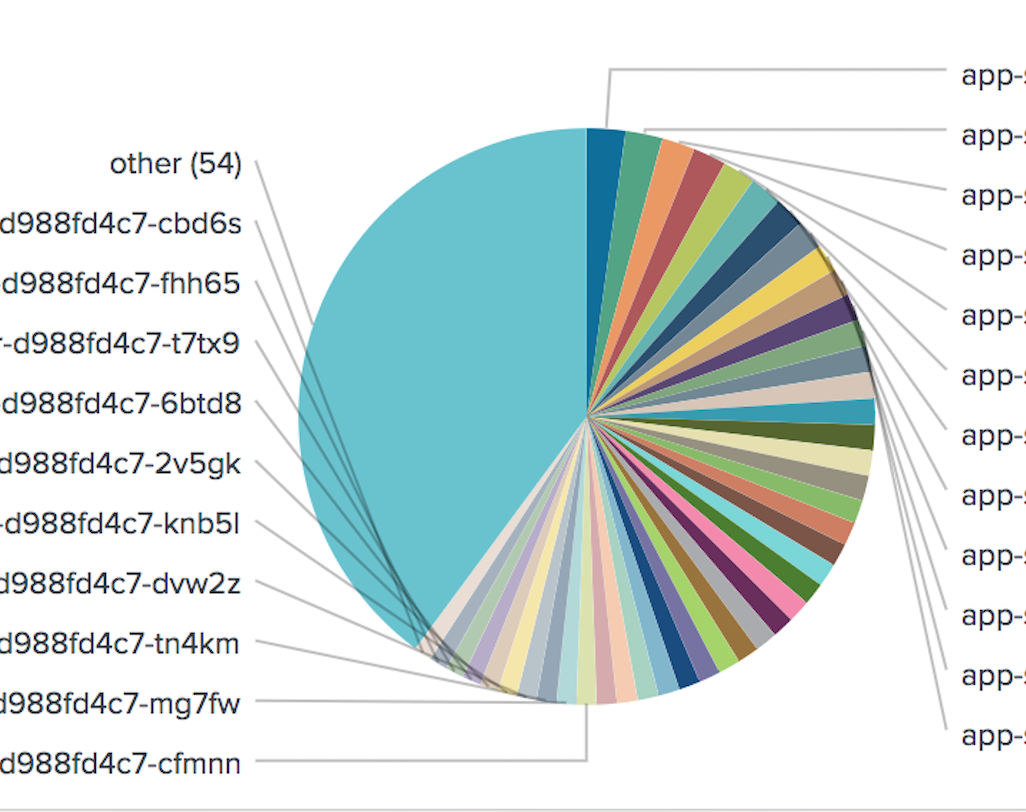

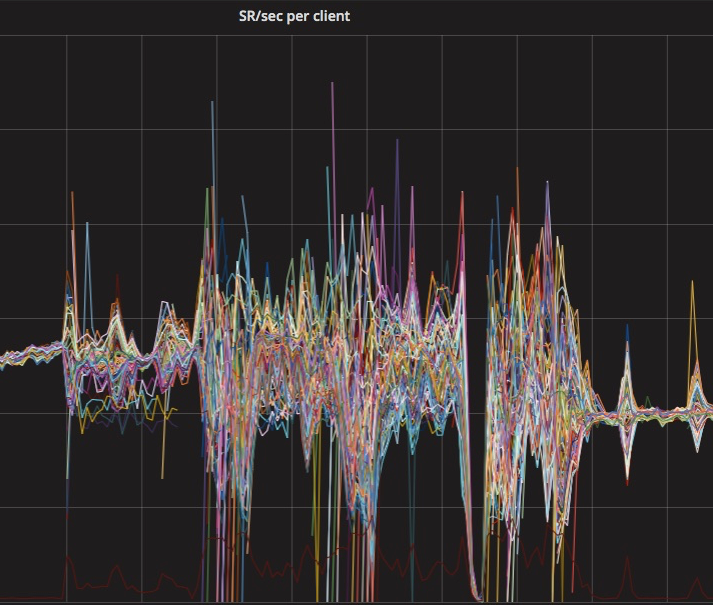

The following two metrics show the severe traffic imbalance across the pods.

Throughput across the pods (sorted by rpm)

Not shown in this picture: the bottom half of the pods were getting almost no traffic.

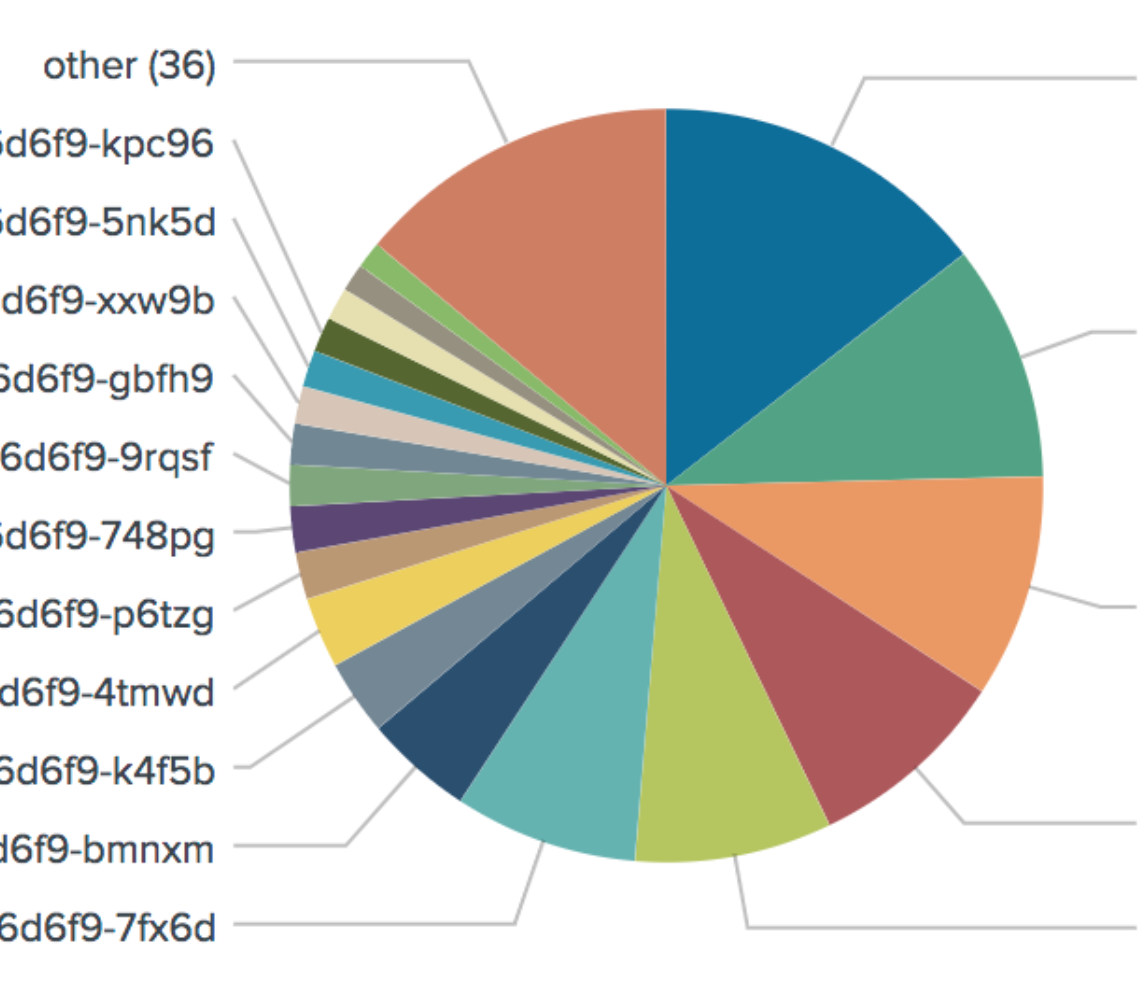

Pie chart of incoming traffic (number of access-log entries). The top 5 pods were getting over 50% of all traffic, whereas the 36 "other" pods were getting almost none.

One interesting aspect was that the traffic always stayed concentrated on those specific pods, even after the probe failures and restarts, as if there were some stickiness. The traffic never became balanced, no matter how long I waited.

All the internal services (i.e., services reached via http://<service_name>.<namespace> from inside the Kubernetes cluster) were balanced well. Later, after speaking with @aledbf on Slack and reading through the docs, I realized that ingress-nginx does not use kube-proxy for load balancing; it sends traffic to the pod endpoints itself.

So we enabled the service-upstream annotation on all our Ingress resources, forcing ingress-nginx to proxy to the Service and leave the load balancing to kube-proxy (iptables).

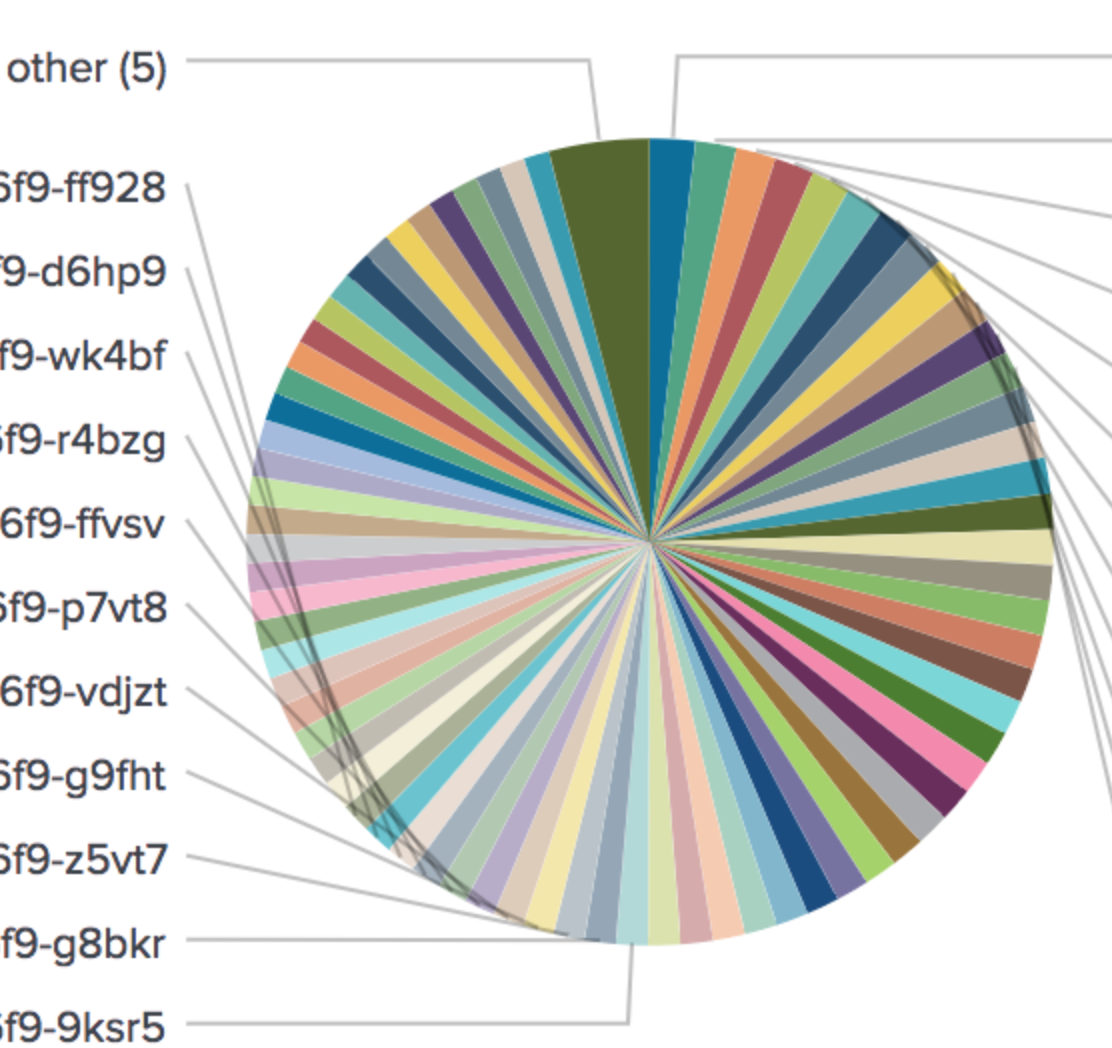

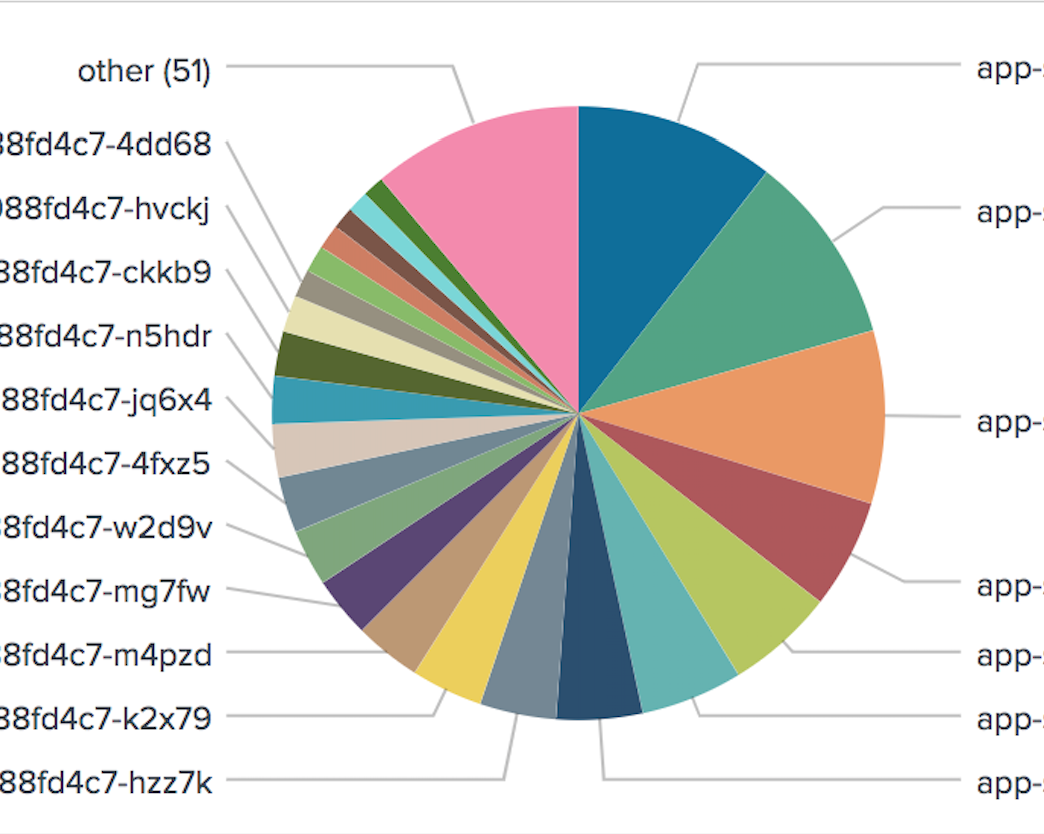

This is the piechart after we applied the annotation.

We verified the behavior with the new dbg debugging tool by printing the endpoints:

/dbg backends get <service-upstream>

When the upstream is a set of pod IPs we see the traffic imbalance; after applying service-upstream the upstream points at a single Service endpoint and the balance normalizes.
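For comparison, here is a simplified model of how kube-proxy spreads connections, assuming it runs in iptables mode (the proxy mode is not stated in this report). Each Service endpoint gets an iptables rule using the statistic module in random mode: rule i of n matches with probability 1/(n - i) and the last rule always matches, so every endpoint is equally likely. This Python sketch only reproduces that probability cascade; it is not kube-proxy code, and the choice it models is made per new connection, not per request.

```python
from fractions import Fraction


def cascade_probabilities(n: int) -> list[Fraction]:
    """Match probability of each rule in an n-endpoint chain.

    Rule i (0-based) matches with probability 1/(n - i); the last rule
    therefore has probability 1 and catches everything that is left.
    """
    return [Fraction(1, n - i) for i in range(n)]


def effective_share(n: int) -> list[Fraction]:
    """Overall probability that a new connection lands on each endpoint."""
    shares = []
    not_matched_yet = Fraction(1)  # probability that no earlier rule matched
    for p in cascade_probabilities(n):
        shares.append(not_matched_yet * p)
        not_matched_yet *= 1 - p
    return shares


if __name__ == "__main__":
    print(effective_share(4))  # four equal shares of 1/4
```

effective_share(100) yields 100 equal shares of 1/100, which is consistent with the even spread observed once traffic goes through the Service.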

Is this a known issue or do you have any clue why this is possibly happening?

What you expected to happen:

The Lua balancer should distribute traffic about as evenly as kube-proxy load balancing does.

How to reproduce it (as minimally and precisely as possible):

Remove the service-upstream annotation.

Anything else we need to know:

One thing that may be worth mentioning: the traffic described above is not public user traffic hitting our Kubernetes cluster directly, but curl requests from our datacenter.

Request path (Public -> Datacenter -> AWS K8S):

users -> LB -> Host 1, Host 2, ..., Host n (curl) -> app.foo.com (Ingress-Nginx)

I am not sure whether the Lua balancer would have behaved differently with organic public traffic.


@dmxlsj please post the Ingress definition you are using for this test.

Hi @aledbf, sure, this is the Ingress:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      annotations:
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/configuration-snippet: |
          if ($http_x_is_testing_pool = "1") {
            set $proxy_upstream_name "$namespace-$service_name-testing-$service_port";
          }
        nginx.ingress.kubernetes.io/proxy-buffer-size: 16k
        nginx.ingress.kubernetes.io/proxy-buffering: "on"
      name: www-private-ingress
      namespace: app
    spec:
      rules:
      - host: foo.privatesvc.net
        http:
          paths:
          - backend:
              serviceName: app-private
              servicePort: 80
            path: /svc
          - backend:
              serviceName: app-private-testing
              servicePort: 80
            path: /-testing-/svc

The logic: when a request hits the datacenter (see the diagram above), we first determine whether it belongs to the testing pool, and if so we add an X-Is-Testing-Pool: 1 header.

When the request reaches ingress-nginx in Kubernetes, the snippet checks for that header and, if present, overrides $proxy_upstream_name to point at the app-private-testing Service.

Inside nginx.conf, this looks like the following:

    set $proxy_upstream_name "app-app-private-80";
    set $proxy_host $proxy_upstream_name;
    ...
    proxy_buffering on;
    proxy_buffer_size 16k;
    proxy_buffers 4 16k;
    proxy_request_buffering on;
    ...
    if ($http_x_is_testing_pool = "1") {
        set $proxy_upstream_name "$namespace-$service_name-testing-$service_port";
    }
    proxy_pass http://upstream_balancer;
    proxy_redirect off;

Other than this, I believe the ingress config seems pretty straightforward.

> Other than this, I believe the ingress config seems pretty straightforward.

Well, not really: basically, you are changing the normal behavior of the Lua balancer.

@ElvinEfendi can you take a look, please?

Can you try without that configuration snippet to see if the distribution is better?

Also you can use canary feature of ingress-nginx to achieve that in a tested and cleaner way: https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/annotations/#canary

Thanks @aledbf and @ElvinEfendi,

I think we tried it without the configuration snippet before, but I will certainly try it again today.

Yes, we are aware of the new canary annotation, but for us the X-Is-Testing-Pool header is used to shape traffic between users and bots, so it is long-standing logic, although there is a consolidation effort underway now.

Have you verified these stats with something other than New Relic?

The instance overview can't be trusted: it includes pods that were terminated long ago. The tool isn't designed for changing hostnames.

Hi @joekohlsdorf

Yes, we've verified this with multiple APM tools, other tooling, and manual testing. For example, the pie chart above comes from Splunk access logs, not from the APM. Above all, the performance difference is very visible when you watch the Kubernetes pod events.

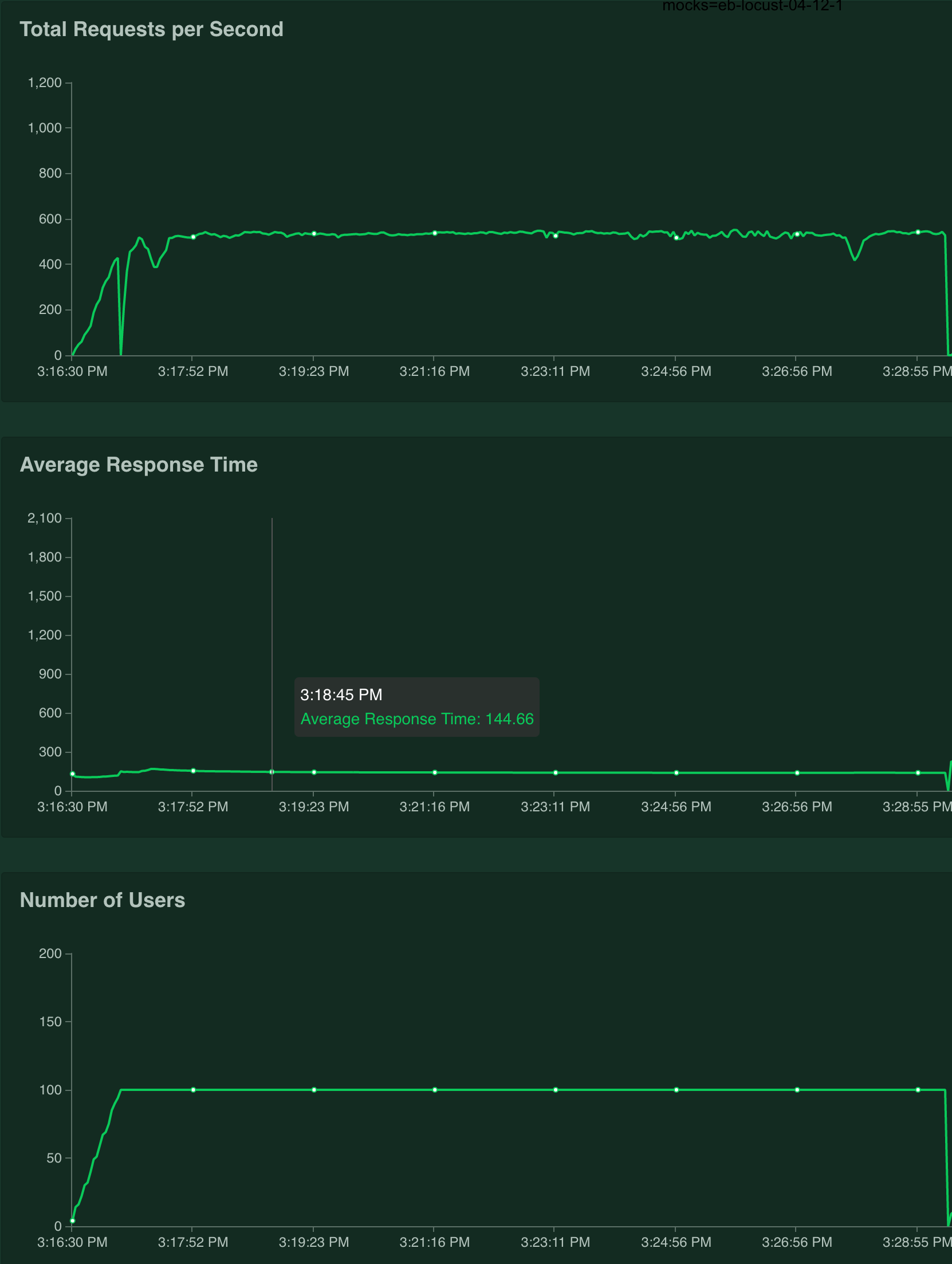

I ran a small load test today against the endpoints after removing the configuration snippet.

The Ingress is now as simple as this:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      annotations:
        kubernetes.io/ingress.class: nginx
      name: app-load-test
      namespace: app
    spec:
      rules:
      - host: app-load-test.foo.com
        http:
          paths:
          - backend:
              serviceName: app-load-test
              servicePort: 80
            path: /target

I used 100 replicas with a minimum of 1 vCPU / 512Mi per pod.

- Using the service-upstream annotation (kube-proxy)

On average, 520 rps and 145 ms response time.

Pie chart of accesses for the first 15 minutes, and the balance based on the access log:

| Pod | Access-log entries |
|---|---:|
| app-load-test-d988fd4c7-mp2nc | 1314 |
| app-load-test-d988fd4c7-cp7rp | 1270 |
| app-load-test-d988fd4c7-gvw92 | 834 |
| app-load-test-d988fd4c7-hzz7k | 813 |
| app-load-test-d988fd4c7-mrmm5 | 773 |
| app-load-test-d988fd4c7-qhfbm | 753 |
| app-load-test-d988fd4c7-nz2vc | 707 |
| app-load-test-d988fd4c7-f9vsm | 667 |
| app-load-test-d988fd4c7-zvdr5 | 647 |
| app-load-test-d988fd4c7-fdknz | 627 |
| app-load-test-d988fd4c7-w2d9v | 622 |
| app-load-test-d988fd4c7-kzk8m | 618 |
| app-load-test-d988fd4c7-mk488 | 616 |
| app-load-test-d988fd4c7-jddwh | 614 |
| app-load-test-d988fd4c7-kxqmv | 613 |
| app-load-test-d988fd4c7-jq6x4 | 612 |
| app-load-test-d988fd4c7-k2x79 | 610 |
| app-load-test-d988fd4c7-7k84n | 606 |
| app-load-test-d988fd4c7-m4pzd | 600 |
| app-load-test-d988fd4c7-fbpcp | 592 |
| app-load-test-d988fd4c7-75bxb | 585 |
| app-load-test-d988fd4c7-9xk7s | 579 |
| app-load-test-d988fd4c7-r7dzw | 557 |
| app-load-test-d988fd4c7-8zh4v | 549 |
| app-load-test-d988fd4c7-wcgfw | 534 |
| app-load-test-d988fd4c7-mg7fw | 532 |
| app-load-test-d988fd4c7-95xhn | 531 |
| app-load-test-d988fd4c7-q49tb | 530 |
| app-load-test-d988fd4c7-vpq8x | 522 |
| app-load-test-d988fd4c7-s97ck | 517 |
| app-load-test-d988fd4c7-4dd68 | 512 |
| app-load-test-d988fd4c7-tklbt | 507 |
| app-load-test-d988fd4c7-9xj6w | 506 |
| app-load-test-d988fd4c7-vfflp | 504 |
| app-load-test-d988fd4c7-cbd6s | 495 |
| app-load-test-d988fd4c7-thmsh | 488 |
| app-load-test-d988fd4c7-knb5l | 481 |
| app-load-test-d988fd4c7-726nw | 478 |
| app-load-test-d988fd4c7-zptmv | 473 |
| app-load-test-d988fd4c7-krrch | 470 |
| app-load-test-d988fd4c7-fhh65 | 459 |
| app-load-test-d988fd4c7-dvw2z | 443 |
| app-load-test-d988fd4c7-cfmnn | 440 |
| app-load-test-d988fd4c7-xm2v5 | 438 |
| app-load-test-d988fd4c7-vv68z | 436 |
| app-load-test-d988fd4c7-t7tx9 | 435 |
| app-load-test-d988fd4c7-4fxz5 | 429 |
| app-load-test-d988fd4c7-dxw49 | 426 |
| app-load-test-d988fd4c7-zcn67 | 425 |
| app-load-test-d988fd4c7-c97jj | 423 |
| app-load-test-d988fd4c7-r8wxx | 419 |
| app-load-test-d988fd4c7-ww8vb | 418 |
| app-load-test-d988fd4c7-n8w6j | 415 |
| app-load-test-d988fd4c7-njr8t | 409 |
| app-load-test-d988fd4c7-k2bh9 | 408 |
| app-load-test-d988fd4c7-5xc2n | 403 |
| app-load-test-d988fd4c7-qdvsp | 398 |
| app-load-test-d988fd4c7-z49fm | 391 |
| app-load-test-d988fd4c7-m4j8t | 390 |
| app-load-test-d988fd4c7-hvckj | 386 |
| app-load-test-d988fd4c7-qwcbd | 383 |
| app-load-test-d988fd4c7-8vfxl | 367 |
| app-load-test-d988fd4c7-bfs67 | 361 |
| app-load-test-d988fd4c7-p76cx | 352 |
| app-load-test-d988fd4c7-8nbhm | 348 |
| app-load-test-d988fd4c7-cb4kq | 348 |
| app-load-test-d988fd4c7-n5hdr | 347 |
| app-load-test-d988fd4c7-6btd8 | 344 |
| app-load-test-d988fd4c7-wzj74 | 335 |
| app-load-test-d988fd4c7-tn4km | 334 |
| app-load-test-d988fd4c7-kbmsv | 333 |
| app-load-test-d988fd4c7-v7jh5 | 332 |
| app-load-test-d988fd4c7-6wtg7 | 328 |
| app-load-test-d988fd4c7-d9w9z | 328 |
| app-load-test-d988fd4c7-wqhqv | 315 |
| app-load-test-d988fd4c7-2v5gk | 312 |
| app-load-test-d988fd4c7-rkm4d | 311 |
| app-load-test-d988fd4c7-sm9zk | 305 |
| app-load-test-d988fd4c7-qb7sl | 301 |
| app-load-test-d988fd4c7-qtr8j | 299 |
| app-load-test-d988fd4c7-ffgj6 | 294 |
| app-load-test-d988fd4c7-t47z2 | 294 |
| app-load-test-d988fd4c7-ckkb9 | 274 |
| app-load-test-d988fd4c7-j7mkr | 273 |
| app-load-test-d988fd4c7-4srx8 | 268 |
| app-load-test-d988fd4c7-9cl9h | 264 |
| app-load-test-d988fd4c7-jhhnl | 264 |
| app-load-test-d988fd4c7-zw9vh | 260 |
| app-load-test-d988fd4c7-cdhnk | 239 |
| app-load-test-d988fd4c7-bs5rg | 235 |
| app-load-test-d988fd4c7-9d8ml | 223 |
| app-load-test-d988fd4c7-rc5kv | 223 |
| app-load-test-d988fd4c7-xzwsx | 212 |
| app-load-test-d988fd4c7-8x85s | 209 |
| app-load-test-d988fd4c7-cl862 | 177 |
| app-load-test-d988fd4c7-d4mvx | 171 |
| app-load-test-d988fd4c7-x9qcn | 158 |
| app-load-test-d988fd4c7-76zht | 152 |

- Without the service-upstream annotation (Lua balancer)

On average, 110 rps and 960 ms response time.
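One way to compare the two runs is Little's law (L = λW): the mean number of requests in flight is roughly throughput times mean latency. The sketch below applies it to the averages reported here (520 rps / 145 ms with service-upstream, 110 rps / 960 ms with the Lua balancer), assuming a roughly steady-state load generator; the load tool itself is not described, so this is only an estimate.

```python
def in_flight(rps: float, latency_s: float) -> float:
    """Little's law: mean requests in the system = arrival rate x mean time in system."""
    return rps * latency_s


# Averages reported in this thread for the 100-replica load test.
runs = {
    "service-upstream (kube-proxy)": (520.0, 0.145),
    "Lua balancer": (110.0, 0.960),
}

if __name__ == "__main__":
    for name, (rps, latency) in runs.items():
        print(f"{name}: ~{in_flight(rps, latency):.1f} requests in flight")
```

This prints about 75.4 and 105.6 requests in flight. Both runs sustain a comparable number of concurrent requests, so the lower throughput with the Lua balancer is consistent with that concurrency being served much more slowly, plausibly by the few pods that receive most of the traffic.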

Pie chart of access logs for the first 15 minutes, and the balance based on the access log:

| Pod | Access-log entries |
|---|---:|
| app-load-test-d988fd4c7-gvw92 | 880 |
| app-load-test-d988fd4c7-dxw49 | 842 |
| app-load-test-d988fd4c7-mk488 | 761 |
| app-load-test-d988fd4c7-j7mkr | 483 |
| app-load-test-d988fd4c7-qwcbd | 478 |
| app-load-test-d988fd4c7-f9vsm | 449 |
| app-load-test-d988fd4c7-bfs67 | 379 |
| app-load-test-d988fd4c7-hzz7k | 337 |
| app-load-test-d988fd4c7-k2x79 | 315 |
| app-load-test-d988fd4c7-m4pzd | 293 |
| app-load-test-d988fd4c7-mg7fw | 268 |
| app-load-test-d988fd4c7-w2d9v | 250 |
| app-load-test-d988fd4c7-4fxz5 | 247 |
| app-load-test-d988fd4c7-jq6x4 | 235 |
| app-load-test-d988fd4c7-n5hdr | 209 |
| app-load-test-d988fd4c7-ckkb9 | 198 |
| app-load-test-d988fd4c7-hvckj | 161 |
| app-load-test-d988fd4c7-4dd68 | 122 |
| app-load-test-d988fd4c7-ww8vb | 118 |
| app-load-test-d988fd4c7-kxqmv | 109 |
| app-load-test-d988fd4c7-z49fm | 98 |
| app-load-test-d988fd4c7-kzk8m | 94 |
| app-load-test-d988fd4c7-thmsh | 94 |
| app-load-test-d988fd4c7-bs5rg | 79 |
| app-load-test-d988fd4c7-rc5kv | 63 |
| app-load-test-d988fd4c7-9xk7s | 56 |
| app-load-test-d988fd4c7-6btd8 | 54 |
| app-load-test-d988fd4c7-wcgfw | 44 |
| app-load-test-d988fd4c7-9d8ml | 42 |
| app-load-test-d988fd4c7-nz2vc | 39 |
| app-load-test-d988fd4c7-wzj74 | 38 |
| app-load-test-d988fd4c7-7k84n | 24 |
| app-load-test-d988fd4c7-cfmnn | 24 |
| app-load-test-d988fd4c7-cdhnk | 22 |
| app-load-test-d988fd4c7-fhh65 | 21 |
| app-load-test-d988fd4c7-kbmsv | 20 |
| app-load-test-d988fd4c7-m4j8t | 20 |
| app-load-test-d988fd4c7-vv68z | 20 |
| app-load-test-d988fd4c7-zvdr5 | 20 |
| app-load-test-d988fd4c7-8vfxl | 19 |
| app-load-test-d988fd4c7-2v5gk | 18 |
| app-load-test-d988fd4c7-cbd6s | 18 |
| app-load-test-d988fd4c7-8x85s | 17 |
| app-load-test-d988fd4c7-jhhnl | 17 |
| app-load-test-d988fd4c7-xm2v5 | 16 |
| app-load-test-d988fd4c7-zptmv | 16 |
| app-load-test-d988fd4c7-dvw2z | 15 |
| app-load-test-d988fd4c7-krrch | 15 |
| app-load-test-d988fd4c7-t7tx9 | 15 |
| app-load-test-d988fd4c7-vpq8x | 15 |
| app-load-test-d988fd4c7-5xc2n | 14 |
| app-load-test-d988fd4c7-6wtg7 | 12 |
| app-load-test-d988fd4c7-qdvsp | 12 |
| app-load-test-d988fd4c7-qpj56 | 11 |
| app-load-test-d988fd4c7-tklbt | 10 |
| app-load-test-d988fd4c7-75bxb | 9 |
| app-load-test-d988fd4c7-95xhn | 9 |
| app-load-test-d988fd4c7-mrmm5 | 9 |
| app-load-test-d988fd4c7-vfflp | 9 |
| app-load-test-d988fd4c7-c97jj | 8 |
| app-load-test-d988fd4c7-d4mvx | 8 |
| app-load-test-d988fd4c7-d9w9z | 7 |
| app-load-test-d988fd4c7-p76cx | 7 |
| app-load-test-d988fd4c7-r7dzw | 7 |
| app-load-test-d988fd4c7-v7jh5 | 7 |
| app-load-test-d988fd4c7-tn4km | 5 |
| app-load-test-d988fd4c7-726nw | 3 |
| app-load-test-d988fd4c7-8zh4v | 3 |
| app-load-test-d988fd4c7-k2bh9 | 3 |
| app-load-test-d988fd4c7-r8wxx | 3 |
| app-load-test-d988fd4c7-q49tb | 2 |
| app-load-test-d988fd4c7-9xj6w | 1 |
| app-load-test-d988fd4c7-s97ck | 1 |
| app-load-test-d988fd4c7-sslb5 | 1 |

Besides the severe traffic imbalance, only 74 pods appear in the log with the Lua balancer, which means 26 of the 100 pods did not get any traffic at all.
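The 74-of-100 figure is simple arithmetic over the access-log listing. A small helper like the one below (hypothetical, not part of any tool mentioned in this thread) parses per-pod count lines and reports how many replicas got no traffic and what share went to the busiest pods. It assumes a pod missing from the listing received zero requests.

```python
def summarize(lines: list[str], replicas: int, top_k: int = 5) -> dict:
    """Summarize per-pod request counts from 'pod | count' lines.

    Pods that never appear in the listing are counted as receiving no traffic.
    """
    counts: dict[str, int] = {}
    for line in lines:
        # Accept both "pod | 123" and markdown-table rows "| pod | 123 |".
        parts = [p.strip() for p in line.strip().strip("|").split("|")]
        if len(parts) != 2 or not parts[1].isdigit():
            continue  # blank lines, table headers, separator rows
        counts[parts[0]] = int(parts[1])

    total = sum(counts.values())
    busiest = sorted(counts.values(), reverse=True)[:top_k]
    return {
        "pods_with_traffic": len(counts),
        "pods_without_traffic": replicas - len(counts),
        "total_requests": total,
        "top_k_share": sum(busiest) / total if total else 0.0,
    }


if __name__ == "__main__":
    sample = [
        "app-load-test-d988fd4c7-gvw92 | 880",
        "app-load-test-d988fd4c7-dxw49 | 842",
        "app-load-test-d988fd4c7-sslb5 | 1",
    ]
    print(summarize(sample, replicas=100, top_k=2))
```

Fed the 74 rows of the Lua-balancer listing with replicas=100, it would report 26 pods without traffic.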

Hi @dmxlsj, you are in good company 😄 We are experiencing the same behaviour in our cluster for services with more than 50 pods...

We are trying to reproduce and debug this issue, and so far we have found the same behaviour you described: as soon as a deployment is scaled out or in, requests are no longer balanced across all pods; instead, all traffic is sent to a subset of them.

May I ask which balancing algorithm you are using?

We set the algorithm in the ConfigMap, so we expect NGINX to be configured with round_robin balancing (https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/configmap/#load-balance).

@dmxlsj @inge4pres please disable reuse-port and try again. This setting is enabled by default; it helps under high load by avoiding port exhaustion and waits.

Hi @aledbf, thanks for the hint. I don't see any correlation between the traffic imbalance and the parameter you mentioned; can you please elaborate?

@dmxlsj after some debugging of the Lua code and some tests, we switched to the ewma load-balancing algorithm by setting it in an annotation:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: my-ingress
      namespace: my-ns
      annotations:
        ingress.kubernetes.io/load-balance: "ewma"
    ...

This solved our traffic-imbalance issue while keeping the stickiness (session affinity) feature, which would otherwise be lost with service-upstream. Hope this helps...

We also found a possible bug in the Lua round-robin balancer code that is a _reprise_ of a previous issue; we will write some tests and try to come up with a fix for it.

Hello there, I'd like to give more context on my previous comment.
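Why a latency-aware algorithm helps can be illustrated with a simplified model: instead of walking the endpoint list in a fixed order, the balancer keeps an exponentially weighted moving average (EWMA) of each endpoint's response time and sends each request to the better of two randomly sampled endpoints ("power of two choices"). The sketch below is a generic illustration under those assumptions, with a hypothetical decay constant alpha; it is not ingress-nginx's ewma implementation, whose details may differ.

```python
import random
from typing import Optional


class EwmaPicker:
    """Toy latency-aware balancer: EWMA scores + power-of-two-choices.

    Illustrative only; this is not ingress-nginx's ewma balancer.
    """

    def __init__(self, endpoints: list[str], alpha: float = 0.3, seed: Optional[int] = None):
        if len(endpoints) < 2:
            raise ValueError("need at least two endpoints to compare")
        self.alpha = alpha  # weight of the newest sample (hypothetical value)
        # EWMA of observed latency in seconds; unobserved endpoints start at 0,
        # so they look attractive and get tried early.
        self.scores = {ep: 0.0 for ep in endpoints}
        self.rng = random.Random(seed)

    def pick(self) -> str:
        # Sample two distinct endpoints at random and keep the faster one.
        a, b = self.rng.sample(list(self.scores), 2)
        return a if self.scores[a] <= self.scores[b] else b

    def observe(self, endpoint: str, latency_s: float) -> None:
        # new_score = alpha * sample + (1 - alpha) * old_score
        old = self.scores[endpoint]
        self.scores[endpoint] = self.alpha * latency_s + (1 - self.alpha) * old


if __name__ == "__main__":
    picker = EwmaPicker(["10.0.0.1", "10.0.0.2", "10.0.0.3"], seed=1)
    picker.observe("10.0.0.1", 0.9)  # a slow, overloaded pod
    picker.observe("10.0.0.2", 0.1)
    picker.observe("10.0.0.3", 0.1)
    picks = [picker.pick() for _ in range(1000)]
    print({ep: picks.count(ep) for ep in picker.scores})
```

In the demo the slow endpoint is never chosen, because it loses every pairwise comparison. And because each replica samples at random, independent ingress replicas do not march through the endpoint list in lockstep the way freshly reset round-robin instances do.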

We believe the current round-robin implementation is not suitable for a production environment: because the list of IPs returned by the Kubernetes Endpoints API is always ordered the same way, the round_robin algorithm builds exactly the same table in every ingress pod.

As a result, the first IPs in the list receive more requests than the last ones, because requests balanced by multiple ingress pods all get routed to the first item, then the second, then the third, and so on...

This overloads some pods while leaving others with fewer requests to handle, producing graphs like the ones shown by @dmxlsj.
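The effect described above can be reproduced with a toy model: several independent round-robin balancers share the same endpoint order, and each one restarts from the first endpoint whenever it is re-initialized. The sketch below is a simplified model (one balancer per ingress pod, all re-initialized at the same moments, hypothetical parameters), not the lua-resty-balancer code. When each balancer handles only a few requests between re-initializations, the head of the list receives all the traffic.

```python
from collections import Counter


class RoundRobin:
    """Plain round robin that starts from the first endpoint after each reset."""

    def __init__(self, endpoints: list[str]):
        self.endpoints = list(endpoints)
        self.next_index = 0

    def reset(self) -> None:
        # Models re-creating the balancer when the endpoint list "changes".
        self.next_index = 0

    def pick(self) -> str:
        ep = self.endpoints[self.next_index]
        self.next_index = (self.next_index + 1) % len(self.endpoints)
        return ep


def simulate(ingress_pods: int, backends: int, reloads: int, requests_between: int) -> Counter:
    """Count requests per backend when every balancer resets at the same moments."""
    endpoints = [f"pod-{i}" for i in range(backends)]  # same order everywhere
    balancers = [RoundRobin(endpoints) for _ in range(ingress_pods)]
    hits: Counter = Counter()
    for _ in range(reloads):
        for rr in balancers:
            rr.reset()
            for _ in range(requests_between):
                hits[rr.pick()] += 1
    return hits


if __name__ == "__main__":
    print(simulate(ingress_pods=50, backends=50, reloads=10, requests_between=3).most_common(5))
```

With 3 requests per balancer between resets, only pod-0, pod-1 and pod-2 ever receive traffic. Raising requests_between to a multiple of the backend count evens the counts out, which fits the point made later in the thread that this pattern requires frequent re-initialization.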

We had already seen this behaviour in the NGINX C implementation of the round-robin algorithm; since the balancing code is now in Lua, I tried hard to replicate the same logic here:

https://github.com/inge4pres/ingress-nginx/blob/issues/4023/rootfs/etc/nginx/lua/util.lua#L90

but, as you will see if you check out that branch, the tests fail because the resulting shuffled table is nil.

I have been reading the Lua manual for the past day and could not get further than this, so before opening a PR I'd love to hear your opinion on the code and on the overall approach to this issue.

Thank you

@inge4pres correct me if I'm wrong, but that can only happen if NGINX workers constantly reload and the list of endpoints changes. When that happens we re-initialize the roundrobin balancer instance, which sets last_id to nil (https://github.com/openresty/lua-resty-balancer/blob/0fb8a792ca69a3769b358fb489e2fb36c183bb6e/lib/resty/roundrobin.lua#L71), which in turn means starting from the first element in the list. Only in this case would we see what you describe.

If that's not the case, maybe we have a bug in https://github.com/kubernetes/ingress-nginx/blob/dfa7f10fc9691a3be90fd30cb458b64b617ef440/rootfs/etc/nginx/lua/balancer/resty.lua#L17 that always returns true for some reason (i.e. it thinks the list of endpoints changed) and therefore re-initializes the roundrobin instance.
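If the change check does misfire, one possible cause (an assumption for illustration, not a diagnosis of the linked resty.lua code) is comparing endpoint lists by order or by identity rather than by content. The hypothetical helper below reports a change only when the multiset of address:port pairs differs, so an unchanged or merely reordered list would not reset the round-robin position. The field names address and port are assumed for illustration.

```python
def endpoint_key(ep: dict) -> tuple[str, str]:
    # Identify an endpoint by address and port only; other fields are ignored.
    # Port is normalized to str so 8080 and "8080" compare equal.
    return (ep["address"], str(ep["port"]))


def endpoints_changed(old: list[dict], new: list[dict]) -> bool:
    """Return True only if the multiset of endpoints differs (order-insensitive)."""
    return sorted(map(endpoint_key, old)) != sorted(map(endpoint_key, new))


if __name__ == "__main__":
    a = [{"address": "10.0.0.1", "port": "8080"}, {"address": "10.0.0.2", "port": "8080"}]
    print(endpoints_changed(a, list(reversed(a))))  # False: same endpoints, new order
    print(endpoints_changed(a, a[:1]))  # True: an endpoint was removed
```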

I'll add some info logging in there to give some visibility.

We also have an e2e test covering round-robin load balancing in test/e2e/loadbalance/round_robin.go; feel free to try to reproduce this issue with it.

Hi @ElvinEfendi thanks for the follow up on this and the additional info.

We are seeing this issue only during the rollout of a Deployment, when the list of backend.endpoints is continuously updated by the deployment controller and the NGINX backends list is therefore refreshed.

I looked into the e2e tests for round robin and yes, it distributes requests evenly. I'm not arguing with the algorithm _per se_ within a single ingress instance, only that it can be a problem if the list is exactly the same for multiple ingress instances, which seems to be the case.

Imagine an ingress deployment of 50 pods sending requests to a backend with 5 pods. During a deployment rollout, as soon as the configuration changes, the first request arriving at each of the 50 ingress pods is routed to the first pod in the backend list. At first glance this might seem harmless, but if the service is tuned for 10 concurrent requests and suddenly gets 50, it may be overloaded. This repeats every time the rollout updates its state, so the overload gets even worse.
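The arithmetic of this scenario can be sketched as follows, assuming every ingress pod resets its round robin to index 0 at the same configuration change and then sends k requests before the next change. The helper name is hypothetical.

```python
def first_wave_load(ingress_pods: int, backends: int, requests_per_ingress: int) -> list[int]:
    """Requests per backend when every ingress pod starts round robin at index 0
    and sends `requests_per_ingress` requests before the next reload."""
    load = [0] * backends
    for _ in range(ingress_pods):
        for i in range(requests_per_ingress):
            load[i % backends] += 1
    return load


if __name__ == "__main__":
    print(first_wave_load(50, 5, 1))  # [50, 0, 0, 0, 0]
```

With the numbers above, the first backend receives all 50 first-wave requests, five times the 10-concurrent-request budget if they arrive together, while the other four pods receive none. Only when each ingress pod sends a multiple of 5 requests between changes does the load come out even.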

Personally, I don't have enough Lua skills (yet! 😄) to help debug or improve this, so we can only report the same kind of data provided by @dmxlsj (showing that during a deployment some pods receive more requests and some fewer)

and suggest reimplementing, in the round_robin module, a shuffle of the pod list before it is used as the list of IPs to balance.
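A minimal sketch of the suggested shuffle, assuming each ingress replica (or NGINX worker) shuffles its own copy of the endpoint list once, with a Fisher-Yates shuffle, before round-robining over it. This is an illustration in Python, not the change made in lua-resty-balancer or ingress-nginx; the point is that replicas then start from different endpoints, so a simultaneous reload no longer sends every replica's first request to the same pod. The function names are hypothetical.

```python
import random
from collections import Counter


def fisher_yates(items: list, rng: random.Random) -> list:
    """Return a shuffled copy of items (Fisher-Yates / Knuth shuffle)."""
    out = list(items)
    for i in range(len(out) - 1, 0, -1):
        j = rng.randint(0, i)  # inclusive on both ends
        out[i], out[j] = out[j], out[i]
    return out


def first_picks(ingress_pods: int, endpoints: list[str], seed: int = 0) -> Counter:
    """Which endpoint each ingress replica picks first after a reload,
    when every replica round-robins over its own shuffled copy."""
    rng = random.Random(seed)
    return Counter(fisher_yates(endpoints, rng)[0] for _ in range(ingress_pods))


if __name__ == "__main__":
    pods = [f"pod-{i}" for i in range(5)]
    print(first_picks(1000, pods))
```

With a shared, unshuffled list every replica's first pick would be pod-0; with per-replica shuffling the first picks spread roughly evenly across all five pods.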

Thanks for more context @inge4pres. We are still not sure whether this is the reason the OP of this thread is seeing the issue, but I agree that we should fix the scenario you described above.

I created https://github.com/openresty/lua-resty-balancer/pull/26 to tackle it.

I agree with you both on putting this behind a flag, and thanks for the new issue!

I'll follow up in there and see if I can help 😄

@dmxlsj @inge4pres please test quay.io/kubernetes-ingress-controller/nginx-ingress-controller:dev

That image contains #4225 (with the fix @ElvinEfendi mentioned)

Hello @aledbf and all,

Apologies for my late response. Is the dev tag still valid? I am planning to upgrade the nginx-ingress-controller container to 0.25.0 this week, so I wonder whether the fix in that dev image is included in 0.25.0.

@dmxlsj yes, please use 0.25.0.

Thanks, I will test the Lua balancer and let you know.

Closing. Please update to 0.26.0 and reopen the issue if the problem persists.
