Darknet: Is there a pixel shuffle layer?

BTW, is there a doc that introduces the API?

No. See https://github.com/AlexeyAB/darknet/blob/master/src/upsample_layer.c. It is a resize, not a reshape.

@AlexeyAB

Is there a guide on how to write a custom layer?

@AlexeyAB

Could you give a guide on how to write a custom layer? I want to implement a pixel shuffle layer.

Thanks a lot.

@Francis-Xia Hi,

If you want to add your custom layer:

- Add `new_layer.c` and `new_layer.h` files to the directory `darknet/src/`
- Add `#include "new_layer.h"` here: https://github.com/AlexeyAB/darknet/blob/b751bac17505a742f149ada81d75689b5e692cde/src/parser.c#L37
- Add lines for your new layer in these places:
  - `if (strcmp(type, "[newlayer]") == 0) return NEWLAYER;` https://github.com/AlexeyAB/darknet/blob/b751bac17505a742f149ada81d75689b5e692cde/src/parser.c#L79
  - `layer parse_newlayer(list *options, size_params params, network net) { .. }` https://github.com/AlexeyAB/darknet/blob/b751bac17505a742f149ada81d75689b5e692cde/src/parser.c#L572
  - `}else if (lt == NEWLAYER) { l = parse_newlayer(options, params, net);` https://github.com/AlexeyAB/darknet/blob/b751bac17505a742f149ada81d75689b5e692cde/src/parser.c#L821-L822

And implement these functions in `new_layer.c`:

- `layer make_newlayer_layer()`
- `void resize_newlayer_layer(layer *l, int w, int h)`
- `void forward_newlayer_layer(layer l, network_state state)`
- `void backward_newlayer_layer(layer l, network_state state)`
- `void update_newlayer_layer(layer l, int batch, float learning_rate, float momentum, float decay)`
- `void forward_newlayer_layer_gpu(layer l, network_state state)`
- `void backward_newlayer_layer_gpu(layer l, network_state state)`
- `void update_newlayer_layer_gpu(layer l, int batch, float learning_rate_init, float momentum, float decay)`
- `void pull_newlayer_layer(layer layer)`
- `void push_newlayer_layer(layer layer)`
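The name-to-type step in the list above can be sketched in isolation. This is a simplified stand-in, not the code from parser.c: the enum here has only four entries, and everything except the `[newlayer]` line and the `NEWLAYER` constant quoted above is an assumption made for illustration.

```c
#include <string.h>

/* Simplified stand-in for darknet's layer type enum (assumption:
   the real enum has many more entries). */
typedef enum { CONVOLUTIONAL, REORG, NEWLAYER, BLANK } LAYER_TYPE;

/* Map a cfg section name such as "[newlayer]" to a layer type,
   mirroring the strcmp chain described above. */
LAYER_TYPE string_to_layer_type(const char *type)
{
    if (strcmp(type, "[convolutional]") == 0) return CONVOLUTIONAL;
    if (strcmp(type, "[reorg]") == 0) return REORG;
    if (strcmp(type, "[newlayer]") == 0) return NEWLAYER; /* the added line */
    return BLANK; /* unknown section names fall through */
}
```

Once the type is recognized, the parser's `else if (lt == NEWLAYER)` branch is what hands the section's options to `parse_newlayer`.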

@Francis-Xia

What is the shuffle layer? Is it in Caffe? http://caffe.berkeleyvision.org/tutorial/layers.html

There is a [reorg] layer (it isn't the Caffe reshape layer; it changes [w,h,c] too, but also shuffles elements): https://github.com/AlexeyAB/darknet/blob/master/src/reorg_layer.c

It is the fixed version of [old_reorg], the layer from the original repository: https://github.com/pjreddie/darknet/blob/master/src/reorg_layer.c

If you have time, you can read about the old reorg layer here: https://github.com/opencv/opencv/pull/9705#discussion_r143136536

@AlexeyAB

Pixel shuffle is a kind of upsample layer; its PyTorch source code is at the following link:

https://pytorch.org/docs/stable/_modules/torch/nn/modules/pixelshuffle.html

Thanks a lot.
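For reference, here is a minimal C sketch of the rearrangement that pixel shuffle performs on a single image in CHW layout. It assumes the usual rule out[c][h*r + i][w*r + j] = in[c*r*r + i*r + j][h][w]; the function name and signature are hypothetical and do not come from darknet or PyTorch.

```c
#include <stddef.h>

/* Pixel shuffle for one image stored as CHW floats.
   in  has shape (c_out * r * r, h, w)
   out has shape (c_out, h * r, w * r)
   pixel_shuffle_chw is a hypothetical name used only for this sketch. */
void pixel_shuffle_chw(const float *in, float *out,
                       int c_out, int h, int w, int r)
{
    int out_h = h * r;
    int out_w = w * r;
    for (int c = 0; c < c_out; ++c) {
        for (int i = 0; i < r; ++i) {          /* row offset inside the r x r block */
            for (int j = 0; j < r; ++j) {      /* column offset inside the block */
                int c_in = c * r * r + i * r + j;
                for (int y = 0; y < h; ++y) {
                    for (int x = 0; x < w; ++x) {
                        size_t src = ((size_t)c_in * h + y) * w + x;
                        size_t dst = ((size_t)c * out_h + (y * r + i)) * out_w
                                     + (x * r + j);
                        out[dst] = in[src];
                    }
                }
            }
        }
    }
}
```

Each group of r*r input channels becomes one output channel whose height and width are r times larger, which is why the operation upsamples without interpolation.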

Solved and thanks.

PixelShuffle is the same as the new [reorg] layer in this repository.

@AlexeyAB

Could you give me an example of how to use the [reorg] layer? Thanks in advance.

I tried the new [reorg] layer, but I think it is not a pixel shuffle layer. I want to upsample, not downsample.

@AlexeyAB

I am sorry. I solved the problem. Thanks a lot.

BTW, the solution is displayed below:

Upsampling:

[reorg]
stride=2
reverse=1

Downsampling:

[reorg]
stride=2
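The shape arithmetic behind these two [reorg] variants can be sketched as follows. This assumes that `reverse=1` multiplies width and height by `stride` and divides the channel count by `stride*stride`, and that the default does the opposite; `shape3` and `reorg_out_shape` are hypothetical names for illustration, not darknet identifiers.

```c
/* Hypothetical helper type: width, height and channel count of a tensor. */
typedef struct { int w, h, c; } shape3;

/* Output shape of a [reorg] section under the assumption stated above:
   reverse != 0 upsamples, reverse == 0 downsamples. */
shape3 reorg_out_shape(shape3 in, int stride, int reverse)
{
    shape3 out;
    if (reverse) {
        out.w = in.w * stride;
        out.h = in.h * stride;
        out.c = in.c / (stride * stride);
    } else {
        out.w = in.w / stride;
        out.h = in.h / stride;
        out.c = in.c * (stride * stride);
    }
    return out;
}
```

For example, under this assumption a 13x13x1024 input with `stride=2` and `reverse=1` becomes 26x26x256, while a 26x26x64 input with `stride=2` alone becomes 13x13x256.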

Dear @AlexeyAB :

Could you tell me where I can find reorg_cpu()?

Thanks in advance.

Francis

@Francis-Xia

https://github.com/AlexeyAB/darknet/blob/master/src/blas.c#L9-L33

@AlexeyAB

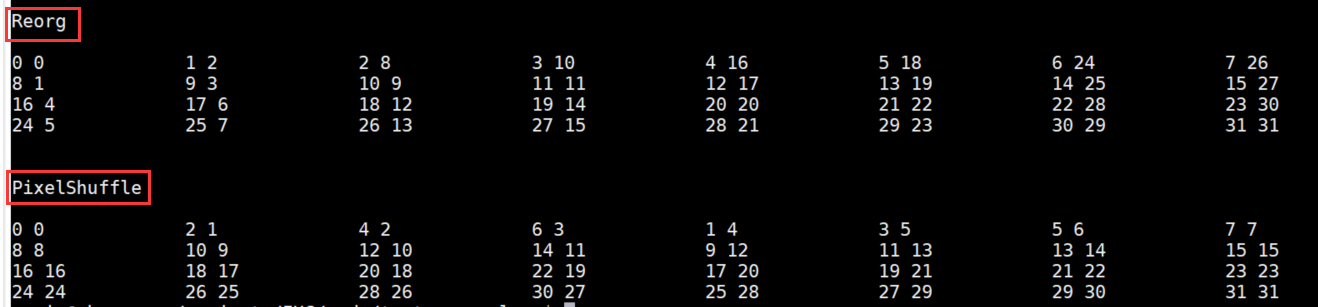

I tested the reorg layer from darknet against the reshape layer from PyTorch, and I found that they give different results.

@Francis-Xia

Which of these did you compare the reorg layer against?

1. np.reshape https://docs.scipy.org/doc/numpy-1.15.1/reference/generated/numpy.reshape.html
2. torch.reshape https://pytorch.org/docs/stable/torch.html
3. torch.view() or t.permute() https://discuss.pytorch.org/t/equivalent-of-np-reshape-in-pytorch/144/17
4. torch.nn.PixelShuffle() https://pytorch.org/docs/stable/_modules/torch/nn/modules/pixelshuffle.html and https://pytorch.org/docs/0.1.12/nn.html?highlight=pixel%20shuffle#torch.nn.PixelShuffle

...

@AlexeyAB

Sorry for my late response.

I use option 4, torch.nn.PixelShuffle().

Dear @AlexeyAB

I implemented pixelshuffle; however, the performance dropped by around 20%. To implement the layer quickly, I just replaced the key part of the reorg layer in reorg_cpu and reorg_kernel with pixelshuffle operations.

Both layers only rearrange the tensor, in different orders, with no other operations, so I would expect their performance to be similar. Could you help me?

@Francis-Xia Show your implementation.

@AlexeyAB

I only changed the key parts of the two functions above.

Dear @AlexeyAB

Is it correct to replace only the key part of the reorg layer [the functions _reorg_kernel_ and _reorg_cpu_]? Furthermore, I got an unusual result. I trained my net without _-map_ for 100000 iterations and got mAP around 60%. Then I loaded those weights and continued training with _-map_; after 1000 iterations, the mAP was around 25%. That is an unexpected result, right?

@AlexeyAB

I am sorry, my implementation was wrong, and I am now testing the corrected one.

int ho = ht * stride + t / (w/stride);

int wo = wt * stride + t % (w/stride);

=>

int ho = ht * stride + t / stride;

int wo = wt * stride + t % stride;
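To see why the divisor matters, here is a small sketch of the corrected mapping. It assumes that `ht` and `wt` index a cell of the low-resolution grid and that `t` runs from 0 to stride*stride - 1 over the positions inside one stride x stride block; those meanings and the helper name `shuffle_target` are assumptions, since the thread shows only the two lines.

```c
/* Hypothetical helper: compute the output row and column for
   sub-position t of the low-resolution cell (ht, wt). */
void shuffle_target(int ht, int wt, int t, int stride, int *ho, int *wo)
{
    *ho = ht * stride + t / stride; /* row offset in 0 .. stride-1 */
    *wo = wt * stride + t % stride; /* column offset in 0 .. stride-1 */
}
```

Under these assumptions, the earlier version fails whenever w/stride differs from stride. With w = 8 and stride = 2, `t / (w/stride)` is `t / 4`, which is 0 for every t in 0..3, so the row offset never reaches 1, and `t % 4` yields column offsets up to 3, which fall outside the 2x2 block.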

@AlexeyAB

I completed testing. The pixelshuffle layer is lower than the reorg layer and the specific value is around 4%.

@Francis-Xia

Do you mean that pixelshuffle is 4 percent (1.04x) slower than reorg?

Or does it give 4% lower mAP?

@AlexeyAB

It gives 4% lower mAP

@Francis-Xia

Also, you can try to compare:

- [old_reorg]: this is the same as [reorg] from the original repository: https://github.com/pjreddie/darknet
- [reorg]: this is the fixed reorg in my repository

[reorg] is better than [old_reorg].

Dear @AlexeyAB

The old version is exactly pixelshuffle. Could you tell me why the new version is better than the old version?

Thanks in advance.

@Francis-Xia

As I wrote, [old_reorg] was made with mistakes: https://github.com/AlexeyAB/darknet/issues/2336#issuecomment-470918580

Discussion: https://github.com/opencv/opencv/pull/9705#discussion_r143136536

[reorg] in my repository is the fixed layer; it gives higher mAP.

I am sorry to say that the old version of the reorg layer and pixelshuffle are different. I also find that your reorg is better than pixelshuffle [that is, the reshape layer in Caffe].

@AlexeyAB

I completed testing, and the Caffe reshape layer performs better than the reorg layer. However, the effect is slight [~0.5%].

@Francis-Xia

Is the Caffe reshape layer faster or more accurate?

As I remember, the Caffe reshape layer doesn't shuffle inputs in memory; it just changes the shape from w,h,c to w/2,h/2,c*4.
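The difference between a pure reshape and a shuffle can be sketched like this, assuming the reshape behaves as described above: only the shape metadata changes, and the flat buffer is left untouched. The `dims` type and the helper names are hypothetical and are not taken from Caffe or darknet.

```c
#include <stddef.h>

/* Hypothetical shape descriptor: width, height, channels. */
typedef struct { int w, h, c; } dims;

/* A pure reshape: new metadata only, no element is moved. */
dims reshape_half(dims d)
{
    dims r = { d.w / 2, d.h / 2, d.c * 4 };
    return r;
}

/* Total element count, identical before and after the reshape. */
size_t num_elements(dims d)
{
    return (size_t)d.w * d.h * d.c;
}

/* Flat offset of (x, y, ch) in a CHW buffer with shape d. */
size_t flat_index(dims d, int x, int y, int ch)
{
    return ((size_t)ch * d.h + y) * d.w + x;
}
```

Because the buffer is unchanged, element k of the input is still element k of the output; only the (x, y, ch) coordinates that `flat_index` assigns to it differ. A shuffle layer such as reorg instead copies elements to new offsets.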

@AlexeyAB

You are right, it is more accurate [~0.5%].