Darknet: Why is FN a negative number and recall > 1?

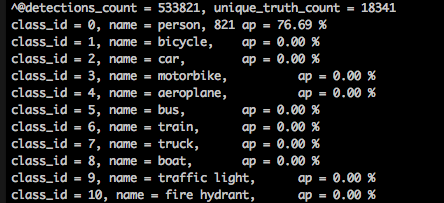

I use "detector map" to evaluate the pretrained yolov3.weights on a person dataset where only persons are annotated. The result looks like this:

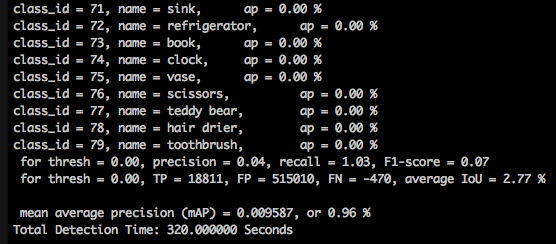

Other classes are all zeros, which is reasonable, but in the end FN is a negative number and recall > 1. I guess some detections hit the same ground truth (GT), and FN is calculated as unique_truth_count - TP.

I think it's better to record whether each GT is detected and define FN as the number of GTs that are not detected.
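To make the proposal concrete, here is a minimal Python sketch. It is not Darknet's actual C code. The helper names `iou`, `count_naive`, and `count_unique`, the `(x1, y1, x2, y2)` box format, and the 0.5 IoU threshold are all assumptions made for illustration. `count_naive` mimics the suspected behaviour, where every detection that overlaps any GT counts as a TP. `count_unique` keeps a per-GT flag so each GT can be matched at most once.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def count_naive(dets, truths, thresh=0.5):
    """Suspected buggy counting: several detections may hit the same GT."""
    tp = sum(1 for d in dets if any(iou(d, t) > thresh for t in truths))
    fp = len(dets) - tp
    fn = len(truths) - tp  # can go negative when tp > len(truths)
    return tp, fp, fn


def count_unique(dets, truths, thresh=0.5):
    """Proposed counting: each GT is matched by at most one detection."""
    matched = [False] * len(truths)
    tp = fp = 0
    for d in dets:  # assumed to be sorted by descending confidence
        best_j, best_iou = -1, thresh
        for j, t in enumerate(truths):
            v = iou(d, t)
            if not matched[j] and v > best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1  # duplicate hit or no overlap
    fn = matched.count(False)  # GTs that were never detected
    return tp, fp, fn


# Two GT boxes; three detections that all overlap the first GT.
truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0, 0, 10, 10), (1, 0, 10, 10), (0, 1, 10, 10)]

print(count_naive(dets, truths))   # (3, 0, -1): recall would be 3/2 = 1.5
print(count_unique(dets, truths))  # (1, 2, 1):  recall is 1/2 = 0.5
```

With the per-GT flag, duplicate detections become false positives, and TP + FN always equals the number of GT boxes.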


> FN is a negative number and recall > 1. I guess some detections hit the same ground truth

There were multiple hits on the same GT, so TP/FP/FN/IoU could be calculated incorrectly. (The mAP itself was fine.)

I fixed it. Now I check whether each GT was already detected. In every case, unique_truth_count = TP + FN should hold.
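As a short worked derivation of why this invariant matters (writing $N$ for unique_truth_count, and assuming recall is defined in the usual way as $TP / (TP + FN)$):

$$
FN = N - TP
\quad\Longrightarrow\quad
\text{recall} = \frac{TP}{TP + FN} = \frac{TP}{N}.
$$

If each GT can contribute at most one TP, then $TP \le N$, so $FN \ge 0$ and $\text{recall} \le 1$. If several detections can each be counted as a TP for the same GT, $TP$ can exceed $N$, which gives $FN < 0$ and $\text{recall} > 1$, as reported in the question.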

What TP/FP/FN/IoU do you get now?

I was running into the same problem; it appears to have been fixed now.

Yes, it goes well now, thanks!

I'm very glad to see that the recall of the benchmark weights decreased by nearly half, and my newly trained weights appear to work a little :)
