Virtual-environments: pip installation of large Python libraries failing

Describe the bug

Hi. In the last week we (the pyhf dev team) have started seeing many CI jobs fail when trying to install large Python libraries like TensorFlow and PyTorch. An example log snippet for such a failure is

python -m pip install --upgrade pip setuptools wheel

python -m pip install --ignore-installed -U -q --no-cache-dir -e .[test]

python -m pip list

shell: /bin/bash -e {0}

shell: /bin/bash -e {0}

env:

pythonLocation: /opt/hostedtoolcache/Python/3.8.5/x64

Collecting pip

Downloading pip-20.2-py2.py3-none-any.whl (1.5 MB)

Collecting setuptools

Downloading setuptools-49.2.0-py3-none-any.whl (789 kB)

Collecting wheel

Downloading wheel-0.34.2-py2.py3-none-any.whl (26 kB)

Installing collected packages: pip, setuptools, wheel

Attempting uninstall: pip

Found existing installation: pip 20.1.1

Uninstalling pip-20.1.1:

Successfully uninstalled pip-20.1.1

Attempting uninstall: setuptools

Found existing installation: setuptools 47.1.0

Uninstalling setuptools-47.1.0:

Successfully uninstalled setuptools-47.1.0

Successfully installed pip-20.2 setuptools-49.2.0 wheel-0.34.2

ERROR: THESE PACKAGES DO NOT MATCH THE HASHES FROM THE REQUIREMENTS FILE. If you have updated the package versions, please update the hashes. Otherwise, examine the package contents carefully; someone may have tampered with them.

torch~=1.2; extra == "test" from https://files.pythonhosted.org/packages/8c/5d/faf0d8ac260c7f1eda7d063001c137da5223be1c137658384d2d45dcd0d5/torch-1.6.0-cp38-cp38-manylinux1_x86_64.whl#sha256=5357873e243bcfa804c32dc341f564e9a4c12addfc9baae4ee857fcc09a0a216 (from pyhf==0.1.dev1):

Expected sha256 5357873e243bcfa804c32dc341f564e9a4c12addfc9baae4ee857fcc09a0a216

Got c3eb38095bfa5caae1adaf1e8543872fe572586a9589ccd65de8368fd71763a0

##[error]Process completed with exit code 1.

The errors in CI from

python -m pip install --upgrade pip setuptools wheel

python -m pip install --ignore-installed -U -q --no-cache-dir -e .[test]

cannot be reproduced in a clean virtual environment with the same commands. This happens only on the GitHub Actions CI runners (we can't reproduce it locally or in Docker images, and as far as we can tell there is nothing wrong with tensorflow or torch). Can you advise what the issue might be and whether there are other reports of it? We can supply other examples if that is helpful.
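One way to narrow this down is to hash a downloaded wheel independently of pip and compare the result with the digest pip expected. The following is a minimal sketch, not something taken from the CI workflow itself: the helper names `sha256_of` and `matches_expected` and the local file path are hypothetical, and only the expected digest comes from the log.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_expected(path, expected_hex):
    """Compare a file's digest with an expected hex string (case-insensitive)."""
    return sha256_of(path) == expected_hex.strip().lower()


if __name__ == "__main__":
    # Digest that pip expected for the torch wheel, copied from the error log.
    expected = "5357873e243bcfa804c32dc341f564e9a4c12addfc9baae4ee857fcc09a0a216"
    # Hypothetical local path: point this at a wheel you downloaded yourself.
    wheel = Path("torch-1.6.0-cp38-cp38-manylinux1_x86_64.whl")
    if wheel.exists():
        print("match" if matches_expected(wheel, expected) else "MISMATCH")
    else:
        print(f"{wheel} not found; download it first")
```

If the digest differs between repeated downloads of the same URL, the file is probably being corrupted in transit or on the runner rather than on the index.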

Area for Triage: Python

Question, Bug, or Feature?: Bug

Virtual environments affected

- [x] macOS 10.15

- [ ] Ubuntu 16.04 LTS

- [ ] Ubuntu 18.04 LTS

- [x] Ubuntu 20.04 LTS

- [ ] Windows Server 2016 R2

- [ ] Windows Server 2019

Expected behavior

The same as a few weeks ago: all of our required libraries can be installed from PyPI with

python -m pip install --upgrade pip setuptools wheel

python -m pip install --ignore-installed -U -q --no-cache-dir -e .[test]

Actual behavior

Running the CI for pyhf (https://github.com/scikit-hep/pyhf/blob/f584dab9d984ac03d09764001532acca268563c4/.github/workflows/ci.yml#L25-L29) on most recent runs results in this behavior. An example of a recent one is: https://github.com/scikit-hep/pyhf/runs/941561793?check_suite_focus=true. I've attached the full log archive here as well: logs_9415.zip

If we can provide more actionable feedback please let us know as we're happy to help.

cc @lukasheinrich @kratsg

All 23 comments

@github-actions Sorry but this needs to get the Area: Python label added too (I forgot to add that on before I clicked).

Hi @matthewfeickert, thank you for your report. I was able to reproduce the problem: it occurs intermittently on various Ubuntu versions and various Python versions. The cause is not yet clear, and we will continue to investigate it.

Thanks very much @vsafonkin. If there is any additional information that we can provide please let us know, as we'd be most happy to help.

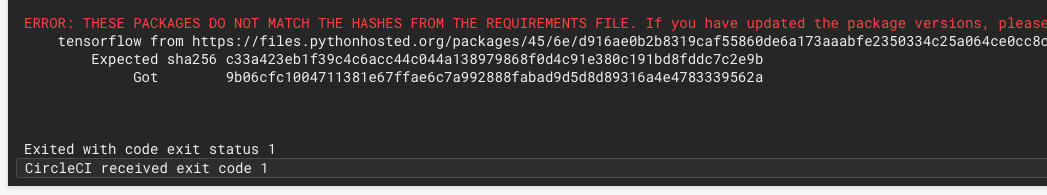

FYI: I'm observing a similar issue in CircleCI:

https://app.circleci.com/pipelines/github/optuna/optuna/1855/workflows/f56ad71d-2bff-4fb3-9a31-3e4567f37f5a/jobs/51057

Do you have a public link? This requires login to CircleCI

@matthewfeickert I don't. I'm not sure if CircleCI provides links that can be viewed without logging in (you can log in with your GitHub account though). Here's a screenshot of the error.

We have the same problem. Could this have to do with the cache action?

This issue appears even when the package is downloaded with wget from a direct link. It looks like the connection is being aborted by the remote host.

Related issue in the pip repository: https://github.com/pypa/pip/issues/8510

I've been experiencing the same issue in GitHub Actions CI.

Checksum verification fails on large libraries (mainly xgboost, numpy)

Experiencing the same problem.

- Only happens for large libraries

- Only happens for matrix-based runs

- Only happens when installing multiple packages (e.g. via `poetry install` or `pip install -r req.txt`), and not when installing a single package directly.

My guess: the matrix-based machines share an IP and end up trying to download the same packages (and many packages) at the same time. This triggers some type of DDoS protection on the PyPI side.

@adriangb, there is no difference between matrix-based machines and a single machine. Matrix-based builds just pick up a few random machines instead of a single one.

Your guess about a few machines trying to download the same package at the same time sounds reasonable (I believe machines in CI don't share an IP, but I need to double-check).

We will get back when we have any updates

I guess using a matrix is an easy way to end up with multiple machines trying to install the same package at the same time. Thanks for looking into this!

Linking this comment as it is relevant: https://github.com/actions/virtual-environments/issues/1343#issuecomment-672735255

Basically, one of the pypi.org admins checked and did not see anything correlated with the issues we see.

This issue no longer reproduces for me. Could you please check if you still see the same issue?

@matthewfeickert @harupy @hodisr @jonasrauber

@vsafonkin Same here. I haven't seen the issue for a week.

@vsafonkin We seem to be good now. Thanks for the work everyone.

Same here, all our last builds have been carrying on as expected. 👍

Unfortunately, we have not been able to find the root cause of this issue; perhaps it was fixed on the PyPI side. I've closed this issue, but please feel free to reopen it if the problem appears again. Thanks!

Having the same problem again. Random packages are affected.

For further investigation, I have tried to download pycryptodome using wget:

Each time, I get a different file. Same size (13688561). unzip -t reports between 9 and 31 CRC errors for 20 downloads, at varying positions.

Maybe faulty RAM?
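The `unzip -t` check described in this comment can also be done from Python, which makes it easier to run across many downloaded copies. This is a rough sketch using the standard-library `zipfile` module; the function name `find_bad_members` is hypothetical, and it assumes the archive's central directory is still readable (otherwise `zipfile.ZipFile` itself raises `BadZipFile`).

```python
import zipfile
import zlib


def find_bad_members(archive_path):
    """Return the names of archive members that fail decompression or CRC checks."""
    bad = []
    with zipfile.ZipFile(archive_path) as archive:
        for info in archive.infolist():
            try:
                with archive.open(info) as member:
                    # Reading to the end makes zipfile verify the stored CRC-32.
                    while member.read(1 << 20):
                        pass
            except (zipfile.BadZipFile, zlib.error):
                bad.append(info.filename)
    return bad
```

Since a wheel is a zip archive, running this on each downloaded copy and comparing the lists shows whether the damage lands in the same members every time or moves around, as reported here.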

Hey, @pfifltrigg !

Tried to reproduce your problem, but all downloads were successful,

80 threads downloading this package 200 times, checking sha256, unzip check

I guess this is a really flaky problem. We are currently investigating it; if you have any logs or pipelines that need a look, please share them if possible.

Thank you!
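The kind of stress test described in this comment (many parallel downloads of one file, each checked against its SHA-256) could be sketched roughly as below. This is not the script that was actually used; `fetch_bytes` and `digest_histogram` are hypothetical names, the thread and attempt counts are arbitrary defaults, and the `fetch` parameter is there only so the logic can be exercised without network access.

```python
import hashlib
import urllib.request
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def fetch_bytes(url):
    """Download a URL fully into memory (acceptable for wheel-sized files)."""
    with urllib.request.urlopen(url, timeout=60) as response:
        return response.read()


def digest_histogram(url, attempts=20, workers=4, fetch=fetch_bytes):
    """Download `url` several times in parallel and count the distinct digests."""

    def one_attempt(_):
        return hashlib.sha256(fetch(url)).hexdigest()

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return Counter(pool.map(one_attempt, range(attempts)))
```

A healthy run yields a histogram with a single key; more than one distinct digest for the same URL means at least some downloads arrived corrupted.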

@LeonidLapshin :

It looks like it's a local problem with Debian running in VirtualBox. Downloads on the host system are fine, while simultaneous downloads on the guest system produce errors. After some fiddling and a reboot, everything is working again, at least for now. Sigh. Sorry for any inconvenience.

@pfifltrigg thank you for the clarification. Please reopen this ticket if you still have the problem :)
