Transformers: Sequence Classification pooled output vs last hidden state

❓ Questions & Help

Why in BertForSequenceClassification do we pass the pooled output to the classifier as below from the source code

outputs = self.bert(input_ids,

attention_mask=attention_mask,

token_type_ids=token_type_ids,

position_ids=position_ids,

head_mask=head_mask)

pooled_output = outputs[1]

pooled_output = self.dropout(pooled_output)

logits = self.classifier(pooled_output)

but in RobertaForSequenceClassification we do not seem to pass the pooler output?

outputs = self.roberta(input_ids,

attention_mask=attention_mask,

token_type_ids=token_type_ids,

position_ids=position_ids,

head_mask=head_mask)

sequence_output = outputs[0]

logits = self.classifier(sequence_output)

I thought we would pass the pooled_output in both cases to the classifier?

All 13 comments

Both would probably work, but I agree that streamlining is a good idea. In their paper, BERT gets the best results by concatenating the last four layers, so what I always use is something like this (from the top of my head):

outputs = self.bert(input_ids,

attention_mask=attention_mask,

token_type_ids=token_type_ids,

position_ids=position_ids,

head_mask=head_mask)

hidden_states = outputs[1]

pooled_output = torch.cat(tuple([hidden_states[i] for i in [-4, -3, -2, -1]]), dim=-1)

pooled_output = pooled_output[:, 0, :]

pooled_output = self.dropout(pooled_output)

# classifier of course has to be 4 * hidden_dim, because we concat 4 layers

logits = self.classifier(pooled_output)

I might put a pre_classifier and an activation function before the drop out depending on the case.

This is very helpful. Thanks @BramVanroy for the ideas

@BramVanroy Thanks for the solution, but I think you meant writing hidden_states = outputs[2] instead of pooled_output = outputs[1], right?

@mkaze I think you are talking about TFBertModel which has hidden_states at index 2, but OP is talking about TFBertForSequenceClassification which has hidden_states at index 1, so we need to use index 1. @BramVanroy is this correct?

@BramVanroy also, is it useful to use outputs[1] as in your code example with the RobertaForSequenceClassification and TFDistilBertForSequenceClassification models?

@mkaze @don-prog My variables were badly named, indeed. In BertForSequenceClassification, the hidden_states are at index 1 (if you provided the option to return all hidden_states) and if you are not using labels. At index 2 if you did pass the labels.

I do not know the position of hidden states for the other models by heart. Just read through the documentation and look at the forward method. There you can see under "returns" what is returned at which index.

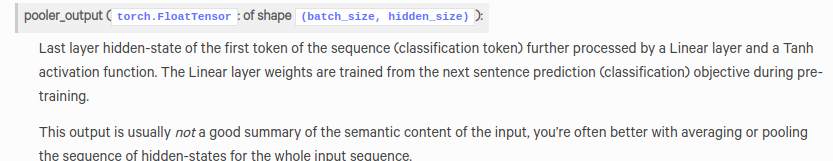

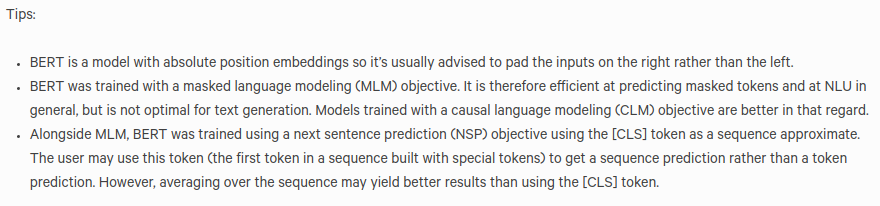

@BramVanroy @don-prog The weird thing is that the documentation claims that the pooler_output of BERT model is not a good semantic representation of the input, one time in "Returns" section of forward method of BertModel (here):

and another one at the third tip in "Tips" section of "Overview" (here):

However, despite these two tips, the pooler output is used in implementation of BertForSequenceClassification (here).

Interestingly, when I used their suggestion, i.e. using the average of hidden-states for sequence classification instead of pooler output, I got a worse result. I asked about this a few months ago in issue #4048, but unfortunately no one provided an explanation.

@BramVanroy Many thanks for the quick reply! So, this is my usage of the last TFDistilBertModel 4 hidden states in the TensorFlow:

def create_model():

input_ids = tf.keras.Input(shape=(100,), dtype='int32')

transformer = TFDistilBertModel.from_pretrained('distilbert-base-uncased', output_hidden_states=True)(input_ids)

print(len(transformer)) #2

print(len(transformer[1])) #7

hidden_states = transformer[1]

merged = tf.keras.layers.concatenate(tuple([hidden_states[i] for i in [-4, -3, -2, -1]]))

output = tf.keras.layers.Dense(32,activation='relu')(merged)

output = tf.keras.layers.Dropout(0.1)(output)

output = tf.keras.layers.Dense(1, activation='sigmoid')(output)

model = tf.keras.models.Model(inputs = input_ids, outputs = output)

model.compile(tf.keras.optimizers.Adam(lr=6e-6), loss='binary_crossentropy', metrics=['accuracy'])

return model

Is this this correct representation of your PyTorch code in the TensorFlow(except for the difference in additional layers)?

@mkaze Yes, this is always something that comes up for discussion. I think the only correct answer here is (as so often): try it out and see what works best in your scemario. Results will differ between different projects, depending on the task, training steps, dataset, and so on. There is no one right answer. You may even decide to use maxpooling rather than average pooling. There are loads of things to try if you really want to. But generally speaking, you should get good results with either CLS or averaging over tokens.

@don-prog Unfortunately I am not very familiar with TF so I fear I cannot help you with that. Try it out, and keep track of the sizes of the tensors that are passed through (or just have a look at the graph of your model). If those are correct, then I think it's fine. You can ask your question on the forums, maybe someone can help you out there.

I think the classification for robertaforsequenceclassification is the RobertaClassificationHead, which takes the CLS embedding for classification

https://github.com/huggingface/transformers/blob/master/src/transformers/modeling_roberta.py#L957

I also found that AlBERT takes pooler result as bert, but distillbert has something different

https://github.com/huggingface/transformers/blob/master/src/transformers/modeling_distilbert.py#L607-L610

just wondering if huggingface plans to consolidate this part for the sequence classification?

@DanqingZ Probably not. Most often these implementation are specific to how the original paper implemented them for downstream tasks. In that sense, it is normal that they differ. If you want to create your own one, as I did before, you can simply create a custom SequenceClassificationHead that works with any PretrainedModel's output. It is quite simple, so I don't think the library should provide this.

@BramVanroy yeah, I can do that.

But imagine a scenario. If I want to inherit the AutoModelForSequenceClassification, and add my own components to different types of model(bert, roberta, distillbert). If huggingface could make classifier have the same meaning and usage, it will be easier for other people to make downstream changes for multiple models at the same time, like adding label attention layer etc. The classifier is a bit misleading now, like roberta has pooler within the classifier while bert has pooled output.

Yeah I agree that if one has enough time to dig into details then it should be easy for them to make changes, but it is just less intuitive for people who just start using huggingface transformers.

@DanqingZ I understand what you mean, but these implementations are not necessarily chosen by HuggingFace. Those are the original implementations in the paper by the authors. It is therefore not possible that they are all the same and they will not be changed.

If you want to add the functionality that you want, I would recommend writing your own extension to transformers. The process will teach you a lot about how PyTorch models work in general and how this library functions specifically. Yes, it will take a while, but it is the only solution.

Most helpful comment

Both would probably work, but I agree that streamlining is a good idea. In their paper, BERT gets the best results by concatenating the last four layers, so what I always use is something like this (from the top of my head):

I might put a pre_classifier and an activation function before the drop out depending on the case.