Transformers: Unable to get hidden states and attentions from BertForSequenceClassification

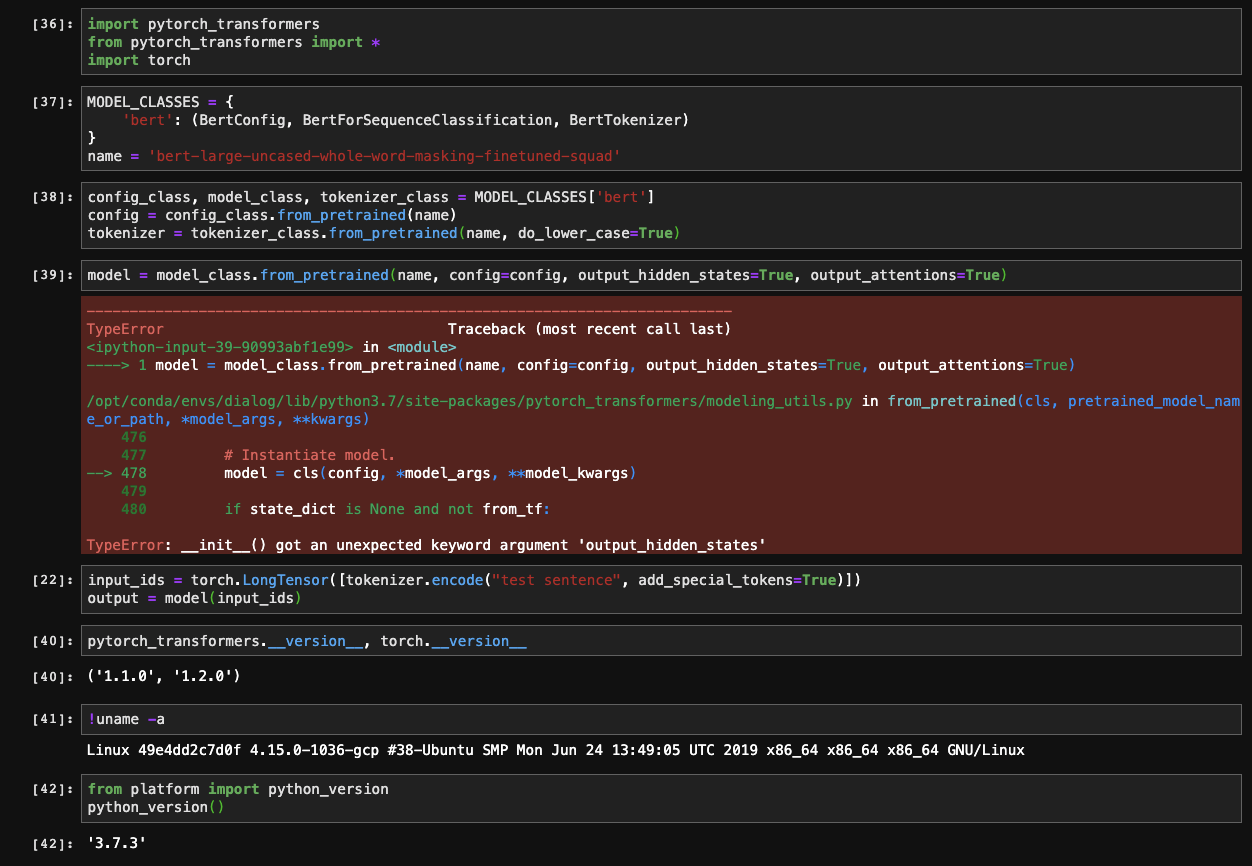

I am able to instantiate the model etc. without the output_ named arguments, but it fails when I include them. This is the latest master of pytorch_transformers installed via pip+git.


Hi!

The two arguments output_hidden_states and output_attentions belong to the configuration, not to the model call.

Here, you would do as follows:

config = config_class.from_pretrained(name, output_hidden_states=True, output_attentions=True)

tokenizer = tokenizer_class.from_pretrained(name, do_lower_case=True)

model = model_class.from_pretrained(name, config=config)

input_ids = torch.LongTensor([tokenizer.encode("test sentence", add_special_tokens=True)])

output = model(input_ids)

# output is a tuple: (logits, hidden_states, attentions)
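To sanity-check what comes back, it can help to know the shapes to expect. The sketch below is plain Python with no library calls; expected_output_shapes is a hypothetical helper, and the layout it encodes (one hidden state for the embeddings plus one per layer, one attention map per layer) is what I recall from the model docstrings, so it is worth verifying against your installed version. The 4-token input length is also just an assumption for illustration.

```python
def expected_output_shapes(num_layers, num_heads, hidden_size, batch_size, seq_len):
    """Hypothetical helper: shapes expected for hidden_states and attentions."""
    # One hidden state for the embedding output plus one per layer.
    hidden_states = [(batch_size, seq_len, hidden_size)] * (num_layers + 1)
    # One attention map per layer: a seq_len x seq_len matrix for each head.
    attentions = [(batch_size, num_heads, seq_len, seq_len)] * num_layers
    return hidden_states, attentions


# BERT-base sized values (12 layers, 12 heads, hidden size 768), one 4-token input.
hidden_states, attentions = expected_output_shapes(
    12, 12, 768, batch_size=1, seq_len=4
)
print(len(hidden_states), hidden_states[0])  # 13 (1, 4, 768)
print(len(attentions), attentions[0])        # 12 (1, 12, 4, 4)
```

Comparing these against len(output[1]) and output[1][0].shape (and likewise for output[2]) is a quick way to confirm that both flags actually took effect.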

You can find more information on the configuration object here.

Hope that helps!

Just a few additional details:

The behavior of the added named arguments provided to model_class.from_pretrained() depends on whether you supply a configuration or not (see the doc/docstrings).

First, note that you don't have to supply a configuration to model_class.from_pretrained(). If you don't, the relevant configuration will be downloaded automatically. You can supply a configuration if you want to control the model's parameters in detail.

As a consequence, if you supply a configuration, we assume you have already set all the configuration parameters you need, and we simply forward the named arguments provided to model_class.from_pretrained() to the model's __init__.

If you don't supply a configuration, the relevant configuration will be downloaded automatically and the named arguments provided to model_class.from_pretrained() will first be passed to the configuration class initialization function (from_pretrained()). Each key of kwargs that corresponds to a configuration attribute will be used to override that attribute with the supplied kwargs value. Remaining keys that do not correspond to any configuration attribute will be passed to the underlying model's __init__ function. This is a way to quickly set up a model with a personalized configuration.
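The routing described above can be mimicked with a small toy sketch. ToyConfig and ToyModel are hypothetical stand-ins written only for illustration; this is not the library's code, just the same decision logic in miniature, assuming a config with two boolean attributes.

```python
class ToyConfig:
    """Hypothetical stand-in for a configuration class (not the library's)."""

    def __init__(self, output_hidden_states=False, output_attentions=False):
        self.output_hidden_states = output_hidden_states
        self.output_attentions = output_attentions

    @classmethod
    def from_pretrained(cls, name, **kwargs):
        # Pretend the defaults were loaded for `name`, then apply overrides.
        config = cls()
        unused = {}
        for key, value in kwargs.items():
            if hasattr(config, key):
                setattr(config, key, value)  # known attribute: override it
            else:
                unused[key] = value          # unknown key: hand it back
        return config, unused


class ToyModel:
    """Hypothetical stand-in for a model class."""

    def __init__(self, config):
        self.config = config

    @classmethod
    def from_pretrained(cls, name, config=None, **kwargs):
        if config is None:
            # No config supplied: kwargs go to the configuration first.
            config, kwargs = ToyConfig.from_pretrained(name, **kwargs)
        # Whatever is left is forwarded to the model's __init__.
        return cls(config, **kwargs)


# Without a config, the flag is absorbed by the configuration.
model = ToyModel.from_pretrained("toy", output_hidden_states=True)
print(model.config.output_hidden_states)  # True

# With a config, the same flag reaches __init__, which does not accept it.
try:
    ToyModel.from_pretrained("toy", config=ToyConfig(), output_hidden_states=True)
except TypeError as err:
    print("rejected:", err)
```

In this toy version the second call fails for the same reason as in the thread: once a config is passed, nothing consumes the extra keyword before it reaches the model constructor.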

TL;DR: you have a few ways to prepare a model the way you want:

# First possibility: prepare a modified configuration yourself and use it when you load the model:

config = config_class.from_pretrained(name, output_hidden_states=True)

model = model_class.from_pretrained(name, config=config)

# Second possibility: small variant of the first possibility:

config = config_class.from_pretrained(name)

config.output_hidden_states = True

model = model_class.from_pretrained(name, config=config)

# Third possibility: the quickest to write, do all in one take:

model = model_class.from_pretrained(name, output_hidden_states=True)

# This last variant doesn't work because model_class.from_pretrained() will assume the configuration you provide is already fully prepared and doesn't know what to do with the provided output_hidden_states argument:

config = config_class.from_pretrained(name)

model = model_class.from_pretrained(name, config=config, output_hidden_states=True)

@LysandreJik and @thomwolf, thanks for your detailed answers. This is the best documentation of the relationship between config and the model class. I think I picked up the pattern I used in my notebook from the README, particularly this one:

https://github.com/huggingface/pytorch-transformers/blob/master/README.md#quick-tour

model = model_class.from_pretrained(pretrained_weights,
                                    output_hidden_states=True,
                                    output_attentions=True)

I might have picked up the config class use from here:

https://github.com/huggingface/pytorch-transformers/blob/master/examples/run_squad.py#L467

My thinking was that the named arguments passed to model_class.from_pretrained() would override the config. I actually like the "second possibility" style a lot for doing that. It's explicit and very clear.

# Second possibility: small variant of the first possibility:

config = config_class.from_pretrained(name)

config.output_hidden_states = True

model = model_class.from_pretrained(name, config=config)

Thanks again for the clarity.
