Transformers: Chinese BERT broken probably after `pytorch-transformer` release

I suspect that there is some recent code change that breaks the Chinese BERT.

I used the following PyTorch hub code to load the Chinese BERT tokenizer and print out some tokens in the vocab perhaps just a few days ago and everything was fine:

import torch

GITHUB_REPO = "huggingface/pytorch-pretrained-BERT"

tokenizer = torch.hub.load(GITHUB_REPO, 'bertTokenizer', "bert-base-chinese")

# print some pre-determined tokens with their corresponding indices

indices = list(range(647, 657))

some_pairs = [(t, idx) for t, idx in vocab.items() if idx in indices]

for pair in some_pairs:

print(pair)

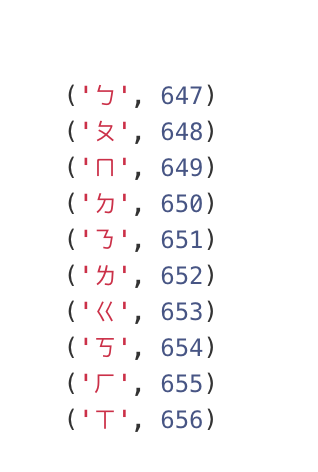

It used to produce the following result:

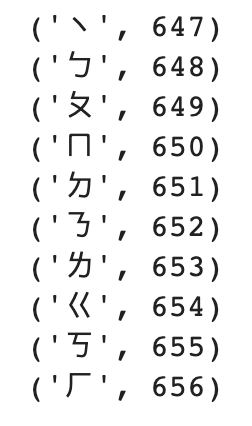

But after some recent commits or may the latest release, the voacab result slightly changed to even with the same code:

This difference should not happen since we're using the exact same model and code. And the following maskedLM task failed to predict the masked token accordingly and produced a broken result (which used to predict the correct result just a few days ago).

I already tried replacing pytorch-pretrained-BERT to pytorch-transformers but it still don't work.

I also tried to use the tokenizer directly from repo and it didn't work either.

from pytorch_transformers import BertTokenizer, BertModel, BertForMaskedLM

tokenizer = BertTokenizer.from_pretrained("bert-base-chinese")

Please kindly provide some guide or suggestion about how to fix this problem. Chinese BERT may not be functioning as expected now. Thanks in advance.

All 8 comments

We did slightly change the way the tokenizer strip the spaces at the end of the words when loading the tokenizer, as discussed in issue #328, in particular here https://github.com/huggingface/pytorch-transformers/issues/328#issuecomment-503630929.

Now, I'm not exactly sure what is the right solution for both cases. I'll give a look, but I won't have time right now. If you want to investigate the source of the issue and compare with #328, it can help.

@thomwolf Thanks for the suggestion. After some twists, I can reproduce the desired result (though it's very hacky and we should come up with a better solution)

I used the previous version of load_vocb function to regenerate vocabulary, and it did reproduce the desired vocab:

import collections

# previous version of `load_voacb`

def load_vocab(vocab_file):

"""Loads a vocabulary file into a dictionary."""

vocab = collections.OrderedDict()

index = 0

with open(vocab_file, "r", encoding="utf-8") as reader:

while True:

token = reader.readline()

if not token:

break

token = token.strip()

vocab[token] = index

index += 1

return vocab

# get the vocab file to regenerate vocb

!wget https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-chinese-vocab.txt

# first load the latest version tokenizer and overwrite the vocab by previous version of `load_vocab`

tokenizer = torch.hub.load(GITHUB_REPO, 'bertTokenizer', "bert-base-chinese")

tokenizer.vocab = load_vocab("bert-base-chinese-vocab.txt")

# get the desired result as previously

indices = list(range(647, 657))

some_pairs = [(t, idx) for t, idx in vocab.items() if idx in indices]

for pair in some_pairs:

print(pair)

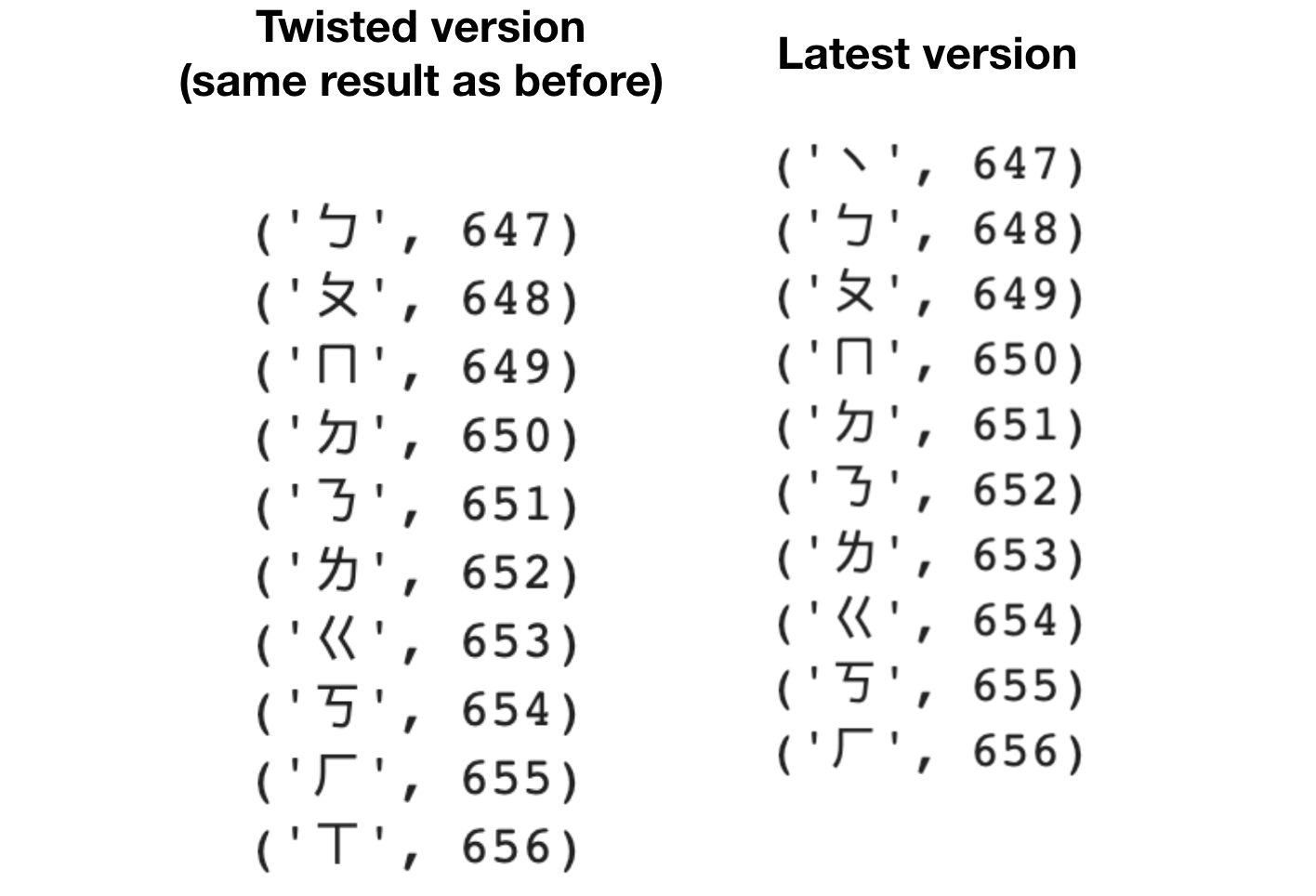

(left is the desired result acquired by using old load_vab function)

The vocab size is the same, but it seems that current / previous vocab index is kind of offset by 1 so after getting the prediction (using twisted tokenizer) from the model, I have to first add 1 to all predicted tokens and then convert them back to tokens:

# predict masked token

maskedLM_model = torch.hub.load(GITHUB_REPO,

'bertForMaskedLM',

"bert-base-chinese")

maskedLM_model.eval()

with torch.no_grad():

outputs = maskedLM_model(tokens_tensor, segments_tensors)

predictions = outputs[0]

probs, indices = torch.topk(torch.softmax(predictions[0, masked_index], -1), k)

# HACKY HOTFIX HERE

indices += 1

# correct result

predicted_tokens = tokenizer.convert_ids_to_tokens(indices.tolist())

In sum, by:

- use previous

load_vocabfunction - add 1 to model output

I can reproduce the same correct result as before in this maskLM scenario. But of course, this is very hacky. We need a better solution.

At the line 344 of bert-base-chinese vocab file the token is '\u2028', which is an unicode line separator.

I think using 'token = reader.readlines()' instead of 'token = reader.read().splitlines()' might solve the problem.

Have submitted a PR for this: https://github.com/huggingface/pytorch-transformers/pull/860

Great, thanks for investigating deeper @Yiqing-Zhou and @leemengtaiwan!

Thank you guys @Yiqing-Zhou and @thomwolf!

I have used the latest version of Chinese BERT and it seems that the vocab and accuracy of my downstream task work perfectly now. :)

Hi, @leemengtaiwan What kind of dataset are you running on?

I get the same issue even when I update to the latest version.

The same issue is also mention in #903

I'm running on Chinese-Style SQuAD dataset (DRCD).

I can train Chinese-Bert successfully about half year ago.

However, I could not train the model successfully but I can train Multi-Bert successfully.

@thomwolf Do you update Chinese-Bert recently? or there are still some bugs in preprocess step?

Hi, @leemengtaiwan What kind of dataset are you running on?

@Liangtaiwan I'm using custom dataset (to be more specific, WSDM Fake News Classification on Kaggle).

The updated version seems to work fine for me, but if you still encounter some issues, maybe you can create a separate issue and reference this issue if needed.

Most helpful comment

Have submitted a PR for this: https://github.com/huggingface/pytorch-transformers/pull/860