Test-infra: presubmits and postsubmits jobs are not triggered

What happened:

I'm following getting_started_deploy.md to setup prow with manual steps in my GKE cluster in web UI.

All the prow components are running and prow status web UI is working normally, because I can see the periodic echo-test job is completed successfully:

root@mcdev1:~/go/src/k8s.io/test-infra# kc get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

deck 2/2 2 2 20h

hook 2/2 2 2 20h

horologium 1/1 1 1 20h

plank 1/1 1 1 20h

sinker 1/1 1 1 20h

statusreconciler 1/1 1 1 20h

tide 1/1 1 1 20h

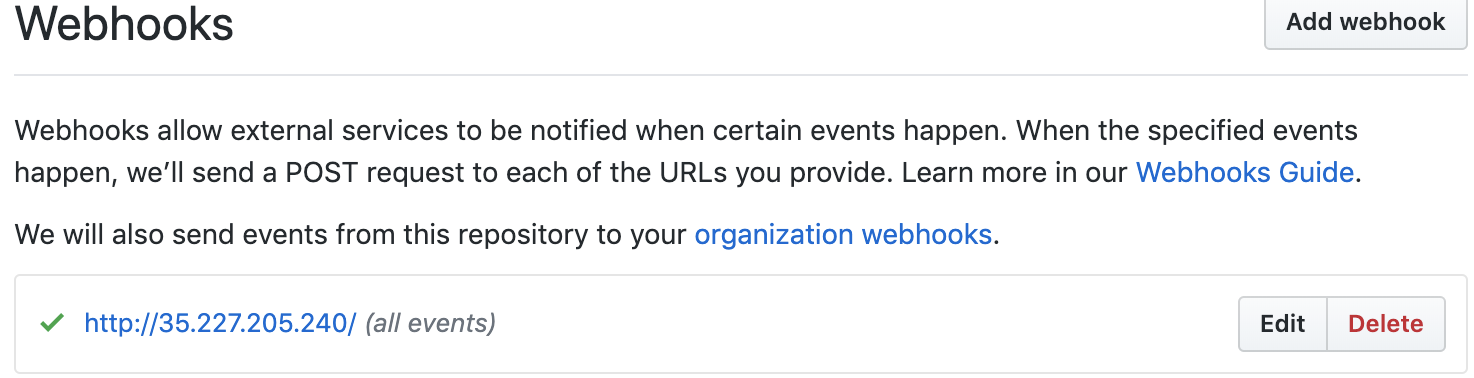

Also webhook is successfully added because I can see a green check mark in repo webhook settings.

Then I tried to add more plugins to plugins.yaml and more jobs to config.yaml.

plugins.yaml:

root@mcdev1:~/go/src/k8s.io/test-infra# cat /root/go/src/k8s.io/test-infra/prow/plugins.yaml

triggers:

- repos:

- oasis

trusted_org: multicloudlab

elide_skipped_contexts: true

plugins:

multicloudlab/oasis:

- size

- trigger

config.yaml:

root@mcdev1:~/go/src/k8s.io/test-infra# cat /root/go/src/k8s.io/test-infra/prow/config.yaml

plank:

default_decoration_config:

utility_images: # using the tag we identified above

clonerefs: "gcr.io/k8s-prow/clonerefs:v20190917-395658c48"

initupload: "gcr.io/k8s-prow/initupload:v20190917-395658c48"

entrypoint: "gcr.io/k8s-prow/entrypoint:v20190917-395658c48"

sidecar: "gcr.io/k8s-prow/sidecar:v20190917-395658c48"

gcs_configuration:

bucket: prow_job_bucket # the bucket we just made

path_strategy: explicit

gcs_credentials_secret: gcs-credentials # the secret we just made

prowjob_namespace: default

pod_namespace: test-pods

periodics:

- interval: 10m

name: echo-test

decorate: true

spec:

containers:

- image: alpine

command: ["/bin/date"]

postsubmits:

multicloudlab/oasis:

- name: test-postsubmit

decorate: true

spec:

containers:

- image: alpine

command: ["/bin/printenv"]

presubmits:

multicloudlab/oasis:

- name: test-presubmit

decorate: true

always_run: true

skip_report: true

spec:

containers:

- image: alpine

command: ["/bin/printenv"]

Also the preconfig check is passed:

root@mcdev1:~/go/src/k8s.io/test-infra# bazel run //prow/cmd/checkconfig -- --plugin-config=/root/go/src/k8s.io/test-infra/prow/plugins.yaml --config-path=/root/go/src/k8s.io/test-infra/prow/config.yaml

...

INFO: Analyzed target //prow/cmd/checkconfig:checkconfig (0 packages loaded, 0 targets configured).

INFO: Found 1 target...

Target //prow/cmd/checkconfig:checkconfig up-to-date:

bazel-bin/prow/cmd/checkconfig/linux_amd64_pure_stripped/checkconfig

INFO: Elapsed time: 0.747s, Critical Path: 0.27s

INFO: 0 processes.

INFO: Build completed successfully, 1 total action

INFO: Build completed successfully, 1 total action

{"component":"checkconfig","file":"prow/cmd/checkconfig/main.go:288","func":"main.main","level":"info","msg":"checkconfig passes without any error!","time":"2019-09-19T04:00:46-07:00"}

However, when I raise PR in the targeted repo, I did't see size/* label is automatically added, also the presubmits and postsubmits jobs were never triggered, because I can't find them in prow status web UI.

Am I missing anything? anyone helps me on this?

BTW, the GCS bucket in plank setting was created successfully.

What you expected to happen:

When a new PR is filed in the targeted repo, I should see size/* label is automatically added, also the presubmits and postsubmits jobs should be triggered and found in prow status web UI.

How to reproduce it (as minimally and precisely as possible):

Follow getting_started_deploy.md to setup prow with manual steps in GKE cluster

/cc @gyliu513

All 12 comments

/sig testing

/cc @kubernetes/sig-testing

First thing I notice is that the trigger config looks wrong. This config applies to https://github.com/oasis not https://github.com/multicloudlab/oasis

triggers:

- repos:

- oasis

trusted_org: multicloudlab

elide_skipped_contexts: true

Can you please provide a link to your Prow instance's front end so I can confirm that the configs you are sharing are what is actually applied in the cluster?

Please also check hooks logs and share the logs related to a webhook that should trigger a job (e.g. a new PR or a /test all comment). The size plugin not working makes me think either webhooks aren't being delivered correctly or the plugin config you shared isn't applied.

@cjwagner Thanks for quick reply, if you're asking for prow status UI, you can assess it: http://35.227.205.240

I can't see anything from the hook logs, is it possible that github didn't see anything to hook, but I can see a green check mark in repo webhook settings.

root@mcdev1:~/go/src/k8s.io/test-infra# kc logs -f hook-7bdcdd9d64-kw62q

{"component":"hook","file":"prow/flagutil/bugzilla.go:45","func":"k8s.io/test-infra/prow/flagutil.(*BugzillaOptions).Validate","level":"info","msg":"empty -bugzilla-endpoint, will not create Bugzilla client","time":"2019-09-20T02:14:40Z"}

{"client":"github","component":"hook","file":"prow/github/client.go:523","func":"k8s.io/test-infra/prow/github.(*client).log","level":"info","msg":"User()","time":"2019-09-20T02:14:40Z"}

{"component":"hook","file":"prow/kube/config.go:143","func":"k8s.io/test-infra/prow/kube.LoadClusterConfigs","level":"info","msg":"Loading cluster contexts...","time":"2019-09-20T02:14:41Z"}

{"component":"hook","file":"prow/cmd/hook/main.go:165","func":"main.main","level":"info","msg":"Using fake slack client.","time":"2019-09-20T02:14:41Z"}

I have updated trigger config to:

triggers:

- repos:

- multicloudlab

trusted_org: multicloudlab

elide_skipped_contexts: true

And the follow are the configmaps config and plugins from k8s cluster:

root@mcdev1:~/go/src/k8s.io/test-infra# kc get cm config -o yaml

apiVersion: v1

data:

config.yaml: |

plank:

default_decoration_config:

utility_images: # using the tag we identified above

clonerefs: "gcr.io/k8s-prow/clonerefs:v20190917-395658c48"

initupload: "gcr.io/k8s-prow/initupload:v20190917-395658c48"

entrypoint: "gcr.io/k8s-prow/entrypoint:v20190917-395658c48"

sidecar: "gcr.io/k8s-prow/sidecar:v20190917-395658c48"

gcs_configuration:

bucket: prow_job_bucket # the bucket we just made

path_strategy: explicit

gcs_credentials_secret: gcs-credentials # the secret we just made

prowjob_namespace: default

pod_namespace: test-pods

periodics:

- interval: 10m

name: echo-test

decorate: true

spec:

containers:

- image: alpine

command: ["/bin/date"]

postsubmits:

multicloudlab/oasis:

- name: test-postsubmit

decorate: true

spec:

containers:

- image: alpine

command: ["/bin/printenv"]

presubmits:

multicloudlab/oasis:

- name: test-presubmit

decorate: true

always_run: true

skip_report: true

spec:

containers:

- image: alpine

command: ["/bin/printenv"]

kind: ConfigMap

metadata:

creationTimestamp: "2019-09-18T14:31:25Z"

name: config

namespace: default

resourceVersion: "571089"

selfLink: /api/v1/namespaces/default/configmaps/config

uid: fe65eed7-da20-11e9-a352-42010a8a01c9

root@mcdev1:~/go/src/k8s.io/test-infra# kc get cm plugins -o yaml

apiVersion: v1

data:

plugins.yaml: |

triggers:

- repos:

- multicloudlab

trusted_org: multicloudlab

elide_skipped_contexts: true

plugins:

multicloudlab/oasis:

- size

- trigger

kind: ConfigMap

metadata:

creationTimestamp: "2019-09-18T14:31:25Z"

name: plugins

namespace: default

resourceVersion: "571000"

selfLink: /api/v1/namespaces/default/configmaps/plugins

uid: fe531c7e-da20-11e9-a352-42010a8a01c9

I found some error logs in sinker:

root@mcdev1:~/go/src/k8s.io/test-infra# kc logs sinker-54c4fb95cf-vhsnn | head -n 20

{"component":"sinker","file":"prow/cmd/sinker/main.go:103","func":"main.main","level":"warning","msg":"Sinker requires --dry-run=false to function correctly in production.","time":"2019-09-20T02:14:42Z"}

{"component":"sinker","file":"prow/cmd/sinker/main.go:104","func":"main.main","level":"warning","msg":"--dry-run will soon default to true. Set --dry-run=false by March 15.","time":"2019-09-20T02:14:42Z"}

{"component":"sinker","file":"prow/kube/config.go:143","func":"k8s.io/test-infra/prow/kube.LoadClusterConfigs","level":"info","msg":"Loading cluster contexts...","time":"2019-09-20T02:14:42Z"}

E0920 02:15:00.474476 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

{"component":"sinker","file":"prow/cmd/sinker/main.go:396","func":"main.(*controller).clean","level":"info","msg":"Deleted old completed pod.","pod":"60f42a91-da82-11e9-8d64-22164b095e1c","time":"2019-09-20T02:15:00Z"}

{"component":"sinker","file":"prow/cmd/sinker/main.go:396","func":"main.(*controller).clean","level":"info","msg":"Deleted old completed pod.","pod":"fb531c69-da80-11e9-8d64-22164b095e1c","time":"2019-09-20T02:15:00Z"}

{"component":"sinker","file":"prow/cmd/sinker/main.go:421","func":"main.(*controller).clean","level":"info","msg":"Sinker reconciliation complete.","time":"2019-09-20T02:15:00Z"}

{"component":"sinker","file":"prow/cmd/sinker/main.go:195","func":"main.(*controller).Start","level":"info","msg":"Sync time: 311.169619ms","time":"2019-09-20T02:15:00Z"}

E0920 02:15:01.504611 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

E0920 02:15:02.534047 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

E0920 02:15:03.566081 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

E0920 02:15:04.597893 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

E0920 02:15:05.632929 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

E0920 02:15:06.673346 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

E0920 02:15:07.703010 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

E0920 02:15:08.732514 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

E0920 02:15:09.761235 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

E0920 02:15:10.790045 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

E0920 02:15:11.818946 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

E0920 02:15:12.847939 1 reflector.go:270] external/io_k8s_client_go/tools/cache/reflector.go:94: Failed to watch *v1.ProwJob: unknown (get prowjobs.prow.k8s.io)

It's weird because I did't make any update to prow/cluster/starter.yaml, and it looks like the permission issue, but the serviceaccount, role and rolebinding were configured properly:

root@mcdev1:~/go/src/k8s.io/test-infra# kc get sa sinker -o yaml

apiVersion: v1

kind: ServiceAccount

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","kind":"ServiceAccount","metadata":{"annotations":{},"name":"sinker","namespace":"default"}}

creationTimestamp: "2019-09-18T14:31:30Z"

name: sinker

namespace: default

resourceVersion: "79103"

selfLink: /api/v1/namespaces/default/serviceaccounts/sinker

uid: 012fc919-da21-11e9-a352-42010a8a01c9

secrets:

- name: sinker-token-8n69j

root@mcdev1:~/go/src/k8s.io/test-infra# kc get role sinker -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"rbac.authorization.k8s.io/v1beta1","kind":"Role","metadata":{"annotations":{},"name":"sinker","namespace":"default"},"rules":[{"apiGroups":["prow.k8s.io"],"resources":["prowjobs"],"verbs":["delete","list"]},{"apiGroups":[""],"resourceNames":["prow-sinker-leaderlock"],"resources":["configmaps"],"verbs":["get","update"]},{"apiGroups":[""],"resources":["configmaps","events"],"verbs":["create"]}]}

creationTimestamp: "2019-09-18T14:48:32Z"

name: sinker

namespace: default

resourceVersion: "82605"

selfLink: /apis/rbac.authorization.k8s.io/v1/namespaces/default/roles/sinker

uid: 6265c8f9-da23-11e9-a352-42010a8a01c9

rules:

- apiGroups:

- prow.k8s.io

resources:

- prowjobs

verbs:

- delete

- list

- apiGroups:

- ""

resourceNames:

- prow-sinker-leaderlock

resources:

- configmaps

verbs:

- get

- update

- apiGroups:

- ""

resources:

- configmaps

- events

verbs:

- create

root@mcdev1:~/go/src/k8s.io/test-infra# kc get rolebinding sinker -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"rbac.authorization.k8s.io/v1beta1","kind":"RoleBinding","metadata":{"annotations":{},"name":"sinker","namespace":"default"},"roleRef":{"apiGroup":"rbac.authorization.k8s.io","kind":"Role","name":"sinker"},"subjects":[{"kind":"ServiceAccount","name":"sinker"}]}

creationTimestamp: "2019-09-18T14:48:32Z"

name: sinker

namespace: default

resourceVersion: "82607"

selfLink: /apis/rbac.authorization.k8s.io/v1/namespaces/default/rolebindings/sinker

uid: 6285e006-da23-11e9-a352-42010a8a01c9

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: sinker

subjects:

- kind: ServiceAccount

name: sinker

The auth errors in sinker are most likely a result of the recent sinker changes #13647 to use a lister combined with the fact that the starter.yaml was not fully updated (i.e. the update is missing the watch and get verbs).

@morvencao - I ~will~ did create a PR for this (#14418). You can try making this update to the sinker Role with the aforementioned verbs and apply to your cluster. Although, I am not convinced this is the underlying problem with presubmits and postsubmits jobs not being triggered.

@clarketm Thanks, it fix the error logs in sinker. And you're right, it's not the root cause of presubmits and postsubmits jobs not being triggered.

If you aren't seeing any logs related to webhooks from hook then it is likely the case that hook is not receiving any. We log every webhook we handle e.g. https://github.com/kubernetes/test-infra/blob/5a67b1fcfaee09833e722df91c98841409f40165/prow/hook/events.go#L65

Before we even check to see if it is a webhook type that we handle, we increment a webhook counter. So you could also check the hook pod's /metrics endpoint to confirm that prow_webhook_counter and prow_webhook_response_codes are not being incremented when you do things that should trigger webhooks.

https://github.com/kubernetes/test-infra/blob/5a67b1fcfaee09833e722df91c98841409f40165/prow/hook/server.go#L82-L85

Make sure your webhook in GitHub is configured to send to http://35.227.205.240/hook

Did you set up the webhook using the add-hook tool described in the docs or did you set it up manually in the GitHub UI?

Yes, I was following the doc to set up the webhook. It's weird that the webhook url is http://35.227.205.240 instead of http://35.227.205.240/hook

@cjwagner I manually updated my webhook url from http://35.227.205.240 to http://35.227.205.240/hook, and the presubmits and postsubmits jobs are triggered, will test more cases.

Glad its working! The docs do specify that the webhook endpoint is /hook, but we could make that clearer. I don't think you're the first person to have this problem.

It is interesting that GitHub was showing hooks successfully delivered to /... Maybe we need to explicitly reject POST requests for these kind of endpoints? @Katharine would it make sense for handleSimpleTemplate to return a 405 for anything besides a GET request?

/close

@cjwagner: Closing this issue.

In response to this:

/close

Instructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository.

Most helpful comment

@cjwagner I manually updated my webhook url from http://35.227.205.240 to http://35.227.205.240/hook, and the presubmits and postsubmits jobs are triggered, will test more cases.