Rust: Float parsing can fail on valid float literals

That is, it returns `Err(..)` on inputs with lots of digits and extreme exponents; it doesn't (shouldn't) panic.

This is a limitation of the current code that has been known since that code was originally written, but it is quite nontrivial to fix, so I haven't gotten around to it yet. Basically, one would have to implement an algorithm similar to glibc's strtod and replace the current slow paths (Algorithm M and possibly Algorithm R) with it. If someone wants to have a crack at it, go ahead, just give me a heads-up so we don't duplicate work. See also #27307 for some discussion.

This bug doesn't affect reasonably short (17-ish decimal digits) representations of finite (even subnormal) floats, nor does it affect most inputs that are rounded down to zero or up to infinity. The only problematic inputs are those with very small exponents and so many integer digits that the number can neither simply be rounded down to zero nor be represented with our custom 1280-bit bignums. One example, for `f64`, is `1234567890123456789012345678901234567890e-340` (which is a finite and normal float, about `1.23456789e-301`). This would be annoying on its own, but it also trips up constant evaluation in the compiler (see #31109).
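A minimal runtime reproduction of the example above (the helper name `parse_example` is just for illustration). On toolchains affected by this issue the call returned `Err(..)`; a correctly rounding parser should instead return a normal `f64` of roughly `1.23456789e-301`.

```rust
use std::num::ParseFloatError;

/// Parses the example input from the report. Affected toolchains returned
/// `Err(..)` here (without panicking); a correctly rounding parser returns a
/// normal f64 of roughly 1.23456789e-301.
fn parse_example() -> Result<f64, ParseFloatError> {
    "1234567890123456789012345678901234567890e-340".parse::<f64>()
}
```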


cc me

Can't we just have a slow path that uses 3072-bit bignums or something?

I mean, for numbers with a very large number of digits, we could:

- find one of the halfway points between adjacent floats that are closest to our string (there are 2 of them, or 1 if the string is exactly a halfway point). This can be done by truncating the number to 20 or so digits, converting _that_ to a float, and taking the halfway point above that float.

- compare our string with that halfway point and use the result to pick which float to round to.

Certainly, at the worst-case, we _do_ need to compare our string with an halfway-float, After all, 0.00000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000247032822920623272088284396434110686182529901307162382212792841250337753635104375932649918180817996189898282347722858865463328355177969898199387398005390939063150356595155702263922908583924491051844359318028499365361525003193704576782492193656236698636584807570015857692699037063119282795585513329278343384093519780155312465972635795746227664652728272200563740064854999770965994704540208281662262378573934507363390079677619305775067401763246736009689513405355374585166611342237666786041621596804619144672918403005300575308490487653917113865916462395249126236538818796362393732804238910186723484976682350898633885879256283027559956575244555072551893136908362547791869486679949683240497058210285131854513962138377228261454376934125320985913276672363281249 is zero and 
0.00000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000247032822920623272088284396434110686182529901307162382212792841250337753635104375932649918180817996189898282347722858865463328355177969898199387398005390939063150356595155702263922908583924491051844359318028499365361525003193704576782492193656236698636584807570015857692699037063119282795585513329278343384093519780155312465972635795746227664652728272200563740064854999770965994704540208281662262378573934507363390079677619305775067401763246736009689513405355374585166611342237666786041621596804619144672918403005300575308490487653917113865916462395249126236538818796362393732804238910186723484976682350898633885879256283027559956575244555072551893136908362547791869486679949683240497058210285131854513962138377228261454376934125320985913276672363281251 is the smallest denormal.
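The two-step idea above can be sketched in Rust as follows. This is a hypothetical illustration, not the libcore code: it allocates (a `Vec`-based bignum and `format!`), which libcore cannot do, it builds powers by repeated multiplication, and it assumes `str::parse` is correct for the short 20-digit candidate. The names `Big`, `decompose` and `round_decimal` are made up for this sketch.

```rust
use std::cmp::Ordering;

/// Minimal unsigned big integer: little-endian base-2^32 limbs (illustration only).
struct Big(Vec<u32>);

/// Number of limbs once high zero limbs are ignored.
fn significant_len(limbs: &[u32]) -> usize {
    limbs.iter().rposition(|&l| l != 0).map_or(0, |i| i + 1)
}

impl Big {
    fn from_u64(x: u64) -> Big {
        Big(vec![x as u32, (x >> 32) as u32])
    }
    /// self = self * m + a
    fn mul_add_small(&mut self, m: u32, a: u32) {
        let mut carry = a as u64;
        for limb in self.0.iter_mut() {
            let v = (*limb as u64) * (m as u64) + carry;
            *limb = v as u32;
            carry = v >> 32;
        }
        if carry > 0 {
            self.0.push(carry as u32);
        }
    }
    /// self = self * base^n, by repeated small multiplications (slow but simple).
    fn mul_pow(&mut self, base: u32, n: u32) {
        for _ in 0..n {
            self.mul_add_small(base, 0);
        }
    }
    /// Compare values: more significant limbs wins, else compare from the top limb down.
    fn cmp_value(&self, other: &Big) -> Ordering {
        let (a, b) = (significant_len(&self.0), significant_len(&other.0));
        a.cmp(&b)
            .then_with(|| self.0[..a].iter().rev().cmp(other.0[..b].iter().rev()))
    }
}

/// Split a finite, non-negative f64 into (m, k) with value = m * 2^k.
fn decompose(x: f64) -> (u64, i32) {
    let bits = x.to_bits();
    let biased = ((bits >> 52) & 0x7ff) as i32;
    let frac = bits & ((1u64 << 52) - 1);
    if biased == 0 {
        (frac, -1074) // zero or subnormal
    } else {
        (frac | (1u64 << 52), biased - 1075) // normal: add the implicit leading 1
    }
}

/// Hypothetical helper: round `digits` * 10^`exp10` to the nearest f64, ties to even.
/// Assumes `digits` contains only ASCII digits and `exp10` is of modest size.
fn round_decimal(digits: &str, exp10: i32) -> f64 {
    let digits = digits.trim_start_matches('0');
    if digits.is_empty() {
        return 0.0;
    }
    // Step 1: candidate from the first 20 significant digits. Truncation can only
    // lower the value, so the correct result is the candidate or the next float up.
    let keep = digits.len().min(20);
    let short_exp = exp10 + (digits.len() - keep) as i32;
    let candidate: f64 = format!("{}e{}", &digits[..keep], short_exp).parse().unwrap();
    if candidate.is_infinite() {
        return candidate;
    }
    // Step 2: exact comparison of digits * 10^exp10 with the halfway point
    // above the candidate, h = (2m + 1) * 2^(k - 1) where candidate = m * 2^k.
    let (m, k) = decompose(candidate);
    let mut lhs = Big::from_u64(0);
    for d in digits.bytes() {
        lhs.mul_add_small(10, (d - b'0') as u32);
    }
    let mut rhs = Big::from_u64(2 * m + 1);
    // Move negative powers to the other side so both sides are integers.
    if exp10 >= 0 {
        lhs.mul_pow(10, exp10 as u32);
    } else {
        rhs.mul_pow(10, exp10.unsigned_abs());
    }
    if k - 1 >= 0 {
        rhs.mul_pow(2, (k - 1) as u32);
    } else {
        lhs.mul_pow(2, (k - 1).unsigned_abs());
    }
    let next = f64::from_bits(candidate.to_bits() + 1);
    match lhs.cmp_value(&rhs) {
        Ordering::Less => candidate,
        Ordering::Greater => next,
        // Exactly halfway: round half to even (an even bit pattern has an even mantissa).
        Ordering::Equal => if candidate.to_bits() % 2 == 0 { candidate } else { next },
    }
}
```

With a candidate of `0.0` the halfway point is 2^-1075, which is exactly the boundary between the two long strings above. A second useful check is the exact decimal expansion of 1 + 2^-53 (halfway between `1.0` and the next `f64`): it should round to even (`1.0`), while bumping its last digit should round up.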

@arielb1 How would you actually implement the second step? Keep in mind that this is in libcore, so we can't use float formatting because that would allocate. (_Maybe_ you could use the underlying functions that are also in libcore, but this probably complicates everything significantly. However I have to admit that I don't even know what the interface of those functions looks like, so maybe it's not that bad.)

I actually read the paper, and its Algorithm R is a variant of what I came up with. I guess we just need to enlarge our bignums to sufficiently many bits and use sticky bits + rounding (we don't really need 1075 digits = 3571 bits, because we can skip the powers of 2).
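For reference, the bit counts follow from $\log_2 10 \approx 3.3219$. Reading "skip the powers of 2" as factoring $10^n = 5^n \cdot 2^n$ and keeping the $2^n$ in a separate binary exponent (an interpretation, not something stated above), only the $5^n$ part needs bignum storage:

$$
1075 \cdot \log_2 10 \approx 1075 \cdot 3.3219 \approx 3571 \text{ bits},
\qquad
1075 \cdot \log_2 5 \approx 1075 \cdot 2.3219 \approx 2496 \text{ bits}.
$$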

I've gathered a collection of string-to-double test cases that have proved problematic in various projects over the past few decades:

https://github.com/ahrvoje/numerics/blob/master/strtod/strtod_tests.toml

Rust 1.14.0 (e8a012324 2016-12-16) for Windows fails on 17 of 81 conversion tests (C21, C22, C23, C25, C29, C37, C38, C39, C40, C64, C65, C66, C67, C68, C69, C76, C79), with the following being the shortest one (C29):

```rust
fn main() {
    println!("{}", 2.47032822920623272e-324);
}
```

```
error[E0080]: constant evaluation error
 --> src\main.rs:2:20
  |
2 |     println!("{}", 2.47032822920623272e-324);
  |                    ^^^^^^^^^^^^^^^^^^^^^^^^ unimplemented constant expression: could not evaluate float literal (see issue #31407)
```
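At runtime the same literal goes through `str::parse`. For reference, `2.47032822920623272e-324` lies just below 2^-1075, the halfway point between `0.0` and the smallest positive subnormal `f64`, so a correctly rounding parser should return `Ok(0.0)` here rather than an error. A sketch (the function name is made up):

```rust
use std::num::ParseFloatError;

/// Runtime counterpart of test C29. The input is slightly below 2^-1075
/// (about 2.4703282292062327208e-324), so correct rounding gives 0.0.
fn parse_c29() -> Result<f64, ParseFloatError> {
    "2.47032822920623272e-324".parse::<f64>()
}
```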

@ahrvoje That's a very nice collection of test cases, thanks for sharing!

This is a regression from 1.7.0 to 1.8.0. It broke my crate (lol).

@nagisa's crate is math.rs.

I've nominated this for discussion by T-libs. This is T-libs territory, because the error comes from the floating-point parsing routines in libstd/libcore.

This is an annoying regression to me and not a nice regression to have in the compiler overall, as one cannot feed any sort of non-normal floating point numbers to the compiler (compile-time) or the parser (run-time). You can’t do something like `*(&0xBITSBITSBITSu32 as *const _ as *const f32)` at compile time either, which makes it impossible to construct esoteric floating point numbers at compile time.

@nagisa AFAIK for every floating point value [*] there's a canonical literal that *is* parsed correctly, it's just that some literals that would also result in the value don't work. Do you have a counter example?

[*] Other than the bazillion NaNs with different payloads, of course, but we don't have literals for them anyway.

@rkruppe my counter example is that I have no idea what the canonical literals for my denormals are and am in general paranoid enough about floats to always write them down with some extra precision.

That being said, I’m gonna believe you and retract my statement that there’s no way at all to write the denormals down.

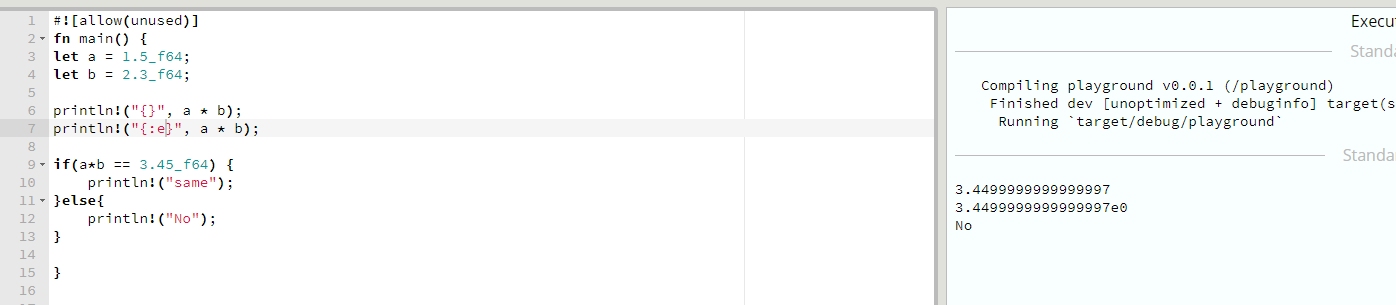

If you know the bit pattern you want, you can construct the right float value via transmute and format it (either `{}` or `{:e}` should work). The resulting decimal will be as short as possible in the respective format, so the current code should manage to parse it. (This is tested for a couple of subnormals in the test suite.)
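A sketch of that workaround, assuming `f64::from_bits` (the safe standard-library counterpart of the transmute): build the value from its bits, print it with `{}` and `{:e}`, and check that both strings parse back to the same bits. `round_trips` is a hypothetical helper name.

```rust
/// Build an f64 from a raw bit pattern, print it with both `{}` and `{:e}`,
/// and check that each printed form parses back to the same bits.
fn round_trips(bits: u64) -> bool {
    // f64::from_bits is the safe counterpart of transmuting a u64 into an f64.
    let x = f64::from_bits(bits);
    let plain = format!("{}", x); // positional decimal, e.g. "0.000...0005"
    let sci = format!("{:e}", x); // scientific, e.g. "5e-324"
    plain.parse::<f64>().map(f64::to_bits) == Ok(bits)
        && sci.parse::<f64>().map(f64::to_bits) == Ok(bits)
}
```

For the smallest positive subnormal (bits `1`), `{:e}` prints the short form `5e-324`.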

To be clear, I don't want to deflect attention away from this issue; far from it, I'd really love to see it addressed somehow, and would do so right now if I had the time and energy. I'm just trying to understand how it's causing you trouble and offer workarounds in the meantime.

Discussed during libs triage today. The conclusion was that we'd definitely like to fix this, but it doesn't seem P-high, so P-medium.

What about falling back to a C library in the meantime? For example, using https://crates.io/crates/strtod instead of Rust's built-in parser seems to fix this bug for me.

The problem is that float parsing is in core, not just in std, so it can't depend on memory allocation or C libraries.

Note that you can now (on nightly and beta) cast bit representations to floats inside constants:

```rust
pub static F: f32 = unsafe {
    union Helper {
        bits: u32,
        f: f32,
    }
    Helper { bits: 0x0000_0000 }.f
};
```
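The same union approach with a non-zero pattern gives exactly the kind of subnormal constant discussed earlier in the thread. A sketch for the smallest positive `f32` subnormal (bits `0x0000_0001`); the name `MIN_SUBNORMAL_F32` is illustrative.

```rust
/// Smallest positive f32 subnormal, built from its bit pattern in a static
/// initializer by reading the `f` field of a union written through `bits`.
pub static MIN_SUBNORMAL_F32: f32 = unsafe {
    union Helper {
        bits: u32,
        f: f32,
    }
    Helper { bits: 0x0000_0001 }.f
};
```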

It seems inconsistent to me that Rust discourages thoughtless conversion from 64-bit to 32-bit integers, or thoughtless conversion between signed and unsigned integers, but allows thoughtless conversion from decimal to binary. Decimal and binary numbers are not the same set, just like i32 and u64 are not the same set, and just like binary floats and binary integers are not the same set. To be precise (or even safe), maybe there could be a basic decimal number type in the language, and decisions could be made about its conversion to other types.
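To illustrate the point that decimal and binary floats are different sets: the decimal literal `0.1` has no exact `f64` representation, so parsing it must round. Printing the stored value with 55 fractional digits (enough here, since that `f64` is an integer multiple of 2^-55) shows the exact binary value. `exact_tenth` is an illustrative name.

```rust
/// Exact decimal expansion of the f64 nearest to 0.1. Formatting with an
/// explicit precision prints correctly rounded digits, and 55 fractional
/// digits are enough to show this particular value exactly.
fn exact_tenth() -> String {
    format!("{:.55}", 0.1f64)
}
```

The same rounding is why `0.1 + 0.2 != 0.3` in `f64`.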

Is switching to the Ryū algorithm (https://github.com/rust-lang/rust/issues/52811) likely to address this?

As far as I know, Ryu is only a float -> string algorithm, not the other direction (which this bug is about), so: no.


Bummer. Here in 2020, with the latest version, I am seeing the same thing with too many repeated 0s in the fractional part of a string parsed to f32. :-( It looks like I can truncate it to 5 significant digits before conversion and avoid the Err. Thanks for describing this well. I tested your theory with parse and confirmed it was accurate.
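A sketch of that workaround, assuming plain decimal input without an exponent part; `truncate_significant` is a hypothetical helper. Note that truncating (rather than rounding) discards precision, so the parsed result can differ from the correctly rounded value of the full string.

```rust
/// Keep at most `n` significant digits of a plain decimal string such as
/// "-0.000123456789" (no exponent part assumed). Extra integer-part digits
/// become '0' to preserve magnitude; extra fractional digits are dropped.
fn truncate_significant(s: &str, n: usize) -> String {
    let mut out = String::with_capacity(s.len());
    let mut seen = 0; // significant digits kept so far
    let mut started = false; // true once the first non-zero digit appears
    let mut in_fraction = false;
    for c in s.chars() {
        match c {
            '.' => {
                in_fraction = true;
                out.push(c);
            }
            '0'..='9' => {
                if c != '0' {
                    started = true;
                }
                if !started || seen < n {
                    if started {
                        seen += 1;
                    }
                    out.push(c);
                } else if !in_fraction {
                    out.push('0'); // keep the magnitude of the integer part
                }
            }
            _ => out.push(c), // sign or other characters pass through
        }
    }
    out
}
```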

@rustbot modify labels: +A-floating-point
