Rocket.chat: Scaling beyond 100.000 users or 50 messages/second

Hi,

We're currently looking for options to scale to a large number of users (up to 300.000 registered, ~100k daily active). Our setup is described under "Server Setup Information" below.

We're using MongoDB Atlas as managed solution with 2 configurations:

- M30 (2 vCPUs, 8 GiB RAM)

- M200 (64 vCPUs, 256 GiB RAM)

SSL is offloaded by an AWS Network Load Balancer; multiple instances per server are load-balanced by nginx (see config below). Our setup is 7 Node.js instances per server (AWS m5.2xlarge). Up to 8 servers were used (up to 56 Node.js instances).

Performance test result

Large MongoDB cluster

- 7 instances (1 server) -> 30 messages sent per second, received within 1 second

- 14 instances (2 servers) -> 39 messages sent per second, received within 1 second

- 21 instances (3 servers) -> 45 messages sent per second, received within 1 second

- 28 instances (4 servers) -> 48 messages sent per second, received within 1 second

- 35 instances (5 servers) -> 48 messages sent per second, received within 1 second

- 42 instances (6 servers) -> 48 messages sent per second, received within 1 second

- 49 instances (7 servers) -> 42 messages sent per second, received within 1 second

- 56 instances (8 servers) -> 38 messages sent per second, received within 1 second

Small MongoDB cluster

- 1 instance (1 server) -> 14 messages sent per second, received within 1 second

- 2 instances (2 servers) -> 20 messages sent per second, received within 1 second

- 4 instances (2 servers) -> 26 messages sent per second, received within 1 second

- 8 instances (2 servers) -> 28 messages sent per second, received within 1 second

- 14 instances (2 servers) -> 27 messages sent per second, received within 1 second
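To make the plateau in these figures easier to see, here is a minimal Python sketch that computes the speedup relative to the smallest configuration and the throughput per instance. The numbers are copied from the two lists above; the function names `scaling_report` and `peak` are made up for illustration and are not part of Rocket.Chat or of our test runner.

```python
# Reported throughput in messages/second, keyed by number of Rocket.Chat instances.
# Values are copied from the "Large MongoDB cluster" and "Small MongoDB cluster" lists.
large_cluster = {7: 30, 14: 39, 21: 45, 28: 48, 35: 48, 42: 48, 49: 42, 56: 38}
small_cluster = {1: 14, 2: 20, 4: 26, 8: 28, 14: 27}


def scaling_report(results):
    """Return rows of (instances, msgs_per_s, speedup_vs_smallest, msgs_per_s_per_instance)."""
    base_rate = results[min(results)]
    rows = []
    for instances in sorted(results):
        rate = results[instances]
        rows.append((instances, rate, round(rate / base_rate, 2), round(rate / instances, 2)))
    return rows


def peak(results):
    """Smallest instance count that reaches the highest measured throughput."""
    return max(sorted(results), key=lambda n: results[n])


if __name__ == "__main__":
    for name, results in (("large", large_cluster), ("small", small_cluster)):
        print(name, "cluster peaks at", peak(results), "instances")
        for row in scaling_report(results):
            print("  instances=%d rate=%d speedup=%.2f per_instance=%.2f" % row)
```

On the large cluster, going from 7 to 28 instances (4x the instances) yields a speedup of only 1.6, and throughput per instance falls from about 4.29 to about 1.71 messages/second.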

What we expect

We expect Rocket.Chat to scale horizontally. However, we're unable to get beyond 40-50 messages per second delivered within a reasonable amount of time (that is, without building up a backlog/queue).

How can we support more concurrent users?

We've looked into Redis-Oplog, but it creates multiple defects when using multiple instances.

What is the best approach here?

Server Setup Information:

- Version of Rocket.Chat Server: 0.74.1

- Operating System: Ubuntu 18.04 LTS

- Deployment Method: Docker

- Number of Running Instances: 28 instances on 4 servers

- DB Replicaset Oplog: Yes

- NodeJS Version: 8.11

- MongoDB Version: 4.0.5

Additional context

In our test, multiple clients on multiple servers log in and send or receive a defined number of messages, and we measure how long it takes Rocket.Chat to receive each message and broadcast it to the user (1-1 private chat).

The test clients run on 3 servers (AWS t3.xlarge) with 5 GBit/s network throughput each.
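As a rough illustration of the kind of measurement described above, the following Python sketch summarizes a run from send and receive timestamps. It is not our actual test runner (that one is a Node.js client); the `Delivery` record, the `summarize` function, and the way the send rate is derived are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Delivery:
    """One message in a test run (hypothetical record format)."""
    sent_at: float      # seconds, when the sending client submitted the message
    received_at: float  # seconds, when the receiving client got it


def summarize(deliveries, deadline_s=1.0):
    """Summarize a run: send rate, worst latency, share delivered within the deadline."""
    latencies = [d.received_at - d.sent_at for d in deliveries]
    # Send rate is approximated as message count divided by the span of send times.
    span = max(d.sent_at for d in deliveries) - min(d.sent_at for d in deliveries)
    on_time = sum(1 for latency in latencies if latency <= deadline_s)
    return {
        "messages": len(deliveries),
        "send_rate_per_s": len(deliveries) / span if span > 0 else float("nan"),
        "max_latency_s": max(latencies),
        "on_time_fraction": on_time / len(deliveries),
    }


if __name__ == "__main__":
    run = [Delivery(0.0, 0.2), Delivery(1.0, 1.5), Delivery(2.0, 3.5)]
    print(summarize(run))
```

A configuration would count as keeping up when `on_time_fraction` stays at 1.0 for the whole run; a growing `max_latency_s` indicates that a backlog is building.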

$ cat docker-compose.yml

version: "3.3"
services:
  rocketchat-01:
    image: rocketchat/rocket.chat:0.74.1
    restart: always
    environment:
      PORT: "8000"
      INSTANCE_IP: "127.245.131.123"
      ROOT_URL: 'https://performance.local'
      MONGO_URL: 'mongodb://cloud-mongodb:27017/rocketchat?ssl=true&replicaSet=PerformanceTest-shard-0&authSource=admin&retryWrites=true'
      MONGO_OPLOG_URL: 'mongodb://cloud-mongodb:27017/local?ssl=true&replicaSet=PerformanceTest-shard-0&authSource=admin&retryWrites=true'
    ports:
      - "8000:8000"
  [...]
  rocketchat-07:
    image: rocketchat/rocket.chat:0.74.1
    restart: always
    environment:
      PORT: "8006"
      INSTANCE_IP: "127.245.131.123"
      ROOT_URL: 'https://performance.local'
      MONGO_URL: 'mongodb://cloud-mongodb:27017/rocketchat?ssl=true&replicaSet=PerformanceTest-shard-0&authSource=admin&retryWrites=true'
      MONGO_OPLOG_URL: 'mongodb://cloud-mongodb:27017/local?ssl=true&replicaSet=PerformanceTest-shard-0&authSource=admin&retryWrites=true'
    ports:
      - "8006:8006"

$ cat nginx.conf

upstream backend {
    server localhost:8000;
    server localhost:8001;
    server localhost:8002;
    server localhost:8003;
    server localhost:8004;
    server localhost:8005;
    server localhost:8006;
}

server {
    listen 80;
    server_name performance.local;
    error_log /var/log/nginx/rocketchat_error.log;

    location / {
        proxy_pass http://backend/;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forward-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forward-Proto http;
        proxy_set_header X-Nginx-Proxy true;
        proxy_redirect off;
    }
}

All 47 comments

@bytepoets-mzi thanks for sharing your results =)

do you mind sharing your test suite? what did you use as clients to get these numbers?

we're working to improve these numbers, but there is nothing concrete to share at the moment, although we value any additional information that can help.

Rocket.Chat will never be a performance monster because it is written in Node.js, which is single-threaded.

Sending simple messages is one way to test performance, but when you upload a file, performance drops drastically.

I don't think the language is the biggest problem here (of course, each language has its own benefits and drawbacks).

right, just testing message sending is a little naive sometimes, but since this is a chat, it should be our best-performing method :x. sending the message and storing it in the db is not the problem; the problem is the number of users in the room, the notification config, the number of users online, and the number of users with that room open.

we are writing some use cases/tests, maybe @bytepoets-mzi could help us test and add more cases :)

@ggazzo sure, we'll provide you our test case on monday. It's basically a simple Node.js client using the SDK and firing up multiple processes. A single machine can easily saturate our large-scale setup.

nice! we ran some tests with around 7k users (online/connected) sending 1350 msg/minute, with relative success. let us know more.

Following this as well!

Hello @ggazzo, you can find our test runner here: https://github.com/eyetime-international-ltd/rocketchat_loadtest_message_listener.

If you have trouble with the setup, please do not hesitate to contact me. It is currently sufficient to run the test on a single machine, as it can easily slow down our test cluster. I've tested up to 10.000 concurrent users/connections with this script, though.

> nice! we ran some tests with around 7k users (online/connected) sending 1350 msg/minute, with relative success. let us know more.

@ggazzo would you be so kind as to share your rocket.chat setup for this test?

@bytepoets-mzi @ggazzo do we know where the bottleneck is in this test yet?

Yes, we've run more tests. In general, the login process produces a lot of data (the initial connection to the server and fetching server data after the login), and this produces really heavy load.

In addition to that, presence is a huge problem: for each new user coming online (being in a channel), all online users get a notification that this particular user is now online. This gets exponentially worse the more users share a channel.

We were able to handle ~4,500 concurrent users in a single channel.
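A back-of-the-envelope Python sketch of why presence hurts at this size. It assumes, as described above, that every user coming online is announced to every user already online in the same channel; under that assumption the growth is roughly quadratic in the number of users. The function names are made up for illustration and say nothing about how Rocket.Chat implements presence internally.

```python
def presence_notifications_for_login_wave(n_users: int) -> int:
    """Presence notifications sent while n_users come online one after another
    in a single channel, assuming each newcomer is announced to everyone
    who is already online (0 for the first user, 1 for the second, ...)."""
    return sum(range(n_users))


def presence_entries_tracked(n_users: int) -> int:
    """Entries held if every online user tracks every online user (N x N)."""
    return n_users * n_users


if __name__ == "__main__":
    for n in (100, 1000, 4500):
        print(n, presence_notifications_for_login_wave(n), presence_entries_tracked(n))
```

With 4,500 users in one channel, this simple model already gives about 10.1 million presence notifications for a single login wave.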

@bytepoets-mzi can you tell me the reason you used Network Load Balancer rather than the Application Load Balancer?

> Yes, we've run more tests. In general, the login process produces a lot of data (the initial connection to the server and fetching server data after the login), and this produces really heavy load.
>
> In addition to that, presence is a huge problem: for each new user coming online (being in a channel), all online users get a notification that this particular user is now online. This gets exponentially worse the more users share a channel.
>
> We were able to handle ~4,500 concurrent users in a single channel.

Have you tried disabling UserPresenceMonitor on most of your instances (https://github.com/RocketChat/Rocket.Chat/pull/12353)? There was a similar issue with UserPresenceMonitor before, like the one you mentioned: https://github.com/RocketChat/Rocket.Chat/issues/11288. I don't see UserPresenceMonitor being disabled in your docker-compose.yml.

@bytepoets-mzi perfect. there are a lot of things we can do better, and some things we should get right; presence is one of them. we use meteor publications to handle presence. it's an N x N mergebox and doesn't scale very well, because we have to keep all online users in memory for each online user and notify each one.

> In addition to that, presence is a huge problem: for each new user coming online (being in a channel), all online users get a notification that this particular user is now online. This gets exponentially worse the more users share a channel.

again, perfect. We send/receive a lot of data (settings, userpresence) after login

> the login process produces a lot of data (the initial connection to the server and fetching server data after the login), and this produces really heavy load.

about "a lot of things we can do better": we have plans to get rid of ddp/publications, to use something lighter based on paths (like MQTT or AMQP), and to use a binary serialization format (like Protobuf/MessagePack). it's not a BIG DEAL but should let us scale properly.

what do you mean? 4.5k users sending messages to the same channel? what is the message rate? or just connected? it's not very common to have 4500 users with the same room open (ok, if you have a huge server maybe :) )

> We were able to handle ~4,500 concurrent users in a single channel.

@bytepoets-mzi can you clarify if disabling UserPresenceMonitor solved some of your login process heavy load?

And thanks for your Load tests for Rocket.Chat

@ankar84 Sorry, we're no longer interested in Rocket.Chat and have moved to a different solution (Matrix).

> @ankar84 Sorry, we're no longer interested in Rocket.Chat and have moved to a different solution (Matrix).

@bytepoets-mzi Which alternative solution did you move into?

> > @ankar84 Sorry, we're no longer interested in Rocket.Chat and have moved to a different solution (Matrix).
>
> @bytepoets-mzi Which alternative solution did you move into?

He wrote it - Matrix.

Does this notification bug still exist?

Since 3.0.0, performance is much better.

#16348 did a lot to improve that.

There are so many bugs in 3.0.3, and 3.0.4 has not fixed them. @ankar84

https://github.com/RocketChat/Rocket.Chat/issues/16865

@bytepoets-mzi how do I use MongoDB with authentication?

@ankar84

docker run

--env MONGO_URL=mongodb://admin:[email protected]:27017/rocketchat?replicaSet=rocketchatReplicaSet&authSource=admin

--env MONGO_OPLOG_URL=mongodb://admin:[email protected]:27017/local?replicaSet=rocketchatReplicaSet&authSource=admin

--name docker3000 -p 3000:3000

-d rocket.chat:2.4.11

but the error is:

MongoNetworkError: failed to connect to server [127.0.0.1:27017] on first connect [MongoNetworkError: connect ECONNREFUSED 127.0.0.1:27017]

Why 127?

Sir, why can't I use an IP (172.)? Must it be a domain name?

Sir @bytepoets-mzi, how can I connect to a third-party MongoDB server?

> Have you tried disabling UserPresenceMonitor on most of your instances (#12353)? There was a similar issue with UserPresenceMonitor before, like the one you mentioned: #11288. I don't see UserPresenceMonitor being disabled in your docker-compose.yml.

Hi @sumit-kumar-rai

Did DISABLE_PRESENCE_MONITOR improve the performance and stability of your Rocket.Chat deployment?

Hi, we have set up 5 servers with 6 Rocket.Chat instances on each. We set DISABLE_PRESENCE_MONITOR=NO for only one instance per server and DISABLE_PRESENCE_MONITOR=YES for the rest. On version 3.0.12 we have no unexpected crashes, and performance is better than with the default configuration.
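The layout described above can be sketched in Python as a small planning helper. Only the variable name `DISABLE_PRESENCE_MONITOR` and its `YES`/`NO` values come from this thread; the function `presence_monitor_plan` and its parameters are hypothetical, and the sketch only decides which value each instance would receive. It does not configure anything.

```python
def presence_monitor_plan(servers: int, instances_per_server: int,
                          monitors_per_server: int = 1) -> dict:
    """Map (server, instance) to the DISABLE_PRESENCE_MONITOR value it should get.

    The first `monitors_per_server` instances on each server keep the
    presence monitor running (value "NO"); all others disable it ("YES").
    """
    plan = {}
    for server in range(1, servers + 1):
        for instance in range(1, instances_per_server + 1):
            keeps_monitor = instance <= monitors_per_server
            plan[(server, instance)] = "NO" if keeps_monitor else "YES"
    return plan


if __name__ == "__main__":
    plan = presence_monitor_plan(servers=5, instances_per_server=6)
    for (server, instance), value in sorted(plan.items()):
        print(f"server {server} instance {instance}: DISABLE_PRESENCE_MONITOR={value}")
```

For 5 servers with 6 instances each, this leaves the monitor enabled on 5 instances and disabled on the other 25.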

> Hi, we have set up 5 servers with 6 Rocket.Chat instances on each. We set DISABLE_PRESENCE_MONITOR=NO for only one instance per server and DISABLE_PRESENCE_MONITOR=YES for the rest. On version 3.0.12 we have no unexpected crashes, and performance is better than with the default configuration.

Thanks a lot for your answer @emikolajczak

We have a similar environment with 5 servers and 5 instances each.

Since last weekend, we run only one instance with DISABLE_PRESENCE_MONITOR=NO and all the others with DISABLE_PRESENCE_MONITOR=YES.

So far I don't see any difference in CPU, network, or other resource load with that configuration, but we need more time to draw conclusions.

@ankar84 @emikolajczak if DISABLE_PRESENCE_MONITOR=NO, does it mean that we do not know the user status of other servers?

> @ankar84 @emikolajczak if DISABLE_PRESENCE_MONITOR=NO, does it mean that we do not know the user status of other servers?

No. Now I have only 1 instance with DISABLE_PRESENCE_MONITOR=NO and all others with DISABLE_PRESENCE_MONITOR=YES, and all user presence statuses work fine.

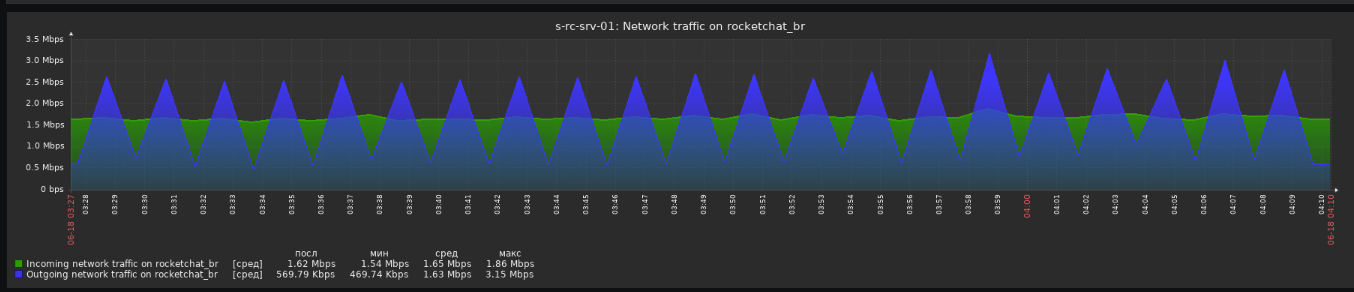

But the outgoing network graph for the server with that instance looks different compared to the others, with periodic sharp peaks.

> Now I have only 1 instance with DISABLE_PRESENCE_MONITOR=NO and all others with DISABLE_PRESENCE_MONITOR=YES, and all user presence statuses work fine.

It's amazing! I will try it! I will be grateful for the rest of my life... @ankar84

@ankar84 Sir, do you mean one Rocket.Chat instance overall, or all Rocket.Chat instances on one server?

> @ankar84 Sir, do you mean one Rocket.Chat instance overall, or all Rocket.Chat instances on one server?

Only one instance of all instances in my case.

But you can do like @emikolajczak and configure one instance per server with DISABLE_PRESENCE_MONITOR=NO

Thank you very much. But what will be affected by DISABLE_PRESENCE_MONITOR=YES?

@ankar84

> But what will be affected by DISABLE_PRESENCE_MONITOR=YES?

Nice question. I think that presence monitor will be disabled.

@ankar84 I’m a bit confused, you said that the user status will not be affected, will there be any other areas that will be affected?

> @ankar84 I’m a bit confused, you said that the user status will not be affected, will there be any other areas that will be affected?

I'm not a developer. I'm an administrator. And I don't see any issues with the presence monitor disabled on all instances except one.

If you need more details about what could be affected and how it works, you should ask the developer who wrote that code, @sampaiodiego.

@ankar84 Thank you, I now understand some of the differences. @sampaiodiego, what exactly will be affected by DISABLE_PRESENCE_MONITOR=YES?

@ankar84 we plan to upgrade from 2.4.1* to the latest version, is it ok? Will it go well if we do this? we have a lot of user data...

> @ankar84 we plan to upgrade from 2.4.1* to the latest version, is it ok?

I don't know about most recent 3.3.3, but we are on 3.1.1 and all looks good and stable.

> Will it go well if we do this? we have a lot of user data...

In that case, you should consider testing the upgrade in a test environment first.

ok, but how do we upgrade? is there any doc? @ankar84

@ankar84 Hi, dear Sir, we have upgraded RC to 3.3.3. It went well, and 3.3.3 is very fast. But we don't know what kind of server an instance should be deployed on. Can you share your current setup? We want to support more than 7k people.

> @ankar84 Hi, dear Sir, we have upgraded RC to 3.3.3. It went well, and 3.3.3 is very fast. But we don't know what kind of server an instance should be deployed on. Can you share your current setup? We want to support more than 7k people.

I'm still on 3.1.1

We have 5 servers with 8 vCores and 16 GB of RAM, running 5 instances each. We can increase the number of instances to 7, following the docs' recommendation of (number of CPU cores - 1).

We see daily peaks of 3k online users.

All stable and fast.
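The sizing rule mentioned above, written out as a tiny Python sketch. The "(number of CPU cores - 1)" formula is the one quoted in this comment; the function names and the floor of one instance are assumptions added for the example.

```python
def recommended_instances(cpu_cores: int) -> int:
    """Instances per server using the 'CPU cores - 1' rule of thumb.

    The minimum of 1 is an assumption so that a single-core host still gets one instance.
    """
    return max(1, cpu_cores - 1)


def total_instances(servers: int, cpu_cores_per_server: int) -> int:
    """Total instances across identical servers under the same rule."""
    return servers * recommended_instances(cpu_cores_per_server)


if __name__ == "__main__":
    print(recommended_instances(8))
    print(total_instances(servers=5, cpu_cores_per_server=8))
```

For the 5 servers with 8 vCores described here, the rule allows 7 instances per server, or 35 in total, compared with the 25 currently running.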

@ankar84 ,thank you sir

@ankar84 Could you please share the size of your DB cluster? Thanks

> @ankar84 Could you please share the size of your DB cluster? Thanks

We have 2 db servers and one arbiter.

Storage engine is wiredTiger

CPU 8 cores

Ram 8 or 16 Gb (don't remember currently, can take a look on monday)

@ankar84 Thank you

Hi @ankar84, @sampaiodiego, @ggazzo

We are looking to scale rocketchat for about 20000 users joining in for a live event.

What was the best number which you have achieved in terms of messages/minute ?

Do we have any recommendations on the setup to meet this requirement ?

Thanks in advance

@vigneshwarveluchamy this scale can only be achieved with the microservices version. It's an enterprise feature, please contact [email protected] for more information.
