Description:

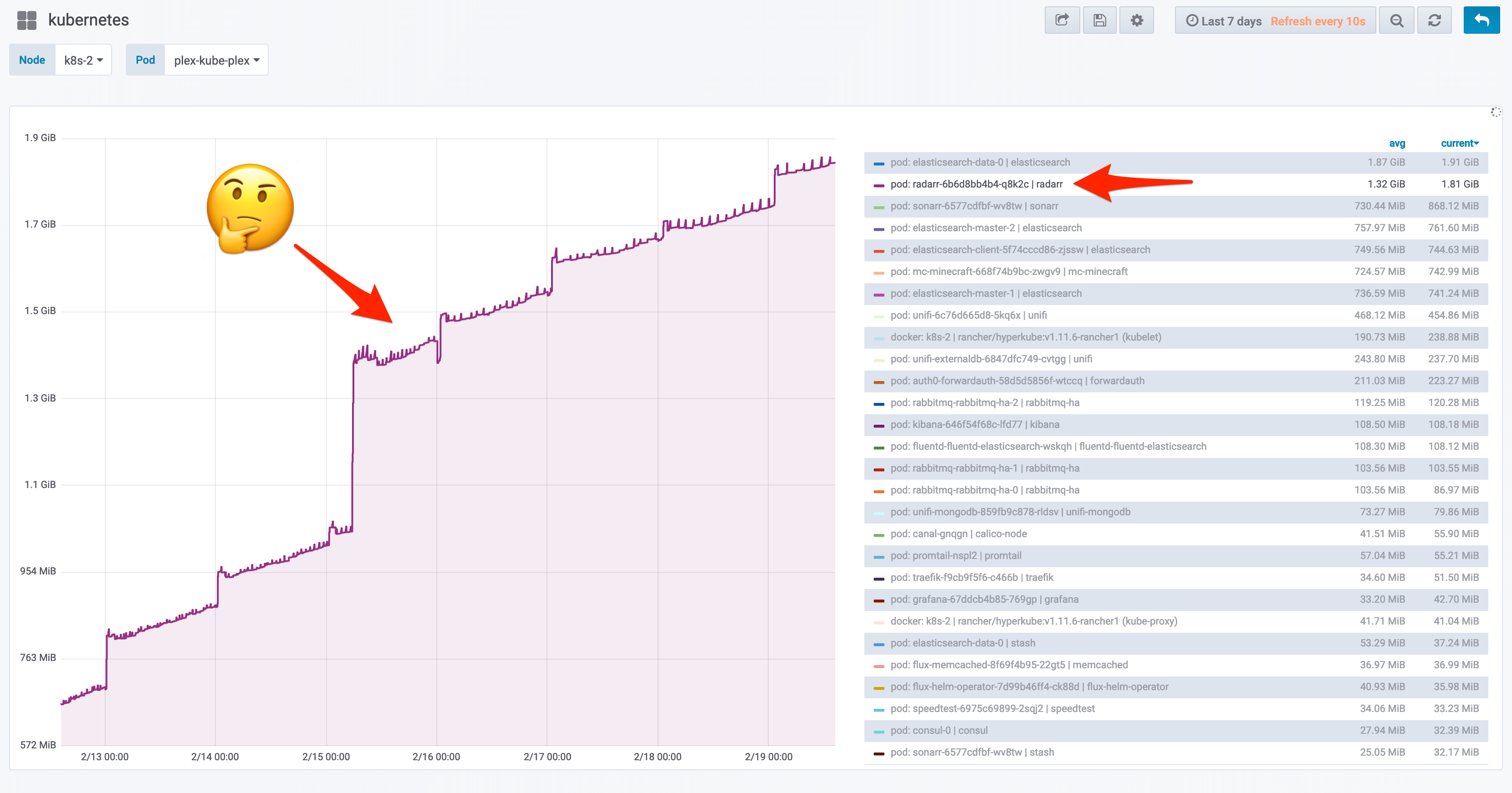

Radarr appears to have a memory leak. I have started getting warnings in the past few weeks about used SWAP space, and I had narrowed it down to being one of my Docker containers. Whenever I restarted all my containers, the usage dropped substantially. Today I went through and found that Radarr was using over half that space (2GB). I removed and rebuilt the container, and that dropped to 0.

Radarr Version:

I am running Radarr through the LSIO Docker container.

Version: 0.2.0.696

Mono Version: 5.0.1.1 (2017-02/5077205 Thu May 25 09:19:18 UTC 2017)

Let me know any information I can provide to help diagnose this issue.


Same problem here, not running on docker.

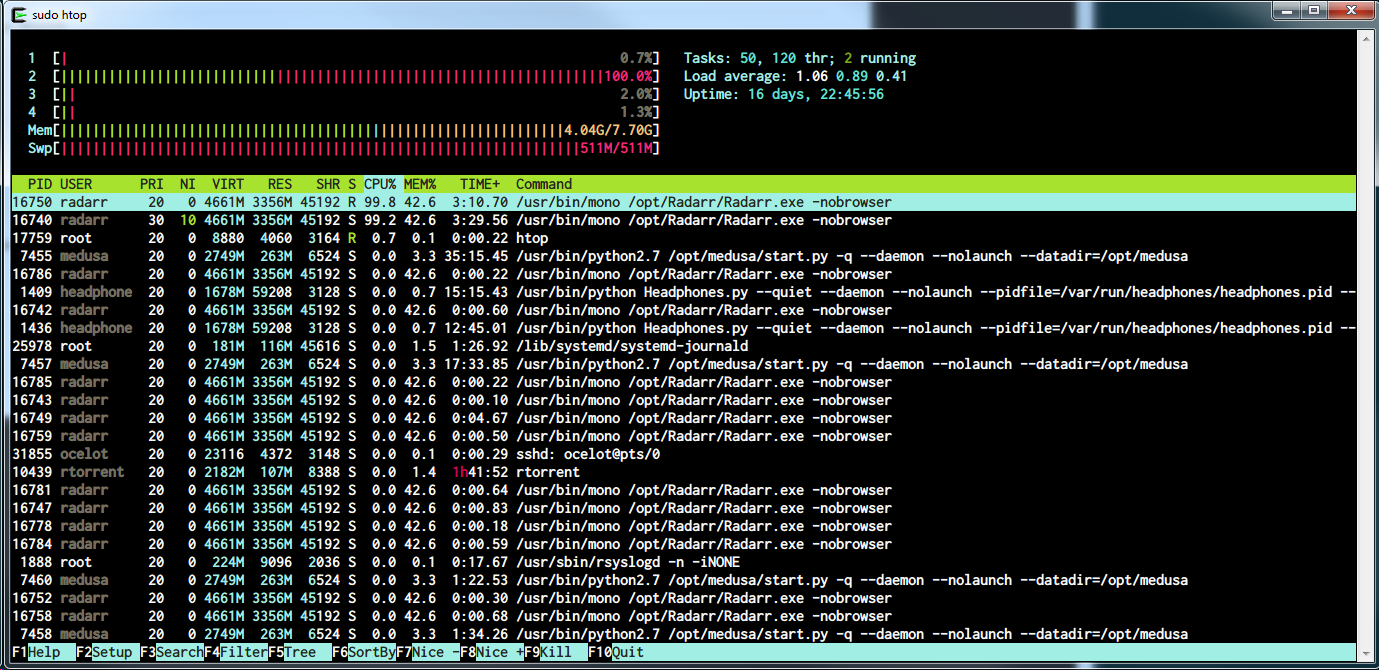

Radarr ends up taking 3-4 GB of memory or more 🗡

Bare-metal server

Version: 0.2.0.696

Mono Version: Mono JIT compiler version 5.0.1.1

Do you guys currently have errored items in your queue?

No errored items, no.

Could it involve the process of updating the media library? At least with import, I basically have to restart between importing each disk.

@ZimbiX Yes that would have been the next question. Since we have to load all movies into memory, that can be really memory intensive for large libraries.

No "error" movies in the list. The library is only 100 movies (about 10 missing; the rest are unmonitored).

It only starts to grow after a couple of days. If I restart Radarr, all is fine: everything works and library updates are fine. But after a couple of days, the Radarr process is over 4 GB and the system starts paging (and bogs down).

Radarr doesn't crash either (the system has 8 GB of RAM), and there's nothing special in the log files.

My library is a bit bigger (~1000 movies, about 25 missing), but I have the same symptoms. It's fine after restarting, but a few days later, it is consuming multiple gigabytes. I have not been adding new movies from the disk during this time.

Can you monitor this and tell me whether the memory usage goes up during or after a library update?

Here is my memory usage when I triggered a library update. Where it starts rising is where I triggered it, and right around the right side of the graph is where it finished.

CPU goes to around 50-100% (normal) and RAM goes to 12% when I manually ran the library update. After the library update, the CPU goes back to idle. The RAM drops, but not by much: it stays at 11.8% (Radarr has been running for about 5 hours now).

PS: Sonarr has been running on the same machine for about 14 days and its RAM usage barely changes.

@kmlucy @kvanbiesen Seems like the library update could be the cause then?

It looks that way. The library update seems to use a lot of RAM that is never freed, and eventually the system starts swapping it out.

Hi,

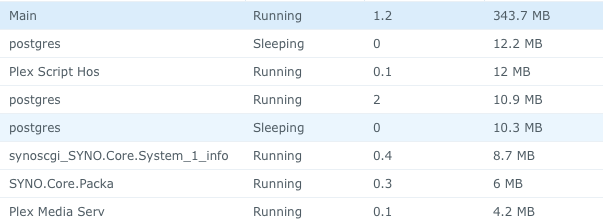

I don't know if this is the same problem, but the result is the same: high RAM usage. For the past few weeks or so, my Synology has been sitting at 90% RAM, where it was previously at 30% or less. When I stop Radarr the problem disappears. It seems to be the process "Main". Any idea?

Same problem here, fellas. I was having RAM panics on my Docker VM after a few weeks of running. I'm running this under Docker with 275 movies.

I found the Radarr app using 1-2 GB of RAM, which is unusual compared to Sonarr, which was using 256 MB. So a few days ago I decided to set a 512 MB RAM limit on the container and see how that goes; now it has decided to offload into swap space, which is only 1 GB.

Swap space usage (per-process figures in KB; command names are truncated as in the original output):

| PID | User | Command | Swap | USS | PSS | RSS |
|---|---|---|---|---|---|---|
| 6441 | ben | /bin/sh -c LD_LIBRARY_PATH= | 92 | 4 | 4 | 8 |
| 6558 | ben | /usr/lib/plexmediaserver/Pl | 1076 | 1408 | 1935 | 3360 |
| 13561 | ben | /lib/systemd/systemd --user | 0 | 1444 | 2841 | 6536 |
| 13569 | ben | -bash | 0 | 3172 | 3462 | 5024 |
| 6576 | ben | Plex Plug-in [com.plexapp.p | 23824 | 12156 | 12451 | 13164 |
| 16156 | ben | /usr/bin/python /usr/bin/sm | 0 | 12764 | 13135 | 14776 |
| 6578 | ben | Plex Plug-in [com.plexapp.p | 2408 | 58484 | 59869 | 62588 |
| 6444 | ben | /usr/lib/plexmediaserver/Pl | 6452 | 71524 | 72327 | 74244 |
| 6415 | ben | python /opt/plexpy/PlexPy.p | 3720 | 77676 | 77676 | 77680 |
| 5278 | ben | mono /app/Jackett/JackettCo | 119832 | 86600 | 90978 | 95752 |
| 6465 | ben | Plex Plug-in [com.plexapp.s | 6272 | 162868 | 164329 | 167148 |
| 5899 | ben | /usr/bin/python -OO /usr/bi | 36156 | 181900 | 182630 | 183756 |
| 12078 | ben | mono --debug NzbDrone.exe - | 65900 | 389408 | 389798 | 390192 |
| 5747 | ben | mono --debug Radarr.exe -no | 475240 | 484056 | 488782 | 493904 |
| 6040 | ben | /usr/bin/mono-sgen --optimi | 27400 | 681524 | 681524 | 681528 |
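For anyone who wants the RSS and swap columns for just the Radarr process without a separate tool, here is a minimal Python sketch that reads them from the Linux `/proc` filesystem. It assumes a Linux host (or running inside the container), and the helper names (`parse_status`, `pids_matching`, `memory_of`) are hypothetical. `VmSwap` in `/proc/<pid>/status` is a per-process total, whereas tools like smem compute their columns from `/proc/<pid>/smaps`, so the numbers may not match the table exactly.

```python
import os


def parse_status(text: str) -> dict:
    """Extract VmRSS and VmSwap (both in kB) from the text of /proc/<pid>/status."""
    fields = {}
    for line in text.splitlines():
        key, _, value = line.partition(":")
        if key in ("VmRSS", "VmSwap"):
            # Lines look like "VmRSS:\t  493904 kB"
            fields[key] = int(value.split()[0])
    return fields


def pids_matching(needle: str) -> list:
    """Return PIDs whose command line contains needle (e.g. "Radarr.exe")."""
    pids = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        try:
            with open(f"/proc/{entry}/cmdline", "rb") as f:
                # Arguments are NUL-separated; join them with spaces for matching
                cmdline = f.read().replace(b"\0", b" ").decode(errors="replace")
        except OSError:
            continue  # process exited or is not readable
        if needle in cmdline:
            pids.append(int(entry))
    return pids


def memory_of(pid: int) -> dict:
    """Read RSS and swap (kB) for a single PID."""
    with open(f"/proc/{pid}/status") as f:
        return parse_status(f.read())
```

Usage would be something like `for pid in pids_matching("Radarr.exe"): print(pid, memory_of(pid))`, run periodically to see whether swap keeps growing.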

I didn't take any metrics/screenshots or anything else but also came across this.

I started the Docker container and, after importing the movies (15 at a time, I went easy on it 😄) and setting everything up (indexer, downloader, etc.), it began to freeze both the container and the host. I'm using a Mac running macOS with 4 GB of RAM (not much, but enough so far).

My library has 45 movies; it isn't that big, so I don't know if size could be the issue.

Let me know if I can help somehow.

I can report memory problems too. Radarr uses 4x the memory that my Sonarr instance does.

Restarted the container and memory usage dropped 10x.

Guess the solution for now is to schedule a restart every day or so.

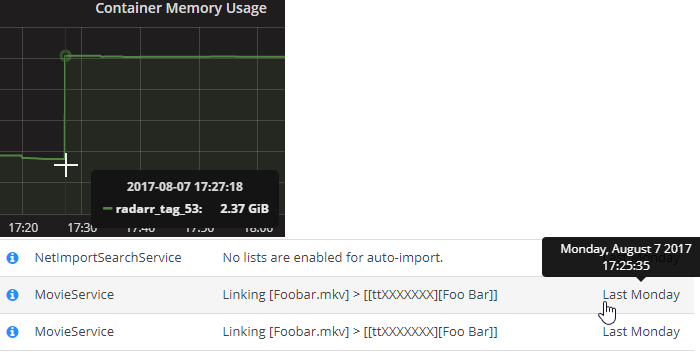

OK, I checked my logs and found that RAM usage tripled after a download, during something called "Linking".

The log entry was "Movie service - Linking [<movie name>]"

Before this log entry: ~800 MB of RAM; after: 2.37 GB.

@bassebaba I created a docker container with max of 500MB of RAM and 30% of CPU. Seems to be going well so far. Maybe you want to do something similar instead of restarting the container.

Also, @bassebaba, there's an option to not use hard linking. Have you tried disabling it to see if it helps? Just throwing out wild ideas.

Some of us don't use a container and have the same issue...

Matt


A RAM limit doesn't help; @externalz did that and got swapping instead.
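The swapping @externalz saw is consistent with Docker's defaults: `--memory` sets the hard RAM limit, and if `--memory-swap` is left unset the container may use additional swap equal to the `--memory` value (when the host has swap). The sketch below builds the relevant `docker run` flags in Python; the helper name is hypothetical, and the `linuxserver/radarr` invocation is only illustrative (volumes, ports and environment variables are omitted).

```python
from __future__ import annotations


def docker_memory_args(limit: str, allow_swap: bool = False) -> list[str]:
    """Build `docker run` flags that cap a container's memory.

    --memory is the hard RAM limit; --memory-swap is the combined RAM + swap
    allowance. Setting both to the same value gives the container no swap.
    """
    args = [f"--memory={limit}"]
    if not allow_swap:
        args.append(f"--memory-swap={limit}")
    return args


# Hypothetical invocation: volumes, ports and env vars omitted for brevity.
cmd = ["docker", "run", "-d", "--name", "radarr",
       *docker_memory_args("512m"), "linuxserver/radarr"]
print(" ".join(cmd))
# prints: docker run -d --name radarr --memory=512m --memory-swap=512m linuxserver/radarr
```

Note that this only contains the problem: with no swap allowed, a process that exceeds the limit gets OOM-killed instead of swapping, so it trades paging for restarts rather than fixing a leak.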

We need to get the memory leak sorted.

I have access to dotMemory, so I'll run a trace as soon as I get a chance, but my memory-leak-fixing skills suck.

@here This was markus (from Sonarr team) on discord:

I'm thinking it's more likely mono, since I haven't seen that behaviour on windows and I'm running under mono 5.0.1 (linuxserver docker container) and haven't been seeing it recently

So it may not be our fault. @bassebaba Let me know if you find anything! Wanted to do the same, but haven't found the time to do it.

This happened to me in the Docker container, unless there's a problem with running this container on macOS, which shouldn't be the case.

I'm experiencing the same issue without Docker, on a fresh install of Radarr with the following Mono version:

Mono JIT compiler version 5.2.0.215 (tarball Mon Aug 14 15:46:23 UTC 2017)

Copyright (C) 2002-2014 Novell, Inc, Xamarin Inc and Contributors. www.mono-project.com

TLS: __thread

SIGSEGV: altstack

Notifications: epoll

Architecture: amd64

Disabled: none

Misc: softdebug

LLVM: supported, not enabled.

GC: sgen (concurrent by default)

My Sonarr runs on the same box without any memory leak issues, whereas Radarr is eating around 2 GB of RAM while idle.

Radarr Ver. 0.2.0.778

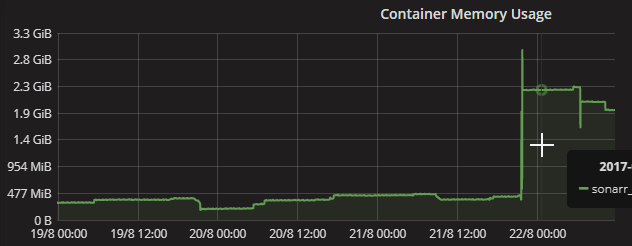

I haven't had the time to debug yet, but I am starting to see the same problem with Sonarr.

I'm using the Dockerfiles from linuxserver.io.

From around 450 MB to 2.2 GB, booom!

ping @galli-leo @markus101

I know this thread has been inactive for a few months, but I'm still experiencing the same issues.

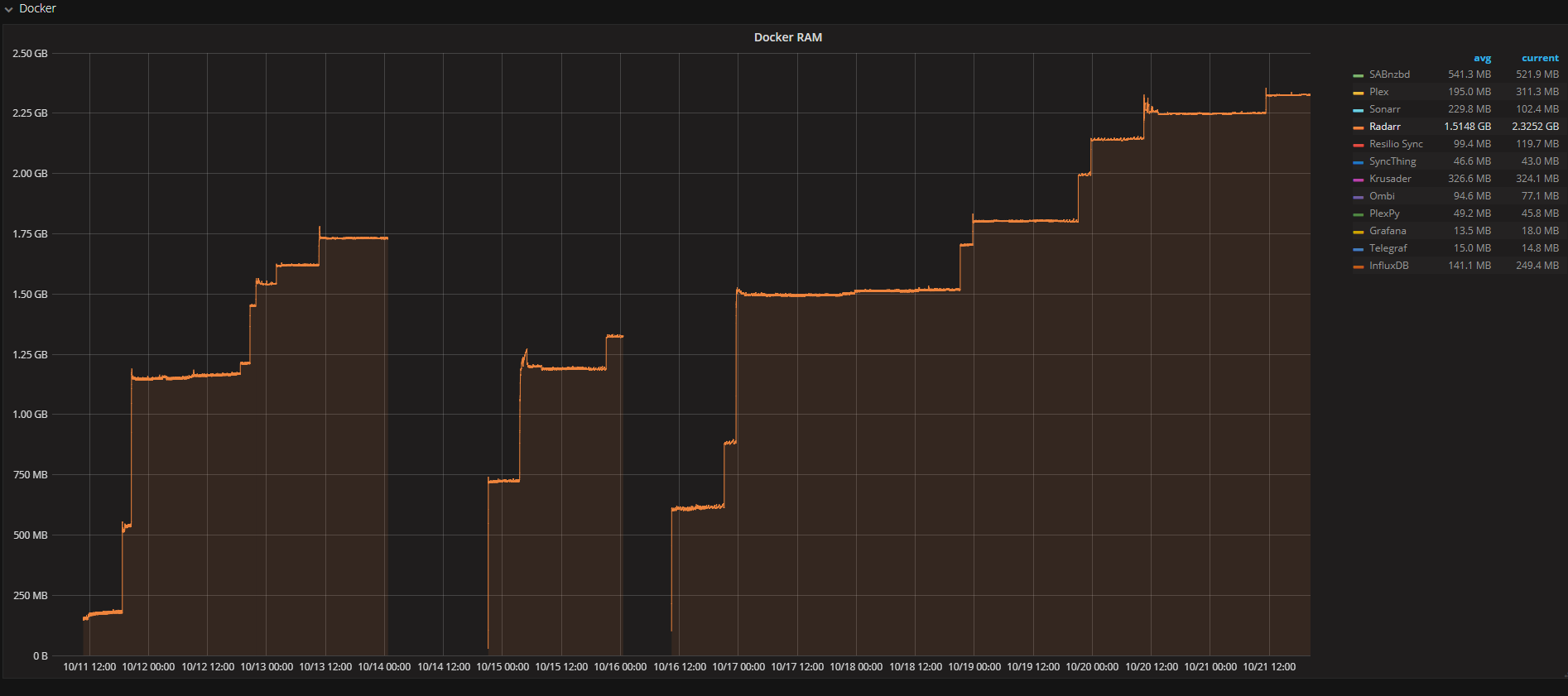

I'm running binhex's Radarr Docker container on unRAID 6.3.5, and right after the container restarts, RAM usage is fairly normal (~750 MB for a library of 480 downloaded and 150 monitored movies).

Whenever I start a library scan, RAM usage obviously rises, but that RAM doesn't seem to be freed after the scan finishes. My server has been up for about 6 days now, and RAM usage climbed from 600 MB to 2.3 GB in that time, across five library scans.

Radarr version is 0.2.0.870

Mono version is 4.8.0 (Stable 4.8.0.495/e4a3cf3 Mon Feb 27 08:30:18 UTC 2017)

Maybe this helps.

EDIT:

Just for testing purposes, I switched to linuxserver's Docker image because it uses the newer Mono version 5.4.0.201 (tarball Fri Oct 13 21:51:45 UTC 2017).

I'll keep an eye on RAM usage. Let's see if it changes anything.

Once again updating this thread, I'm having similar issues.

This occurs during/after importing a movie (in my case Deadpool 4K). It might be relative to how much memory the system has: others have seen memory growth up to 4 GB, while my 64 GB system normally hovers around ~2 GB and spiked to 13 GB after the import.

As you can see memory spiked beyond 13GB during import, and after the import dropped down, however not completely. Might give Sonarr a test to see if Mono is the issue.

@Firefly How many movies do you have?

@galli-leo 401 listed in Radarr, though fewer than 300 downloaded. I should mention I use the linuxserver.io Docker container on unRAID 6.3.5. I've noticed RAM usage went down slightly every 5 minutes, then dropped to normal usage within about half an hour:

You can ignore the Plex usage going up, that's just Plex transcoding to ram.

I feel like after this amount of time unraid itself might be clearing ram, not sure though. If you need logs hit me up, I'll chuck em up when I get the time.

Logs would certainly be good. Might be mono clearing up as well.

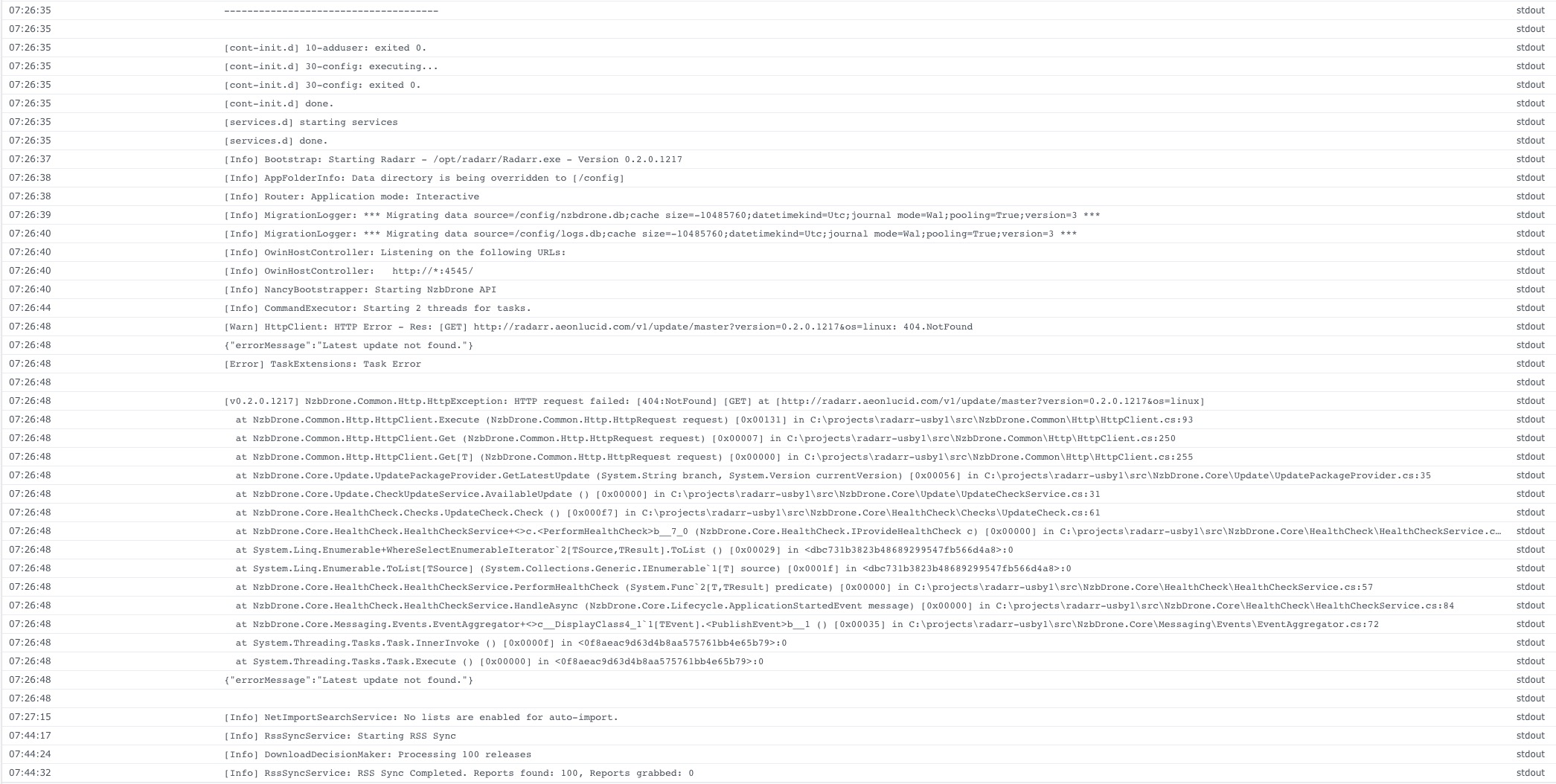

Okay so here's the logs:

radarr.txt

The file is quite large, and I've noticed some other errors in there that I haven't been affected by/not noticed. For that reason here's where the memory issues occur:

17-11-29 07:18:08.0|Error|DownloadedMovieImportService|Import failed, path does not exist or is not accessible by Radarr: /downloads/Deadpool.2016.UHD.BluRay.2160p.TrueHD.Atmos.7.1.HEVC.REMUX-FraMeSToR

17-11-29 07:18:38.6|Info|RecycleBinProvider|Recycling Bin has not been configured, deleting permanently. /movies/Movies/Deadpool (2016)/Deadpool.2016.Remux-1080p.mkv

I've now noticed the same thing occurring in Sonarr. When replacing an entire series in Sonarr, I got similar memory spikes that also gradually declined.

I'll turn on debugging in both Radarr and Sonarr and open an issue with them as well. I've noticed others above are using Docker containers, some from linuxserver.io. Could this be an issue with the container implementation?

One last thing: because my setup imports the files via lftp from a remote location, I get a lot of file-not-found errors until they appear. Is there any way to reduce or stop this warning, or should I not bother?

Thanks for the help!

A few things I noticed in this thread:

First, the graph @kmlucy posted back in June. The increase in resident set from ~1000 to 1236 isn't really a problem unless it keeps happening on subsequent library scans. The remaining 747 is just cache, which the OS would free if the memory were needed.

That said, 1 GB of resident memory is high in itself; my Sonarr instance is at 278 MB (which I already consider higher than I'd like).

But the graph is excellent (netdata ftw) because it appropriately shows swap, rss and cache separately.

@Firelfy Your graph is missing something important: what kind of memory usage it shows. If Radarr opens a lot of files during a library scan, the OS will happily load/cache those files in memory.

I have a mongodb instance on my server that uses 139 GB of virtual memory... (the machine has 8 GB of physical ram). It just happens to have mapped the database into virtual memory space, but in reality it uses only 58 MB with another 111 MB swapped out. Once the database gets busy, the RAM usage shoots up since data from the disk is suddenly cached into memory, and that's fine.

I'm not saying this is the case, there might very well be a memory problem, but saying "App x uses too much memory" simply means very little without appropriate context.

The memory starts to drop significantly when Plex gets busy. That could mean either memory is being swapped out or caches are being released... a very important distinction, and exactly the context I'm talking about.
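For the Docker users in this thread, the kernel already keeps the RSS-versus-cache split per container in the cgroup's `memory.stat` file. The Python sketch below parses that file's text; the helper name is hypothetical, and the file's location depends on the host (on cgroup v1 it is typically under `/sys/fs/cgroup/memory/docker/<container id>/`, on cgroup v2 in the container's own cgroup directory). cgroup v1 reports `rss` and `cache`, while cgroup v2 uses `anon` and `file`; values are in bytes.

```python
from __future__ import annotations


def rss_and_cache(stat_text: str) -> tuple[int, int]:
    """Return (anonymous/RSS bytes, page-cache bytes) from a cgroup memory.stat file."""
    stats = {}
    for line in stat_text.splitlines():
        key, _, value = line.partition(" ")
        if value.strip().isdigit():
            stats[key] = int(value)
    if "anon" in stats:
        # cgroup v2 naming: anonymous memory vs file-backed (page cache)
        return stats["anon"], stats.get("file", 0)
    # cgroup v1 naming
    return stats.get("rss", 0), stats.get("cache", 0)
```

Only the first number is memory the process actually holds; the second is cache the kernel can reclaim under pressure, which is the distinction drawn above.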

@galli-leo If there is a managed memory problem in Radarr, then you need to use the mono log profiler to create a report which you can analyse (to see which managed objects remain in memory). But that doesn't cover unmanaged memory, of course.

@galli-leo @markus101 We should log some cpu/mem/io stats at Debug level every 5 min or so.
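Until something like this exists inside Radarr, the same data can be collected from outside the process. The sketch below is a hypothetical external monitor in Python, not Radarr code: it assumes Linux, reads `/proc/<pid>/status`, `/proc/<pid>/stat` and `/proc/<pid>/io` (the last one usually requires running as the same user or root), and logs one sample every 5 minutes at DEBUG level. The logger and function names are made up for illustration.

```python
import logging
import time

log = logging.getLogger("radarr-monitor")  # hypothetical logger name


def parse_sample(status: str, stat: str, io: str) -> dict:
    """Build one CPU/memory/IO sample from the text of /proc/<pid>/{status,stat,io}."""
    # The VmRSS line looks like "VmRSS:\t  800000 kB"
    rss_kb = next(int(line.split()[1]) for line in status.splitlines()
                  if line.startswith("VmRSS:"))
    # Fields after the ")" closing the command name start at field 3 (state),
    # so utime (field 14) and stime (field 15) sit at indices 11 and 12.
    fields = stat.rsplit(")", 1)[1].split()
    cpu_ticks = int(fields[11]) + int(fields[12])
    counters = dict(line.split(": ", 1) for line in io.splitlines() if ": " in line)
    return {
        "rss_kb": rss_kb,
        "cpu_ticks": cpu_ticks,  # divide by os.sysconf("SC_CLK_TCK") for seconds
        "read_bytes": int(counters.get("read_bytes", 0)),
        "write_bytes": int(counters.get("write_bytes", 0)),
    }


def _read(path: str) -> str:
    with open(path) as f:
        return f.read()


def sample_forever(pid: int, interval_s: float = 300.0) -> None:
    """Log one sample every interval_s seconds (5 minutes by default) at DEBUG level."""
    base = f"/proc/{pid}"
    while True:
        s = parse_sample(_read(f"{base}/status"), _read(f"{base}/stat"), _read(f"{base}/io"))
        log.debug("pid=%d rss=%dkB cpu=%dticks read=%dB write=%dB",
                  pid, s["rss_kb"], s["cpu_ticks"], s["read_bytes"], s["write_bytes"])
        time.sleep(interval_s)
```

Something like `logging.basicConfig(level=logging.DEBUG)` followed by `sample_forever(<Radarr PID>)` would produce a log that can be lined up against library updates and imports.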

Thanks for the info @Taloth , I've produced another graph and reimported a movie and produced this:

It appears that you are correct, and that the increase in usage is an increase in cache. Is this something I should be worried about?

Looking for a suggestion here, when reporting my memory usage for my containers should I report rss usage or total (including cache/rss) usage in my statistics?

Thanks for the help.

@Taloth Cool, I will try that, though we shouldn't have any unmanaged memory, right? (Sorry if it's a dumb question, not too familiar with C# memory management).

@Firelfy the cache is what we like to see, but the RSS is still high. Can you include swap in the graph and then see whether it drops again like before when Plex started doing its thing?

The RSS is pretty stable around 2.61 GB even during the scan, so it's not leaking memory, but that's very high.

I would recommend recording a graph from Radarr's startup, preferably over 24h or so, before moving on to the log profiler to get detailed memory statistics.

The 24h graph will tell you whether the memory usage levels off. That way galli knows whether we're dealing with a memory leak or just static high memory usage.
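One rough way to turn such a 24h recording into a yes/no answer is to compare the growth rate over the last part of the run with the growth rate over the whole run. The Python sketch below does that with least-squares slopes; it is a heuristic of my own, not a method from this thread, and the thresholds (`tail_fraction`, `ratio`) are arbitrary assumptions. Samples are `(time, rss)` pairs with distinct times, in any consistent units.

```python
def slope(points):
    """Least-squares slope of a list of (x, y) points (needs at least two distinct x)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    num = sum((x - mx) * (y - my) for x, y in points)
    den = sum((x - mx) ** 2 for x, _ in points)
    return num / den


def levels_off(samples, tail_fraction=0.25, ratio=0.1):
    """True if growth over the last tail_fraction of the run is below
    ratio times the overall growth rate (i.e. usage has plateaued)."""
    samples = sorted(samples)
    k = max(2, int(len(samples) * tail_fraction))
    overall = slope(samples)
    if overall <= 0:
        return True  # not growing at all
    return slope(samples[-k:]) <= ratio * overall


# Hourly RSS in MB: one run plateaus after ~6h, the other grows steadily.
plateau = [(h, 600 + min(h, 6) * 100) for h in range(25)]
leak = [(h, 600 + 70 * h) for h in range(25)]
print(levels_off(plateau), levels_off(leak))  # prints: True False
```

A curve that keeps climbing at the same rate through the last hours points to a leak; one that flattens points to static (if high) usage.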

@galli-leo unmanaged memory would be IO buffers, libraries, and lots of mono internals. Native libraries like openssl all use unmanaged memory exclusively. So yes, there's going to be quite a bit of unmanaged memory in use.

I'm not going to guide people through the process of using the log profiler; I simply don't have the time for it, but the Mono docs at http://www.mono-project.com/docs/debug+profile/profile/profiler/ should give you all the info you need.

@Taloth Unfortunately I am unable to add swap in the graph, in a short while I'll try another way to grab that info.

In the meantime, I restarted Radarr and took a couple of screenshots of my usage covering just over 24 hours:

As you can see memory usage has continued to climb, every time I visit the web-UI I see a small increase in usage that never decreases. Also every time the Refresh Movie task is run, memory increases and never decreases.

What I'm not sure about is why, if this is some kind of leak, it has only gone up to around 2.5-3 GB of usage and no further.

I have turned on debugging for the same period, however Radarr has split those logs up into many different files. If you would like those logs I'll try and join them up and send them your way.

@galli-leo Hopefully this will help in diagnosing this issue.

It's caused by the "Update Library"... I guess 'feature'... Once you restart, RAM usage goes back to normal. Once you hit "Update Library", it doesn't release the RAM it has taken up after it finishes.

Temporary fix: kill the entire thing and restart it multiple times a day.

Seems like the culprit to me. I have plenty of ram so it's no concern to me, especially since my dockers auto restart weekly anyway. However I'm sure this would be an issue for some.

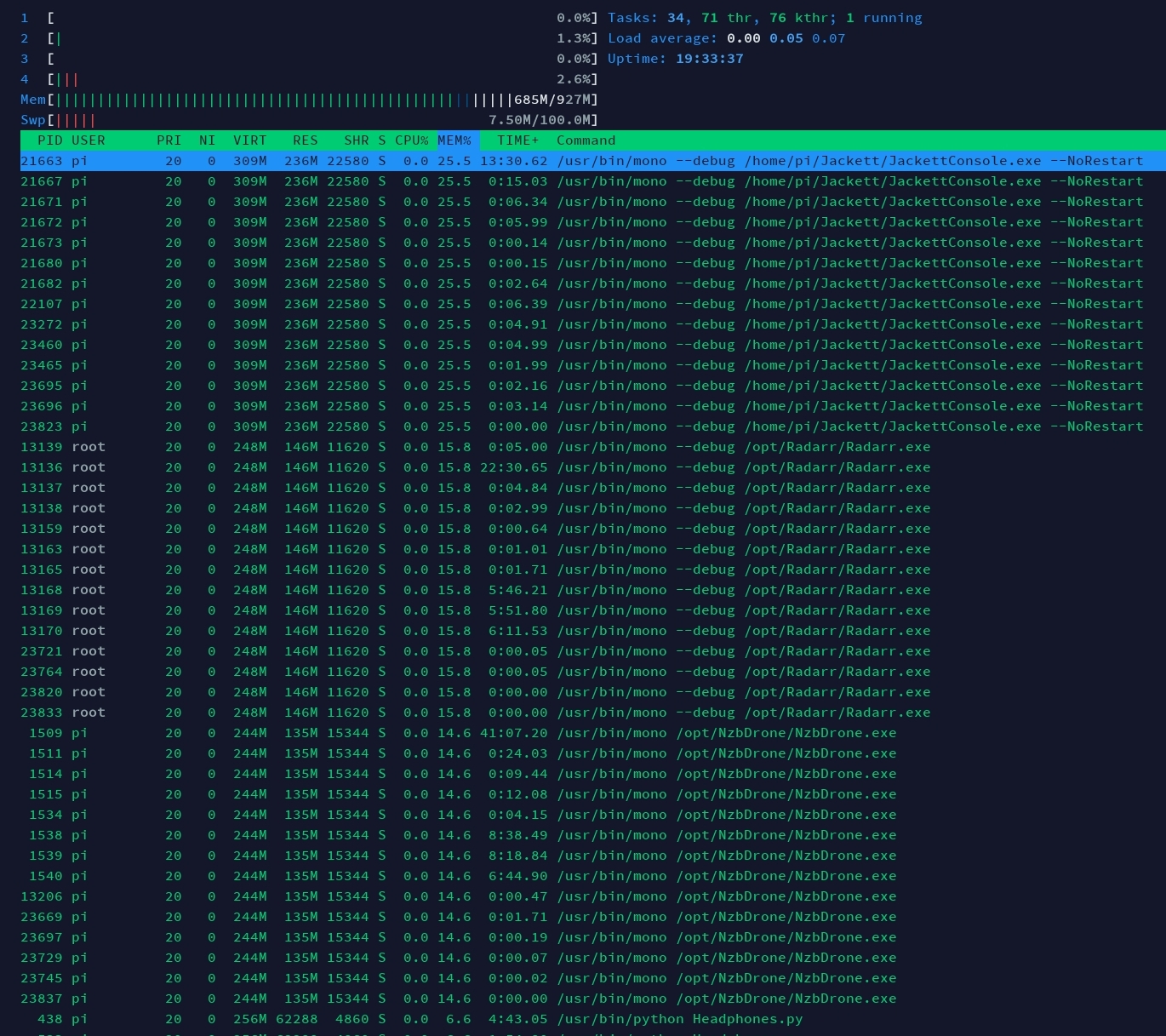

I've been watching this because I have Radarr running on a memory-limited Raspberry Pi, and I've been running into crashes due to Radarr/Mono running out of memory. Total memory in use sits just under 50% with Radarr on a fresh start, and I could see in-use memory going up each time I ran an update library. Restart Radarr, and it's back under 50% again. So it would appear to be the cause for me as well; I just run into issues a lot sooner than most because of my limited available memory.

I can confirm that the RAM usage is out of the ordinary on my side too. After running for 3 days, Radarr alone is using 800 MB of RAM (running inside the linuxserver.io container). I suspect that the crash my NAS had a few days ago was caused by Radarr taking up too much RAM. I will keep an eye on it from now on.

@BoKKeR I doubt it was caused by Radarr, even if it was using too much RAM. That should never lead to an OS crash, especially inside Docker. Also, 800 MB doesn't seem too unreasonable for a Mono application this large, since it has to run a web server as well. When I finally have some more time, I will try to profile Radarr's memory usage.

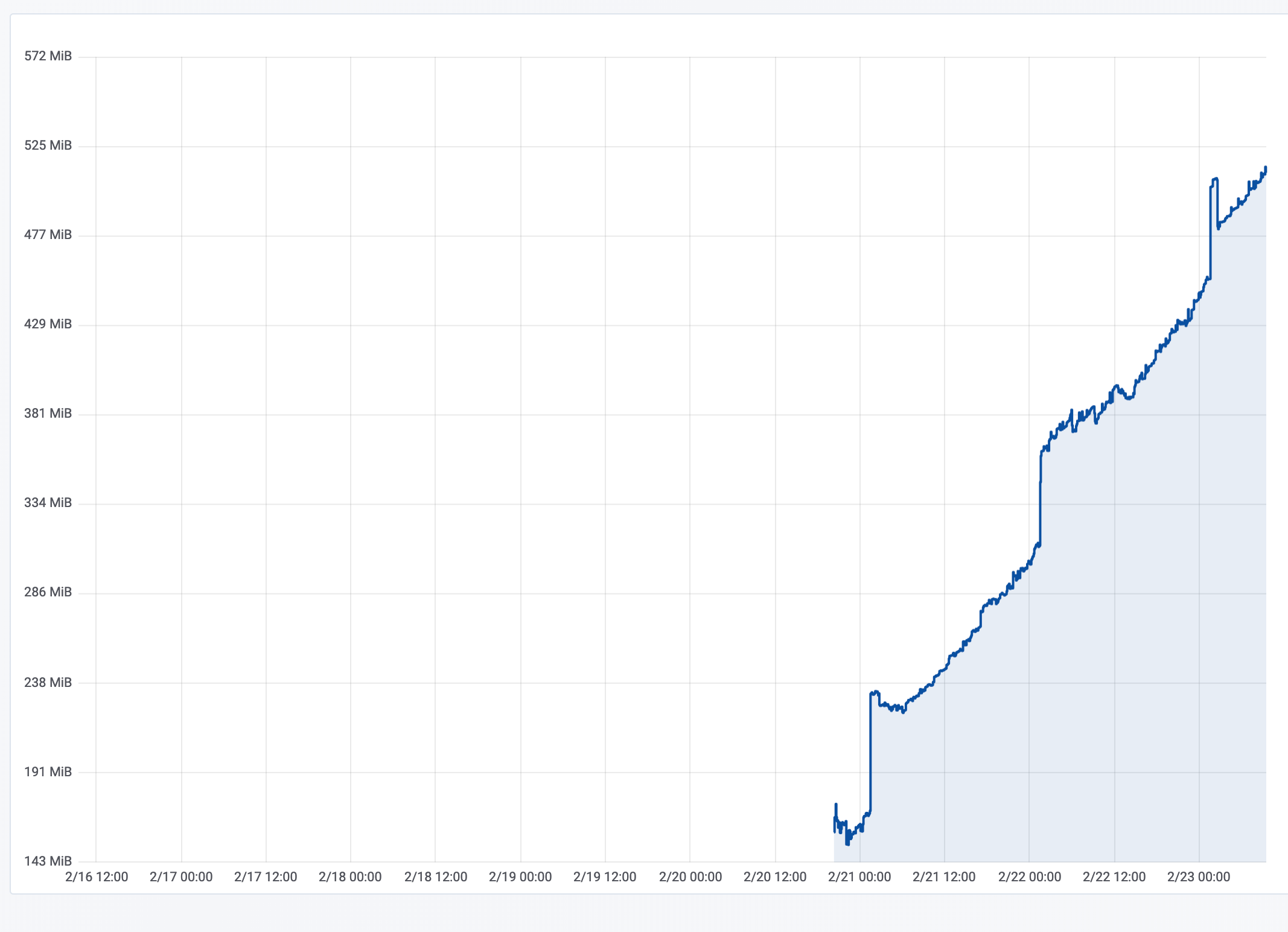

I don't know if this would be the same thing but I can reproduce a leak by triggering the housekeeping task (so I have this problem every 24 hours):

This is on a Linux system with the latest Mono 5.8.0 and 2 GB of memory in total. That's a +500 MB increase in an instant (pure memory usage, non-shared, non-virtual) that eventually crashes Radarr, and in the meantime can make the system unusable as soon as something else is going on (e.g. Kodi playing). This has been happening to me more or less since the latest update; previously it would not go over ~200 MB. Radarr holds ~50 items. I have no problems with Sonarr or Jackett.

Executing the housekeeping a second time does not further increase memory usage as far as I can tell.

Profiling data:

https://ufile.io/peu3y

Trace logs, not much happening to be honest.

radarr.trace.txt

@jacaru Did you try limiting Radarr's memory usage? Not sure why the housekeeping task would cause such a memory increase; it only executes a few SQL queries, especially since we didn't add anything to it in the latest release. Are you sure the daily movie refresh doesn't happen at the same time?

Thanks for the profile report; unfortunately the allocations don't seem to be in there for whatever reason :( Can you redo the report with log:calls,alloc?

I probably did something wrong with that profile; I wanted to leave calls out because it makes the profile very big and slow. This new profile has both calls and allocs anyway.

https://ufile.io/jgv5o

How would I go about limiting the memory for radarr? Any hint?

Oh wow, yeah, that's big (RIP my laptop processing that). Are you using Docker? If so, it should be relatively easy (just search for it; I don't know how myself either). If not, probably a lot harder.

I took a look on my side, and the profile does not match the numbers:

Total memory allocated: 101057416 bytes in 1984716 objects

That's against the +500 MB usage the system reports. How could that be? I guess the architecture where you analyze the report doesn't need to be the same as where it was generated?

@jacaru Could it be something like what Taloth described?
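Putting the two numbers side by side helps frame the question. The Python arithmetic below uses only the figures quoted above, treating the reported "+500MB" as MiB for a rough comparison. One caveat worth keeping in mind: the profiler's "Total memory allocated" is the sum of all managed allocations during the profiled run (much of which the GC may already have collected), not the live heap, and it does not include unmanaged memory at all.

```python
total_bytes = 101_057_416      # "Total memory allocated" from the profile report
objects = 1_984_716            # number of objects in the same report line
reported_increase_mib = 500    # the "+500MB" seen by the system, treated as MiB

mib = total_bytes / 2**20
avg_object = total_bytes / objects

print(f"{mib:.1f} MiB allocated, {avg_object:.1f} bytes/object on average")
print(f"managed allocations are ~{mib / reported_increase_mib:.0%} of the reported increase")
# prints:
# 96.4 MiB allocated, 50.9 bytes/object on average
# managed allocations are ~19% of the reported increase
```

So even if every managed allocation had survived, the profile would explain only about a fifth of the growth, which is why unmanaged memory becomes the next suspect.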

Your graph is missing something important: what kind of memory usage it shows. If Radarr opens a lot of files during a library scan, the OS will happily load/cache those files in memory.

I have a mongodb instance on my server that uses 139 GB of virtual memory... (the machine has 8 GB of physical ram). It just happens to have mapped the database into virtual memory space, but in reality it uses only 58 MB with another 111 MB swapped out. Once the database gets busy, the RAM usage shoots up since data from the disk is suddenly cached into memory, and that's fine.

Also what's the timeframe of your profile? i.e. started radarr at 0s, executed x at 2s, killed radarr at 4s.

@Taloth Sorry to bother you again, but I basically have no idea what I am doing when it comes to memory debugging 😬.

Anyways, according to @jacaru profile, the most memory is taken up by Strings. Most of that is taken up by sqlite stuff, especially the Providers. Maybe something in the sqlite process, BasicRepository, DataMapper doesn't release the strings / objects? As I said, no idea why that would be happening.

28013440 bytes in 489131 objects (average 57 bytes): System.String

6858192 bytes from:

Marr.Data.QGen.QueryBuilder`1<T_REF>:ToList ()

Marr.Data.DataMapper:Query<T_REF> (string,System.Collections.Generic.ICollection`1<T_REF>,bool)

Marr.Data.Mapping.MappingHelper:CreateAndLoadEntity<T_REF> (Marr.Data.Mapping.ColumnMapCollection,System.Data.Common.DbDataReader,bool)

Marr.Data.Mapping.MappingHelper:CreateAndLoadEntity (System.Type,Marr.Data.Mapping.ColumnMapCollection,System.Data.Common.DbDataReader,bool)

Marr.Data.Mapping.MappingHelper:LoadExistingEntity (Marr.Data.Mapping.ColumnMapCollection,System.Data.Common.DbDataReader,object,bool)

NzbDrone.Core.Datastore.Converters.ProviderSettingConverter:FromDB (Marr.Data.Converters.ConverterContext)

NzbDrone.Common.Reflection.ReflectionExtensions:FindTypeByName (System.Reflection.Assembly,string)

System.Linq.Enumerable:SingleOrDefault<TSource_REF> (System.Collections.Generic.IEnumerable`1<TSource_REF>,System.Func`2<TSource_REF, bool>)

NzbDrone.Common.Reflection.ReflectionExtensions/<>c__DisplayClass7_0:<FindTypeByName>b__0 (System.Type)

(wrapper managed-to-native) System.RuntimeType:get_Name (System.RuntimeType)

1376216 bytes from:

Marr.Data.QGen.SortBuilder`1<T_REF>:ToList ()

Marr.Data.QGen.QueryBuilder`1<T_REF>:ToList ()

Marr.Data.DataMapper:QueryToGraph<T_REF> (string,Marr.Data.EntityGraph,System.Collections.Generic.List`1<System.Reflection.MemberInfo>)

Marr.Data.Mapping.MappingHelper:CreateAndLoadEntity (System.Type,Marr.Data.Mapping.ColumnMapCollection,System.Data.Common.DbDataReader,bool)

Marr.Data.Mapping.MappingHelper:LoadExistingEntity (Marr.Data.Mapping.ColumnMapCollection,System.Data.Common.DbDataReader,object,bool)

System.Data.SQLite.SQLiteDataReader:GetOrdinal (string)

System.Data.SQLite.SQLite3:ColumnIndex (System.Data.SQLite.SQLiteStatement,string)

System.Data.SQLite.SQLite3:ColumnName (System.Data.SQLite.SQLiteStatement,int)

System.Data.SQLite.SQLiteConvert:UTF8ToString (intptr,int)

(wrapper managed-to-native) string:FastAllocateString (int)

339848 bytes from:

Marr.Data.QGen.QueryBuilder`1<T_REF>:ToList ()

Marr.Data.DataMapper:Query<T_REF> (string,System.Collections.Generic.ICollection`1<T_REF>,bool)

Marr.Data.Mapping.MappingHelper:CreateAndLoadEntity<T_REF> (Marr.Data.Mapping.ColumnMapCollection,System.Data.Common.DbDataReader,bool)

Marr.Data.Mapping.MappingHelper:CreateAndLoadEntity (System.Type,Marr.Data.Mapping.ColumnMapCollection,System.Data.Common.DbDataReader,bool)

Marr.Data.Mapping.MappingHelper:LoadExistingEntity (Marr.Data.Mapping.ColumnMapCollection,System.Data.Common.DbDataReader,object,bool)

System.Data.SQLite.SQLiteDataReader:GetValue (int)

System.Data.SQLite.SQLite3:GetValue (System.Data.SQLite.SQLiteStatement,System.Data.SQLite.SQLiteConnectionFlags,int,System.Data.SQLite.SQLiteType)

System.Data.SQLite.SQLite3:GetText (System.Data.SQLite.SQLiteStatement,int)

System.Data.SQLite.SQLiteConvert:UTF8ToString (intptr,int)

(wrapper managed-to-native) string:FastAllocateString (int)

333216 bytes from:

Marr.Data.QGen.QueryBuilder`1<T_REF>:ToList ()

Marr.Data.DataMapper:QueryToGraph<T_REF> (string,Marr.Data.EntityGraph,System.Collections.Generic.List`1<System.Reflection.MemberInfo>)

Marr.Data.EntityGraph:IsNewGroup (System.Data.Common.DbDataReader)

Marr.Data.GroupingKeyCollection:CreateGroupingKey (System.Data.Common.DbDataReader)

System.Data.SQLite.SQLiteDataReader:get_Item (string)

System.Data.SQLite.SQLiteDataReader:GetOrdinal (string)

System.Data.SQLite.SQLite3:ColumnIndex (System.Data.SQLite.SQLiteStatement,string)

System.Data.SQLite.SQLite3:ColumnName (System.Data.SQLite.SQLiteStatement,int)

System.Data.SQLite.SQLiteConvert:UTF8ToString (intptr,int)

(wrapper managed-to-native) string:FastAllocateString (int)

325008 bytes from:

System.Data.SQLite.UnsafeNativeMethods:Initialize ()

System.Data.SQLite.UnsafeNativeMethods:SearchForDirectory (string&,string&)

System.Data.SQLite.UnsafeNativeMethods:GetPlatformName (string)

System.Data.SQLite.UnsafeNativeMethods:GetProcessorArchitecture ()

System.Data.SQLite.UnsafeNativeMethods:GetSettingValue (string,string)

System.Data.SQLite.UnsafeNativeMethods:GetXmlConfigFileName ()

System.Data.SQLite.UnsafeNativeMethods:GetAssemblyDirectory ()

System.Data.SQLite.UnsafeNativeMethods:CheckAssemblyCodeBase (System.Reflection.Assembly,string&)

System.Data.SQLite.UnsafeNativeMethods:CheckForArchitecturesAndPlatforms (string,System.Collections.Generic.List`1<string>&)

(wrapper managed-to-native) string:FastAllocateString (int)

323056 bytes from:

System.Data.SQLite.SQLiteConnection:.ctor (string,bool)

System.Data.SQLite.UnsafeNativeMethods:Initialize ()

System.Data.SQLite.UnsafeNativeMethods:SearchForDirectory (string&,string&)

System.Data.SQLite.UnsafeNativeMethods:GetProcessorArchitecture ()

System.Data.SQLite.UnsafeNativeMethods:GetSettingValue (string,string)

System.Data.SQLite.UnsafeNativeMethods:GetXmlConfigFileName ()

System.Data.SQLite.UnsafeNativeMethods:GetAssemblyDirectory ()

System.Data.SQLite.UnsafeNativeMethods:CheckAssemblyCodeBase (System.Reflection.Assembly,string&)

System.Data.SQLite.UnsafeNativeMethods:CheckForArchitecturesAndPlatforms (string,System.Collections.Generic.List`1<string>&)

(wrapper managed-to-native) string:FastAllocateString (int)

323056 bytes from:

System.Data.SQLite.SQLiteConnection:.ctor (string,bool)

System.Data.SQLite.UnsafeNativeMethods:Initialize ()

System.Data.SQLite.UnsafeNativeMethods:PreLoadSQLiteDll (string,string,string&,intptr&)

System.Data.SQLite.UnsafeNativeMethods:GetProcessorArchitecture ()

System.Data.SQLite.UnsafeNativeMethods:GetSettingValue (string,string)

System.Data.SQLite.UnsafeNativeMethods:GetXmlConfigFileName ()

System.Data.SQLite.UnsafeNativeMethods:GetAssemblyDirectory ()

System.Data.SQLite.UnsafeNativeMethods:CheckAssemblyCodeBase (System.Reflection.Assembly,string&)

System.Data.SQLite.UnsafeNativeMethods:CheckForArchitecturesAndPlatforms (string,System.Collections.Generic.List`1<string>&)

(wrapper managed-to-native) string:FastAllocateString (int)

323056 bytes from:

System.Data.SQLite.SQLiteConnection:.ctor (string,bool)

System.Data.SQLite.UnsafeNativeMethods:Initialize ()

System.Data.SQLite.UnsafeNativeMethods:PreLoadSQLiteDll (string,string,string&,intptr&)

System.Data.SQLite.UnsafeNativeMethods:GetBaseDirectory ()

System.Data.SQLite.UnsafeNativeMethods:GetSettingValue (string,string)

System.Data.SQLite.UnsafeNativeMethods:GetXmlConfigFileName ()

System.Data.SQLite.UnsafeNativeMethods:GetAssemblyDirectory ()

System.Data.SQLite.UnsafeNativeMethods:CheckAssemblyCodeBase (System.Reflection.Assembly,string&)

System.Data.SQLite.UnsafeNativeMethods:CheckForArchitecturesAndPlatforms (string,System.Collections.Generic.List`1<string>&)

(wrapper managed-to-native) string:FastAllocateString (int)

267000 bytes from:

Marr.Data.QGen.SortBuilder`1<T_REF>:ToList ()

Marr.Data.QGen.QueryBuilder`1<T_REF>:ToList ()

Marr.Data.DataMapper:QueryToGraph<T_REF> (string,Marr.Data.EntityGraph,System.Collections.Generic.List`1<System.Reflection.MemberInfo>)

Marr.Data.Mapping.MappingHelper:CreateAndLoadEntity (System.Type,Marr.Data.Mapping.ColumnMapCollection,System.Data.Common.DbDataReader,bool)

Marr.Data.Mapping.MappingHelper:LoadExistingEntity (Marr.Data.Mapping.ColumnMapCollection,System.Data.Common.DbDataReader,object,bool)

System.Data.SQLite.SQLiteDataReader:GetValue (int)

System.Data.SQLite.SQLite3:GetValue (System.Data.SQLite.SQLiteStatement,System.Data.SQLite.SQLiteConnectionFlags,int,System.Data.SQLite.SQLiteType)

System.Data.SQLite.SQLite3:GetText (System.Data.SQLite.SQLiteStatement,int)

System.Data.SQLite.SQLiteConvert:UTF8ToString (intptr,int)

(wrapper managed-to-native) string:FastAllocateString (int)

265920 bytes from:

TinyIoC.TinyIoCContainer:ConstructType (System.Type,System.Type,System.Reflection.ConstructorInfo,TinyIoC.NamedParameterOverloads,TinyIoC.ResolveOptions)

(wrapper dynamic-method) object:lambda_method (System.Runtime.CompilerServices.Closure,object[])

NzbDrone.Core.Download.DownloadClientFactory:.ctor (NzbDrone.Core.Download.IDownloadClientRepository,System.Collections.Generic.IEnumerable`1<NzbDrone.Core.Download.IDownloadClient>,NzbDrone.Common.Composition.IContainer,NzbDrone.Core.Messaging.Events.IEventAggregator,NLog.Logger)

NzbDrone.Core.ThingiProvider.ProviderFactory`2<TProvider_REF, TProviderDefinition_REF>:.ctor (NzbDrone.Core.ThingiProvider.IProviderRepository`1<TProviderDefinition_REF>,System.Collections.Generic.IEnumerable`1<TProvider_REF>,NzbDrone.Common.Composition.IContainer,NzbDrone.Core.Messaging.Events.IEventAggregator,NLog.Logger)

System.Linq.Enumerable:ToList<TSource_REF> (System.Collections.Generic.IEnumerable`1<TSource_REF>)

System.Linq.Enumerable/<CastIterator>d__34`1<TResult_REF>:MoveNext ()

System.Linq.Enumerable/WhereSelectEnumerableIterator`2<TSource_REF, TResult_REF>:MoveNext ()

TinyIoC.TinyIoCContainer:<ResolveAllInternal>b__134_2 (TinyIoC.TinyIoCContainer/TypeRegistration)

TinyIoC.TinyIoCContainer:ResolveInternal (TinyIoC.TinyIoCContainer/TypeRegistration,TinyIoC.NamedParameterOverloads,TinyIoC.ResolveOptions)

TinyIoC.TinyIoCContainer/DelegateFactory:GetObject (System.Type,TinyIoC.TinyIoCContainer,TinyIoC.NamedParameterOverloads,TinyIoC.ResolveOptions)

The next most allocated type is System.UInt32[], which seems to stem mostly from cryptography (I am assuming SSL):

15534992 bytes in 95255 objects (average 163 bytes): System.UInt32[]

14732088 bytes from:

Microsoft.Owin.Infrastructure.AppFuncTransition:Invoke (Microsoft.Owin.IOwinContext)

Microsoft.AspNet.SignalR.Owin.Handlers.PersistentConnectionHandler:Invoke (System.Collections.Generic.IDictionary`2<string, object>)

Microsoft.AspNet.SignalR.Owin.CallHandler:Invoke (System.Collections.Generic.IDictionary`2<string, object>)

Microsoft.AspNet.SignalR.PersistentConnection:ProcessRequest (Microsoft.AspNet.SignalR.Hosting.HostContext)

Microsoft.AspNet.SignalR.PersistentConnection:TryGetConnectionId (Microsoft.AspNet.SignalR.Hosting.HostContext,string,string&,string&,int&)

Microsoft.AspNet.SignalR.Infrastructure.DefaultProtectedData:Unprotect (string,string)

System.Security.Cryptography.ProtectedData:Unprotect (byte[],byte[],System.Security.Cryptography.DataProtectionScope)

Mono.Security.Cryptography.ManagedProtection:Unprotect (byte[],byte[],System.Security.Cryptography.DataProtectionScope)

(wrapper alloc) object:ProfilerAllocVector (intptr,intptr)

(wrapper managed-to-native) object:__icall_wrapper_mono_profiler_raise_gc_allocation (object)

774008 bytes from:

Microsoft.Owin.Infrastructure.AppFuncTransition:Invoke (Microsoft.Owin.IOwinContext)

Microsoft.AspNet.SignalR.Owin.Handlers.PersistentConnectionHandler:Invoke (System.Collections.Generic.IDictionary`2<string, object>)

Microsoft.AspNet.SignalR.Owin.CallHandler:Invoke (System.Collections.Generic.IDictionary`2<string, object>)

Microsoft.AspNet.SignalR.PersistentConnection:ProcessRequest (Microsoft.AspNet.SignalR.Hosting.HostContext)

Microsoft.AspNet.SignalR.PersistentConnection:TryGetConnectionId (Microsoft.AspNet.SignalR.Hosting.HostContext,string,string&,string&,int&)

Microsoft.AspNet.SignalR.Infrastructure.DefaultProtectedData:Unprotect (string,string)

System.Security.Cryptography.ProtectedData:Unprotect (byte[],byte[],System.Security.Cryptography.DataProtectionScope)

Mono.Security.Cryptography.ManagedProtection:Unprotect (byte[],byte[],System.Security.Cryptography.DataProtectionScope)

(wrapper alloc) object:ProfilerAllocVector (intptr,intptr)

(wrapper managed-to-native) object:__icall_wrapper_mono_gc_alloc_vector (intptr,intptr,intptr)

5792 bytes from:

Microsoft.Owin.Infrastructure.AppFuncTransition:Invoke (Microsoft.Owin.IOwinContext)

Microsoft.AspNet.SignalR.Owin.Handlers.PersistentConnectionHandler:Invoke (System.Collections.Generic.IDictionary`2<string, object>)

Microsoft.AspNet.SignalR.Owin.CallHandler:Invoke (System.Collections.Generic.IDictionary`2<string, object>)

Microsoft.AspNet.SignalR.PersistentConnection:ProcessRequest (Microsoft.AspNet.SignalR.Hosting.HostContext)

Microsoft.AspNet.SignalR.PersistentConnection:ProcessNegotiationRequest (Microsoft.AspNet.SignalR.Hosting.HostContext)

Microsoft.AspNet.SignalR.Infrastructure.DefaultProtectedData:Protect (string,string)

System.Security.Cryptography.ProtectedData:Protect (byte[],byte[],System.Security.Cryptography.DataProtectionScope)

Mono.Security.Cryptography.ManagedProtection:Protect (byte[],byte[],System.Security.Cryptography.DataProtectionScope)

(wrapper alloc) object:ProfilerAllocVector (intptr,intptr)

(wrapper managed-to-native) object:__icall_wrapper_mono_profiler_raise_gc_allocation (object)

5248 bytes from:

Microsoft.AspNet.SignalR.Owin.Handlers.PersistentConnectionHandler:Invoke (System.Collections.Generic.IDictionary`2<string, object>)

Microsoft.AspNet.SignalR.Owin.CallHandler:Invoke (System.Collections.Generic.IDictionary`2<string, object>)

Microsoft.AspNet.SignalR.PersistentConnection:ProcessRequest (Microsoft.AspNet.SignalR.Hosting.HostContext)

Microsoft.AspNet.SignalR.PersistentConnection:ProcessNegotiationRequest (Microsoft.AspNet.SignalR.Hosting.HostContext)

Microsoft.AspNet.SignalR.Infrastructure.DefaultProtectedData:Protect (string,string)

System.Security.Cryptography.ProtectedData:Protect (byte[],byte[],System.Security.Cryptography.DataProtectionScope)

Mono.Security.Cryptography.ManagedProtection:Protect (byte[],byte[],System.Security.Cryptography.DataProtectionScope)

Mono.Security.Cryptography.ManagedProtection:GetKey

So I got some advice from the Mono devs. This might be unmanaged memory, which won't show up in the profile (for example, open files). They suggested taking a core dump:

https://ufile.io/5f3zy

@jacaru Could you try using a different uploader (e.g. Google Drive) next time? This one takes ages to download. Also, any suggestions from the Mono devs on how to analyze a core dump?

@jacaru Could it be something like what Taloth is experiencing?

Your graph is missing something important: what kind of memory usage it shows. If Radarr opens a lot of files during a library scan, the OS will happily load/cache those files in memory.

I have a MongoDB instance on my server that uses 139 GB of virtual memory... (the machine has 8 GB of physical RAM). It just happens to have mapped the database into virtual memory space, but in reality it uses only 58 MB, with another 111 MB swapped out. Once the database gets busy, RAM usage shoots up since data from the disk is suddenly cached in memory, and that's fine.

It may load files into virtual memory, but that should not take up RSS memory permanently.

These are the sizes of my Radarr directory:

- 1.2M Backups
- 224M MediaCover
- 1.5M UpdateLogs
- 4.0K config.xml
- 12M logs
- 208M logs.db
- 1.1M logs.db-shm
- 214M logs.db-wal
- 1.8M nzbdrone.db
- 32K nzbdrone.db-shm
- 340K nzbdrone.db-wal
- 4.0K nzbdrone.pid

Also, what's the timeframe of your profile? E.g. started Radarr at 0s, executed X at 2s, killed Radarr at 4s.

Yeah, that's what I do; I don't have any precise timing at hand.

> @jacaru Could you try using a different uploader (e.g. Google Drive) next time? This one takes ages to download.

Ok.

So I guess we need to find a way to track down unmanaged memory.

> Yeah, that's what I do; I don't have any precise timing at hand.

But do you have some estimations? e.g. did you start Radarr, ran it for 10 minutes, downloaded some stuff, then executed Housekeeping, then killed it?

I would say: start Radarr, go to the Tasks page, let it breathe for 30 seconds, execute Housekeeping, wait 30 seconds more, shut down.

The bit about unmanaged memory is what I was going to say, because 28 MB in 'strings' isn't much.

But 500 MB isn't a huge amount of memory... it's also not a leak unless triggering housekeeping will add 500 MB each time you do it. (to analyze that you'll have to look at virtual memory usage trend after triggering housekeeping repeatedly, since the resident usage will quickly top out due to physical memory constraints)

Finally, debugging a core dump is particularly difficult. I think you'd have to look at tools like Valgrind to be able to see where the relevant chunks of unmanaged memory are allocated (at runtime; you can't do that with a core dump). I haven't gone down that particular rabbit hole myself.

To add: Determine the trend, if it's leaking periodically then valgrind might help. If it's just once after the first housekeeping then it probably requires a different approach.

@galli-leo Find out what the individual housekeeping does exactly and find a way to trigger those individually. Add a property to HousekeepingCommand so the individual housekeepers can be filtered (A string 'filter' property for housekeepers.Where(v =>v.GetType().Name.Contains(...))). Then jacaru can call the api and try the various combinations to see which adds the most memory.
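The filter Taloth describes is just a substring match on each housekeeper's type name. Here is a minimal Python sketch of that selection logic, assuming an optional string filter where "no filter" keeps today's behaviour of running everything. The class names are hypothetical stand-ins, not Radarr's real C# housekeepers.

```python
from typing import Iterable, List, Optional


class Housekeeper:
    """Hypothetical stand-in for a housekeeping task (Radarr's real ones are C# classes)."""

    def clean(self) -> None:
        print(f"running {type(self).__name__}")


# Hypothetical housekeeper names, for illustration only.
class CleanupOrphanedItems(Housekeeper):
    pass


class CleanupUnusedMediaCovers(Housekeeper):
    pass


class FixMovieTitles(Housekeeper):
    pass


def select_housekeepers(housekeepers: Iterable[Housekeeper],
                        name_filter: Optional[str] = None) -> List[Housekeeper]:
    """Keep housekeepers whose class name contains name_filter (case-sensitive).

    Mirrors the suggested housekeepers.Where(v => v.GetType().Name.Contains(filter));
    no filter means every housekeeper runs, as it does today.
    """
    items = list(housekeepers)
    if not name_filter:
        return items
    return [h for h in items if name_filter in type(h).__name__]


ALL_HOUSEKEEPERS = [CleanupOrphanedItems(), CleanupUnusedMediaCovers(), FixMovieTitles()]

if __name__ == "__main__":
    for housekeeper in select_housekeepers(ALL_HOUSEKEEPERS, "MediaCover"):
        housekeeper.clean()
```

Keeping the match case-sensitive matches C#'s default `string.Contains`, so a filter tested here behaves the same way once passed through the API.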

Once the likely culprit has been found, make sure you have a managed memory profile from before housekeeping is run and one from after, so they can be compared. (The unmanaged memory might well be 'kept alive' by a managed instance of something.)

I will check Valgrind and cross-check virtual memory. I do agree it might not be proper to talk about a leak, but that does not mean the memory use is reasonable.

Comparing the file sizes to those of Sonarr...

- 2.1M Backups
- 26M MediaCover
- 788K UpdateLogs
- 4.0K config.xml
- 8.8M logs
- 3.0M logs.db
- 32K logs.db-shm
- 5.0M logs.db-wal
- 2.8M nzbdrone.db
- 32K nzbdrone.db-shm
- 4.0K nzbdrone.db-wal
- 4.0K nzbdrone.pid

...which holds roughly 25 items, compared to the 50 items in Radarr. Much smaller sizes all around. I wonder if that has anything to do with it.

Is there a way to reproduce this on a Mac?

@Taloth Cool, will check it out. I can just set up a Linux environment, or try to replicate the memory usage on my Mac, where I can attach Valgrind. One thing I could think of would be the MediaCovers? Maybe it's loading the media covers into virtual memory.

Anyway, I still don't think the memory usage has anything to do with housekeepers, as others have seen it happen with the refresh movies task or RSS sync. Hmm, so two housekeepers (the media covers one and Clean Title Fixer) get all movies. I am guessing _movieservice.GetAllMovies() is the culprit, since it occurs in all those other tasks too.

> I will check Valgrind and cross-check virtual memory. I do agree it might not be proper to talk about a leak, but that does not mean the memory use is reasonable.

Agreed, I just wanted to point out that it's not necessarily a 'leak', since our approach will have to be different. (If it's a true leak, then we just repeat the process to find out what gets allocated the most.)

Anyway, you might want to start with the virtual memory cross-check: which step adds how much vsize vs. vrss vs. vdata? Measure the baseline, then after 1x housekeeping and after 2x housekeeping. Is the baseline the same across multiple startups? Etc.

I'd also like to know whether it scales: back up your appdata dir, delete half the items, run housekeeping (which vacuums the DB), restart Radarr and do the test again.

Doing those tests is a bit tedious, but it's going to be easier than valgrind and gives us some basic numbers.
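To make the baseline / 1x / 2x measurements less tedious, the numbers can be read straight from Linux's `/proc/<pid>/status`, where VmSize corresponds to htop's VIRT and VmRSS to RES. A minimal sketch, assuming Linux and Python 3 on the box; the PID to pass is Radarr's mono process.

```python
import os
from typing import Dict

# VmSize ~ htop VIRT, VmRSS ~ htop RES, VmData ~ size of the data segment.
FIELDS = ("VmSize", "VmRSS", "VmData")


def parse_status(text: str) -> Dict[str, int]:
    """Return the selected Vm* fields (in kB) from the contents of /proc/<pid>/status."""
    result = {}
    for line in text.splitlines():
        key, _, value = line.partition(":")
        if key in FIELDS:
            # Values look like "  662112 kB"; keep only the number.
            result[key] = int(value.split()[0])
    return result


def snapshot(pid: int) -> Dict[str, int]:
    """Read one memory snapshot of a running process (Linux only)."""
    with open(f"/proc/{pid}/status") as fh:
        return parse_status(fh.read())


if __name__ == "__main__" and os.path.exists("/proc/self/status"):
    # Demo on this Python process; pass Radarr's mono PID instead for the real test.
    print(snapshot(os.getpid()))
```

Taking one snapshot per step (start, each housekeeping run, each restart) gives directly comparable numbers instead of eyeballing htop.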

The first time housekeeping gets called, Mono will JIT-compile quite a few methods that haven't been called yet, and the SQL abstraction layer will have generated cached queries, etc. That's stuff that won't (shouldn't) happen again but adds to memory. I wouldn't expect it to add that much memory usage, though.

@galli-leo Yes, but housekeeping is fairly local. RssSync and Refresh does quite a bit of http calls and other noisy logic. If we can exclude http then we don't have to worry about that subsystem and related unmanaged libraries. It's not the 'cause', it just likely makes it easier to isolate.

Let us know if you get it reproduced on your mac. miguel's input could be invaluable.

Last time I opened Sonarr's mlpd trace into Xamarin's Profiler it took an hour to load :smile:

I haven't been able to get Radarr working with Valgrind; it starts but times out on access. Maybe I could try triggering the housekeeping with a REST call, but I don't know what resource to point the request to.

Multiple calls to housekeeping don't increase the memory usage further, including virtual memory. The RSS sync and movie refresh tasks don't have the problem either.

I tried a separate Radarr instance with an empty database, and the problem does not happen. How is the MediaCover folder structured? Does it hold a subdir per movie? In that case I have more subdirs than movies. Also, how is 214M logs.db-wal so big?

This is htop output. Each line was taken after the following actions, respectively: Radarr start, housekeeping x 3, refresh movies, RSS sync, housekeeping:

PID USER PRI NI VIRT RES SHR S CPU% MEM% TIME+ Command

10493 radarr 30 10 160M 90464 24596 S 12.7 4.9 0:34.06 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10493 radarr 30 10 662M 589M 25712 S 0.0 33.0 1:04.40 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10493 radarr 30 10 663M 589M 25864 S 0.0 32.9 1:31.16 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10493 radarr 30 10 663M 589M 25896 S 0.0 33.0 1:57.22 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10493 radarr 30 10 681M 599M 26476 S 0.6 33.5 3:55.84 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10493 radarr 30 10 684M 599M 26520 S 0.0 33.5 4:16.02 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10493 radarr 30 10 1176M 1068M 1072 S 7.8 59.7 4:43.57 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

Then I restarted Radarr, deleted half of the movies, ran housekeeping, and restarted again. Note that the size of the Radarr directory did not decrease after this procedure.

After that, I got this htop output, where each line was taken after the following actions, respectively: Radarr start, housekeeping x 3, refresh movies, housekeeping, RSS sync, housekeeping, refresh movies, housekeeping:

PID USER PRI NI VIRT RES SHR S CPU% MEM% TIME+ Command

10956 radarr 30 10 162M 91836 24404 S 0.0 5.0 0:26.91 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10956 radarr 30 10 665M 591M 26332 S 66.2 33.0 0:51.45 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10956 radarr 30 10 672M 592M 26364 S 0.0 33.1 1:09.77 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10956 radarr 30 10 672M 593M 26400 S 0.0 33.2 1:43.50 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10956 radarr 30 10 694M 607M 22944 S 0.0 33.9 2:41.65 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10956 radarr 30 10 1189M 1083M 2524 S 9.5 60.6 3:06.28 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10956 radarr 30 10 1129M 1025M 4128 S 7.2 57.3 3:37.83 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10956 radarr 30 10 1133M 1030M 5444 S 0.0 57.6 4:37.54 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

10956 radarr 30 10 1136M 1032M 4276 S 9.3 57.7 4:49.80 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

> but I don't know what resource to point the request to.

Here's a bash script: https://gist.github.com/Taloth/dee53e840f5dd7080687cf65c02e2bc1

Don't forget to update the URL and API key. Please note that commands are asynchronous: the API call will only trigger the command; it won't wait for it to finish.
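For anyone who prefers Python over bash, here is a rough equivalent. It assumes the Sonarr-derived API used by this Radarr version accepts `POST <base>/api/command` with a JSON body `{"name": "<CommandName>"}` and the API key in an `X-Api-Key` header; check that against the gist before relying on it. The base URL, key, and command name are placeholders.

```python
import json
import urllib.request

# Placeholders (assumptions): update to your own instance and API key.
BASE_URL = "http://localhost:7878"
API_KEY = "your-api-key"


def build_command_request(base_url: str, api_key: str, name: str) -> urllib.request.Request:
    """Build (but do not send) the request that queues a named command."""
    body = json.dumps({"name": name}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/command",
        data=body,
        headers={"Content-Type": "application/json", "X-Api-Key": api_key},
        method="POST",
    )


def trigger(name: str) -> int:
    """Queue a command and return the HTTP status code.

    The command only gets queued here; wait for it to finish before the next measurement.
    """
    with urllib.request.urlopen(build_command_request(BASE_URL, API_KEY, name)) as resp:
        return resp.status
```

Because the call returns as soon as the command is queued, any memory measurement should be taken after the task shows as finished in System > Tasks, not right after `trigger()` returns.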

> Multiple calls to housekeeping don't increase the memory usage further

Excellent, we'll have to look at the one-time init and caches. You'll need @galli-leo help to trigger individual housekeepers.

> I tried a separate Radarr instance with an empty database, and the problem does not happen.

Use half the items, not an empty database, though given the earlier results I don't expect it to scale.

> How is the MediaCover folder structured? Does it hold a subdir per movie?

It does on Sonarr, so probably yes. Each dir is the ID of the item in the database, an incremental integer. However, that's rather unlikely to be related.

> Also, how is 214M logs.db-wal so big?

It's the write-ahead log (WAL) for SQLite. How big is logs.db itself?

214M is too big imho, at least for Sonarr, but I don't know if Radarr logs a lot more details to System->Logs.

Run sqlite3 logs.db "PRAGMA integrity_check"; it should return 'ok'. If it's ok, make sure Radarr is stopped and run sqlite3 logs.db "pragma journal_mode=truncate; VACUUM;", then restart Radarr. The WAL file will return, but it will start at 0.
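If the sqlite3 CLI isn't installed, the same two steps can be done with Python's standard-library `sqlite3` module. A minimal sketch, assuming Python is available on the host; as with the CLI version, run it only while Radarr is stopped.

```python
import sqlite3


def check_and_compact(db_path: str) -> str:
    """PRAGMA integrity_check, then (only if it is 'ok') leave WAL mode and VACUUM.

    Returns the integrity_check output. Run this only while Radarr is stopped.
    """
    # isolation_level=None means autocommit; VACUUM cannot run inside a transaction.
    conn = sqlite3.connect(db_path, isolation_level=None)
    try:
        rows = [row[0] for row in conn.execute("PRAGMA integrity_check").fetchall()]
        result = "\n".join(rows)
        if result != "ok":
            return result  # report problems; don't touch a damaged database
        # Leaving WAL mode checkpoints the -wal file into the main DB and deletes it.
        conn.execute("PRAGMA journal_mode=truncate").fetchall()
        conn.execute("VACUUM")  # rebuild the file, dropping free pages
        return result
    finally:
        conn.close()
```

Only WAL mode is persistent in the database file, so a fresh connection will afterwards report the default `delete` mode until Radarr switches the file back to WAL on startup, which is why the -wal file reappears starting at 0.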

If logs.db is that big too, then stop Radarr, move logs.db* (all 3 files) somewhere else, start Radarr and run the memory test again. (Again unlikely, but I'd rather not skip over anything at this point.)

_I'll reply to your second post separately_

@galli-leo The increase from 600M RSS to 1060M after rss-sync+housekeeping is peculiar. Obviously the code has been jitted already and relevant caches filled due to earlier calls. So what's swallowing 400M? Can you get jacaru a version with housekeeper filter?

Yes, the logs.db file is just as big. I removed the log files and tested again; these lines show the state after: start, housekeeping, RSS sync, housekeeping, movie refresh, housekeeping x2, movie refresh, housekeeping, RSS sync, housekeeping, RSS sync, housekeeping:

PID USER PRI NI VIRT RES SHR S CPU% MEM% TIME+ Command

12374 radarr 30 10 167M 95208 24676 S 0.0 5.2 0:43.79 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 168M 96848 24736 S 0.0 5.3 0:52.65 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 174M 99M 24756 S 0.0 5.5 1:28.08 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 177M 102M 24868 S 0.0 5.7 1:37.74 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 186M 106M 26396 S 0.0 6.0 3:16.21 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 190M 109M 26504 S 0.0 6.1 3:28.34 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 192M 112M 26504 S 0.0 6.3 3:41.94 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 191M 112M 26564 S 86.4 6.3 5:28.12 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 191M 112M 26564 S 0.0 6.3 5:34.98 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 193M 113M 26500 S 0.0 6.4 6:12.87 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 193M 113M 26500 S 0.7 6.4 6:15.95 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 192M 113M 26532 S 0.0 6.3 6:51.83 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

12374 radarr 30 10 192M 113M 26564 S 0.0 6.3 6:56.52 /usr/bin/mono /opt/share/Radarr/Radarr.exe -nobrowser

So the problem is gone after removing the log files, duh.

Zip up the original logs.db* files and get them to galli; that might allow him to reproduce the issue consistently and find out where in SQLite the memory is kept alive.

Also, with the original logs db check /proc/(id)/maps to see if it actually is memory mapped.
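Checking `/proc/<pid>/maps` by hand is easy to get wrong in a long listing, so here is a small filter for it. A minimal sketch, assuming Linux; it keeps only mappings whose pathname contains a given string (e.g. `logs.db`), which is enough to tell whether the database file itself or only its -shm file is memory-mapped.

```python
import os
from typing import List


def mapped_files(maps_text: str, needle: str) -> List[str]:
    """Return lines of /proc/<pid>/maps whose pathname contains `needle`."""
    hits = []
    for line in maps_text.splitlines():
        # Columns: address perms offset dev inode [pathname]; maxsplit keeps paths with spaces intact.
        fields = line.split(None, 5)
        if len(fields) == 6 and needle in fields[5]:
            hits.append(line)
    return hits


def mapped_db_files(pid: int, needle: str = "logs.db") -> List[str]:
    """Read the live mapping table of a process (Linux only) and filter it."""
    with open(f"/proc/{pid}/maps") as fh:
        return mapped_files(fh.read(), needle)


if __name__ == "__main__" and os.path.exists("/proc/self/maps"):
    # Replace os.getpid() with Radarr's mono PID (e.g. 10493 in the htop output above).
    print(mapped_db_files(os.getpid()))
```

Anonymous mappings (heap, no pathname) are skipped, so an empty result means the file is not mapped and any extra memory is being held elsewhere, for example inside libsqlite's own cache.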

My guess is that the VACUUM during housekeeping keeps the connection open.

@jacaru Yup if you could send over the log it should be easy to replicate.

@Taloth Do you think the issue could be the log statements surrounding the Vacuum command?:

_logger.Info("Vacuuming {0} database", _databaseName);

_datamapperFactory().ExecuteNonQuery("Vacuum;");

_logger.Info("{0} database compressed", _databaseName);

Not sure what else would keep the connection open.

@galli-leo Afaik the connection is always kept open. I kinda ran into that when tweaking the Backup logic last year. My concern is why it's using memory in the first place, hence the question about the memory map. In my Sonarr instance only .db-shm is memory mapped, which means the db data is probably loaded (and kept in memory) by libsqlite itself. I believe System.Data.Sqlite has some methods of looking at sqlite's memory usage.

Anyway, if you check the logs he posted you'll see an abundance of recurring warnings, that explains the db size. There are ways to prevent that Warn from being logged repeatedly, but it depends on how the ParserService is called.

I'm thinking two issues:

- That Warn logged every minute, causing too many log events.

- db data is likely being kept in memory. we need to find out if that's done by libsqlite and why.

@Taloth Hmm that makes a lot more sense than my guess. I really should stop guessing at things I have no idea about lol.

Regarding the Warn (I haven't had the time to look at the actual logs; I'm guessing the parser is having problems with a download): this is probably why this issue has come up more for Radarr users. We do the downloaded movies scan every 15 seconds instead of every minute like you. This would probably lead to a 4x blowup compared to Sonarr in similar situations. Pair that with users having many more movies than series in their libraries, and info log entries (or warnings on poster downloads) could blow up the log DB quite a bit after the daily refresh.
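The 4x figure follows directly from the scan intervals. A quick worked check, assuming each scan logs exactly one warning per file it can't import:

```python
SECONDS_PER_DAY = 24 * 60 * 60


def warnings_per_day(scan_interval_s: int, stuck_files: int = 1) -> int:
    """Warn entries per day if every scan logs one warning per unimportable file (assumption)."""
    return SECONDS_PER_DAY // scan_interval_s * stuck_files


radarr = warnings_per_day(15)  # 15 s downloaded-movies scan
sonarr = warnings_per_day(60)  # 1 min scan
print(radarr, sonarr, radarr // sonarr)  # 5760 1440 4
```

So a single stuck download is worth about 5,760 log rows a day on Radarr versus 1,440 on Sonarr, and that multiplies by the number of stuck files.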

Regarding how to fix this: maybe we should only keep one entry per log message? I.e. do an insert ... on duplicate update ... and add an occurrence count as well as a last-occurrence timestamp?

sample query: insert into log (message) Values ('My Error') on duplicate key update last_occurance=now, occurances=occurances+1
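The sample query above is MySQL syntax (`ON DUPLICATE KEY UPDATE`); SQLite, which Radarr uses, spells the same idea `INSERT ... ON CONFLICT(...) DO UPDATE` (available since SQLite 3.24). A minimal sketch of the proposal using Python's `sqlite3`; the table and column names are hypothetical, not Radarr's actual Logs schema.

```python
import sqlite3

# Hypothetical schema for the "one row per distinct message" idea.
SCHEMA = """
CREATE TABLE IF NOT EXISTS Logs (
    Message        TEXT PRIMARY KEY,
    Occurrences    INTEGER NOT NULL DEFAULT 1,
    LastOccurrence TEXT NOT NULL
)
"""

# SQLite UPSERT: insert a new message, or bump the counter on an existing one.
UPSERT = """
INSERT INTO Logs (Message, LastOccurrence) VALUES (?, datetime('now'))
ON CONFLICT(Message) DO UPDATE SET
    Occurrences = Occurrences + 1,
    LastOccurrence = excluded.LastOccurrence
"""


def log_message(conn: sqlite3.Connection, message: str) -> None:
    """Record one occurrence of `message`."""
    with conn:  # commits on success, rolls back on error
        conn.execute(UPSERT, (message,))
```

This caps the table at one row per distinct message, at the cost Taloth points out below: it stops being a chronological log.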

Regarding your second point, no idea on how to fix that, will check it and the logs from jacaru out once I get home.

> Regarding how to fix this: maybe we should only keep one entry per log message? I.e. do an insert ... on duplicate update ... and add an occurrence count as well as a last-occurrence timestamp?

It's a log, not a 'pending issues' list, so I don't think so. What's the problem here is that unidentifiable imports aren't visible anywhere (For Sonarr v3 we plan to add it to Activity), meaning the log is the user's only method of seeing something's wrong. Fix that and you won't need a Warn level message there.

The reason I asked for a repro on the Mac, is that on the Mac you can use Instruments to profile the unmanaged memory and it would be very simple to pinpoint the culprit there.

@migueldeicaza That's a good point. You could try using the huge log DB from above to repro it, though I doubt that Instruments gives anything useful without debugging symbols.

Edit: Just noticed you're working for Microsoft :sweat_smile: Last time I used Instruments for something, I had a really hard time getting the debug symbols to build and actually load. Is there something special you have to do to get debugging symbols for Mono apps?

Hi guys, I read this topic after I saw Radarr consuming 1054MB while Sonarr was consuming 299MB.

[Linux Server docker image]

Version: 0.2.0.995

Mono Version: 5.10.0.160

-------

Movies: 516

Downloaded: 62

Monitored: 60

-------

I don't know if I'm hitting the same issue or if the library is just big.

(The 516 is this big because of the movie Lists configuration option, I think, but few of them are monitored, and I think most of them don't need to be monitored anymore.)

I will try to unmonitor some movies and disable the "Lists" option to reduce the movie count.

Anyway, if someone needs anything I could try to help.

Edit---------

I've deleted all my movies, and yes, I think the problem was the movie count. With no movies, usage dropped to 159MB. I will add my movies again and report how much it uses.

Edit2--------

Now with 61 movies and 0 monitored:

Before a library update, it uses around 299MB.

After a library update, it uses around 644MB.

RAM usage roughly doubles during the library update and stays at that level even after the update is done.

Any news about this issue? I can't even import my library after a fresh install: when bulk importing more than 50 movies at once, there's a 100% chance of the OOM killer killing something (most times it kills Radarr). The machine has just 1GB RAM and 1GB swap. Sonarr is working fine with 100+ series and 2000+ episodes.

Any update on this? Radarr was eating 2GB of RAM on my CentOS 7 install with latest kernel 4.x and latest patches to python & mono

Any update? I also cannot get through a bulk import.

Is this solved? My Radarr was using 12GB, running the latest version on Ubuntu 18.04. After restarting the service it dropped to almost 0, then slowly crept up 4-6GB a day until the swap file was full.

Well, it's been over a year and there's still no fix. So here is my workaround for this issue: I limited the memory my systemd service uses, like so:

[Service]

CPUShares=512

MemoryLimit=1G

If you're running this inside a docker image, limit the container memory with the --memory=1G flag for example when you run your container.

Radarr seems to be fine when doing this: even though it eats all the memory you allocate to it (obviously due to the memory leak), it runs fine.

One thing to try out is to delete your log db file and/or post here how many entries you have / how large it is. It may be the culprit.

This bug is kind of easy to reproduce, so I'm not sure the devs really need more information. It's just a matter of bulk importing a small collection (100 movies is more than enough). It also happens on a fresh install of Radarr and seems completely unrelated to the total DB size: if you keep restarting Radarr every 50-100 movies imported, you can build a 5000+ movie collection without problems. If you bulk import 200 movies at once, Radarr will use 3GB+ of RAM even if you start from scratch with a clean DB. It also happens when importing movies from lists or adding them manually.

Limiting the resource usage at the container/systemd level isn't a proper fix either, as it will simply kill Radarr when it reaches the limit; adding a cron job to kill Radarr every X hours would be just as "good".

On Wed, 4 Jul 2018 at 18:50, Leonardo Galli notifications@github.com wrote:

> One thing to try out is to delete your log db file and/or post here how many entries you have / how large it is. It may be the culprit.


--

Daniel Ribeiro

I can confirm that this leak is also happening in Sonarr. I'm running Sonarr and Radarr through Docker on a Linux machine, so it's probably a Mono problem. Does anyone have this issue on a Windows box?

Sonarr is fine for me; only Radarr uses an abnormal amount of RAM. Linux, not using Docker, same Mono binaries for both Sonarr and Radarr.

On Fri, 6 Jul 2018 at 01:59, Daniel M. notifications@github.com wrote:

> I can confirm that this leak is also happening in Sonarr. I'm running Sonarr and Radarr through Docker on a Linux machine, so it's probably a Mono problem. Does anyone have this issue on a Windows box?



This issue has been automatically marked as stale because it has not had recent activity. Please verify that this is still an issue with the latest version of Radarr and report back. Otherwise this issue will be closed.

So I have tried reproducing it again under a profiler, but failed. Can anyone confirm this is happening on vanilla macOS (so no Docker)? Otherwise I will have to fire up an Ubuntu machine and try profiling there.

@bskrtich @drwyrm @yanxunhu What happens when you delete your log db file?

> This bug is kind of easy to reproduce, so I'm not sure the devs really need more information. It's just a matter of bulk importing a small collection (100 movies is more than enough). It also happens on a fresh install of Radarr and seems completely unrelated to the total DB size: if you keep restarting Radarr every 50-100 movies imported, you can build a 5000+ movie collection without problems. If you bulk import 200 movies at once, Radarr will use 3GB+ of RAM even if you start from scratch with a clean DB. It also happens when importing movies from lists or adding them manually.

It isn't as simple as it seems, since neither Windows nor macOS exhibits this behaviour, so I will have to install Ubuntu on an old machine and create a report there.

I have removed some unused housekeeping tasks in the latest nightly. Could you guys try the latest nightly and report back on memory usage (before and after log deletion as well)?

Still happening; it made Radarr crash for me today.

This is happening for me on Debian. It looks like memory isn't freed after running the 'Refresh Movie' task. I don't have the same issue with the other tasks, only 'Refresh Movie' causes ram usage to skyrocket and not return to its original level.

You won't really notice this unless you have a significant number of films, as memory usage increases with the number of films that need scanning. Memory usage just keeps increasing and doesn't stop for the duration of that task.

@MrTopCat Do you have the latest nightly? How large is your logs.db? Have you tried deleting your logs.db?

I was running into issues where Radarr was using all the memory on my Raspberry Pi 3 running Arch Linux. Deleting the logs.db file resolved the issue for me, at least for now.

I have the same problem running on Ubuntu 16.04 LTS on an ODROID-C2. When started, Radarr only uses around 100MB, but after half a day it uses 800MB and keeps growing until I run out of memory, which is only 2GB on the ODROID-C2.

I switched from CP to Radarr and I think it's very good besides this memory problem. So if this could be fixed, that would be very nice.

@macbeth322 Have you tried switching to nightly?

@Nervwrecker Have you tried nightly and deleting the logs.db?

After reports from multiple users who had success deleting their logs.db (and related) files, I suggest everyone try this as a temporary fix.

For the long run, I have increased the DownloadedMoviesScan interval, so that log messages shouldn't occur as frequently anymore. Additionally, I implemented a mechanism that prevents printing a warn message for a file every time the file is scanned (i.e. every 30 seconds). This should greatly help keep the logs.db file small.
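The "don't warn again for the same file" mechanism can be pictured as a set of already-reported paths. This is a minimal Python sketch of the idea under that assumption, not the actual Radarr change; the logger name and the `forget()` hook are illustrative.

```python
import logging
from typing import Set

logger = logging.getLogger("DownloadedMoviesScan")  # hypothetical logger name


class WarnOnce:
    """Log a warning for a given file only the first time it is seen (a sketch, not Radarr's code)."""

    def __init__(self) -> None:
        self._warned: Set[str] = set()

    def warn(self, path: str, message: str) -> bool:
        """Return True if the warning was emitted, False if it was suppressed."""
        if path in self._warned:
            logger.debug("%s (repeat suppressed): %s", message, path)
            return False
        self._warned.add(path)
        logger.warning("%s: %s", message, path)
        return True

    def forget(self, path: str) -> None:
        """Call when the file is imported or removed, so a new problem is reported again."""
        self._warned.discard(path)
```

Repeats are downgraded to debug rather than dropped, so the information is still there at a lower log level, and calling `forget()` for files that leave the download folder keeps the set from growing without bound.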

The underlying issue is still not fixed though :(

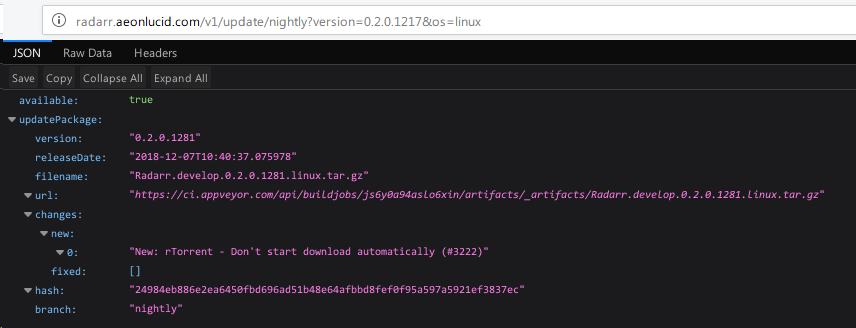

So I might have found out where the memory leak is. I have fixed the issue on a separate branch. Could anyone with this issue try out this build:

And tell me if anything has improved?

Note: This was quickly hacked together, so it might have some errors. Probably not good to use in production, so make a backup beforehand.

+1 for this. Ubuntu 18.04, first install of Radarr today, and everything seems to be working great except for the large amount of memory used. A fresh reboot shows less than 1GB total system usage, which then goes up to 3.5GB within an hour or two.

Excited to see if the memory leak fix works. I've seen my processes get up to 10G resident ;)

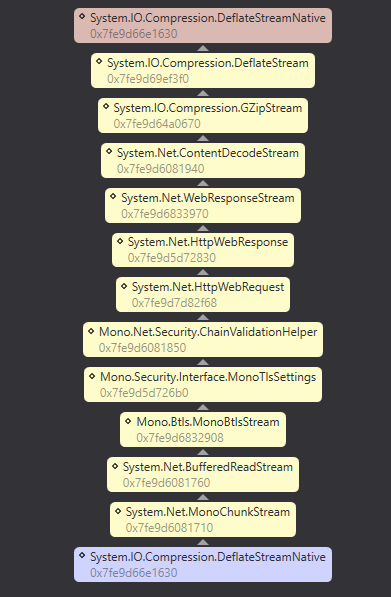

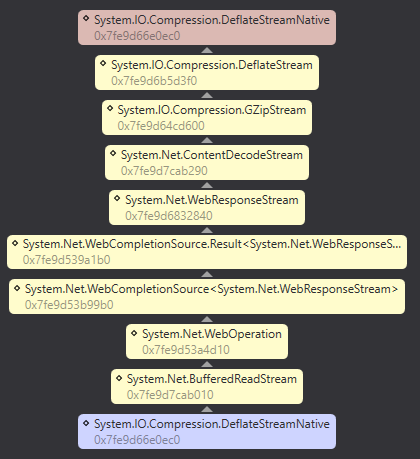

So I am sharing my findings here, in hope of someone able to help me figure out something about this.

@everyone in this thread, if you have some experience with finding memory leaks with mono or with valgrind, please let me know / help me out with the issues described below!

@Taloth I found a definite leak of resources with how the DataMappers are handled. They are IDisposables, but aren't actually disposed. I fixed that on a separate branch, you can view the changes here: https://github.com/Radarr/Radarr/compare/fix/memory-leak#diff-1f6555e9a3ca5f1b5f2d6610480e1079
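The DataMapper fix itself is C# (disposing an IDisposable, typically with `using`). For readers following along in Python, the same discipline with the standard `sqlite3` module looks like the sketch below; it is an analogy, not Radarr's code. It also shows a common trap: a Python sqlite3 connection used directly as a context manager commits or rolls back but does not close.

```python
import sqlite3
from contextlib import closing
from typing import Any, List, Sequence, Tuple


def run_query(db_path: str, sql: str, params: Sequence[Any] = ()) -> List[Tuple[Any, ...]]:
    """Open a connection, run one query, and always close the connection again."""
    # closing() calls conn.close() on exit, even if execute() raises. By contrast,
    # "with sqlite3.connect(...) as conn:" only commits or rolls back; it does not close.
    with closing(sqlite3.connect(db_path)) as conn:
        return conn.execute(sql, params).fetchall()
```

Each undisposed connection keeps its own SQLite page cache alive, so this kind of leak grows with every mapper that is created and never released.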

On this branch, I also tried out some other things that could have helped: updating System.Data.SQLite, not setting the SQLite cache size (you set it to -10M, but according to the SQLite docs this seems to mean 10M * 1024 bytes, i.e. around 10GB. Is that intended?), etc.
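The SQLite docs define `PRAGMA cache_size = N` as N pages when N is positive, and as roughly abs(N) * 1024 bytes when N is negative. A small sketch of that rule, assuming "-10M" means -(10 * 1024 * 1024); if it means -10,000,000 instead, the result is about 9.5 GiB, still nowhere near 10 MB. The -10240 value in the test is a hypothetical "10 MiB" setting for comparison.

```python
import sqlite3
from contextlib import closing


def cache_limit_bytes(cache_size: int, page_size: int = 4096) -> int:
    """Approximate page-cache limit implied by PRAGMA cache_size.

    Positive N: N pages. Negative N: about abs(N) * 1024 bytes, regardless of page size.
    """
    if cache_size >= 0:
        return cache_size * page_size
    return -cache_size * 1024


def configured_cache_size(value: int) -> int:
    """Set PRAGMA cache_size on a throwaway in-memory DB and read the value back."""
    with closing(sqlite3.connect(":memory:")) as conn:
        conn.execute(f"PRAGMA cache_size = {int(value)}")  # PRAGMAs can't take bound parameters
        return conn.execute("PRAGMA cache_size").fetchone()[0]


print(cache_limit_bytes(-10 * 1024 * 1024) / 1024 ** 3)  # 10.0 (GiB)
```

The limit is an upper bound per connection: pages are only cached as they are read, which fits memory growing with database size (e.g. a 208M logs.db) rather than jumping to 10 GB at startup.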

Unfortunately, none of these seem to have worked. I have ran a lot of Radarr instances with the mono log profiler and analyzed those results in the Xamarin profiler. You can find a "good" run here, if you want to look at it and see what you can find out: https://galli.me/output.mlpd (it's only 700MB so should load into Xamarin profiler somewhat quickly :)).

From what I can tell, while we do have a lot of allocations going to Strings for the SQL queries, they do not seem to be retained. Additionally, from the various heapshots in that profiler run, I see that 11MB are persisted. However, this does not match up with the working set, which is well in the 600MB range by that time. This means the leak is likely in unmanaged code, correct?

I tried to run Valgrind against Radarr; however, I didn't manage to find any leaks, because as soon as I make an HTTP request, the following happens:

- Owin will set the CurrentPrincipal of the current thread to null.

- This calls the following function in the stdlib: https://github.com/mono/mono/blob/ab0736fa79a31cff42abe5941deb225bb15b5461/mcs/class/corlib/System.Threading/Thread.cs#L278

- Since the new value is null, this will obviously throw an exception.

- It seems that Mono implements this by listening for a SIGSEGV and then reconstructing the stack, setting the instruction pointer to where the catch block is.

Now, this is fine in normal execution and works correctly. However, Valgrind thinks this is an error and kills the process :( Do you have any idea how to get around this? I have searched for an option to make Valgrind ignore it, but I haven't found anything. I am currently in the process of "fixing" this locally in the Mono stdlib itself, so that Radarr can at least handle an HTTP request under Valgrind. Is there a better option?

Furthermore, when valgrind kills the process, it has already detected some possible leaks, the ones especially interesting are in the sqlite library. However, this could also just be because it just killed the process? Anyways, here is the valgrind log for anyone that is interested:

--30309-- Reading syms from /home/leo/Radarr/_output_mono/Prowlin.dll.so

--30309-- Discarding syms at 0x10800450-0x108021f0 in /home/leo/Radarr/_output_mono/Prowlin.dll.so due to munmap()

--30309-- Reading syms from /home/leo/Radarr/_output_mono/Growl.CoreLibrary.dll.so

--30309-- Discarding syms at 0x10800450-0x10802f00 in /home/leo/Radarr/_output_mono/Growl.CoreLibrary.dll.so due to munmap()

--30309-- memcheck GC: 1000 nodes, 5 survivors (0.5%)

==30309== Conditional jump or move depends on uninitialised value(s)

==30309== at 0x5BFAFB: mono_icall_get_machine_name (icall.c:6741)

==30309== by 0x5D4EA7: ves_icall_System_Environment_get_MachineName (icall.c:6762)

==30309== by 0x5D4EA7: ves_icall_System_Environment_get_MachineName_raw (icall-def.h:318)

==30309== by 0x106D53F2: ???

==30309== by 0x42C7A0: mono_jit_runtime_invoke (mini-runtime.c:3189)

==30309== by 0x620F7F: do_runtime_invoke (object.c:2978)

==30309== by 0x627A93: mono_runtime_class_init_full (object.c:521)

==30309== by 0x459D93: mono_method_to_ir (method-to-ir.c:9321)

==30309== by 0x51E27F: mini_method_compile (mini.c:3488)

==30309== by 0x51FFB3: mono_jit_compile_method_inner (mini.c:4076)

==30309== by 0x42A75E: mono_jit_compile_method_with_opt (mini-runtime.c:2450)

==30309== by 0x4AC824: common_call_trampoline (mini-trampolines.c:751)

==30309== by 0x4AD1C2: mono_magic_trampoline (mini-trampolines.c:891)

==30309==

--30309-- memcheck GC: 1000 nodes, 5 survivors (0.5%)

--30309-- memcheck GC: 1000 nodes, 180 survivors (18.0%)

--30309-- memcheck GC: 1014 new table size (driftup)

--30309-- memcheck GC: 1014 nodes, 29 survivors (2.9%)

--30309-- Reading syms from /lib/x86_64-linux-gnu/libnss_files-2.23.so

--30309-- Considering /lib/x86_64-linux-gnu/libnss_files-2.23.so ..

--30309-- .. CRC mismatch (computed bbddf769 wanted cc29886c)

--30309-- Considering /usr/lib/debug/lib/x86_64-linux-gnu/libnss_files-2.23.so ..

--30309-- .. CRC is valid

--30309-- REDIR: 0x5a0d1a0 (libc.so.6:__GI_strcpy) redirected to 0x4c31110 (__GI_strcpy)

--30309-- memcheck GC: 1014 nodes, 7 survivors (0.7%)

--30309-- memcheck GC: 1014 nodes, 11 survivors (1.1%)

--30309-- memcheck GC: 1014 nodes, 7 survivors (0.7%)

--30309-- memcheck GC: 1014 nodes, 15 survivors (1.5%)

--30309-- memcheck GC: 1014 nodes, 11 survivors (1.1%)

--30309-- memcheck GC: 1014 nodes, 10 survivors (1.0%)

==30309== Thread 14 Thread Pool Wor:

==30309== Invalid read of size 8

==30309== at 0x868C118: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:203)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x0 is not stack'd, malloc'd or (recently) free'd

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A6F2: mono_sigctx_to_monoctx (mono-context.c:205)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13dc0 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A6F9: mono_sigctx_to_monoctx (mono-context.c:205)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13db8 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A715: mono_sigctx_to_monoctx (mono-context.c:206)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13dd0 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A71C: mono_sigctx_to_monoctx (mono-context.c:206)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13dc8 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A738: mono_sigctx_to_monoctx (mono-context.c:207)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13de0 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A73F: mono_sigctx_to_monoctx (mono-context.c:207)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13dd8 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A75B: mono_sigctx_to_monoctx (mono-context.c:208)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13df0 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A762: mono_sigctx_to_monoctx (mono-context.c:208)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13de8 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A77E: mono_sigctx_to_monoctx (mono-context.c:209)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13e00 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A785: mono_sigctx_to_monoctx (mono-context.c:209)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13df8 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A7A1: mono_sigctx_to_monoctx (mono-context.c:210)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13e10 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A7A8: mono_sigctx_to_monoctx (mono-context.c:210)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13e08 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A7C4: mono_sigctx_to_monoctx (mono-context.c:211)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13e20 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A7CB: mono_sigctx_to_monoctx (mono-context.c:211)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13e18 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A7E7: mono_sigctx_to_monoctx (mono-context.c:212)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)

==30309== by 0x556038F: ??? (in /lib/x86_64-linux-gnu/libpthread-2.23.so)

==30309== by 0x868C117: System_Threading_Thread_SerializePrincipal_System_Threading_Thread_System_Security_Principal_IPrincipal (Thread.cs:202)

==30309== by 0x868C870: System_Threading_Thread_set_CurrentPrincipal_System_Security_Principal_IPrincipal (Thread.cs:278)

==30309== by 0x1332D9FA: ???

==30309== Address 0x12f13e30 is on thread 14's stack

==30309==

==30309== Invalid write of size 8

==30309== at 0x70A7EE: mono_sigctx_to_monoctx (mono-context.c:212)

==30309== by 0x5123A0: mono_arch_handle_altstack_exception (exceptions-amd64.c:908)

==30309== by 0x42D483: mono_sigsegv_signal_handler_debug (mini-runtime.c:3546)