OpenMVG: How to improve matching efficiency and results?

Thank you all for your selfless work.

Following the openMVG and openMVS routine, I ran a test data set of 12 UAV images of a playground and got some results. But when zooming into the matching results (.svg files), you can see that some matches are not accurate or precise enough, and in the final 3D .ply model there are some ups and downs on the ground where it should be planar and smooth.

Should I change some parameters on the command line or in the code?

Thanks in advance!

Sincerely,

Sherman.

All 15 comments

By the way, I used the incremental SfM routine.

Which matches did you export?

Matches that are not accurate or not precise enough will be filtered by the geometric consistency test in ComputeMatches and in the SfM stage.

Ups and downs in the ground

It can be due to imperfect camera calibration. You can fly at two different heights with your UAV to improve your results.

You can also set the option -p HIGH at the ComputeFeatures stage. This extracts more features and therefore provides more matches to help the SfM and Bundle Adjustment stages.
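For a Python-driven pipeline like the one used later in this thread, the preset could be passed roughly as follows. This is a minimal sketch: the two paths are placeholders, `compute_features_cmd` is a hypothetical helper, and `-m SIFT` is an assumed describer choice. Only `-p HIGH` comes from the advice above.

```python
import os

# Placeholder locations (assumptions); adapt them to your installation.
OPENMVG_SFM_BIN = "/path/to/openMVG/bin"
matches_dir = "/path/to/output/matches"

def compute_features_cmd(bin_dir, out_dir, preset="HIGH"):
    """Build the argument list for openMVG_main_ComputeFeatures (hypothetical helper)."""
    return [
        os.path.join(bin_dir, "openMVG_main_ComputeFeatures"),
        "-i", os.path.join(out_dir, "sfm_data.json"),
        "-o", out_dir,
        "-m", "SIFT",   # assumed describer method
        "-p", preset,   # describer preset suggested above
    ]

cmd = compute_features_cmd(OPENMVG_SFM_BIN, matches_dir)
print(" ".join(cmd))
# To actually run it: import subprocess; subprocess.run(cmd, check=True)
```

Features have to be recomputed before matching for the new preset to have any effect.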

I exported the matches.f.bin file to .svg match files, so it seems these matches have already been filtered by the geometric consistency test. By the way, I also exported the other geometrically filtered matches, namely matches.e.bin and matches.h.bin. Why is the number of matches the same regardless of which geometric model I use?

you can fly at two different heights with your UAV to improve your results.

I will try it.

You can also try to provide more matches to the SfM stage by setting the option -p HIGH at the ComputeFeatures stage

I will try it as soon as possible and see where it goes.

Thank you very much.

Sincerely,

Sherman

I forgot to mention this.

- The essential and fundamental matrices are point-to-line constraints, so you can still have false positive matches after the geometric filtering if some wrong matches lie along the epipolar lines.

Why is the number of matches the same regardless of which geometric model I use?

Essential (-g e) and Fundamental (-g f) should give very similar results if the calibration is good.

Homography (-g h) should give fewer matches, since this geometric model uses a point-to-point error and works only for a planar surface. If you have the same number of matches for f/e/h, it can be due to the geometry of your scene (almost flat).
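The difference between the two kinds of constraint can be seen on a toy example. The sketch below assumes a camera that moved sideways, so that epipolar lines are image rows, and a plane whose points shift by 10 px between the views. The matrices F and H are made up for illustration and are not taken from any real dataset or from openMVG's code.

```python
import math

def apply(M, pt):
    """Multiply a 3x3 matrix by the homogeneous point (x, y, 1)."""
    p = (pt[0], pt[1], 1.0)
    return [sum(M[r][c] * p[c] for c in range(3)) for r in range(3)]

def epipolar_distance(F, x1, x2):
    """Distance from x2 to the epipolar line F * x1 (point-to-line error)."""
    a, b, c = apply(F, x1)
    return abs(a * x2[0] + b * x2[1] + c) / math.hypot(a, b)

def homography_error(H, x1, x2):
    """Distance from x2 to the transferred point H * x1 (point-to-point error)."""
    u, v, w = apply(H, x1)
    return math.hypot(u / w - x2[0], v / w - x2[1])

# Toy geometry (assumed): sideways motion, plane shifted by 10 px in x.
F = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
H = [[1.0, 0.0, -10.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

x1 = (100.0, 50.0)
right = (90.0, 50.0)   # the true correspondence
wrong = (40.0, 50.0)   # a wrong match lying on the same epipolar line

print(epipolar_distance(F, x1, right), epipolar_distance(F, x1, wrong))  # 0.0 0.0
print(homography_error(H, x1, right), homography_error(H, x1, wrong))    # 0.0 50.0
```

The wrong match has zero epipolar error, so an f or e filter cannot reject it, while the homography error of 50 px exposes it immediately.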

Thanks for your attention and patience.

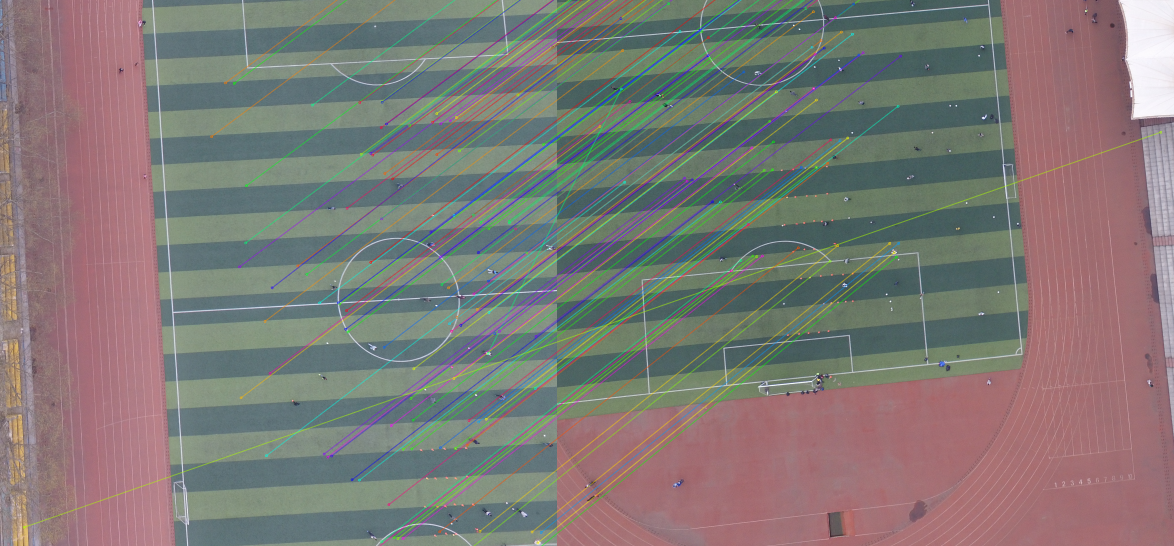

I tried to provide more matches to the SfM stage by setting the option -p HIGH at the ComputeFeatures stage, and I got more matches, I glanced at the results as follows:

It seems that we get two obvious wrong matches with the green line and the yellow one. I will zoom it later and see whether others are perfectly accurate. But what's going on with these obvious wrong two matches? Could you please tell me the reason?

Sincerely,

Sherman

Which model are you considering here: e, f or h?

As I said, if you consider e or f, you can have wrong matches along the epipolar lines.

On the other hand, the remaining false matches will often be discarded by the SfM stage thanks to triangulation.
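To give an idea of why triangulation helps, here is a toy illustration (not openMVG's code). It assumes three identical cameras placed along the x axis at x = 0, 1 and 2, looking along z, with normalized image coordinates. A point is triangulated from the first two views and then reprojected into the third. All function names are hypothetical.

```python
import math

def triangulate_rectified(obs0, obs1, baseline=1.0):
    """Triangulate from cameras at x=0 and x=baseline (rectified toy setup)."""
    disparity = obs0[0] - obs1[0]
    if disparity <= 0:
        return None  # point at infinity or behind the cameras
    z = baseline / disparity
    return (obs0[0] * z, obs0[1] * z, z)

def project(point, cam_x):
    """Project a 3D point into the camera located at (cam_x, 0, 0)."""
    x, y, z = point
    return ((x - cam_x) / z, y / z)

def reprojection_error(point, cam_x, obs):
    u, v = project(point, cam_x)
    return math.hypot(u - obs[0], v - obs[1])

# True 3D point and its observations in the three views.
X = (2.0, 1.0, 4.0)
obs0, obs1, obs2 = project(X, 0.0), project(X, 1.0), project(X, 2.0)

# A wrong match in view 1 that still lies on the epipolar line (same row).
wrong_obs1 = (0.4, obs1[1])

good_point = triangulate_rectified(obs0, obs1)
bad_point = triangulate_rectified(obs0, wrong_obs1)

err_good = reprojection_error(good_point, 2.0, obs2)
err_bad = reprojection_error(bad_point, 2.0, obs2)
print(err_good, err_bad)
```

The wrong match passes the two-view epipolar test but triangulates to the wrong depth, so its reprojection error in the third view is large and the observation can be rejected with a simple threshold.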

Thanks a lot. I used the f matches. I will give h a try and see how it works.

As I said, if you consider e or f, you can have wrong matches along the epipolar lines.

Thanks for the explanation, now I understand it.

On the other hand, the remaining false matches will often be discarded by the SfM stage thanks to triangulation.

Could you please explain this in a little more detail, and tell me where the triangulation code and model are?

Thanks in advance.

Sincerely,

Sherman

Hi,

I tested the h method. The first time, I used -r 0.6:

pMatches = subprocess.Popen([os.path.join(OPENMVG_SFM_BIN, "openMVG_main_ComputeMatches"), "-i", matches_dir+"/sfm_data.json", "-o", matches_dir, "-f", "1", "-r", "0.6", "-g", "h"])

It produces far fewer matches, and when I exported them I got 60 .svg files. Here is the homography result for the same two images:

Afterwards, I set the ratio to 0.8:

pMatches = subprocess.Popen([os.path.join(OPENMVG_SFM_BIN, "openMVG_main_ComputeMatches"), "-i", matches_dir+"/sfm_data.json", "-o", matches_dir, "-f", "1", "-r", "0.8", "-g", "h"])

There are more matches than with the 0.6 ratio, but only 47 .svg files are exported. Is this normal?
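To compare settings more systematically, the two calls above could be generated by a small helper. This is only a sketch: `compute_matches_cmd` is a hypothetical name, the paths are placeholders, and the flags are the ones already used in the commands above.

```python
import os

# Placeholder locations (assumptions); adapt them to your installation.
OPENMVG_SFM_BIN = "/path/to/openMVG/bin"
matches_dir = "/path/to/output/matches"

def compute_matches_cmd(bin_dir, out_dir, ratio, model):
    """Build the argument list for openMVG_main_ComputeMatches (hypothetical helper)."""
    return [
        os.path.join(bin_dir, "openMVG_main_ComputeMatches"),
        "-i", os.path.join(out_dir, "sfm_data.json"),
        "-o", out_dir,
        "-f", "1",          # force recomputation, as in the calls above
        "-r", str(ratio),   # ratio used above (0.6 or 0.8)
        "-g", model,        # geometric model: f, e or h
    ]

commands = [
    compute_matches_cmd(OPENMVG_SFM_BIN, matches_dir, r, g)
    for g in ("f", "e", "h")
    for r in (0.6, 0.8)
]
for cmd in commands:
    print(" ".join(cmd))
    # To execute and wait for completion:
    # import subprocess; subprocess.run(cmd, check=True)
```

Unlike a bare subprocess.Popen, subprocess.run waits for each command to finish, so later steps do not start before the match files are written.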

By the way, I know this is a stupid question: what does the ratio mean? It seems that 0.6 is more accurate but produces fewer matches.

Sincerely,

Sherman

Hi Sherman,

If I may, you should not focus so much on "getting rid of wrong matches", since there are many steps in the process.

If you have 40 lines (matches) going in the same direction, the two random wrong ones will be discarded. When matching features between images, an algorithm checks whether "three nearby features" are still relatively "nearby" in the next image, and can therefore tell whether or not a match is real.
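The "40 lines going in the same direction" idea can be illustrated with a very simple consensus filter. This is only a toy sketch of the principle and not what openMVG does internally (it robustly fits a geometric model instead). The function name and the 5-pixel tolerance are assumptions.

```python
import math
from statistics import median

def filter_by_consensus(matches, tol=5.0):
    """Keep matches whose displacement is within tol pixels of the median displacement."""
    dxs = [x2 - x1 for (x1, y1), (x2, y2) in matches]
    dys = [y2 - y1 for (x1, y1), (x2, y2) in matches]
    mdx, mdy = median(dxs), median(dys)
    return [
        m for m, dx, dy in zip(matches, dxs, dys)
        if math.hypot(dx - mdx, dy - mdy) <= tol
    ]

# 40 consistent matches, all displaced by (12, -3), plus 2 random wrong ones.
good = [((10.0 * i, 5.0 * i), (10.0 * i + 12.0, 5.0 * i - 3.0)) for i in range(40)]
bad = [((50.0, 60.0), (300.0, 10.0)), ((200.0, 20.0), (15.0, 180.0))]

kept = filter_by_consensus(good + bad)
print(len(kept))  # 40
```

The two outliers disagree with the dominant displacement and are dropped, while all 40 consistent matches survive.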

In general, when you push the accuracy of a process, you tend to get fewer matches. A match that is only slightly offset may be treated as an error if the "parameters" are too demanding.
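The ratio asked about above is a good example of this trade-off. Assuming -r is the usual nearest-neighbor distance ratio (Lowe's ratio test), a match is kept only if its best descriptor distance is clearly smaller than the second-best one. The sketch below uses made-up distances, and whether openMVG compares plain or squared distances is not covered here.

```python
def ratio_test(candidates, ratio):
    """Return indices of features whose best match passes the distance ratio test.

    candidates: list of (best_distance, second_best_distance) per feature.
    """
    return [i for i, (d1, d2) in enumerate(candidates) if d1 < ratio * d2]

# Made-up descriptor distances for four features.
candidates = [(10.0, 100.0), (30.0, 45.0), (35.0, 40.0), (20.0, 21.0)]

strict = ratio_test(candidates, 0.6)
loose = ratio_test(candidates, 0.8)
print(strict)  # [0]
print(loose)   # [0, 1]
```

A lower ratio keeps only very distinctive matches, which gives fewer but more reliable matches. A higher ratio lets more ambiguous matches through.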

Your model has several areas with few features, so it will greatly help to have different views. You can get a very nice model with only 12 pictures, but that is not a general rule. Try to have a set of images that covers the entire place you want to model, from different altitudes as already mentioned, or simply take more photos. You can always input fewer pictures if you overdid the number taken.

Also, if you have people on the field, it can affect the model. When I model a scene near a road, I get tons of "ups and downs" caused by the cars. They do not appear in the texture, since they are moving and several pictures cover each area, but the 3D surface of the road is not flat in this case. I don't know your dataset, but it is worth a check.

Hi MesHeritage,

If you have 40 lines (matches) going in the same direction, the two random wrong ones will be discarded. When matching features between images, an algorithm checks whether "three nearby features" are still relatively "nearby" in the next image, and can therefore tell whether or not a match is real.

Thanks for your detailed explanation, it helps a lot. I will go through the whole pipeline and check the influence of the different parameters. I will show my 3D model once it is done.

Try to have a set of images that covers the entire place you want to model, from different altitudes as already mentioned, or simply take more photos.

These 12 images cover the whole playground, and I was focusing on each two-view match set. Since I have been told that a few random wrong matches will be discarded in the following steps, I will reconstruct the mesh and 3D model and see how it works. As for different altitudes, that is good advice, and I will try it. Thanks so much for your attention and patience.

Also if you have people on the field, it can affect the model.

Yes, I have encountered this problem before. Whatever the moving object is (walking people, moving cars, etc.), it is bad for reconstruction, because the same object appears in different places in different views and is probably used by SfM rather than discarded. Therefore, areas that are supposed to be flat turn out to be "up and down". I still don't know what to do about this problem. Anyway, I will run a test with my playground dataset.

Sincerely,

Sherman

Hi,

I ran a test with my playground dataset, and the openMVS result is as follows:

- Dense point cloud

- Mesh

- Textured mesh

There seem to be lots of problems here. We get a 3D model, but areas that are supposed to be flat turn out to be "up and down", and I don't know how to improve it. Any suggestions are welcome.

Sincerely,

Sherman

By the way, I downloaded the sample_openMVS executables and tried refinemesh.exe, but it seemed to take forever, so I skipped this step.

We will not deal with OpenMVS results here.

BTW, you can still use refine mesh (just use the option resolution-level with the parameter 1, 2 or 4).
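A sketch of how that option might be passed from Python. The executable path and the scene file name are placeholders, `refine_mesh_cmd` is a hypothetical helper, and the exact arguments may differ between OpenMVS versions, so check the tool's help output first.

```python
def refine_mesh_cmd(exe, scene, resolution_level=2):
    """Build the argument list for the OpenMVS RefineMesh tool (hypothetical helper)."""
    return [exe, scene, "--resolution-level", str(resolution_level)]

# Placeholder executable and scene file (assumptions).
cmd = refine_mesh_cmd("/path/to/openMVS/RefineMesh", "scene_dense_mesh.mvs", 2)
print(" ".join(cmd))
# To execute: import subprocess; subprocess.run(cmd, check=True)
```

As far as I understand the option, a higher level makes the tool work on more strongly downscaled images, which should shorten the run at the cost of detail.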

Moreover, please note that your object does not have a lot of texture, so if you want more accuracy you need to fly at different heights and closer to the ground in order to get better ground pixel coverage.

OK, thank you a lot.

Hi @MesHeritage,

When matching features between images, an algorithm checks whether "three nearby features" are still relatively "nearby" in the next image, and can therefore tell whether or not a match is real.

Could you please point me to a reference paper about this?

Thanks very much.

Sincerely,

Sherman
