Openj9: Incorrect Array Size Offset for Inline Allocated Zero Sized Arrays (x86)

Background

In certain Garbage Collection policies, like Balanced GC for instance, there exist the notion of contiguous and discontiguous arrays. Typically 'large' arrays are allocated discontiguously, while small arrays are allocated contiguously[1], except in one case---a zero sized array, which is also allocated discontiguously.

The object structure of the two types of arrays can be seen below:

https://github.com/eclipse/openj9/blob/5f4a9c9170fcc15d73d09eaa978e31f50210b64b/runtime/oti/j9nonbuilder.h#L2888-L2891

Issue

Typically, we do not inline allocate discontiguous arrays, deferring to the VM. The exception here is for variable size arrays which evaluate to 0 at runtime. We check for this case and modify the size of the array accordingly.

However, when initializing the header, we seem to assume a contiguous layout and do not check for zero size arrays. This is seen in the following sequence which calculates the array offset in which to write in the size of the array:

https://github.com/eclipse/openj9/blob/5f4a9c9170fcc15d73d09eaa978e31f50210b64b/runtime/compiler/x/codegen/J9TreeEvaluator.cpp#L6903

Based on what we can observe from the object layouts above, it seems that for zero size arrays, we write the size to the mustBeZero field. Given that the size is 0, this shouldn't affect the mustBeZero field. However, since we don't actually write to size, if the field contains garbage values, we might encounter a few issues.

This issue is meant to understand if this situation can pose some problems, and if so, work towards a fix.

[1] = https://blog.openj9.org/2019/05/01/double-map-arraylets/

All 13 comments

In testing a potential fix for this issue internally, I ran into a few failures. I'm investigating these failures and will follow up once I learn more.

Managed to correct the failures mentioned above.

I think it's a good time to request some feedback. My primary concern stems from the fact that a fix requires the addition of 5 new instructions to the inline allocation sequence.

To highlight these changes, consider first, the following sequence in genHeapAlloc2

The sequence above is responsible for checking (at runtime) whether the given array is 0 sized and if so, adjust the object size accordingly. Effectively, it checks for whether the layout of the array is contiguous or discontiguous. The purpose of our fix is to write size into its correct offset in the array header. This offset varies depending on the layout of the array (discontiguous or contiguous). Thus, we can piggyback off the above sequence for our purposes

if (TR::Compiler->om.usesDiscontiguousArraylets()) {

generateRegImmInstruction(MOV4RegImm4, node, sizeOffsetReg, 0, cg);

// CF = 0 => contiguous array; CF = 1 => zero size discontiguous array

generateRegImmInstruction(CMP4RegImm4, node, segmentReg, 1, cg);

generateRegImmInstruction(ADC4RegImm4, node, sizeOffsetReg, 0, cg);

// segmentReg also needs to know what kind of array we're dealing with

generateRegRegInstruction(ADD4RegReg, node, segmentReg, sizeOffsetReg, cg);

// After SHL:

// If Contiguous: sizeOffsetReg = 0

// If Discontiguous: sizeOffsetReg = 4

//

// Final Value:

// If Contiguous: sizeOffset = sizeOffsetOfContiguousArray + 0

// If Discontiguous: sizeOffset = sizeOffsetOfContiguousArray + 4

// i.e. sizeOffsetReg = sizeOffsetReg + sizeOffsetOfContiguousArray

generateRegImmInstruction(SHL4RegImm1, node, sizeOffsetReg, 2, cg);

TR_J9VMBase *fej9 = (TR_J9VMBase *)(cg->fe());

int32_t arraySizeOffset = fej9->getOffsetOfContiguousArraySizeField();

generateRegImmInstruction(ADD4RegImm4, node, sizeOffsetReg, arraySizeOffset, cg);

}

This ensures that we have the correct offset for size at the cost of 4 additional instructions. However, this isn't all we need to do. If the array is discontiguous, we must also zero out the mustBeZero field.

If we observe the array layouts here

we note that mustBeZero in the discontiguous case, is at the same offset as size in the contiguous case (sizeOffsetOfContiguousArray). So we can solve this issue by adding an instruction to write size into the sizeOffsetOfContiguousArray field. Why does this work? Consider the only two cases possible:

- Discontiguous - This must mean it is a zero size array, so we are writing size (size = 0) to

mustBeZero, which maintains validity. - Contiguous - As mentioned,

mustBeZerois at the same offset assizeOffsetOfContiguousArray. Therefore, we are writingsizeinto it's correct field. This acts as a duplicate write to thesizefield, but it maintains our functional correctness.

This results in the addition of 1 extra instruction bringing the total up to 5 which is rather expensive for every inline array allocation.

As mentioned earlier, I am wondering if the potential benefits outweigh the associated costs. Additionally, are there better ways to do this?

Additionally, are there better ways to do this?

Took a look at the way Power does this and I think it revealed an interesting way forward. The sequence for arrays includes a check for zero size and a _branch_ to handle the zero and non-zero cases.

cmplwi CCR_0033, GPR_0016, 0

bne CCR_0033, Label L0048

...

...

stw [GPR_0037, 4], GPR_0016 <-- mustBeZero

stw [GPR_0037, 8], GPR_0016 <-- size

...

...

Label L0048: (non zero size case)

...

...

stw [GPR_0037, 4], GPR_0016 <-- size

A potential downside would be code duplication and a slight increase in memory footprint but performance wise, we execute one instruction extra / take one branch extra per inline array allocation. This is an improvement on the scheme I discussed in the previous comment which added 5 extra instructions per inline array allocation.

@PushkarBettadpur @fjeremic Will this be ready for the 0.22 code split currently scheduled for Aug 9? I'm trying to understand how much code needs to be merged before the code split.

This will not be ready for 0.22. There is technically no functional problem with x86 at the moment. But if we do want to introduce another field in the header we need to fix this.

Moving it out to 0.23 then

@fjeremic do we need to push this out again?

Yeah we do. @VermaSh has been picking this item up in the background. Hopefully we can have an update in the next few weeks once he ramps up. We are committed to getting this fixed.

Hi all, I found workable solution that solves the problem. It adds 2 instructions to the critical path of array allocations, and 3 instructions in case of zero sized arrays. The 2 added instructions are a compare and a jump, and the third is a store to properly initialize the size field in case of zero sized array.

This is x86 assembly before the change:

############# Before change ############

…

rep stosb # SAME 1

mov rax, rdx # SAME 2

mov dword ptr [rax], 0x0010b300 # SAME 3

mov dword ptr [rax+0x4], r12d # SAME 4

Label L0049:

…

And this is the x86 assembly after the change (Change labelled as # EXTRA), where EXTRA1/2 are the ones that would always be executed and # EXTRA 3 only executed in case of zero sized arrays:

############# After change #############

…

rep stosb # SAME 1

mov rax, rdx # SAME 2

mov dword ptr [rax], 0x0010b300 # SAME 3

cmp r12d, 0x00000000 # EXTRA 1

jne Label L0080 # EXTRA 2

mov dword ptr [rax+0x8], r12d # EXTRA 3* : Executed only if is zero sized array

Label L0080:

mov dword ptr [rax+0x4], r12d # SAME 4

Label L0049:

…

I tested and it does fix the problem and the code behaves as expected. There are probably other solutions but this seems to be the least intrusive. For instance the PPC equivalent there’ seems to have a little bit of code duplication; we could go towards that path but I thought this would be preferred? Also, this code branches in case we don’t have a zero sized array. If we think about the proportion of zero sized arrays in comparison to all array sizes, it only comprises of a minority 10% and some workloads not going over 1%. If we take that into consideration it would make sense to only branch out of line whenever we encounter zero sized arrays, and fall through otherwise. However, here since everything is very close and we “jump” one instruction, we shouldn’t lose locality, and the results below might prove that.

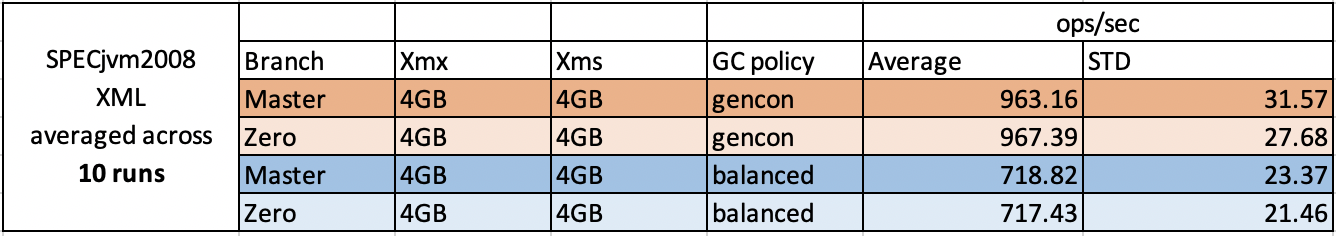

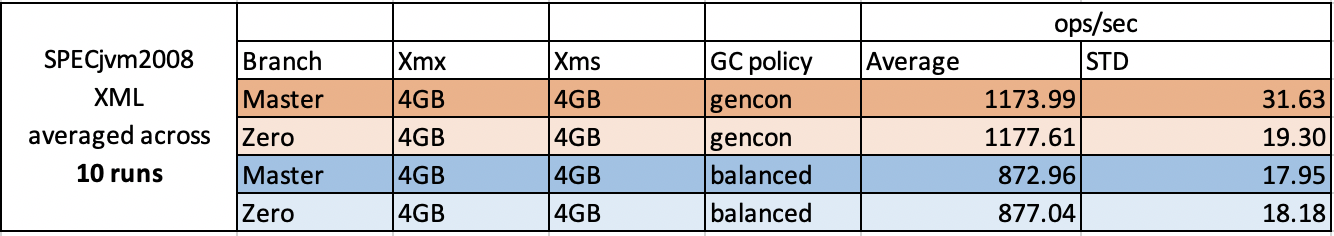

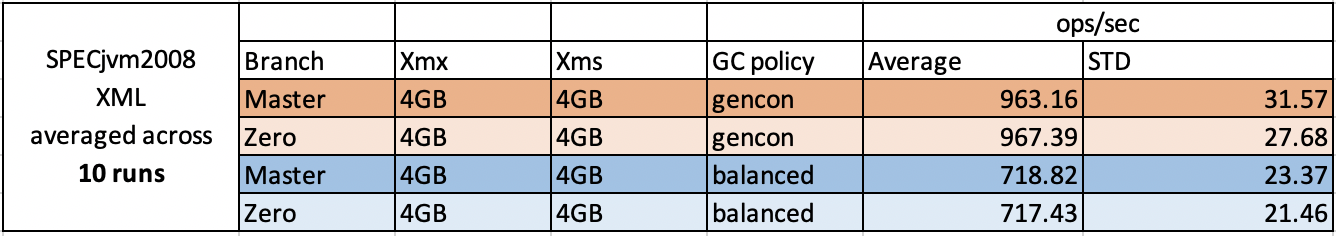

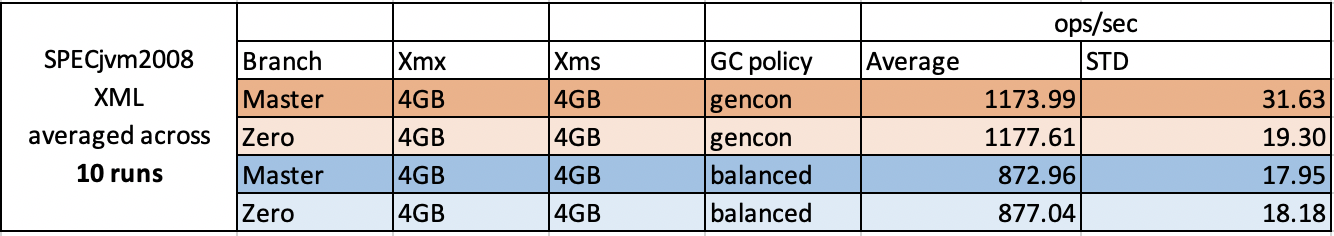

I’ve run a few benchmarks to check if there was any regression with the above solution and here are they. I’ve chosen workloads that has different proportions of zero sized arrays. Since the fix affects all GC policies, I tested against the most popular GC: gencon and balanced. I also color coded to better visualize the results, brown shades makes reference to gencon and blue shades makes reference to balanced. Master branch (dark shade) is a build WITHOUT the change, while Zero branch (light shade) contains the fix above.

This is the percentage of zero sized arrays (of all array sizes) for each of the workloads:

- Daytrader: ~5%

- SPECjbb2015: ~11%

- SPECjvm2008 XML: ~19%

Machine1: 24 CPUs, 58GB RAM

SPECjvm2008

SPECjbb2015

Difference here mean

Max jOps: mean difference: 281, STD: ~420

Critical jOps: mean difference: -108, STD: ~140

Machine2: 32 CPUs 128GB RAM

SPECjvm2008

Machine3: 28 CPUs, 98GB RAM

Daytrader

Note: Each of the Master + gencon Daytrader run corresponds to independent runs.

Given the results above, the fix doesn’t seem to have an impact on throughput and performance. Let me know what you think and if everyone agrees I’ll go ahead and open the PR.

SPECjbb2015 with balanced GC is running as we speak and will post the results here as soon as it becomes available.

@fjeremic @andrewcraik @VermaSh

Results look good. I would proceed with a PR and we can close this one off. Thanks so much for tackling this @bragaigor. It is very much appreciated and I'm impressed with how quickly you've picked up some JIT skills to get this done.

Great, PR is open! My pleasure and thanks @fjeremic and @VermaSh for all the help!

For completeness here are the results for SPECjbb2015 with balanced GC policy, which also shows no impact in performance:

Machine4: 48CPUs 256GB RAM

SPECjbb2015

Max Jops, mean difference: -60, STD: ~450

Critical Jops, mean difference: 65, STD: ~260

Re-opening since https://github.com/eclipse/openj9/pull/10946 is reverted.

Most helpful comment

Hi all, I found workable solution that solves the problem. It adds 2 instructions to the critical path of array allocations, and 3 instructions in case of zero sized arrays. The 2 added instructions are a compare and a jump, and the third is a store to properly initialize the size field in case of zero sized array.

This is x86 assembly before the change:

And this is the x86 assembly after the change (Change labelled as

# EXTRA), whereEXTRA1/2are the ones that would always be executed and# EXTRA 3only executed in case of zero sized arrays:I tested and it does fix the problem and the code behaves as expected. There are probably other solutions but this seems to be the least intrusive. For instance the PPC equivalent there’ seems to have a little bit of code duplication; we could go towards that path but I thought this would be preferred? Also, this code branches in case we don’t have a zero sized array. If we think about the proportion of zero sized arrays in comparison to all array sizes, it only comprises of a minority 10% and some workloads not going over 1%. If we take that into consideration it would make sense to only branch out of line whenever we encounter zero sized arrays, and fall through otherwise. However, here since everything is very close and we “jump” one instruction, we shouldn’t lose locality, and the results below might prove that.

I’ve run a few benchmarks to check if there was any regression with the above solution and here are they. I’ve chosen workloads that has different proportions of zero sized arrays. Since the fix affects all GC policies, I tested against the most popular GC: gencon and balanced. I also color coded to better visualize the results, brown shades makes reference to gencon and blue shades makes reference to balanced.

Masterbranch (dark shade) is a build WITHOUT the change, whileZerobranch (light shade) contains the fix above.This is the percentage of zero sized arrays (of all array sizes) for each of the workloads:

Machine1: 24 CPUs, 58GB RAM

SPECjvm2008

SPECjbb2015 subtracted from . If difference is positive, it means that the value with the fix was higher.

Difference here mean

Max jOps: mean difference: 281, STD: ~420

Critical jOps: mean difference: -108, STD: ~140

Machine2: 32 CPUs 128GB RAM

SPECjvm2008

Machine3: 28 CPUs, 98GB RAM

Daytrader

Note: Each of the Master + gencon Daytrader run corresponds to independent runs.

Given the results above, the fix doesn’t seem to have an impact on throughput and performance. Let me know what you think and if everyone agrees I’ll go ahead and open the PR.

SPECjbb2015 with balanced GC is running as we speak and will post the results here as soon as it becomes available.

@fjeremic @andrewcraik @VermaSh