We have decided to port a complete runtime environment, OpenJDK11 + OpenJ9 + OMR (without the JIT), to a RISC-V development board (e.g. the HiFive Unleashed, which supports Linux; see https://www.sifive.com/boards/hifive-unleashed for details) and get it working on the board.

According to the explanation of RISC-V cross-compilers at https://www.lowrisc.org/docs/untether-v0.2/riscv_compile/, the JDK/JRE needs to run in user mode on Linux, because the JVM/application is multi-threaded.

Overall, there are several prerequisites before compiling OpenJ9:

- the Freedom E SDK (https://github.com/sifive/freedom-e-sdk) + OpenOCD, ready for use (both already offered at https://www.sifive.com)

- compile a cross compiler with glibc support, e.g. riscv-unknown-linux-gnu-gcc, following the instructions at https://github.com/riscv/riscv-gnu-toolchain; once built, check that it works with a simple test.

- probably the Yocto build system (https://www.yoctoproject.org/ or https://github.com/riscv/meta-riscv) to build everything, including a Linux/RISC-V kernel (https://github.com/riscv/riscv-linux), a root file system image, a bootloader, a toolchain, QEMU, etc.

- (to be added if anything else)

Considering the complexity of the port and the unexpected challenges it may bring, the project will be split into at least the following sub-tasks:

- compile a build without the JIT on Linux (changing all required config/settings to make it work) to ensure it works as expected; if everything goes well, all changes will be carried over to the compilation with riscv-unknown-linux-gnu-gcc.

- import the FFI code (libffi) for RISC-V into OpenJ9 (https://github.com/libffi/libffi/tree/master/src/riscv; an Eclipse CQ needs to be opened for that)

- figure out whether we need to modify the related config/settings in the Freedom E SDK, and how to integrate them into the config/settings of OpenJDK11 + OpenJ9 + OMR so that the build is compiled with riscv-unknown-linux-gnu-gcc.

- if needed, run the build in an emulator (e.g. QEMU) on the host to ensure it works before uploading it.

- figure out how to flash/upload the compiled build & the Linux port to the development board to get it executed/tested.

- (to be added if anything else)

The issue at #11136 was created separately to track the progress of OpenJ9 JIT on RISC-V.

FYI: @DanHeidinga, @pshipton, @tajila


@shingarov you might be interested in this one :)

So far, I have finished the following:

- verified the 32-bit HiFive1 board with simple tests (e.g. compiling/uploading a program to the board with the Freedom E SDK) to understand how it works.

- compiled a 32-bit Linux cross compiler (riscv32-unknown-linux-gnu-gcc), but there might be an issue with the compiler. Still working on that.

@pshipton, I am wondering whether we should disable shared classes on this resource-limited board (only 128M of flash memory is available, and it has to hold the Linux port/utilities, the Java build, user applications, etc.), as the shared cache is set to 300M by default.

In addition, we might need to disable some modules/unnecessary parts of the code to make things easier on the board.

@ChengJin01 jlink can generate java.base only JRE (no other modules).

We probably need to disable these modules in the OpenJDK config/settings before compiling the build for riscv32. There might be no way to run jlink on the compiled build on the host machine, because all its executables will already be in RISC-V format.

@ChengJin01 When the available disk space is small, the default shared cache size should be 64MB. However I can guess this still might be too big. We do want to ensure shared classes is working, and could set an even smaller size for this platform, like 16M or even 4M just for test purposes.

@hangshao0

Actually, this is 32-bit, so the default shared cache size should be 16MB already. The 300MB default size is for 64-bit only.

I double-checked the documentation for the HiFive1 (32-bit FE310): it actually has 16MB of off-chip flash memory (128Mbit). So the shared cache still needs to be reduced if possible.
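A quick check of these numbers, assuming 8 bits per byte and the 16MB 32-bit default cache size mentioned above:

$$
128\ \text{Mbit} = \frac{128}{8}\ \text{MB} = 16\ \text{MB}, \qquad \frac{16\ \text{MB}}{16\ \text{MB}} = 100\%, \qquad \frac{4\ \text{MB}}{16\ \text{MB}} = 25\%
$$

The default 32-bit cache alone would therefore fill the entire flash before the Linux port, the Java build or any user application is stored. A 4MB test cache would take a quarter of it.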

In addition, according to the technical specifications of the FE310 at

https://docs.platformio.org/en/latest/frameworks/freedom-e-sdk.html

https://github.com/RIOT-OS/RIOT/wiki/Board:-HiFive1

HiFive1 Features & Specifications

- Microcontroller: SiFive Freedom E310 (FE310)
- SiFive E31 RISC-V Core
- Architecture: 32-bit RV32IMAC
- ...
- Memory: 16 KB Instruction Cache, 16 KB Data Scratchpad <--- 16KB RAM
- ...
- Flash Memory: 128 Mbit Off-Chip (ISSI SPI Flash)

and the discussion of a Linux port for the HiFive1 at https://forums.sifive.com/t/is-there-a-linux-distribution-that-can-run-on-hifive1/658/5, it seems the 32-bit FE310 chip has a very small RAM (16KB), which is far from enough to support the Linux kernel.

Given that porting an OS kernel to the board is not our focus, there are two options for moving forward:

1) choose the 64-bit HiFive Unleashed (FU540, Linux-capable, multi-core / https://www.sifive.com/boards/hifive-unleashed) so that the JVM runs on Linux on the chip.

2) consider using an RTOS with 32-bit RISC-V support (it must support multi-threading, e.g. RTLinux, Zephyr, Apache Mynewt, etc.). It is still unclear whether we can make it work this way, and how much code / how many libraries in OpenJ9 would need to be adjusted to accommodate the RTOS.

@DanHeidinga

1) Can the HiFive1 boards be extended with additional RAM & disk?

2) Is there an emulator for the RISC-V that we can use while working to procure more suitable boards?

3) We want to target Linux with this work as it simplifies the rest of the porting effort.

Surely supporting the shared classes cache could be considered on the "nice to have" list rather than in the initial set of priority activities for a new platform bring up?

Is there an existing new platform bring-up (ordered) checklist? If not, could we start creating one as part of this effort?

@DanHeidinga,

- Can the HiFive1 boards be extended with additional RAM & disk?

No public document/spec shows that it can, but we can bring this question to their forum at https://forums.sifive.com (I have already raised it at https://forums.sifive.com/t/extending-the-hifive1-board-with-additional-ram-disk/2155).

- Is there an emulator for the RISC-V that we can use while working to procure more suitable boards?

The typical emulator is QEMU (https://github.com/riscv/riscv-qemu), which supports emulation of both RV64G and RV32G. It will be used to boot Linux and a shell so that we can run the JVM after compilation.

- We want to target Linux with this work as it simplifies the rest of the porting effort.

In this case, there may not be many development board options other than the 64-bit HiFive Unleashed (FU540).

Technically, most of the work (compilation, emulation, etc.) will be finished before uploading everything to the board. The upload itself is the final step to verify whether it really works on the RISC-V chip/hardware. So there is no urgency to decide which board to use for the moment, as long as we get our build working on RISC-V via the emulator.

I already talked to @mstoodle on Slack. Based on our RISC-V porting experience, we can create a high-level/generic porting guideline, not specific to any platform. It would help people understand the basic/key steps to follow when porting OpenJ9 to a new platform.


@knn-k has some recent experience porting OpenJ9 onto Aarch64. He might be helpful regarding the porting guideline.

If you are building without the JIT, you can start with these PRs, which show what I did for the AArch64 VM build:

- #3502, #3559, #4087, #4333, #4350, #4487, #4696

@knn-k, many thanks for the links to your changesets. I believe we will start with something similar (with RISC-V instruction sets). The exception is the Docker-related parts, since we need to run directly on the hardware/board.

AArch64 VM uses the Docker image for cross-compilation on x86-64 Linux.

I would appreciate any feedback from your RISC-V effort.

[1] The response regarding extending the 32-bit board with external RAM/disk is as follows:

https://forums.sifive.com/t/extending-the-hifive1-board-with-additional-ram-disk/2155/3

> A while ago, one suggestion to extend the amount of memory was to connect a SPI RAM to the board’s SPI GPIO pins. It’s slower than direct RAM but depending on the use-case it might be enough:
>
> ...
>
> Though to be honest it might very well be that your application (if it requires “far more than 16MB”) is too much for this board.

So extending the hardware this way seems tricky, and it is hard to say whether the 32-bit board can really support it.

[2] I went over the spec document for the 64-bit board at https://sifive.cdn.prismic.io/sifive%2Ffa3a584a-a02f-4fda-b758-a2def05f49f9_hifive-unleashed-getting-started-guide-v1p1.pdf:

> HiFive Unleashed is a Linux development platform for SiFive’s Freedom U540 SoC, the world’s first 4+1 64-bit multi-core Linux-capable RISC-V SoC.
>
> The HiFive Unleashed has 8GB DDR4, 32MB QuadSPI Flash, a Gigabit Ethernet port, and a MicroSD card slot (can be used to boot the linux image) for more external storage.

If this is the standard hardware setup for the 64-bit board, the board should impose no memory or storage limits on the Linux kernel + JVM, either for RAM or for the shared cache.

[3] There are 3 options for the cross-compilation & QEMU emulation:

1) manually compile all related artifacts from source, including the cross-compiler, Linux kernel, boot loader, a customized shell environment, etc. https://github.com/michaeljclark/busybear-linux already integrates everything we need for the cross-compilation.

However, it is unclear whether the generated boot image & boot loader work on real hardware.

2) https://buildroot.org/

Buildroot includes everything required for cross-compilation, but many configuration options must be chosen manually.

3) https://github.com/riscv/meta-riscv

Yocto provides a one-stop integration environment for cross-compilation. Apart from a simple choice of image type, it needs almost no manual intervention, and it comes with a full-featured Linux environment for RISC-V-based cross-development. The only drawback is that it usually ends up with a Linux image of several GB, which seems huge to us (this might not be a big problem when booting from the SD card).

I will go with option 1) and compile everything, including QEMU. This is the fastest and most straightforward way for us to solve all problems on our side before moving on to on-board/hardware verification.

If the boot process on the hardware requires a special configuration/setting that can't be done via 1), I will go back and check whether 2) or 3) works.

I have already built the 64-bit RISC-V cross compiler:

```
/opt/riscv_gnu_toolchain_64bit/bin$ ./riscv64-unknown-linux-gnu-gcc --version
riscv64-unknown-linux-gnu-gcc (GCC) 8.3.0
Copyright (C) 2018 Free Software Foundation, Inc.
This is free software; see the source for copying conditions. There is NO
warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.
```

Will keep compiling other related artifacts.

Hello,

I would like to add some comments.

First off I am a large contributor to meta-riscv and the Yocto/OpenEmbedded world in general. Please let me know what you need and I will help wherever I can. The less time you all spend on getting Linux running the more time you can spend on porting Java :)

Please do not spend time on the HiFive1 board OR 32-bit RISC-V. The HiFive1 board is very small, it's basically a RISC-V version of an Arduino. RISC-V 32-bit support is still lacking so you should start with 64-bit support. You can develop for 64-bit RISC-V on either the HiFive Unleashed or QEMU. QEMU has great RISC-V support and you should be able to do all your development on QEMU. If you want help running QEMU please let me know, I am also a QEMU maintainer and am happy to help here as well.

I think meta-riscv is the best option from the list above (option [3]). It's extremely powerful and you won't have any size issues in either QEMU or on the HiFive Unleashed. Buildroot is also an option (as a maintainer there as well, I can help if required). I would not recommend building it all yourself; you will end up wasting a lot of time building toolchains, Linux and related packages.

If you want to do native work you can also just run Fedora on QEMU and dnf install all the packages you need.

Either way, let me know how to help and I will do what I can

Something else worth noting is that most of the repos on the github riscv account are out of date. For Linux, GNU tools and QEMU you should use mainline not the riscv forks.

@alistair23, many thanks for your suggestion & recommendation. I have already finished compiling everything for 64-bit, including QEMU, with option 1) (there is just a minor issue with ntpd, which can be ignored for now). If everything goes fine, we will move on to compiling our code.

The problem with option [3] is that it ends up with a huge Linux image: I already tried it but stopped when it exceeded 9GB. What we need is a normal Linux kernel with decent shell support, so there is no need to integrate everything. With option 1), we can customize the size (e.g. 1GB or less if necessary).

I won't go for option 2) or 3) for now unless there is something unexpected when compiling our code.

Great, I'm glad you got it working!

I'm not sure how you ended up with a 9GB image; my full OpenEmbedded desktop images with debug symbols and self-hosted toolchains aren't that big.

Either way can you keep me updated with the progress? Also let me know if there is anything that I can do to help.

@alistair23, I didn't try any option other than bitbake core-image-full-cmdline as described at https://github.com/riscv/meta-riscv, and the whole folder kept growing to over 9GB (eventually filling up my VM's disk).

Basically, our code will be compiled with the 64-bit cross-compiler outside of QEMU, and the whole build will then be mounted inside QEMU to be executed there. So we definitely need your support on the QEMU side. My guess is that we will hit a number of QEMU problems once our code compiles and we try to get it working. This applies especially to debugging in QEMU if the build crashes or fails in a similar way.

Just stay subscribed to this issue and you will be kept informed of what is going on in our project.

Ah, the temporary files can get big. That makes more sense.

Great! I am subscribed to this issue so I will keep an eye on things and help where I can. QEMU is very stable and the RISC-V support is also mature. If possible it would be best to build it from the master branch in mainline QEMU. Otherwise the 3.1+ releases are in good shape.

Good luck with this. Java is one of the few missing pieces for RISC-V so it will be great when you get it running :)

Working on another crash issue on Windows. Will get back to this once we address that problem.

I am currently compiling a build without JIT components (excluded from OpenJ9 & OMR) on a Fyre Linux machine and then on my Ubuntu VM to eliminate any compilation error with JIT excluded.

If it works, the next step is to introduce the RISC-V toolchain in the configure/settings to see what happens. For now, the spec settings & RISC-V-specific code will be ignored; the goal is just to ensure the correct cross-compiler/tools are picked up during compilation.

I just finished the compilation on Linux without the JIT on my Ubuntu VM:

```
root@jincheng-VirtualBox:...# jdk/bin/java -version
JVMJ9VM011W Unable to load j9jit29: /home/jincheng/RISC_V_OPENJ9/openj9-openjdk-jdk11/build/linux-x86_64-normal-server-release/images/jdk/lib/compressedrefs/libj9jit29.so: cannot open shared object file: No such file or directory
openjdk version "11.0.3-internal" 2019-04-16
OpenJDK Runtime Environment (build 11.0.3-internal+0-adhoc.root.openj9-openjdk-jdk11)
Eclipse OpenJ9 VM (build master-32fb64b, JRE 11 Linux amd64-64-Bit Compressed References 20190401_000000 (JIT disabled, AOT disabled)
OpenJ9 - 32fb64b
OMR - 5ceecf1
JCL - ff6f49a based on jdk-11.0.3+2)
```

Next, I will start figuring out how to modify the config/settings for RISC-V on the OpenJDK11 side.

The main reasons our build needs to be compiled outside of QEMU/Linux are:

1) Apart from the cross-compilers (gcc/g++ for RISC-V), riscv-gnu-toolchain & QEMU/Linux do not provide all the tools & commands (e.g. m4) required for compiling the JDK. There is no need for them to, as these tools are already available on the Linux-based build platform.

2) The boot JDK (which is not compiled with the riscv cross-compiler) can't be executed in QEMU/Linux and must be used outside during the whole compilation.

The first problem we encountered in the JDK configure check is the lack of X11 support in riscv-gnu-toolchain (it is required for libawt in the JDK):

```
checking for X... no
configure: error: Could not find X11 libraries.
configure:58129: /opt/riscv_gnu_toolchain_64bit/bin/riscv64-unknown-linux-gnu-g++ -E conftest.cpp
conftest.cpp:21:10: fatal error: X11/Xlib.h: No such file or directory
#include <X11/Xlib.h>
^~~~~~~~~~~~
compilation terminated.
...
```

https://github.com/riscv/riscv-gnu-toolchain/tree/master/linux-headers/include

```
/riscv-gnu-toolchain/linux-headers/include# ls -l
total 48
drwxr-xr-x 2 root root 4096 Apr 2 12:15 asm
drwxr-xr-x 2 root root 4096 Apr 2 12:15 asm-generic
drwxr-xr-x 2 root root 4096 Apr 2 12:15 drm
drwxr-xr-x 28 root root 16384 Apr 2 21:10 linux
drwxr-xr-x 2 root root 56 Apr 2 12:15 misc
drwxr-xr-x 2 root root 135 Apr 2 12:15 mtd
drwxr-xr-x 3 root root 4096 Apr 2 12:15 rdma
drwxr-xr-x 3 root root 145 Apr 2 12:15 scsi
drwxr-xr-x 2 root root 321 Apr 2 12:15 sound
drwxr-xr-x 2 root root 89 Apr 2 12:15 video
drwxr-xr-x 2 root root 110 Apr 2 12:15 xen
<----------------------------------------------------- X11 is missing in riscv-gnu-toolchain
```

The X11 libraries would need to be part of riscv-gnu-toolchain; otherwise the cross-compiler/linker fails to locate them during compilation. These libraries are for the QEMU guest, so we can't use the host's copy on the build platform.

There are two options to deal with the problem:

1) figure out how to integrate X11 (https://github.com/mirror/libX11) into riscv-gnu-toolchain, which might take a while to get working.

2) skip X11 in configure for the moment and disable all X11-related code later in the compilation to see how far we can get. If that works out, we will come back to X11 once we finish compiling the JDK build.

@alistair23, do you know the basic steps to build X11 for riscv-gnu-toolchain?

> the X11 libraries should be part of riscv-gnu-toolchain otherwise the cross-compiler/linker fails to locate them during the compilation (the library will be used in QEMU/So we can't use the same one on the building platform).

The problem isn't that you don't have X11 in the toolchain, it's that you don't have the X11 libraries installed for the guest. My advice would be to use Yocto/OpenEmbedded to build a rootFS. Continuing to build these manually will be more and more of a pain.

The other option is to use RISC-V Fedora, where you probably can dnf install the libraries.

> 1. Except the cross-compilers (gcc/g++ for riscv), a bunch of tools & commands (e.g. m4, etc) required in compiling the JDK are not entirely provided in riscv-gnu-toolchain & QEMU/Linux and there is no need to do so as they are offered on the Linux-based building platform.

This doesn't sound right. This sounds like you haven't installed the required tools onto the guest image. Almost all packages that run on x86 will run on RISC-V, about 90% of the Fedora/Debian packages are being cross compiled. My colleague just checked and m4 can be installed in Fedora with a simple dnf install and it's available as a RISC-V target package in OpenEmbedded.

If you would like to continue cross compiling the packages for RISC-V then I strongly recommend using meta-riscv and OpenEmbedded. It will handle all of these complexities for you as cross compiling an entire distro is a lot of work. If you want to do native compile/development then Fedora (or Debian) is the way to go.

> 2. The boot JDK (which is not compiled with the riscv cross-compiler) can't be executed in QEMU/Linux and must be used outside during the whole compilation.

Does this mean that it is impossible to natively compile JDK on a non-x86 architecture?


Technically yes. The JDK is compiled with the cross-compiler, the macro preprocessor and other tools, together with the boot JDK (used for the Java sources). The boot JDK is only built for the host and can't be used/executed in QEMU.


The compiler, macro pre-processor and standard build tools will all work on a non-x86 native compile.

So boot JDK is x86 only?

When you say can't be used in QEMU, do you mean QEMU specifically or just that it can't be built on non-x86 architectures?

> So boot JDK is x86 only?

Not really; we always need an already compiled boot JDK for the compilation. Compiling a JDK always involves a ready-to-use boot JDK, which in our case exists only for x86. If everything, including the boot JDK, were available inside QEMU, there would be no need to compile outside of it.


Ah, so the boot JDK is a bootstrapper that allows you to compile. In this case the boot JDK is already compiled, and only for x86, so you need to do an x86 host cross compile.

Thanks for clarifying what boot JDK is.

In which case a native Fedora style compile won't work for you. I still recommend OpenEmbedded then.


In that case, can the cross-compiler locate the correct X11 libraries provided by OpenEmbedded (the whole compilation must happen outside of QEMU)?

OpenEmbedded will allow you to build the entire distro on your host machine (x86).

OpenEmbedded will build the X11 libraries for you and will link with them.

@alistair23, many thanks for your suggestion. I will see whether OpenEmbedded works for us in this case. Let me know if there is anything I need to be aware of when installing/building OpenEmbedded (links, guides, etc.).

If you want I can have a go at it. Is there some sort of Java that can be cross compiled today?

I would recommend just following the docs in meta-riscv. There is also the Yoe distro (https://github.com/YoeDistro/yoe-distro) that seems easy to use, although I don't use it.

> Is there some sort of Java that can be cross compiled today?

It might exist, but as far as I know it isn't publicly available. You could try HotSpot OpenJDK to see how it works. Actually, that's what we are currently doing here.

I figured that, I just meant is there maybe some WIP that I could test the build process with or maybe config options to disable the JIT and interpreter. I misread what you posted above and thought you had something running on RISC-V.

Either way, let me know if there is anything else you need

@alistair23, I have already followed the instructions at https://github.com/riscv/meta-riscv to build the image on one of our internal Ubuntu VMs:

```
bitbake core-image-full-cmdline
runqemu qemux86 nographic
...
root@qemuriscv64:/# uname -a
Linux qemuriscv64 5.0.3-yocto-standard #1 SMP PREEMPT Wed Apr 3 23:31:29 UTC 2019 riscv64 riscv64 riscv64 GNU/Linux
```

It seems this is pretty much the same as the QEMU environment I had already built (I didn't find any compiler/toolchain pre-installed in QEMU). That environment is only used to launch the target JDK, not to compile the JDK source. Even if the toolchain were installed there, there would be no way to launch the boot JDK inside, as it is compiled for Linux/x86_64.

In our case, the cross-compiler (riscv64 gcc/g++) must work together with the boot JDK (already compiled for Linux/x86_64) to compile the native and Java code for the target JDK (which runs on riscv64). The target JDK configuration therefore requires the cross-compiler/toolchain to run on the same platform as the boot JDK: Linux/x86_64, outside of QEMU.

I also checked an online Fedora/RISC-V environment (also without X11 headers at /usr/include) at https://rwmj.wordpress.com/2018/09/13/run-fedora-risc-v-with-x11-gui-in-your-browser/, which ended up with the same error.

It seems a number of native headers in OpenJDK require the X11 libraries to compile, which means we can't simply ignore X11 during compilation. We might still need to figure out how to get X11 working in riscv-gnu-toolchain, or find another way around it.

Does configuring with --with-x=no work around the issue?

It doesn't help and ends up with the following error:

```
checking how to link with libstdc++... static
configure: error: It is not possible to disable the use of X11. Remove the --without-x option.
configure exiting with result code 1
```

as already explained at /riscv_openj9-openjdk-jdk11/make/autoconf/lib-x11.m4

```
if test "x${with_x}" = xno; then
  AC_MSG_ERROR([It is not possible to disable the use of X11. Remove the --without-x option.])
fi
```

and /riscv_openj9-openjdk-jdk11/make/autoconf/libraries.m4

```
AC_DEFUN_ONCE([LIB_DETERMINE_DEPENDENCIES],
[
  # Check if X11 is needed
  if test "x$OPENJDK_TARGET_OS" = xwindows || test "x$OPENJDK_TARGET_OS" = xmacosx; then
    # No X11 support on windows or macosx
    NEEDS_LIB_X11=false
  else
    # All other instances need X11, even if building headless only, libawt still
    # needs X11 headers. <---------------------------
    NEEDS_LIB_X11=true
  fi
```

I managed to hack the configure script to disable the X11 libraries, and will keep checking the remaining configure errors.

> It seems this is pretty much the same one as I already done before to build the QEMU environment (I didn't find any compiler/toolchain pre-installed in the QEMU), which is only used to launch the target JDK instead of compiling the JDK source. Even though the toolchain is installed in there, there is no way to launch boot JDK inside as it is compiled on Linux/X86_64.

Yep, OpenEmbedded doesn't include the toolchain in the guest image by default. To install it just edit your IMAGE_FEATURES variable in your conf/local.conf file.

Something like this will give you development and debug packages for all installed packages and it will install the tools required to build and debug natively.

```
IMAGE_FEATURES += "debug-tweaks dev-pkgs dbg-pkgs tools-sdk tools-debug"
```

I don't understand why you need native RISC-V compiler tools though. I thought because you need the boot strap boot JDK which only runs on x86 you need to do a cross compile?

> I also checked the result from an online Fedora/RISC-V environment (also without X11 headers at /usr/include) at https://rwmj.wordpress.com/2018/09/13/run-fedora-risc-v-with-x11-gui-in-your-browser/

I don't understand this. I have been saying you can use Fedora and you can run it directly in QEMU. I thought that isn't an option because you need to cross compile on x86 due to the boot JDK boot strapper?

> I don't understand why you need native RISC-V compiler tools though. I thought because you need the boot strap boot JDK which only runs on x86 you need to do a cross compile?

We don't need the toolchains for now, but it is better to have them ready for later use (compiling & debugging).

The theory is this: if we can generate the first JDK for RISC-V via cross-compilation (it has to be done this way), that JDK can then serve as the first boot JDK on RISC-V. The whole compilation could then move into QEMU along with the generated JDK, with no further need for cross-compilation. That would make things much easier for us to manage later on.

> I don't understand this. I have been saying you can use Fedora and you can run it directly in QEMU. I thought that isn't an option because you need to cross compile on x86 due to the boot JDK boot strapper?

This is just to double-check to see whether there is any other option for us.

> @alistair23 , I already followed the instructions at https://github.com/riscv/meta-riscv to build the image on one of our internal Ubuntu VM.

So the next step if you want to keep using OpenEmbedded is to either add OpenJ9 to the meta-java layer or to just build an SDK and use that.

If you don't have a lot of OpenEmbedded experience it is probably easier to just build the host SDK as then you don't have to work with the OpenEmbedded build system. The advantage with using the build system though is then you can upstream your OpenJ9 support to meta-java allowing others to use it (not just for RISC-V).

If you do opt for the SDK option (probably the best starting place) then you can build the SDK with this command:

```
MACHINE=qemuriscv64 bitbake meta-toolchain
```

> No need to have tool chains for now but it is better to be ready for use later (compiling & debugging)
>
> The theory is, if we are able to generate the first JDK on RISCV via cross-compilation (it has to be this way), then the generated JDK can be used as the first boot JDK on RISCV. That means the total compilation can be moved into QEMU along with the generated JDK and there is no need to do via cross-compilation after that, which is perfect for us to manage in later use.

Makes sense! This can be easily done with the IMAGE_FEATURES I mentioned above.

You will probably want to set DISTRO_FEATURES += "x11" as well, to install X11.

> This is just to double-check to see whether there is any other option for us.

Great!

> IMAGE_FEATURES += "debug-tweaks dev-pkgs dbg-pkgs tools-sdk tools-debug"
>
> You will probably want to set DISTRO_FEATURES += "x11" as well, to install X11.

All of this makes OpenEmbedded look like the perfect option for running/debugging the JDK, and for compiling a fully featured JDK later on once we finish the cross-compilation.

I have already finished the configure step on the OpenJDK11 side:

risc64_configure_log.txt

```
...
A new configuration has been successfully created in
/root/jchau/temp/.../openj9-openjdk-jdk11/build/linux-riscv64-normal-server-release
using configure arguments '--disable-ddr --openjdk-target=riscv64-unknown-linux-gnu --with-freemarker-jar=/root/jchau/temp/freemarker.jar'.

Configuration summary:
* Debug level: release
* HS debug level: product
* JVM variants: server
* JVM features: server: 'cds cmsgc compiler1 compiler2 epsilongc g1gc jfr jni-check jvmti management nmt parallelgc serialgc services vm-structs'
* OpenJDK target: OS: linux, CPU architecture: riscv64, address length: 64
* Version string: 11.0.3-internal+0-adhoc.root.openj9-openjdk-jdk11 (11.0.3-internal)

Tools summary:
* Boot JDK: openjdk version "11.0.1" 2018-10-16 OpenJDK Runtime Environment AdoptOpenJDK (build 11.0.1+13) OpenJDK 64-Bit Server VM AdoptOpenJDK (build 11.0.1+13, mixed mode) (at /root/jchau/temp/jdk-11.0.1_13_hotspot)
* Toolchain: gcc (GNU Compiler Collection)
* C Compiler: Version 8.3.0 (at /opt/riscv_gnu_toolchain_64bit/bin/riscv64-unknown-linux-gnu-gcc)
* C++ Compiler: Version 8.3.0 (at /opt/riscv_gnu_toolchain_64bit/bin/riscv64-unknown-linux-gnu-g++)

Build performance summary:
* Cores to use: 8
* Memory limit: 16046 MB
```

The following native libraries required by OpenJDK11 are temporarily disabled, as these libraries (only available when installed on a riscv64-based platform) are not provided by the cross-compilation toolchain:

NEEDS_LIB_X11 = false ---> libx11-dev (X11 Windows system)

NEEDS_LIB_FONTCONFIG = false ----> libfontconfig1-dev (fontconfig)

NEEDS_LIB_CUPS = false ---> libcups2-dev (Common Unix Printing System)

Next I will start modifying/adding the required makefile-related settings (spec, flags, module.xml, etc.) in OpenJ9 & OMR. For any source & assembly code specific to riscv64, I will temporarily add stub/empty files to see whether we can get through the compilation.

Cool!

There seems to be an issue with FreeType, which needs to be disabled on the OpenJDK11 side (it is not provided by the cross-compilation toolchain). Still checking the corresponding scripts to address the problem.

Already disabled the native FreeType libraries in OpenJDK11; continuing to work on the makefiles/scripts in OpenJ9 & OMR.

Already finished most of the changes (all kinds of settings, makefile-related scripts, stub code, etc.) in OpenJ9 & OMR, and currently working on an issue with the trace/hook tools (tracegen/tracemerge/hookgen, which are used to generate a number of header files) when trying to cross-compile a build.

I need to find a way in the settings to ensure these tools are built only by the local (host) compiler rather than the cross-compiler, since the header files must be generated locally by these tools before building with the cross-compiler.

Just resolved the issue with the trace/hook tools in OMR by overriding the cross-compiler with the local compiler only when compiling the sources of these tools. Still investigating a similar issue with the constgen tool in OpenJ9 (need to check whether it has to be built by the local compiler).

It turns out the constgen tool (only used to generate JIT-related constants) should be skipped, since we are not compiling the JIT. Now getting back to the issue with signal handling (part of the code base needs to be filled in according to the RISC-V instruction set specification to get through the compilation).

Currently working on a verification-related issue raised by an external user. Will get back to this after fixing it.

Already finished the signal handling code from the compilation perspective (there might still be errors, but it passes compilation for now; I will revisit it if anything goes wrong later at runtime), and I am currently dealing with the errors in libffi/riscv64 to make it compatible with the existing libffi settings in OpenJ9.

dealing with the errors in libffi/riscv64

Have you checked the libffi project? They may have already done a port to riscv64.

libffi is ported to RISC-V. You need to make sure you use the release candidate (or master).

I already copied the riscv64 part of the libffi project at https://github.com/libffi/libffi/tree/master/src/riscv into our code, but I need to resolve the compatibility issues detected during compilation, as the libffi code in OpenJ9 is based on an older version. Since there is no need to update the whole libffi in OpenJ9 to the latest version, I only need to make a few modifications to get it to compile (some macros/functions were renamed or removed in the latest libffi and need to be restored for OpenJ9).

Already fixed the compatibility issue with libffi/riscv in OpenJ9; the compilation now fails at:

/opt/riscv_gnu_toolchain_64bit/lib/gcc/riscv64-unknown-linux-gnu/8.3.0/../../../../riscv64-unknown-linux-gnu/bin/ld: ../..//libj9vm29.so: undefined reference to `__riscv_flush_icache'

As explained by the cross-toolchain developer at http://rodrigo.ebrmx.com/github_/riscv/riscv-gcc/issues/140, this is a versioning problem with riscv-glibc (2.26). To be specific, the cross-compiler (gcc 8.3.0) requires __riscv_flush_icache, which was first defined/implemented in glibc 2.27 (https://fossies.org/diffs/glibc/2.26_vs_2.27/manual/platform.texi-diff.html).

Given that there is no clear roadmap for when glibc 2.27 will be available in the cross-toolchain (they mentioned they were working on it, but there has been no update on their progress over the past years), and there is currently no code in OpenJ9 that calls __riscv_flush_icache for riscv, I will try to disable __riscv_flush_icache in the source of the cross-toolchain and recompile the whole toolchain to see whether OpenJ9 then compiles; otherwise, we might need to manually port all code related to __riscv_flush_icache from glibc 2.27 (https://sourceware.org/git/?p=glibc.git / https://www.gnu.org/software/libc/sources.html) to riscv-glibc (2.26) to meet the needs of the cross-compiler gcc 8.3.0.

In addition, simply replacing riscv-glibc in the cross-toolchain with glibc 2.27 doesn't help, as the riscv-specific config/settings in riscv-glibc (2.26) are different from those in glibc 2.27.

The cross-compiler works well now after recompiling the cross-toolchain with __riscv_flush_icache disabled. Now working through a number of warnings-as-errors detected during compilation, leaving aside the code issue with cinterp.m4.

Since cinterp.m4 contains the assembly code that invokes the interpreter (from callin) and has to be written by hand against the RISC-V instruction set, I am temporarily leaving these stub files (oti/rv64helpers.m4 & vm/rv64cinterp.m4) in place; they will be the last issue addressed, once everything else in the compilation is resolved.

Already solved all warnings-as-errors in JDK11, OpenJ9 and OMR; currently adding the DDR-related changes (previously disabled) to see how the compilation goes.

Just double-checked /runtime/ddr/module.xml and realized that j9ddrgen either has to be built on the target platform (Linux/QEMU in our case) or has to be disabled, as there is no way to build the tool with the local compiler when compiling with the cross-compiler (unlike the trace/hook tools, where the cross-compiler can be overridden for the benefit of the cross-compilation). So no further DDR changes will be made for now during the cross-compilation.

The other thing to address immediately is still the glibc issue.

I compiled a simple test (printing hello world) locally with the cross-compiler and uploaded it to Fedora-riscv/QEMU (the images at https://fedorapeople.org/groups/risc-v/disk-images/ can be launched directly with QEMU after downloading; no need to compile anything):

/opt/riscv_gnu_toolchain/bin/riscv64-unknown-linux-gnu-gcc --sysroot=/opt/riscv_gnu_toolchain/sysroot -o test test.c

and it failed to locate the required glibc version:

# ./test

./test: /lib64/lp64d/libc.so.6: version `GLIBC_2.26' not found (required by ./test)

# uname -a

Linux stage4.fedoraproject.org 4.19.0-rc8 #1 SMP Wed Oct 17 15:11:25 UTC 2018 riscv64 riscv64 riscv64 GNU/Linux

# ls -l /lib64/lp64d/libc.so.6

lrwxrwxrwx 1 root root 17 Mar 4 2018 /lib64/lp64d/libc.so.6 -> libc-2.27.9000.so

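It means a program linked against riscv-glibc 2.26 cannot run on this system: the binary requires the GLIBC_2.26 symbol version, which the Fedora glibc (2.27.9000, based on upstream glibc, whose RISC-V port starts at GLIBC_2.27) does not provide. So the glibc on the target platform has to match the one in the cross-toolchain.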

Given that Fedora/riscv comes with glibc 2.27 (https://secondary.fedoraproject.org/pub/alt/risc-v/RPMS/riscv64/):

glibc-2.27.9000-7.fc28.riscv64.rpm 2018-05-11 21:20 3.0M

and OpenEmbedded/riscv comes with glibc 2.29

at https://layers.openembedded.org/layerindex/branch/master/layer/openembedded-core/

glibc 2.29 GLIBC (GNU C Library)

I will first try recompiling the whole cross-toolchain, replacing glibc 2.26 with https://github.com/riscv/riscv-glibc/tree/riscv-glibc-2.27, to see whether it works for us.

Still working on the issue with riscv-glibc 2.27 in the cross-toolchain. There seems to be a versioning problem with the Linux headers, plus code issues in riscv-glibc.2.27/sysdeps/unix/sysv/linux/riscv/flush-icache.c (not used in libffi/riscv). So I need to hack the code/makefiles to remove this code and see whether it works.

In addition, it seems there is currently no gdb on Fedora/riscv. According to the explanation at https://github.com/riscv/riscv-binutils-gdb/issues/157, gdb has to be built from scratch with all the required patches.

Double-checked on Fedora/riscv as follows:

Last login: Sun Jan 28 15:59:08 on ttyS0

[root@stage4 ~]# uname -r

4.19.0-rc8

[root@stage4 ~]# uname -a

Linux stage4.fedoraproject.org 4.19.0-rc8 #1 SMP Wed Oct 17 15:11:25 UTC 2018 riscv64 riscv64 riscv64 GNU/Linux

[root@stage4 ~]# gcc --version

gcc (GCC) 7.3.1 20180303 (Red Hat 7.3.1-5)

Copyright (C) 2017 Free Software Foundation, Inc.

This is free software; see the source for copying conditions. There is NO

warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

[root@stage4 ~]# gdb --version

-bash: gdb: command not found <------------ no gdb support on Fedora/riscv

In that case, we might need to prepare everything for OpenEmbedded at https://github.com/riscv/meta-riscv (including a cross-toolchain built with glibc 2.29 and a Linux image booted with QEMU), unless the build compiled with glibc 2.27 works perfectly on Fedora-riscv/QEMU on the first try (the JVM will most likely crash, as there may be a number of errors in the code/script changes).

I just found that the branch at https://github.com/riscv/riscv-gnu-toolchain/tree/linux-headers-4.15-rc3 already comes with the latest Linux header files plus glibc 2.27. Instead of hacking the existing code/makefiles on glibc 2.26 (which also works, but may introduce some issues in compilation), I will try compiling this branch to see how it goes.

Jan and I spent many months going through similar torture before we got to a point where we could make any progress on the OMR JIT side. Especially gdb: even though it's been claimed by some writers that gdb has been "working" for a while, it took Jan (who is one of the gdb maintainers) a significant amount of effort to get it to be actually useful for real-life debugging.

IMHO the shortest / most effort-effective path to getting a functioning Linux-RV64 dev system is to follow Jan's scripts, for the kernel, the root fs, and gdb:

https://github.com/janvrany/riscv-debian

They work both for QEMU emulation of RV64 and on real silicon (at least on SiFive Unleashed for sure). I am not saying this scenario fits everyone (e.g. I don't know whether you may have specific reasons why you need Openembedded), I am saying these scripts summarize the half-year of desperate frustrations Jan and I had, and attempt to give a clear shortcut for others to not have to go through the same long thorny path.

I don't know whether you may have specific reasons why you need Openembedded...

@shingarov, many thanks for your suggestion. However, our main effort right now is to generate the first JDK, which must be built via cross-compilation rather than native compilation inside a functioning Linux-RV64 dev system (there is no way to build it inside Linux/QEMU, as the tools/Java files have to be compiled with a local compiler first). In that case, the Linux headers/glibc provided by the cross-toolchain must stay consistent with their counterparts in Linux/QEMU. So OpenEmbedded (with glibc 2.29) remains our backup option if the cross-toolchain works with glibc 2.29.

Resolved the glibc issue in the toolchain by replacing the older Linux header files and riscv-glibc (2.26) with the latest ones from https://github.com/riscv/riscv-gnu-toolchain/tree/linux-headers-4.15-rc3; continuing with the cross-compilation of OpenJDK11/OpenJ9/OMR.

Currently working on a verifier-related issue and will get back to this after fixing it.

After pulling the latest changes in OpenJDK11/OpenJ9/OMR, the compilation failed as follows:

../../../gc_glue_java/PointerArrayObjectScanner.hpp: In member function 'virtual GC_IndexableObjectScanner* GC_PointerArrayObjectScanner::splitTo(MM_EnvironmentBase*, void*, uintptr_t)':

../../../gc_glue_java/PointerArrayObjectScanner.hpp:139:132: error: invalid new-expression of abstract class type 'GC_PointerArrayObjectScanner'

new(splitScanner) GC_PointerArrayObjectScanner(env, _parentObjectPtr, _basePtr, _limitPtr, _endPtr, _endPtr + splitAmount, _flags);

The history shows that the changes above were introduced by GC at #5684 & #5714 last week, and the compilation passes locally with gcc v5.4 and v7.3.

Given that the cross-compiler version in the toolchain is v8.3, I will:

1) first try recompiling the toolchain, replacing v8.3 with v7.3 from https://github.com/riscv/riscv-gcc/tree/riscv-gcc-7.3.0, to see whether that gets us through the compilation;

2) otherwise, modify the GC code to satisfy the rules enforced by v8.3 (this kind of error typically means the class still has unimplemented pure virtual functions, as explained at https://stackoverflow.com/questions/23827014/invalid-new-expression-of-abstract-class-type).

Fixed the issue with the GC changes (it turns out the latest GC changes somehow didn't get synchronized into my OpenJ9 branch, which led to a mismatch between OpenJ9 and OMR); continuing the compilation to see whether any other issues remain in building the JDK.

I have fixed most of the script/makefile-related issues in the cross-compilation:

configure.log.txt

build.log.txt

Now I need to double-check the validity checks on the cross-toolchain to see whether they really matter for building the JDK, as there seems to be a mismatch between the initial configure and the OMR configure during compilation:

[1] configure at the very beginning

checking whether we are using the GNU C compiler... yes

checking whether /opt/riscv_gnu_toolchain_glibc2.27_v3/bin/riscv64-unknown-linux-gnu-gcc accepts -g... yes

checking for /opt/riscv_gnu_toolchain_glibc2.27_v3/bin/riscv64-unknown-linux-gnu-gcc option to accept ISO C89... none needed

checking for riscv64-unknown-linux-gnu-g++... /opt/riscv_gnu_toolchain_glibc2.27_v3/bin/riscv64-unknown-linux-gnu-g++

checking resolved symbolic links for CXX... no symlink

configure: Using gcc C++ compiler version 7.3.0 [riscv64-unknown-linux-gnu-g++ (GCC) 7.3.0]

checking whether we are using the GNU C++ compiler... yes

checking whether /opt/riscv_gnu_toolchain_glibc2.27_v3/bin/riscv64-unknown-linux-gnu-g++ accepts -g... yes

checking how to run the C preprocessor... /opt/riscv_gnu_toolchain_glibc2.27_v3/bin/riscv64-unknown-linux-gnu-gcc -E

checking how to run the C++ preprocessor... /opt/riscv_gnu_toolchain_glibc2.27_v3/bin/riscv64-unknown-linux-gnu-g++ -E

configure: Using gcc linker version 2.32 [GNU ld (GNU Binutils) 2.32]

[2] configure in OMR during the cross-compilation

/usr/bin/make -C omr -f run_configure.mk 'SPEC=linux_riscv64_cmprssptrs_cross' 'OMRGLUE=../gc_glue_java' 'CONFIG_INCL_DIR=../gc_glue_java/configure_includes' 'OMRGLUE_INCLUDES=../oti ../include ../gc_base ../gc_include ../gc_stats ../gc_structs ../gc_base ../include ../oti ../nls ../gc_include ../gc_structs ../gc_stats ../gc_modron_standard ../gc_realtime ../gc_trace ../gc_vlhgc' 'EXTRA_CONFIGURE_ARGS='

make[5]: Entering directory '/root/jchau/temp/RISCV_OPENJ9_v2/openj9-openjdk-jdk11/build/linux-riscv64-normal-server-release/vm/omr'

sh configure --disable-auto-build-flag 'OMRGLUE=../gc_glue_java' 'SPEC=linux_riscv64_cmprssptrs_cross' --enable-OMRPORT_OMRSIG_SUPPORT --enable-OMR_GC --enable-OMR_PORT --enable-OMR_THREAD --enable-OMR_OMRSIG --enable-tracegen --enable-OMR_GC_ARRAYLETS --enable-OMR_GC_DYNAMIC_CLASS_UNLOADING --enable-OMR_GC_MODRON_COMPACTION --enable-OMR_GC_MODRON_CONCURRENT_MARK --enable-OMR_GC_MODRON_SCAVENGER --enable-OMR_GC_CONCURRENT_SWEEP --enable-OMR_GC_SEGREGATED_HEAP --enable-OMR_GC_HYBRID_ARRAYLETS --enable-OMR_GC_LEAF_BITS --enable-OMR_GC_REALTIME --enable-OMR_GC_VLHGC --enable-OMR_PORT_ASYNC_HANDLER --enable-OMR_THR_CUSTOM_SPIN_OPTIONS --enable-OMR_NOTIFY_POLICY_CONTROL --disable-debug 'lib_output_dir=$(top_srcdir)/../lib' 'exe_output_dir=$(top_srcdir)/..' 'GLOBAL_INCLUDES=$(top_srcdir)/../include' --enable-debug --enable-OMR_THR_THREE_TIER_LOCKING --enable-OMR_THR_YIELD_ALG --enable-OMR_THR_SPIN_WAKE_CONTROL --enable-OMRTHREAD_LIB_UNIX --enable-OMR_ARCH_RISCV --enable-OMR_ENV_LITTLE_ENDIAN --enable-OMR_PORT_CAN_RESERVE_SPECIFIC_ADDRESS --enable-OMR_GC_IDLE_HEAP_MANAGER --enable-OMR_GC_TLH_PREFETCH_FTA --enable-OMR_GC_CONCURRENT_SCAVENGER --enable-OMR_GC_ARRAYLETS --host=riscv64-unknown-linux-gnu --enable-OMR_ENV_DATA64 'OMR_TARGET_DATASIZE=64' --enable-OMR_GC_COMPRESSED_POINTERS --enable-OMR_INTERP_COMPRESSED_OBJECT_HEADER --enable-OMR_INTERP_SMALL_MONITOR_SLOT --build=x86_64-pc-linux-gnu 'OMR_CROSS_CONFIGURE=yes' 'AR=riscv64-unknown-linux-gnu-ar' 'AS=riscv64-unknown-linux-gnu-as' 'CC=riscv64-unknown-linux-gnu-gcc --sysroot=/opt/riscv_gnu_toolchain_glibc2.27_v3/sysroot' 'CXX=riscv64-unknown-linux-gnu-g++ --sysroot=/opt/riscv_gnu_toolchain_glibc2.27_v3/sysroot' 'OBJCOPY=riscv64-unknown-linux-gnu-objcopy' libprefix=lib exeext= solibext=.so arlibext=.a objext=.o 'CCLINKEXE=$(CC)' 'CCLINKSHARED=$(CC)' 'CXXLINKEXE=$(CXX)' 'CXXLINKSHARED=$(CXX)' 'OMR_HOST_OS=linux' 'OMR_HOST_ARCH=riscv' 'OMR_TOOLCHAIN=gcc' 'OMR_BUILD_TOOLCHAIN=gcc' 'OMR_TOOLS_CC=gcc' 'OMR_TOOLS_CXX=g++' 
'OMR_BUILD_DATASIZE=64'

checking build system type... x86_64-pc-linux-gnu

checking host system type... riscv64-unknown-linux-gnu

checking OMR_HOST_OS... linux

checking OMR_HOST_ARCH... riscv

checking OMR_TARGET_DATASIZE... 64

checking OMR_TOOLCHAIN... gcc

checking for riscv64-unknown-linux-gnu-gcc... (cached) /opt/riscv_gnu_toolchain_glibc2.27_v3/bin/riscv64-unknown-linux-gnu-gcc

checking whether we are using the GNU C compiler... no <--------

checking whether /opt/riscv_gnu_toolchain_glibc2.27_v3/bin/riscv64-unknown-linux-gnu-gcc accepts -g... no

checking for /opt/riscv_gnu_toolchain_glibc2.27_v3/bin/riscv64-unknown-linux-gnu-gcc option to accept ISO C89... unsupported

checking whether we are using the GNU C++ compiler... no <------

checking whether riscv64-unknown-linux-gnu-g++ --sysroot=/opt/riscv_gnu_toolchain_glibc2.27_v3/sysroot accepts -g... no

checking for numa.h... no

compared with the OMR configure during the compilation on x86:

/usr/bin/make -C omr -f run_configure.mk 'SPEC=linux_x86-64_cmprssptrs' 'OMRGLUE=../gc_glue_java' 'CONFIG_INCL_DIR=../gc_glue_java/configure_includes' 'OMRGLUE_INCLUDES=../oti ../include ../gc_base ../gc_include ../gc_stats ../gc_structs ../gc_base ../include ../oti ../nls ../gc_include ../gc_structs ../gc_stats ../gc_modron_standard ../gc_realtime ../gc_trace ../gc_vlhgc' 'EXTRA_CONFIGURE_ARGS='

make[5]: Entering directory '/root/jchau/temp/openj9-openjdk-jdk11/build/linux-x86_64-normal-server-release/vm/omr'

sh configure --disable-auto-build-flag 'OMRGLUE=../gc_glue_java' 'SPEC=linux_x86-64_cmprssptrs' --enable-OMRPORT_OMRSIG_SUPPORT --enable-OMR_GC --enable-OMR_PORT --enable-OMR_THREAD --enable-OMR_OMRSIG --enable-tracegen --enable-OMR_GC_ARRAYLETS --enable-OMR_GC_DYNAMIC_CLASS_UNLOADING --enable-OMR_GC_MODRON_COMPACTION --enable-OMR_GC_MODRON_CONCURRENT_MARK --enable-OMR_GC_MODRON_SCAVENGER --enable-OMR_GC_CONCURRENT_SWEEP --enable-OMR_GC_SEGREGATED_HEAP --enable-OMR_GC_HYBRID_ARRAYLETS --enable-OMR_GC_LEAF_BITS --enable-OMR_GC_REALTIME --enable-OMR_GC_SCAVENGER_DELEGATE --enable-OMR_GC_STACCATO --enable-OMR_GC_VLHGC --enable-OMR_PORT_ASYNC_HANDLER --enable-OMR_THR_CUSTOM_SPIN_OPTIONS --enable-OMR_NOTIFY_POLICY_CONTROL --disable-debug 'lib_output_dir=$(top_srcdir)/../lib' 'exe_output_dir=$(top_srcdir)/..' 'GLOBAL_INCLUDES=$(top_srcdir)/../include' --enable-debug --enable-OMR_THR_THREE_TIER_LOCKING --enable-OMR_THR_YIELD_ALG --enable-OMR_THR_SPIN_WAKE_CONTROL --enable-OMRTHREAD_LIB_UNIX --enable-OMR_ARCH_X86 --enable-OMR_ENV_DATA64 --enable-OMR_ENV_LITTLE_ENDIAN --enable-OMR_GC_COMPRESSED_POINTERS --enable-OMR_GC_IDLE_HEAP_MANAGER --enable-OMR_GC_TLH_PREFETCH_FTA --enable-OMR_GC_CONCURRENT_SCAVENGER --enable-OMR_INTERP_COMPRESSED_OBJECT_HEADER --enable-OMR_INTERP_SMALL_MONITOR_SLOT --enable-OMR_PORT_CAN_RESERVE_SPECIFIC_ADDRESS --enable-OMR_PORT_NUMA_SUPPORT libprefix=lib exeext= solibext=.so arlibext=.a objext=.o 'AR=ar' 'AS=as' 'CC=gcc' 'CCLINKEXE=gcc' 'CCLINKSHARED=gcc' 'CXX=g++' 'CXXLINKEXE=g++' 'CXXLINKSHARED=g++' 'RM=rm -f' 'OMR_HOST_OS=linux' 'OMR_HOST_ARCH=x86' 'OMR_TARGET_DATASIZE=64' 'OMR_TOOLCHAIN=gcc'

configure: WARNING: unrecognized options: --enable-OMR_GC_STACCATO

checking build system type... x86_64-pc-linux-gnu

checking host system type... x86_64-pc-linux-gnu

checking OMR_HOST_OS... linux

checking OMR_HOST_ARCH... x86

checking OMR_TARGET_DATASIZE...

checking whether the C compiler works... yes

checking for C compiler default output file name... a.out

checking for suffix of executables...

checking whether we are cross compiling... no

checking for suffix of object files... o

checking whether we are using the GNU C compiler... yes

checking whether /usr/bin/gcc accepts -g... yes

checking for /usr/bin/gcc option to accept ISO C89... none needed

Comparing the configuration logs in OMR and OpenJDK indicates that the sysroot path didn't get picked up when validating the cross-compiler on the OMR side:

configure:4753: checking whether we are using the GNU C compiler

configure:4772: /opt/riscv_gnu_toolchain/bin/riscv64-unknown-linux-gnu-gcc -c conftest.c >&5

configure:4772: $? = 0

configure: failed program was:

| /* confdefs.h */

| #define PACKAGE_NAME "OMR"

...

configure:4781: result: no

compared with the config.log in OpenJDK:

configure:37042: checking whether we are using the GNU C compiler

----> configure:37061: /opt/riscv_gnu_toolchain/bin/riscv64-unknown-linux-gnu-gcc -c --sysroot=/opt/riscv_gnu_toolchain/sysroot --sysroot=/opt/riscv_gnu_toolchain/sysroot conftest.c >&5

configure:37061: $? = 0

configure:37070: result: yes

Will further investigate to figure out how to fix the issue.

Investigation shows that the misleading checks on the cross-compiler were generated automatically by autoconf from the following macros in the configure script:

/omr/configure.ac

AC_PROG_CC() //Determine a C compiler to use. If CC is not already set in the environment, check for gcc and cc, then for other C compilers. Set output variable CC to the name of the compiler found.

AC_PROG_CXX() //Determine a C++ compiler to use. Check whether the environment variable CXX or CCC (in that order) is set; if so, then set output variable CXX to its value.

These checks are meaningless in our case, as all of them have already been done by the configuration in OpenJDK, and the cross-compilers work well in the later compilation.

So the results of these macros should be ignored when cross-compiling for RISC-V.

Now moving on to the code-specific issues at runtime.

After uploading the compiled build to Fedora-riscv/QEMU, running java -version failed as shown below and crashed somewhere after that.

A snippet of the snap trace:

0x38f00 j9vm.519 > initializeImpl for java/lang/String (0000000000045D00)

0x38f00 j9vm.520 - call preinit hook

0x38f00 j9vm.20 > sendClinit

0x38f00 j9vm.179 > javaLookupMethod(vmStruct 0000000000038F00, targetClass 0000000000045D00, nameAndSig 0000002000B7DDF0, senderClass 0000000000000000, lookupOptions 24612)

0x38f00 j9vm.180 - javaLookupMethod - methodName <clinit>

0x38f00 j9vm.538 - searching methods from 0000000000043938 using linear search

0x38f00 j9vm.181 < exit javaLookupMethod resultMethod 0000000000044BB8

0x38f00 j9vm.222 - sendClinit - class java/lang/String

0x38f00 j9vm.21 -------> < sendClinit (no <clinit> found)

compared with the trace on x86/AMD64:

0x12cea00 j9vm.519 > initializeImpl for java/lang/String (00000000012DB500)

0x12cea00 j9vm.520 - call preinit hook

0x12cea00 j9vm.20 > sendClinit

0x12cea00 j9vm.179 > javaLookupMethod(vmStruct 00000000012CEA00, targetClass 00000000012DB500, nameAndSig 00007F039D8CD8A0, senderClass 0000000000000000, lookupOptions 24612)

0x12cea00 j9vm.180 - javaLookupMethod - methodName <clinit>

0x12cea00 j9vm.538 - searching methods from 00000000012D9138 using linear search

0x12cea00 j9vm.181 < exit javaLookupMethod resultMethod 00000000012DA3B8

0x12cea00 j9vm.222 - sendClinit - class java/lang/String

0x12cea00 j9vm.580 > resolveStaticFieldRef(method=0000000000000000, ramCP=00000000012DA3E0, cpIndex=222, flags=20, returnAddress=0000000000000000)

0x12cea00 j9vm.142 > resolveClassRef(ramCP=00000000012DA3E0, cpIndex=83, flags=20)

...

Looking at the code in callin:

void JNICALL
sendClinit(J9VMThread *currentThread, J9Class *clazz)
{
	Trc_VM_sendClinit_Entry(currentThread);
	J9VMEntryLocalStorage newELS;
	if (buildCallInStackFrame(currentThread, &newELS, false, false)) {
		/* Lookup the method */
		J9Method *method = (J9Method*)javaLookupMethod(currentThread, clazz, (J9ROMNameAndSignature*)&clinitNameAndSig, NULL, J9_LOOK_STATIC | J9_LOOK_NO_CLIMB | J9_LOOK_NO_THROW | J9_LOOK_DIRECT_NAS);
		/* If the method was found, run it */
		if (NULL != method) {
			Trc_VM_sendClinit_forClass(
				currentThread,
				J9UTF8_LENGTH(J9ROMCLASS_CLASSNAME(clazz->romClass)),
				J9UTF8_DATA(J9ROMCLASS_CLASSNAME(clazz->romClass)));
			currentThread->returnValue = J9_BCLOOP_RUN_METHOD;
			currentThread->returnValue2 = (UDATA)method;
			c_cInterpreter(currentThread); /* <------- to be implemented in the case of RISC-V */
		}
		restoreCallInFrame(currentThread);
	}
	Trc_VM_sendClinit_Exit(currentThread); /* <------- "< sendClinit (no <clinit> found)" was sent out from here */
}

The failure above indicates that the code for c_cInterpreter(currentThread) was missing on RISC-V.

As discussed with @gacholio previously, given that the JIT is disabled, the code here (which directly calls the interpreter function) can be written as a C wrapper with an assertion to ensure that one of the two return values is "return from callin". I will compile the build to see how far it gets with this piece of code.

The newly added C code for c_cInterpreter(currentThread) seems to work, but the VM hangs somewhere later on:

[root@stage4]# uname -a

Linux stage4.fedoraproject.org 4.19.0-rc8 #1 SMP Wed Oct 17 15:11:25 UTC 2018 riscv64 riscv64 riscv64 GNU/Linux

[root@stage4]# jdk11_rv64_openj9/bin/java -Xtrace:iprint=all -version

...

0x38f00 j9vm.519 > initializeImpl for java/lang/String (0000000000045D00)

0x38f00 j9vm.520 - call preinit hook

0x38f00 j9vm.20 > sendClinit

0x38f00 j9vm.179 > javaLookupMethod(vmStruct 0000000000038F00, targetClass 0000000000045D00, nameAndSig 0000002000B7DDF0, senderClass 0000000000000000, lookupOptions 24612)

0x38f00 j9vm.180 - javaLookupMethod - methodName <clinit>

0x38f00 j9vm.538 - searching methods from 0000000000043938 using linear search

0x38f00 j9vm.181 < exit javaLookupMethod resultMethod 0000000000044BB8

0x38f00 j9vm.222 - sendClinit - class java/lang/String

0x38f00 j9vm.580 > resolveStaticFieldRef(method=0000000000000000, ramCP=0000000000044BE0, cpIndex=222, flags=20, returnAddress=0000000000000000)

0x38f00 j9vm.142 > resolveClassRef(ramCP=0000000000044BE0, cpIndex=83, flags=20)

...

0x38f00 j9vm.124 < getMethodOrFieldID --> result=000000200419FD18

0x38f00 j9jcl.265 - Java_sun_misc_Unsafe_registerNatives

0x38f00 omrport.333 > omrmem_allocate_memory byteAmount=480 callSite=jnimisc.cpp:827

0x38f00 omrport.322 < omrmem_allocate_memory returns 00000020040099C0

0x38f00 j9vm.361 > Attempting to acquire exclusive VM access.

0x38f00 j9vm.366 - First thread to try for exclusive access. Setting the exclusive access state to J9_XACCESS_PENDING

<----- hang occurred somewhere

...

Need to figure out what happened in there.

After adding tracepoints to the code, the snap trace shows that it got stuck in mprotect(addr, pageSize, PROT_READ | PROT_WRITE) when calling flushProcessWriteBuffers from acquireExclusiveVMAccess():

19:26:22.079 0x38f00 j9vm.361 > Attempting to acquire exclusive VM access.

19:26:22.080 0x38f00 j9vm.366 - First thread to try for exclusive access. Setting the exclusive access state to J9_XACCESS_PENDING

19:26:22.096 0x38f00 j9vm.600 - acquireExclusiveVMAccess: CALL flushProcessWriteBuffers

19:26:22.134 0x38f00 j9vm.604 > -->Enter flushProcessWriteBuffers

19:26:22.497 0x38f00 j9vm.605 - flushProcessWriteBuffers: ENTER omrthread_monitor_enter

19:26:22.500 0x38f00 j9vm.606 - flushProcessWriteBuffers: EXIT omrthread_monitor_enter

19:26:22.501 0x38f00 j9vm.607 ---> flushProcessWriteBuffers: ENTER mprotect PROT_READ | PROT_WRITE

<----- no EXIT from mprotect

Compare this with the code of acquireExclusiveVMAccess in runtime/vm/VMAccess.cpp:

acquireExclusiveVMAccess(J9VMThread * vmThread)
{
#if defined(J9VM_INTERP_TWO_PASS_EXCLUSIVE)
#if defined(J9VM_INTERP_ATOMIC_FREE_JNI_USES_FLUSH)
	flushProcessWriteBuffers(vm); /* <------------------------- */
#endif /* J9VM_INTERP_ATOMIC_FREE_JNI_USES_FLUSH */
	Assert_VM_true(0 == vm->exclusiveAccessResponseCount);
#endif /* J9VM_INTERP_TWO_PASS_EXCLUSIVE */
...

and flushProcessWriteBuffers in runtime/vm/FlushProcessWriteBuffers.cpp:

#if defined(J9VM_INTERP_ATOMIC_FREE_JNI_USES_FLUSH)
flushProcessWriteBuffers(J9JavaVM *vm)
{
...
#elif defined(LINUX) || defined(AIXPPC) /* WIN32 */
	if (NULL != vm->flushMutex) {
		omrthread_monitor_enter(vm->flushMutex);
		void *addr = vm->exclusiveGuardPage.address;
		UDATA pageSize = vm->exclusiveGuardPage.pageSize;
		int mprotectrc = mprotect(addr, pageSize, PROT_READ | PROT_WRITE); /* <----- */

As discussed with @gacholio, this is a new feature called "Enable atomic-free JNI", which is implemented on all platforms except ARM and OSX. Given that both ARM and RISC-V belong to the RISC (Reduced Instruction Set Computer) family, this feature should be disabled on RISC-V for now. I will modify the config/settings to see whether it works without the feature.

After fixing a few minor issues during compilation, the cross-built JDK now works functionally on Linux-RISCV/QEMU (downloaded from https://fedorapeople.org/groups/risc-v/disk-images/):

[root@stage4 RISCV_OPENJ9]# uname -a

Linux stage4.fedoraproject.org 4.19.0-rc8 #1 SMP Wed Oct 17 15:11:25 UTC 2018 riscv64 riscv64 riscv64 GNU/Linux

[root@stage4 RISCV_OPENJ9]# jdk11_rv64_openj9_v16/bin/java -version

openjdk version "11.0.4-internal" 2019-07-16

OpenJDK Runtime Environment (build 11.0.4-internal+0-adhoc.jincheng.openj9-openjdk-jdk11)

Eclipse OpenJ9 VM (build riscv_openj9_v2_uma-43a37e3, JRE 11 Linux riscv64-64-Bit Compressed References 20190528_000000 (JIT disabled, AOT disabled)

OpenJ9 - 43a37e3

OMR - 0dd3de9

JCL - a699a14 based on jdk-11.0.4+4)

[root@stage4 RISCV_OPENJ9]# cat HelloRiscv.java

public class HelloRiscv {
    public static void main(String[] args) {
        System.out.println("Hello, Linux/RISC-V");
    }
}

[root@stage4 RISCV_OPENJ9]# jdk11_rv64_openj9_v16/bin/javac HelloRiscv.java

[root@stage4 RISCV_OPENJ9]# jdk11_rv64_openj9_v16/bin/java HelloRiscv

Hello, Linux/RISC-V

Since DDR and other required libraries (X11, etc.) are disabled in the cross-build (due to the lack of support in the GNU cross-toolchain), I will start setting up the environment inside Linux-RISCV/QEMU by installing all the packages required for native compilation, to see whether it is feasible to compile a full-featured native build using the cross-built JDK above as the boot JDK.

Congrats @ChengJin01 on this major milestone!

Already set up the environment in Linux-RISCV/QEMU; currently working on the following issue detected during compilation:

```
java.lang.UnsatisfiedLinkError: awt (Not found in com.ibm.oti.vm.bootstrap.library.path)
    at java.base/java.lang.ClassLoader.loadLibraryWithPath(ClassLoader.java:1707)
    ...
    at build.tools.icondata.awt.ToBin.main(ToBin.java:35)
gmake[3]: *** [GensrcIcons.gmk:109: /root/RISCV_OPENJ9/openj9-openjdk-jdk11/build/linux-riscv64-normal-server-release/support/gensrc/java.desktop/sun/awt//AWTIcon32_java_icon16_png.java] Error 1
gmake[2]: *** [make/Main.gmk:112: java.desktop-gensrc-src] Error 2
```

There were a number of exceptions caused by the failure to locate the native awt library (/lib/libawt.so) in the boot JDK. That library could not be generated in the cross-build because the cross-toolchain lacks X11 support.

The native build should be able to generate the native awt library later in the compilation. I will therefore try modifying the related makefile scripts to see whether the following steps fix the issue:

1) Disable the code that loads the native awt library at the Java level when the boot JDK is a cross-build. This allows the native awt library to be compiled later in the first native build.

2) If everything works, replace the cross-build with the resulting native build as the boot JDK, since it already contains the native awt library. Then re-enable the Java loading code above and compile another native build.
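The sketch below is a rough illustration of step 1). It puts the library load behind a switch, so a cross-built boot JDK never tries to load libawt.so, while a native boot JDK (step 2) loads it as before. The property `hypothetical.skip.awt.native` and the class `ConditionalAwtLoad` are invented for this sketch. A real change would have to go into `Toolkit.loadLibraries()` (and `java.awt.image.ColorModel.loadLibraries()`) and into the build scripts that launch the boot JDK.

```java
import java.util.function.Consumer;

/**
 * Sketch of step 1): skip loading "awt" when the boot JDK is a cross-build
 * without libawt.so. The property name is hypothetical and is NOT an
 * existing OpenJDK or OpenJ9 option.
 */
public class ConditionalAwtLoad {
    // Hypothetical switch that the build scripts would pass only when the
    // boot JDK is the cross-built JDK, e.g. -Dhypothetical.skip.awt.native=true
    static final String SKIP_PROP = "hypothetical.skip.awt.native";

    private static boolean loaded = false;

    /**
     * Same shape as Toolkit.loadLibraries(), but the loader is injected so the
     * sketch can be exercised without a real libawt.so.
     * Returns true once the library has been loaded.
     */
    static synchronized boolean loadLibraries(Consumer<String> loader) {
        if (!loaded) {
            if (Boolean.getBoolean(SKIP_PROP)) {
                // Cross-built boot JDK: libawt.so does not exist, so do not try
                // to load it; 'loaded' stays false so a later call can retry.
                return false;
            }
            loader.accept("awt"); // Toolkit itself calls System.loadLibrary("awt")
            loaded = true;
        }
        return loaded;
    }

    public static void main(String[] args) {
        boolean ok = loadLibraries(System::loadLibrary);
        System.out.println(ok ? "libawt loaded" : "libawt loading skipped");
    }
}
```

Injecting the loader is only a convenience for testing. The JDK code calls `System.loadLibrary("awt")` directly inside a privileged action.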

@ChengJin01 does running with -Djava.awt.headless=true help? It puts the VM into a headless mode, which may avoid the need for AWT.

@DanHeidinga, unfortunately it doesn't work. According to the compilation log, the -Djava.awt.headless=true option is already specified in the existing script code, e.g.

```
Calling /root/RISCV_OPENJ9/jdk11_rv64_openj9_v16/bin/java
    -XX:+UseSerialGC -Xms32M -Xmx512M -XX:TieredStopAtLevel=1
    -Duser.language=en -Duser.country=US -Xshare:auto
    -Ddtd_home=/root/RISCV_OPENJ9/openj9-openjdk-jdk11/make/data/dtdbuilder
    -Djava.awt.headless=true   <------------------ already specified here
    -cp /root/RISCV_OPENJ9/openj9-openjdk-jdk11/build/linux-riscv64-normal-server-release/buildtools/jdk_tools_classes build.tools.dtdbuilder.DTDBuilder html32 ...
Exception in thread "main" java.lang.UnsatisfiedLinkError: awt (Not found in com.ibm.oti.vm.bootstrap.library.path)
    at java.base/java.lang.ClassLoader.loadLibraryWithPath(ClassLoader.java:1707)
    at java.base/java.lang.ClassLoader.loadLibraryWithClassLoader(ClassLoader.java:1672)
    at java.base/java.lang.System.loadLibrary(System.java:613)
    at java.desktop/java.awt.Toolkit$3.run(Toolkit.java:1395)
    ...
```

It seems the Java code in OpenJDK (specifically loadLibraries() in src/java.desktop/share/classes/java/awt/Toolkit.java) always loads the native library as long as X11 is enabled, regardless of the headless setting.
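This can be checked outside the build with a tiny program. It sets the headless property and then calls `System.loadLibrary` the same way `Toolkit.loadLibraries()` does. The class name is made up for this sketch. When it runs on a JDK without libawt.so, such as the cross-build, the load of `awt` would be expected to fail with an `UnsatisfiedLinkError` even though the property is set.

```java
/**
 * Sketch: java.awt.headless is only a system property. It does not stop an
 * explicit System.loadLibrary() call, which is what Toolkit.loadLibraries()
 * performs.
 */
public class HeadlessLoadCheck {
    /** Returns null if the library loaded, otherwise the error message. */
    static String tryLoad(String libName) {
        try {
            System.loadLibrary(libName);
            return null;
        } catch (UnsatisfiedLinkError e) {
            return String.valueOf(e.getMessage());
        }
    }

    public static void main(String[] args) {
        // Same effect on this property as passing -Djava.awt.headless=true.
        System.setProperty("java.awt.headless", "true");
        String lib = args.length > 0 ? args[0] : "awt";
        String err = tryLoad(lib);
        System.out.println("java.awt.headless=" + System.getProperty("java.awt.headless"));
        System.out.println(err == null ? "loaded " + lib : "UnsatisfiedLinkError: " + err);
    }
}
```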

Hi,

I have successfully managed to compile @ChengJin01's openjdk & openj9 on HiFive Unleashed (i.e., on physical hardware):

```
jv@unleashed:~/Projects/J9/openj9-openjdk-jdk11$ pwd
/home/jv/Projects/J9/openj9-openjdk-jdk11
jv@unleashed:~/Projects/J9/openj9-openjdk-jdk11$ uname -a
Linux unleashed 5.0.0-rc1-00028-g0a657e0d72f0 #2 SMP Sun Feb 17 07:27:02 GMT 2019 riscv64 GNU/Linux
jv@unleashed:~/Projects/J9/openj9-openjdk-jdk11$ head -n 6 /proc/cpuinfo
processor : 0
hart : 1
isa : rv64imafdc
mmu : sv39
uarch : sifive,rocket0
jv@unleashed:~/Projects/J9/openj9-openjdk-jdk11$ cd build/linux-riscv64-normal-server-release/images/jdk/
jv@unleashed:~/Projects/J9/openj9-openjdk-jdk11/build/linux-riscv64-normal-server-release/images/jdk$ ./bin/java -version
openjdk version "11.0.4-internal" 2019-07-16
OpenJDK Runtime Environment (build 11.0.4-internal+0-adhoc.jv.openj9-openjdk-jdk11)
Eclipse OpenJ9 VM (build riscv_openj9_v2_uma-25784ea1f, JRE 11 Linux riscv64-64-Bit Compressed References 20190604_000000 (JIT disabled, AOT disabled)
OpenJ9 - 25784ea1f
OMR - 0dd3de90
JCL - 6f627e2338 based on jdk-11.0.4+4)
jv@unleashed:~/Projects/J9/openj9-openjdk-jdk11/build/linux-riscv64-normal-server-release/images/jdk$ javac /tmp/HelloRISCV.java
jv@unleashed:~/Projects/J9/openj9-openjdk-jdk11/build/linux-riscv64-normal-server-release/images/jdk$ cat /tmp/HelloRISCV.java
public class HelloRISCV {
    public static void main(String[] args) {
        System.out.println("Hello, Linux/RISC-V");
    }
}
jv@unleashed:~/Projects/J9/openj9-openjdk-jdk11/build/linux-riscv64-normal-server-release/images/jdk$ ./bin/javac /tmp/HelloRISCV.java
jv@unleashed:~/Projects/J9/openj9-openjdk-jdk11/build/linux-riscv64-normal-server-release/images/jdk$ ./bin/java -cp /tmp HelloRISCV
Hello, Linux/RISC-V
jv@unleashed:~/Projects/J9/openj9-openjdk-jdk11/build/linux-riscv64-normal-server-release/images/jdk$
```

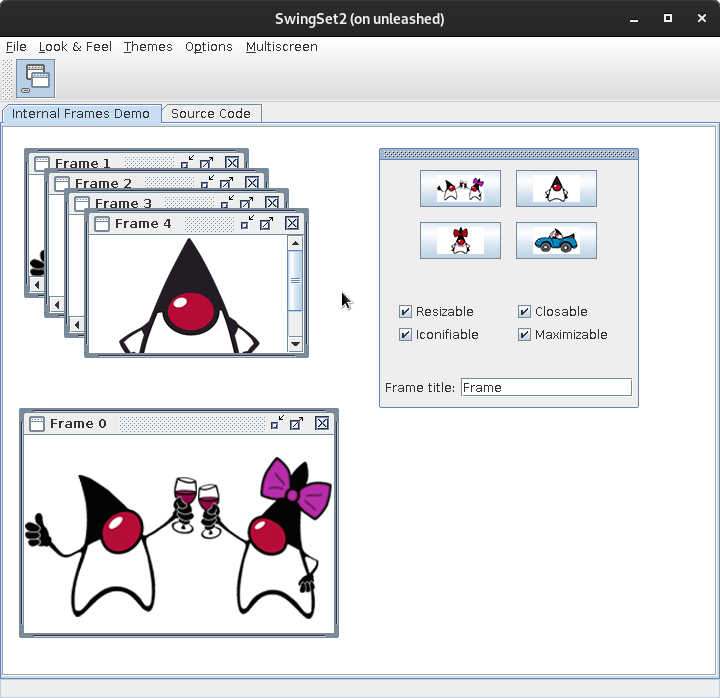

This is a native build on Debian (no cross-compilation). AWT seems to be working just fine. I tried

```
./bin/java -jar demo/jfc/SwingSet2/SwingSet2.jar
```

and the window opens as expected. However, it is very, very slow, which is expected since there's no JIT (not yet).

The whole process was fairly straightforward: essentially just `../configure` and then `make all`.

You did an amazing job, @ChengJin01.

@janvrany, I really appreciate that you confirmed the compilation on physical hardware before we did.

Here are a few questions I'd like to check with you:

1) Where is your boot JDK? It was supposed to be built with the cross-toolchain, because there is no way to compile a native build without the cross-build as the boot JDK.

2) If the native build was compiled via the cross-build, it should in theory fail with an exception when loading the native awt library. I already disabled the generation of this library in the cross-build (the cross-toolchain lacks X11 support), so the cross-build has no awt support.

3) Please check whether the following lines exist in your build.log for both the native build and the cross-build, e.g.

```
Compiling 2788 files for java.desktop
...
Creating support/modules_libs/java.desktop/libawt_xawt.so from 57 file(s)
Creating support/modules_libs/java.desktop/libawt.so from 73 file(s)
Creating support/modules_libs/java.desktop/libawt_headless.so from 26 file(s)
```

These messages should not appear in the cross-build's log.

4) As for `./bin/java -jar demo/jfc/SwingSet2/SwingSet2.jar`, did all the pictures show up correctly, including the interlaced ones? If so, JNI/riscv_ffi works well.
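Question 3) can be answered without reading through the whole log. A small scan for the `Creating ... libawt*.so` lines shown above is enough. The sketch below is only an illustration: the class name and the default `build.log` path are assumptions. It reports which AWT libraries the log says were created.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class AwtBuildLogCheck {
    // Matches lines such as:
    //   Creating support/modules_libs/java.desktop/libawt.so from 73 file(s)
    private static final Pattern AWT_LIB = Pattern.compile(
            "Creating support/modules_libs/java\\.desktop/(libawt\\w*\\.so) from \\d+ file\\(s\\)");

    /** Returns the AWT native libraries the log says were built, in log order. */
    static List<String> awtLibsBuilt(List<String> logLines) {
        List<String> found = new ArrayList<>();
        for (String line : logLines) {
            Matcher m = AWT_LIB.matcher(line);
            if (m.find()) {
                found.add(m.group(1));
            }
        }
        return found;
    }

    public static void main(String[] args) throws IOException {
        Path log = Paths.get(args.length > 0 ? args[0] : "build.log");
        List<String> built = awtLibsBuilt(Files.readAllLines(log));
        // An empty result is what the cross-build log is expected to give.
        System.out.println(built.isEmpty() ? "no AWT native libraries built" : "built: " + built);
    }
}
```

`Files.readAllLines` decodes the file as UTF-8. A log containing other byte sequences would need an explicit charset.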

> 1) Where is your boot JDK? It was supposed to be built with the cross-toolchain, because there is no way to compile a native build without the cross-build as the boot JDK.

You caught me. I cheated. I did not want to go through the pain of setting up a cross-compilation environment, and I have had enough of that, as @shingarov mentioned above. So instead I use OpenJDK 11 in zero mode, which is available in Debian's riscv64 repos. That works just fine, but it is very slow and I'm impatient. So I cheated even more and created a "fake" JDK 11 by providing java (javac and so on) commands that run the real java on a remote x86-64 server over ssh. I guess you don't like it, but it did work. The latter is not needed; you just need to wait loooooonger.

> 2) If the native build was compiled via the cross-build, it should in theory fail with an exception when loading the native awt library. I already disabled the generation of this library in the cross-build (the cross-toolchain lacks X11 support), so the cross-build has no awt support.

As I said, no cross-build was involved, and my RISC-V Debian has all the X11, ALSA, CUPS and whatnot libraries ready.

> 3) Please check whether the following lines exist in your build.log for both the native build and the cross-build, e.g.

Hmm... I cannot find them. Maybe that's because I re-ran `make all` a few times while I was tuning NFS params for my fake JDK (NFS attribute caching does not play well with such a hack). I'll remove everything and launch the build tonight, and I'll let you know tomorrow.

> 4) As for `./bin/java -jar demo/jfc/SwingSet2/SwingSet2.jar`, did all the pictures show up correctly, including the interlaced ones? If so, JNI/riscv_ffi works well.

Not sure which pictures exactly, but see attached screenshots of what I see.

HTH

> So instead I use OpenJDK 11 in zero mode, which is available in Debian's riscv64 repos. That works just fine, but it is very slow and I'm impatient. So I cheated even more and created a "fake" JDK 11 by providing ...

That explains how you got past the troublesome issue with the native awt library: it is provided by OpenJDK/zero-mode on Debian.

In any case, we still prefer to compile a full-featured native build via the cross-build, using our own changes, rather than by other means. Doing so confirms that cross-builds and native builds can be compiled with the same changes.

> Hmm... I cannot find them. Maybe that's because I re-ran `make all` a few times while I was tuning NFS params for my fake JDK...

These messages should show up in the build.log of your native build. Please check whether the following libraries exist in your native build:

```
lib/libawt_xawt.so
lib/libawt.so
lib/libawt_headless.so
```

If they don't, java.desktop was not compiled correctly and all the awt-related native libraries are missing from the native build. (I am still working on this part, so the changes there have yet to be updated.)
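The same check can be scripted against an image directory. This small sketch (the class name is made up) takes the JDK image path, e.g. `.../images/jdk`, and reports which of the three libraries above are missing from its `lib` directory.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

public class AwtLibPresence {
    // The three AWT libraries listed above, relative to <jdk>/lib.
    static final List<String> AWT_LIBS =
            List.of("libawt_xawt.so", "libawt.so", "libawt_headless.so");

    /** Returns the AWT libraries that are NOT present under jdkHome/lib. */
    static List<String> missingAwtLibs(Path jdkHome) {
        Path lib = jdkHome.resolve("lib");
        List<String> missing = new ArrayList<>();
        for (String name : AWT_LIBS) {
            if (!Files.isRegularFile(lib.resolve(name))) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // e.g. build/linux-riscv64-normal-server-release/images/jdk
        Path jdkHome = Paths.get(args.length > 0 ? args[0] : System.getProperty("java.home"));
        List<String> missing = missingAwtLibs(jdkHome);
        System.out.println(missing.isEmpty() ? "all AWT libraries present" : "missing: " + missing);
    }
}
```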

> Not sure which pictures exactly, but see attached screenshots of what I see.

The pictures are correct, which confirms that the changes to JNI/riscv_ffi work well.

> In any case, we still prefer to compile a full-featured native build via the cross-build, using our own changes, rather than by other means. Doing so confirms that cross-builds and native builds can be compiled with the same changes.

Sure, I was just curious what it would take. In the long run one has to do it the proper way, no question about it.

> Hmm... I cannot find them. Maybe that's because I re-ran `make all` a few times while I was tuning NFS params for my fake JDK...

> lib/libawt_xawt.so lib/libawt.so lib/libawt_headless.so

Yes, they do exist in my .../images/jdk/lib directory.

That means the native build was compiled correctly with OpenJDK/zero-mode on Debian/riscv64 as the boot JDK.

It seems the steps mentioned previously (splitting the compilation into two steps) work for case 1) but not for case 2):

case 1): JNI-related Java code that doesn't depend on the native awt library.

case 2): JNI-related Java code that must load the native awt library to call the native method initIDs(), even though the native awt library doesn't exist in the cross-build.

The detailed explanation is in src/java.desktop/share/classes/java/awt/Toolkit.java:

```java
/**
 * Initialize JNI field and method ids
 */
private static native void initIDs();

/**
 * WARNING: This is a temporary workaround for a problem in the
 * way the AWT loads native libraries. A number of classes in the
 * AWT package have a native method, initIDs(), which initializes
 * the JNI field and method ids used in the native portion of
 * their implementation.
 *
 * Since the use and storage of these ids is done by the
 * implementation libraries, the implementation of these method is
 * provided by the particular AWT implementations (for example,
 * "Toolkit"s/Peer), such as Motif, Microsoft Windows, or Tiny. The
 * problem is that this means that the native libraries must be
 * loaded by the java.* classes, which do not necessarily know the
 * names of the libraries to load. A better way of doing this
 * would be to provide a separate library which defines java.awt.*
 * initIDs, and exports the relevant symbols out to the
 * implementation libraries.
 *
 * For now, we know it's done by the implementation, and we assume
 * that the name of the library is "awt".  -br.
 *
 * If you change loadLibraries(), please add the change to
 * java.awt.image.ColorModel.loadLibraries(). Unfortunately,
 * classes can be loaded in java.awt.image that depend on
 * libawt and there is no way to call Toolkit.loadLibraries()
 * directly.  -hung
 */
private static boolean loaded = false;
static void loadLibraries() {
    if (!loaded) {
        java.security.AccessController.doPrivileged(
            new java.security.PrivilegedAction<Void>() {
                public Void run() {
                    System.loadLibrary("awt");
                    return null;
                }
            });
        loaded = true;
    }
}
```

As mentioned in the comment above:

> A better way of doing this would be to provide a separate library which defines java.awt.* initIDs, and exports the relevant symbols out to the implementation libraries.

This means we might have to move initIDs() out of awt into a separate library, which could then be generated in the cross-build. A remaining question is whether this covers all the situations we encounter here, which needs to be investigated case by case.
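The Java side of this proposal could look roughly like the sketch below. The library name `awt_initids` and the class are hypothetical. Classes whose static initializers only need `initIDs()` (case 2) would load a small library with no X11 dependency, which the cross-toolchain could build. The full `awt` library would be loaded only when real toolkit functionality is needed. The sketch does not cover the native side, i.e. moving the `initIDs()` C implementations out of libawt and adjusting the makefiles. That is where most of the work, and the open question above, would lie.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

public class SplitInitIdsSketch {
    // Hypothetical small library exporting only the java.awt.* initIDs()
    // implementations, with no X11 dependency.
    static final String INIT_IDS_LIB = "awt_initids";
    // The existing full AWT implementation library.
    static final String FULL_AWT_LIB = "awt";

    private static boolean idsLoaded = false;
    private static boolean awtLoaded = false;

    /** For static initializers that only need to run initIDs(). */
    static synchronized void loadInitIdsLibrary(Consumer<String> loader) {
        if (!idsLoaded) {
            loader.accept(INIT_IDS_LIB);
            idsLoaded = true;
        }
    }

    /** For code paths that need the real toolkit (rendering, peers, ...). */
    static synchronized void loadFullAwt(Consumer<String> loader) {
        loadInitIdsLibrary(loader);
        if (!awtLoaded) {
            loader.accept(FULL_AWT_LIB);
            awtLoaded = true;
        }
    }

    public static void main(String[] args) {
        List<String> order = new ArrayList<>();
        loadInitIdsLibrary(order::add); // enough for the build tools (case 2)
        loadFullAwt(order::add);        // only when a real toolkit is needed
        System.out.println(order);      // prints [awt_initids, awt]
    }
}
```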