Models: Something bad has happened with graph! Data node "Preprocessor/mul" has 2 producers

1. The entire URL of the file you are using

/content/models/research/object_detection/model_main.py

2. Describe the bug

A change made on July 1, 2020 broke OpenVINO blob conversion for the object detection model mobilenetssdv2.

The error does not occur if I switch to commit hash 58d19c67e1d30d905dd5c6e5092348658fed80af before training.
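
For anyone who wants to script the workaround, here is a minimal sketch of pinning the repository to that commit before training. The repository location `/content/models` is an assumption inferred from the paths in this report, and the helper names are made up for illustration.

```python
import subprocess

# Commit hash quoted in this report as the last state that still converts.
KNOWN_GOOD_COMMIT = "58d19c67e1d30d905dd5c6e5092348658fed80af"


def checkout_command(repo_dir, commit=KNOWN_GOOD_COMMIT):
    """Build the git command that checks out `commit` inside `repo_dir`."""
    return ["git", "-C", repo_dir, "checkout", commit]


def pin_models_repo(repo_dir="/content/models"):
    """Run the checkout (assumed clone location); raises CalledProcessError on failure."""
    subprocess.run(checkout_command(repo_dir), check=True)
```

Call `pin_models_repo()` after cloning tensorflow/models and before starting `model_main.py`.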

3. Steps to reproduce

This notebook shows the issue under the convert section

4. Expected behavior

The convert stage should show:

[ SUCCESS ] Generated IR version 10 model.

[ SUCCESS ] XML file: /content/models/research/fine_tuned_model/./IR_V10_fruits_mnssdv2_6k/frozen_inference_graph.xml

[ SUCCESS ] BIN file: /content/models/research/fine_tuned_model/./IR_V10_fruits_mnssdv2_6k/frozen_inference_graph.bin

[ SUCCESS ] Total execution time: 25.72 seconds.

[ SUCCESS ] Memory consumed: 1940 MB.
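
When the conversion runs inside a notebook, it can help to check the captured output programmatically. The sketch below only relies on the `[ SUCCESS ]` and `[ ERROR ]` line prefixes visible in the logs in this report; the function name and the returned dictionary layout are made up for illustration.

```python
import re

# Matches lines such as "[ SUCCESS ] XML file: /path/to/model.xml".
SUCCESS_FILE = re.compile(r"^\[ SUCCESS \] (XML|BIN) file: (.+)$")


def summarize_mo_log(log_text):
    """Collect the IR file paths and any error lines from Model Optimizer output."""
    result = {"ok": False, "xml": None, "bin": None, "errors": []}
    for raw_line in log_text.splitlines():
        line = raw_line.strip()
        if line.startswith("[ ERROR ]"):
            result["errors"].append(line)
        elif line.startswith("[ SUCCESS ] Generated IR"):
            result["ok"] = True
        else:
            match = SUCCESS_FILE.match(line)
            if match:
                result[match.group(1).lower()] = match.group(2)
    return result
```

A conversion can then be treated as successful only if `ok` is true and `errors` is empty.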

5. Additional context

Convert log failure when using HEAD:

/content/models/research/fine_tuned_model

[setupvars.sh] OpenVINO environment initialized

[ WARNING ] Use of deprecated cli option --tensorflow_use_custom_operations_config detected. Option use in the following releases will be fatal. Please use --transformations_config cli option instead

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: /content/models/research/fine_tuned_model/frozen_inference_graph.pb

- Path for generated IR: /content/models/research/fine_tuned_model/./IR_V10_fruits_mnssdv2_6k

- IR output name: frozen_inference_graph

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP16

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: False

- Reverse input channels: True

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: /content/models/research/fine_tuned_model/pipeline.config

- Operations to offload: None

- Patterns to offload: None

- Use the config file: /opt/intel/openvino/deployment_tools/model_optimizer/extensions/front/tf/ssd_v2_support.json

Model Optimizer version: 2020.1.0-61-gd349c3ba4a

The Preprocessor block has been removed. Only nodes performing mean value subtraction and scaling (if applicable) are kept.

[ ANALYSIS INFO ] Your model looks like TensorFlow Object Detection API Model.

Check if all parameters are specified:

--tensorflow_use_custom_operations_config

--tensorflow_object_detection_api_pipeline_config

--input_shape (optional)

--reverse_input_channels (if you convert a model to use with the Inference Engine sample applications)

Detailed information about conversion of this model can be found at

https://docs.openvinotoolkit.org/latest/_docs_MO_DG_prepare_model_convert_model_tf_specific_Convert_Object_Detection_API_Models.html

[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (<class 'extensions.front.ChangePlaceholderTypes.ChangePlaceholderTypes'>): Something bad has happened with graph! Data node "Preprocessor/mul" has 2 producers
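
Since the error names a node in the `Preprocessor` scope, one way to investigate is to list the nodes in that scope for a graph frozen at HEAD and for one frozen at the older commit, then compare them. The sketch below is a diagnostic aid only and does not fix the conversion. It assumes TensorFlow 1.14 or later (for `tf.compat.v1`); the function names are hypothetical.

```python
def load_graph_nodes(pb_path):
    """Read a frozen TensorFlow graph (.pb) and return its list of NodeDef entries."""
    import tensorflow as tf  # imported lazily so the filter below works without TF

    graph_def = tf.compat.v1.GraphDef()
    with open(pb_path, "rb") as f:
        graph_def.ParseFromString(f.read())
    return list(graph_def.node)


def preprocessor_nodes(nodes, scope="Preprocessor/"):
    """Return (name, op, inputs) for every node whose name starts with `scope`."""
    return [(n.name, n.op, list(n.input)) for n in nodes if n.name.startswith(scope)]
```

For example, `preprocessor_nodes(load_graph_nodes("frozen_inference_graph.pb"))` prints the subgraph that the Model Optimizer replacer has to handle.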

6. System information

Google Colab Notebook using:

%tensorflow_version 1.x

!pip install tf_slim

All 12 comments

Any update on this issue?

I have the same question. Is there any solution?

I successfully converted a mobilenetssdv2 model trained on my own dataset to OpenVINO IR.

tensorflow_version 1.14

tensorflow model: V1.13.0

lmn@Z7:~/openvino_ws/releases/2020.4/openvino/deployment_tools/model_optimizer$ python3 mo_tf.py --input_model ~/openvino_ws/openvino_models/models/me/ssd_mobilenet_v2_yunji_robot/frozen_inference_graph.pb --tensorflow_use_custom_operations_config extensions/front/tf/ssd_v2_support.json --tensorflow_object_detection_api_pipeline_config /media/lmn/D/ubuntu_backup/openvino_ws/openvino_models/models/me/ssd_mobilenet_v2_yunji_robot/pipeline.config --reverse_input_channels --output_dir ~/openvino_ws/openvino_models/ir/me/ssd_mobilenet_v2_yunji_robot --data_type FP16

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: /home/lmn/openvino_ws/openvino_models/models/me/ssd_mobilenet_v2_yunji_robot/frozen_inference_graph.pb

- Path for generated IR: /home/lmn/openvino_ws/openvino_models/ir/me/ssd_mobilenet_v2_yunji_robot

- IR output name: frozen_inference_graph

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP16

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: False

- Reverse input channels: True

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: /media/lmn/D/ubuntu_backup/openvino_ws/openvino_models/models/me/ssd_mobilenet_v2_yunji_robot/pipeline.config

- Use the config file: /media/lmn/D/ubuntu_backup/openvino_ws/releases/2020.4/openvino_2020.4.287/deployment_tools/model_optimizer/extensions/front/tf/ssd_v2_support.json

Model Optimizer version:

The Preprocessor block has been removed. Only nodes performing mean value subtraction and scaling (if applicable) are kept.

[ SUCCESS ] Generated IR version 10 model.

[ SUCCESS ] XML file: /home/lmn/openvino_ws/openvino_models/ir/me/ssd_mobilenet_v2_yunji_robot/frozen_inference_graph.xml

[ SUCCESS ] BIN file: /home/lmn/openvino_ws/openvino_models/ir/me/ssd_mobilenet_v2_yunji_robot/frozen_inference_graph.bin

[ SUCCESS ] Total execution time: 41.02 seconds.

[ SUCCESS ] Memory consumed: 400 MB.
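
The command above uses `--tensorflow_use_custom_operations_config`, which the Model Optimizer warning in the original report marks as deprecated in favor of `--transformations_config`. Below is a minimal sketch of assembling the same invocation with the newer option. Every flag is taken from the logs in this thread; the helper name is hypothetical, and I have not checked which Model Optimizer releases accept the newer option.

```python
import shlex


def build_mo_command(input_model, pipeline_config, transformations_config,
                     output_dir, data_type="FP16"):
    """Assemble a mo_tf.py call for an Object Detection API SSD model."""
    return [
        "python3", "mo_tf.py",
        "--input_model", input_model,
        # Replaces the deprecated --tensorflow_use_custom_operations_config option.
        "--transformations_config", transformations_config,
        "--tensorflow_object_detection_api_pipeline_config", pipeline_config,
        "--reverse_input_channels",
        "--output_dir", output_dir,
        "--data_type", data_type,
    ]


def as_shell_line(command):
    """Render the argument list as a single, safely quoted shell line."""
    return " ".join(shlex.quote(arg) for arg in command)
```

Pass the list to `subprocess.run(..., check=True)` from the `model_optimizer` directory, or print `as_shell_line(...)` to paste into a terminal.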

@MengNan-Li

- I am struggling with the same issue. Could you please share the scripts you used for training with a new dataset?

- How did you install the specific TensorFlow-modelV1.13.0?

@prajnasb

I use the TF Object Detection API to fine-tune ssd_mobilenet_v2, so the script is just model_main.py.

research/object_detection python model_main.py --logtostderr --pipeline_config_path=/home/zfserver/lmn/tf_models/workspace/training_demo/training/ssd_mobilenet_v2_yunji_robot.config --model_dir=/home/zfserver/lmn/tf_models/workspace/training_demo/training

TensorFlow-modelV1.13.0: https://github.com/tensorflow/models/tree/v1.13.0

@MengNan-Li Thank you for the response.

@MengNan-Li Thank you so much, it worked. :)

@MengNan-Li Heya, do you have a script for inference?

The issue still exists in the master branch.

Which part of the new code causes this issue?

The only solution for exporting the model to OpenVINO is switching to commit hash 58d19c6.

Hello,

Any news on this subject?

I trained the ssdlite_mobilenet_v2_coco_2018_05_09 model with my own data on the latest version of the TensorFlow Object Detection API, but I cannot convert the model to OpenVINO.

I get the following error:

[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (

However, I succeeded in converting the ssdlite_mobilenet_v2_coco_2018_05_09 model provided by the model zoo.

I tried freezing the model after switching to commit hash 58d19c6, but I still get the same error.

Please let me know if you have any suggestions.

Hello,

I wrote a temporary patch for mobilenet/mobiledet and resnet_v1_50_coco with new Preprocessor.

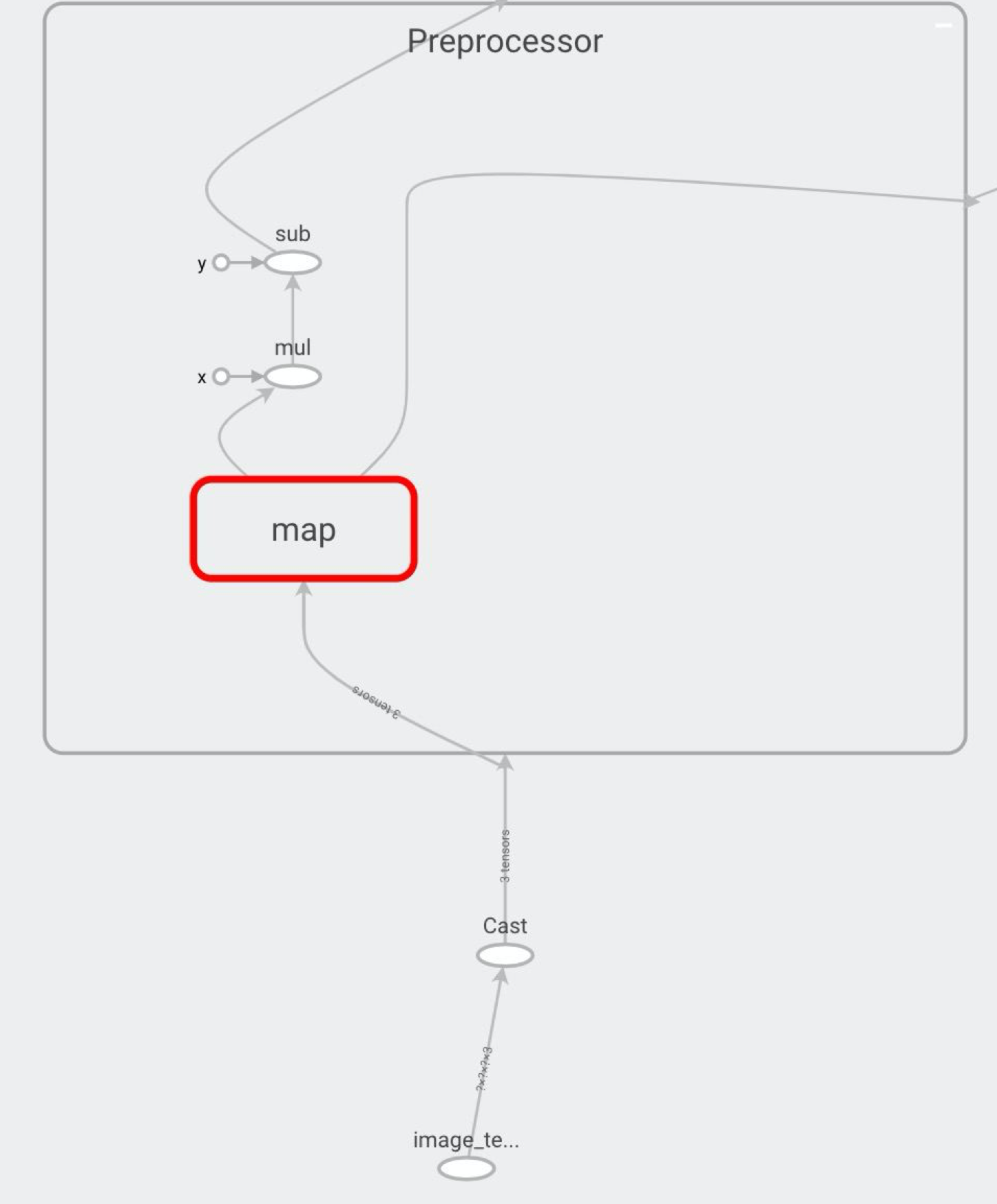

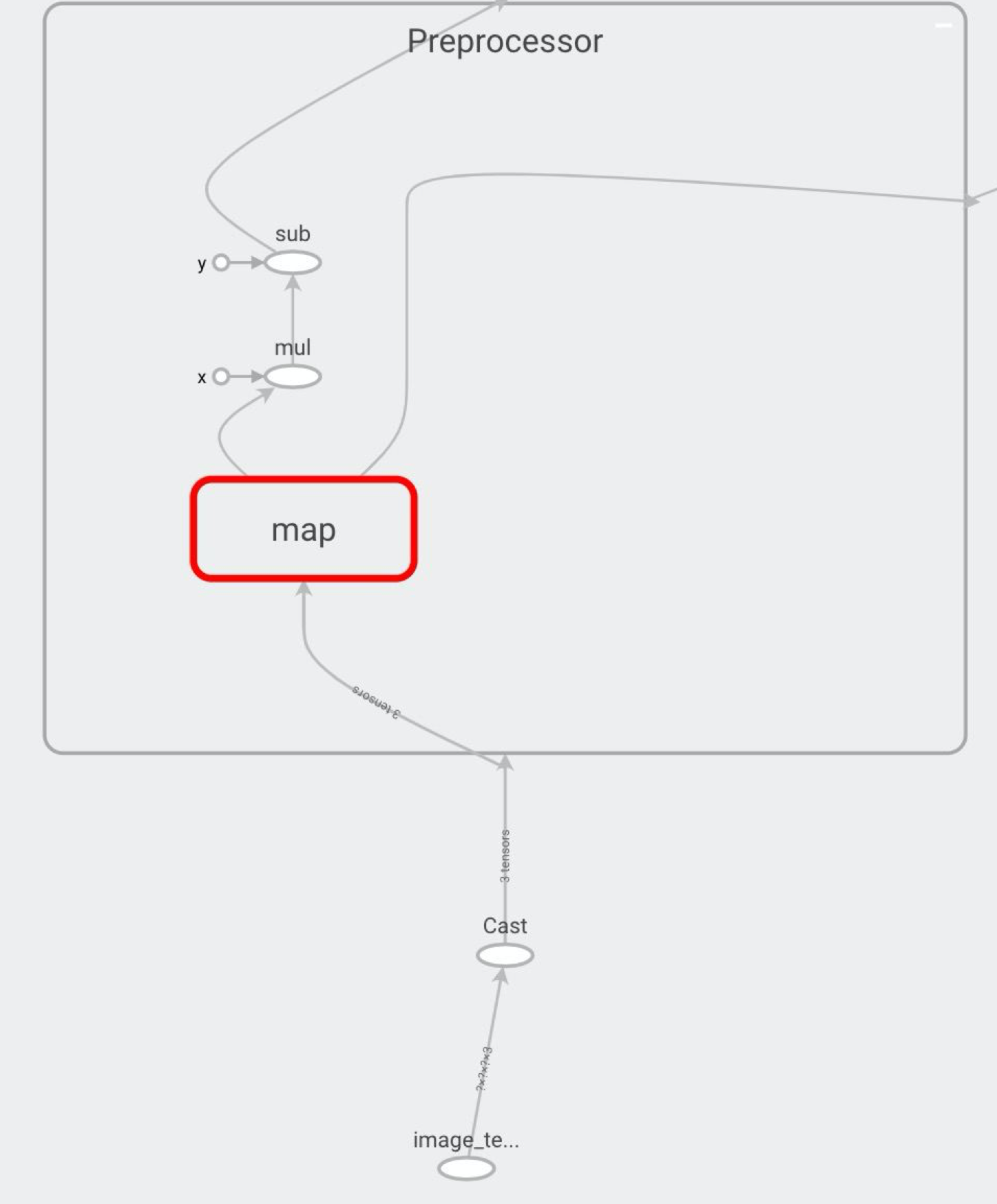

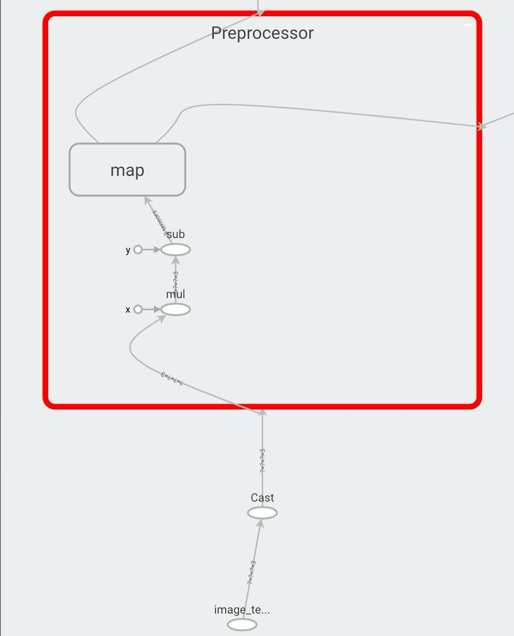

Old Preprocessor:

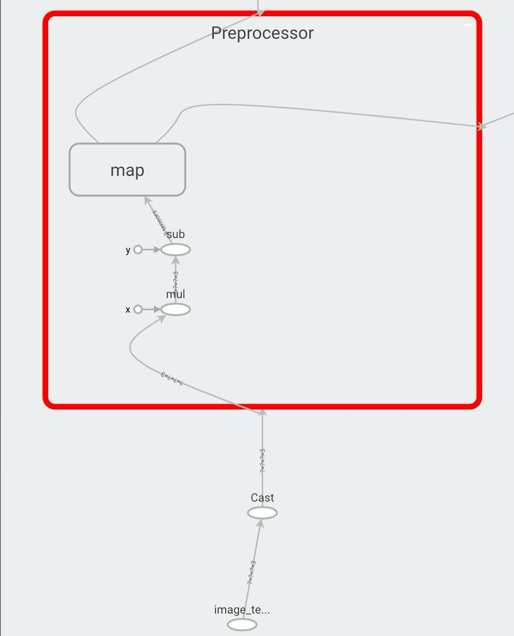

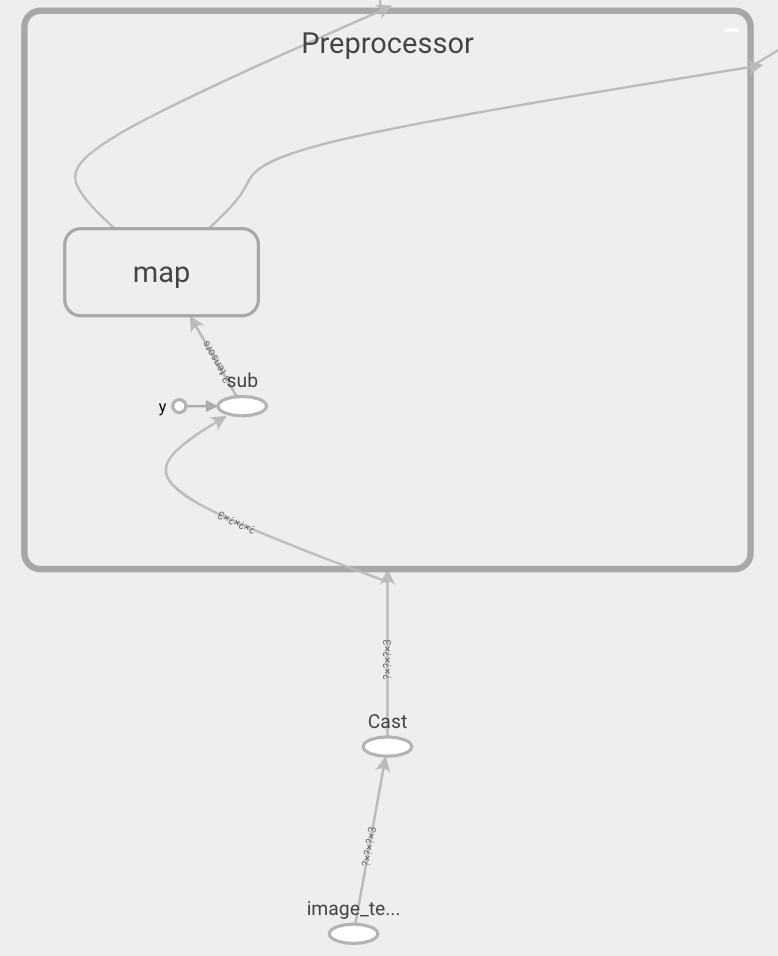

New Preprocessor:

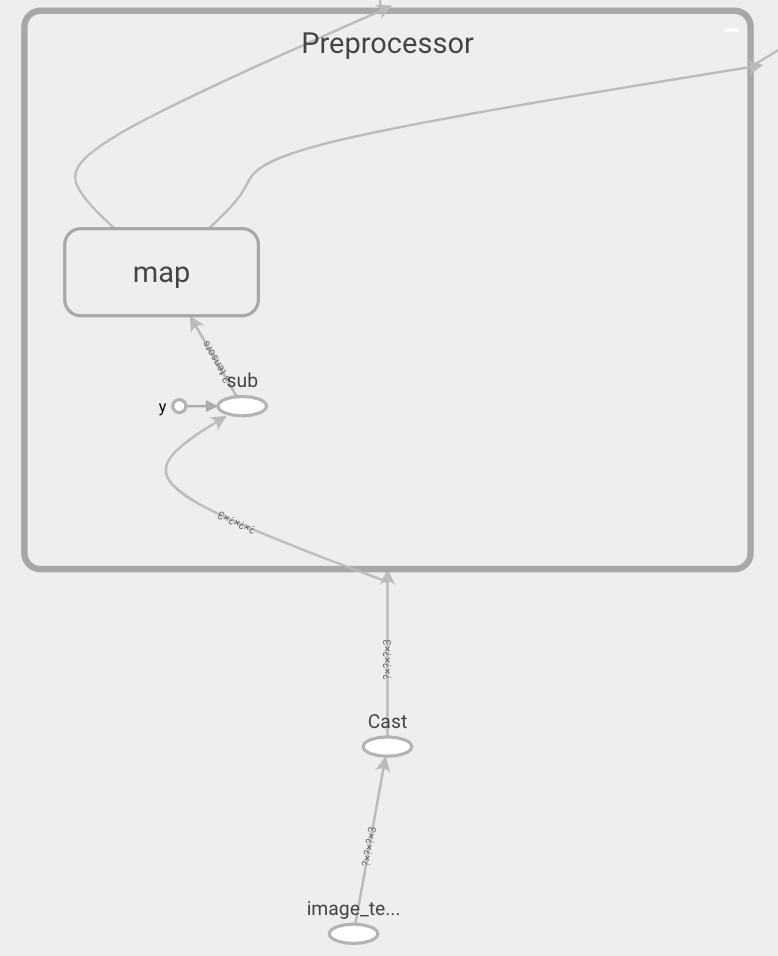

Mobilenet:

Resnet:

- Put ObjectDetectionAPIFix.py in /opt/intel/openvino/deployment_tools/model_optimizer/extensions/front/tf/

- Use ssd_support_api_v1.15_fix_mobile.json or ssd_support_api_v1.15_fix_resnet.json

- Use custom image preprocessing for Resnet: [123.68, 116.779, 103.939] (RGB)
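
The comment does not spell out what the Resnet preprocessing does with these numbers. They match the widely used ImageNet per-channel means, so the sketch below assumes they are meant to be subtracted from each pixel before inference. That reading, the function name, and the HWC image layout are all assumptions.

```python
import numpy as np

# Per-channel values quoted in the comment, in RGB order.
RESNET_MEAN_RGB = np.array([123.68, 116.779, 103.939], dtype=np.float32)


def subtract_channel_means(image, channel_order="RGB"):
    """Subtract the per-channel means from an H x W x 3 image and return float32."""
    if channel_order not in ("RGB", "BGR"):
        raise ValueError("channel_order must be 'RGB' or 'BGR'")
    # Reverse the means when the image is stored as BGR (e.g. as loaded by OpenCV).
    means = RESNET_MEAN_RGB if channel_order == "RGB" else RESNET_MEAN_RGB[::-1]
    return np.asarray(image, dtype=np.float32) - means
```

If the image comes from OpenCV, pass `channel_order="BGR"` so that each mean lines up with its channel.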

Hello,

Thanks to your patch I managed to convert the model to OpenVINO, but unfortunately inference doesn't work: I don't get any detections. That should not be the case, because inference with TensorFlow gives several detections.

Best,

Natacha.
