Models: TensorFlow gets stuck in the evaluation step after running local_init_op

OS: Ubuntu 18.04.1 LTS and 16.04

Tried on both CPU and GPU. Same error.

TensorFlow versions 1.1, 1.5 and 1.8.

Python 3.5 and 3.6.

TensorFlow was installed using pip.

Command prompt output showing the problem:

INFO:tensorflow:Creating AttentionDecoder in mode=eval

INFO:tensorflow:

AttentionDecoder:

init_scale: 0.04

max_decode_length: 100

rnn_cell:

cell_class: LSTMCell

cell_params: {num_units: 512}

dropout_input_keep_prob: 0.8

dropout_output_keep_prob: 1.0

num_layers: 4

residual_combiner: add

residual_connections: false

residual_dense: false

INFO:tensorflow:Creating ZeroBridge in mode=eval

INFO:tensorflow:

ZeroBridge: {}

WARNING:tensorflow:From /home/muhammad/Thesis/Ab/AutoViz/seq2seq/metrics/metric_specs.py:232: streaming_mean (from tensorflow.contrib.metrics.python.ops.metric_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Please switch to tf.metrics.mean

INFO:tensorflow:Starting evaluation at 2018-10-23-00:06:12

INFO:tensorflow:Graph was finalized.

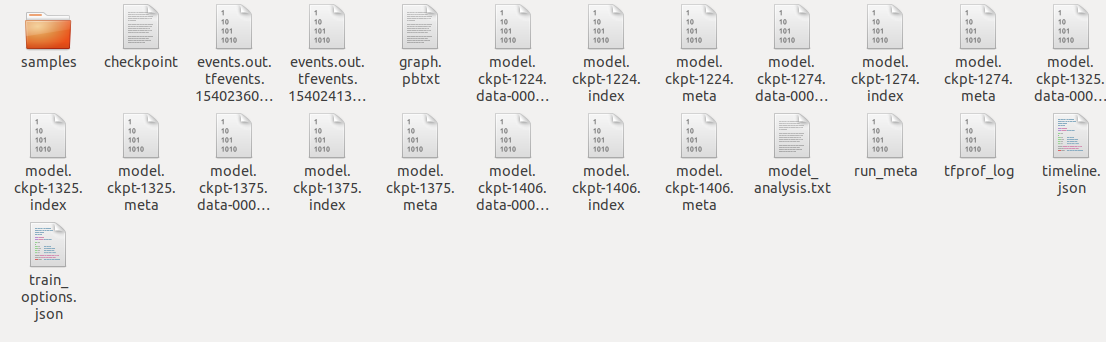

INFO:tensorflow:Restoring parameters from /home/muhammad/Thesis/Ab/AutoViz/Model/model.ckpt-1406

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

I have left it running for more than a day, but it remains stuck here. On the GPU the process is shown as running, but judging by the processor usage, the system does not seem to be doing anything.

Any help would be much appreciated.

Thank You.


Thank you for your post. We noticed you have not filled out the following fields in the issue template. Could you update them if they are relevant in your case, or leave them as N/A? Thanks.

- What is the top-level directory of the model you are using
- Have I written custom code
- OS Platform and Distribution
- TensorFlow installed from
- Bazel version
- CUDA/cuDNN version
- GPU model and memory
- Exact command to reproduce

- What is the top-level directory of the model you are using: N/A
- Have I written custom code: N/A
- OS Platform and Distribution: Windows 10 and Ubuntu (18.04 + 16.04)
- TensorFlow installed from: Python-Pip (TensorFlow versions used: 1.0, 1.1 and 1.8)
- Bazel version: N/A
- CUDA/cuDNN version: 8 and 9
- GPU model and memory: 940M (2GB)
- Exact command to reproduce: Just running the training sequence in Python 3.5 and Python 3.6.

@abdullahakmal Are checkpoint files being produced in the destination folder? Maybe it's just because TensorFlow logging is turned off by default.

No, it produces checkpoints successfully.

@abdullahakmal Yes, I mean the program is still running; only the logging output is turned off. You can add `tf.logging.set_verbosity(tf.logging.INFO)` to model_main.py so that the program logs the loss to the terminal.

Okay. I'll try that.

`tf.logging.set_verbosity(tf.logging.INFO)` is already set.

Was this resolved? I've just seen similar behavior on one of my jobs.

The same happens to me.

I am training an object detector using a custom dataset. What is your task?

Has anyone resolved this?

Any updates on that?

Execution also hangs after I get the following message:

INFO:tensorflow:Done running local_init_op.

I am able to continue training normally by pressing Ctrl+C and restarting from the latest checkpoint.

I moved to torch....

On Wed, 6 Feb 2019 at 21:39, alk15 notifications@github.com wrote:


You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

https://github.com/tensorflow/models/issues/5587#issuecomment-461197681,

or mute the thread

https://github.com/notifications/unsubscribe-auth/AY7cZS3AD2r_13_Tcv373IDOKls4PUFDks5vK0t5gaJpZM4X2X33

.

--

Best Regards,

Muhammad Abdullah Akmal

Data Scientist at Gamesessions,

Sheffield, South Yorkshire,

United Kingdom

I had the same issue. When I checked my code, I found this line in my eval_input_fn() function:

```python
dataset = dataset.repeat()
```

I removed it, and the issue was gone.

**This line of code will send your evaluation into an endless loop.**
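A minimal sketch of why this happens, assuming the documented `Estimator.evaluate` behaviour that `steps=None` evaluates until the input function signals end-of-input. This is plain Python, not TensorFlow code: `evaluate` and its `max_batches` safety cap are hypothetical names, `StopIteration` stands in for tf.data's end-of-input error, and `itertools.cycle` stands in for `dataset.repeat()`.

```python
import itertools
from typing import Iterable, Optional


def evaluate(batches: Iterable[list], steps: Optional[int] = None,
             max_batches: int = 1_000) -> int:
    """Count the batches an evaluation would consume.

    steps=None keeps reading until the input runs out. max_batches is a
    hypothetical safety cap so this demo terminates; the real loop has none.
    """
    count = 0
    iterator = iter(batches)
    while steps is None or count < steps:
        try:
            next(iterator)  # a real loop would run the model on this batch
        except StopIteration:  # stands in for tf.data's end-of-input error
            break
        count += 1
        if count >= max_batches:
            raise RuntimeError("input never ended: evaluation would hang")
    return count


eval_batches = [[1, 2], [3, 4], [5]]
print(evaluate(eval_batches))           # 3: finite input ends on its own
print(evaluate(eval_batches, steps=2))  # 2: explicit step limit
try:
    evaluate(itertools.cycle(eval_batches))  # like dataset.repeat()
except RuntimeError as err:
    print(err)  # input never ended: evaluation would hang
```

With a finite input the loop stops by itself; with an endlessly repeating input, only an explicit `steps` value (or removing the repeat) ends it.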

@LeoHirasawa

What script was that line located in?

Generally, in the `eval_input_fn()` function.

On Wed, 20 Mar 2019 at 22:34, Cavan09 notifications@github.com wrote:


@LeoHirasawa

Hi,

Could you specify where this function is located? I can't find it in the models folder...

I found that reducing the size of my test set helped model_main.py continue successfully. Initially I had tens of thousands of images in my test set, and model_main.py would hang forever (well, until my Colab session timed out). I reduced my test set to a few hundred images in total, and now things are working.

I didn't debug this any further to see whether there is a problem with loading too much training data, but hopefully this helps someone else get unstuck.

Hi,

I had the same problem and was able to fix the evaluation loop by adding `throttle_secs=k` (where k is the minimum number of seconds between evaluations) to the EvalSpec in object_detection/models/model_lib.py. Your file should look like this:

```python
eval_specs.append(
    tf.estimator.EvalSpec(
        name=eval_spec_name,
        input_fn=eval_input_fn,
        steps=None,
        exporters=exporter,
        throttle_secs=3600))
```
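For reference, the `EvalSpec` documentation describes `throttle_secs` as a minimum gap: do not re-evaluate unless the last evaluation started at least that many seconds ago, and never evaluate without a new checkpoint. The plain-Python function below is a hypothetical model of that rule, not TensorFlow code.

```python
from typing import Optional


def should_evaluate(now: float, last_eval_start: Optional[float],
                    throttle_secs: float, new_checkpoint: bool) -> bool:
    """Hypothetical model of the EvalSpec.throttle_secs rule, not TF code."""
    if not new_checkpoint:
        return False  # no new checkpoint: nothing new to evaluate
    if last_eval_start is None:
        return True   # no evaluation has run yet
    # Re-evaluate only if the previous evaluation started long enough ago.
    return now - last_eval_start >= throttle_secs


# With throttle_secs=3600, an evaluation that started at t=0 blocks
# another one until t=3600, even if new checkpoints exist.
print(should_evaluate(1800, 0, 3600, new_checkpoint=True))  # False
print(should_evaluate(3600, 0, 3600, new_checkpoint=True))  # True
```

Note that this only spaces evaluations apart; it does not limit how long a single evaluation runs.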

It seems that you forgot to set a repeat count in eval_input_fn.

For example, if you use a single input_fn for both training and evaluation:

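```python
import tensorflow as tf

def data_input_fn(file_path_list):
    with tf.name_scope("dataset"):
        # Read text files, skip the first (header) line, parse each row.
        # _parse_line is your own parsing function (not shown here).
        dataset = tf.data.TextLineDataset(filenames=file_path_list)
        dataset = dataset.skip(1).map(_parse_line)
        # repeat() with no count loops forever: fine for training, but an
        # evaluation with steps=None never reaches the end of the input.
        dataset = dataset.shuffle(10000).repeat().batch(32)
    return dataset
```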

Then you will run into this problem when evaluating the model on that dataset.

Using repeat() is fine for training if you use early stopping, but you should not use repeat() when evaluating on the dataset, or you will find that the program keeps running the evaluation forever.

Hi @wind-meta, when can I modify the data_input_fn?

Sorry, I don't understand your question. If you mean how to modify the function, just add a repeat_number parameter to avoid this problem.
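A sketch of that suggestion, using a plain-Python stand-in for the tf.data pipeline so it runs without TensorFlow: `repeat_number=None` mimics `dataset.repeat()` (repeat forever), while `repeat_number=1` gives the single pass that evaluation needs. In TensorFlow the equivalent change would be to pass the count through, e.g. `dataset.repeat(repeat_number)`. The helper names are hypothetical, and the full in-memory shuffle here is a simplification of tf.data's buffered `shuffle(10000)`.

```python
import itertools
import random
from typing import Iterator, List, Optional, Sequence


def _examples(records: Sequence[int], repeat_number: Optional[int],
              shuffle: bool, rng: random.Random) -> Iterator[int]:
    """Yield records pass by pass; repeat_number=None means forever."""
    passes = 0
    while repeat_number is None or passes < repeat_number:
        order = list(records)
        if shuffle:
            rng.shuffle(order)  # reshuffle on every pass
        yield from order
        passes += 1


def data_input_fn(records: Sequence[int], batch_size: int = 32,
                  repeat_number: Optional[int] = None,
                  shuffle: bool = True, seed: int = 0) -> Iterator[List[int]]:
    """Hypothetical analogue of shuffle().repeat(n).batch(batch_size)."""
    if len(records) == 0:
        return  # avoid spinning forever on an empty input
    stream = _examples(records, repeat_number, shuffle, random.Random(seed))
    while True:
        # Batching happens after repeating, so with repeat_number=None
        # batches can span pass boundaries, as in the pipeline above.
        batch = list(itertools.islice(stream, batch_size))
        if not batch:
            return  # input exhausted: the end-of-input signal
        yield batch


# Evaluation: one pass over 100 examples gives batches of 32, 32, 32 and 4.
eval_batches = list(data_input_fn(range(100), repeat_number=1, shuffle=False))
print([len(b) for b in eval_batches])  # [32, 32, 32, 4]

# Training: an endless stream; the caller decides how many batches to take.
train_batches = data_input_fn(range(100), repeat_number=None)
print(len(list(itertools.islice(train_batches, 10))))  # 10
```

Keeping one function with a repeat parameter lets training and evaluation share the parsing code while only evaluation is guaranteed to terminate.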

I solved this problem by downgrading the NVIDIA drivers to version 436.48.

I would also recommend verifying the TFRecord files, just in case.
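One way to check TFRecord files without TensorFlow is to walk the record layout (a little-endian uint64 length, a masked CRC-32C of the length bytes, the data, then a masked CRC-32C of the data) and report the first truncated or corrupt record. This is a sketch assuming uncompressed files (not GZIP/ZLIB); it only checks framing and checksums, not whether each record parses as a `tf.train.Example`, and the function names are hypothetical. Within TensorFlow 1.x, iterating over a file with `tf.python_io.tf_record_iterator` should also surface corrupt records.

```python
import struct

_CRC32C_POLY = 0x82F63B78  # reflected Castagnoli polynomial
_MASK_DELTA = 0xA282EAD8   # constant used by TFRecord CRC masking


def crc32c(data: bytes) -> int:
    """Bitwise CRC-32C (slow but dependency-free)."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = ((crc >> 1) ^ _CRC32C_POLY) if crc & 1 else (crc >> 1)
    return crc ^ 0xFFFFFFFF


def masked_crc32c(data: bytes) -> int:
    """Rotate the CRC right by 15 bits and add the mask delta (mod 2**32)."""
    crc = crc32c(data)
    return (((crc >> 15) | (crc << 17)) + _MASK_DELTA) & 0xFFFFFFFF


def encode_record(data: bytes) -> bytes:
    """Frame one record the way a TFRecord writer does (used for the demo)."""
    length = struct.pack("<Q", len(data))
    return (length + struct.pack("<I", masked_crc32c(length))
            + data + struct.pack("<I", masked_crc32c(data)))


def count_tfrecords(blob: bytes) -> int:
    """Return the number of records; raise ValueError on the first bad one."""
    offset, count = 0, 0
    while offset < len(blob):
        header = blob[offset:offset + 12]
        if len(header) < 12:
            raise ValueError(f"record {count}: truncated header")
        length, length_crc = struct.unpack("<QI", header)
        if masked_crc32c(header[:8]) != length_crc:
            raise ValueError(f"record {count}: length checksum mismatch")
        start, end = offset + 12, offset + 12 + length
        data, footer = blob[start:end], blob[end:end + 4]
        if len(data) < length or len(footer) < 4:
            raise ValueError(f"record {count}: truncated data")
        if masked_crc32c(data) != struct.unpack("<I", footer)[0]:
            raise ValueError(f"record {count}: data checksum mismatch")
        offset, count = end + 4, count + 1
    return count


blob = encode_record(b"first") + encode_record(b"second")
print(count_tfrecords(blob))  # 2
# For a real file: with open(path, "rb") as f: count_tfrecords(f.read())
```

The pure-Python CRC is slow on multi-gigabyte files, so this is better suited to spot-checking than to routine validation.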

In some cases, the evaluation may simply be taking a very long time (for example, because inference is running on the CPU with a very computationally intensive model, or because the eval dataset is too big).
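A back-of-the-envelope check can show whether a "hang" might just be a slow but finite evaluation. The figures below (20,000 versus 300 eval images, 0.5 s per single-image batch on CPU) are hypothetical and only illustrate the arithmetic; measure your own per-batch time. The estimate applies only to a finite eval set: with an unbounded `repeat()` the evaluation never finishes at any speed.

```python
import math


def estimated_eval_seconds(num_examples: int, batch_size: int,
                           seconds_per_batch: float) -> float:
    """Number of batches times the measured time per batch."""
    num_batches = math.ceil(num_examples / batch_size)
    return num_batches * seconds_per_batch


big = estimated_eval_seconds(20_000, batch_size=1, seconds_per_batch=0.5)
small = estimated_eval_seconds(300, batch_size=1, seconds_per_batch=0.5)
print(f"{big / 3600:.1f} h vs {small / 60:.1f} min")  # 2.8 h vs 2.5 min
```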

My evaluation was stuck on

"estimator.evaluation taking too long"

I passed `shuffle=False` to `input_function_of_eval`.

I also ran into the same problem, and I limited the size of the eval dataset.

@abdullahakmal

Is this still an issue?

Please close this thread if your issue was resolved. Thanks!

Automatically closing due to lack of recent activity. Please update the issue when new information becomes available, and we will reopen the issue. Thanks!
