MixedRealityToolkit-Unity: Proposal: Reworking internal execution paths

Overview

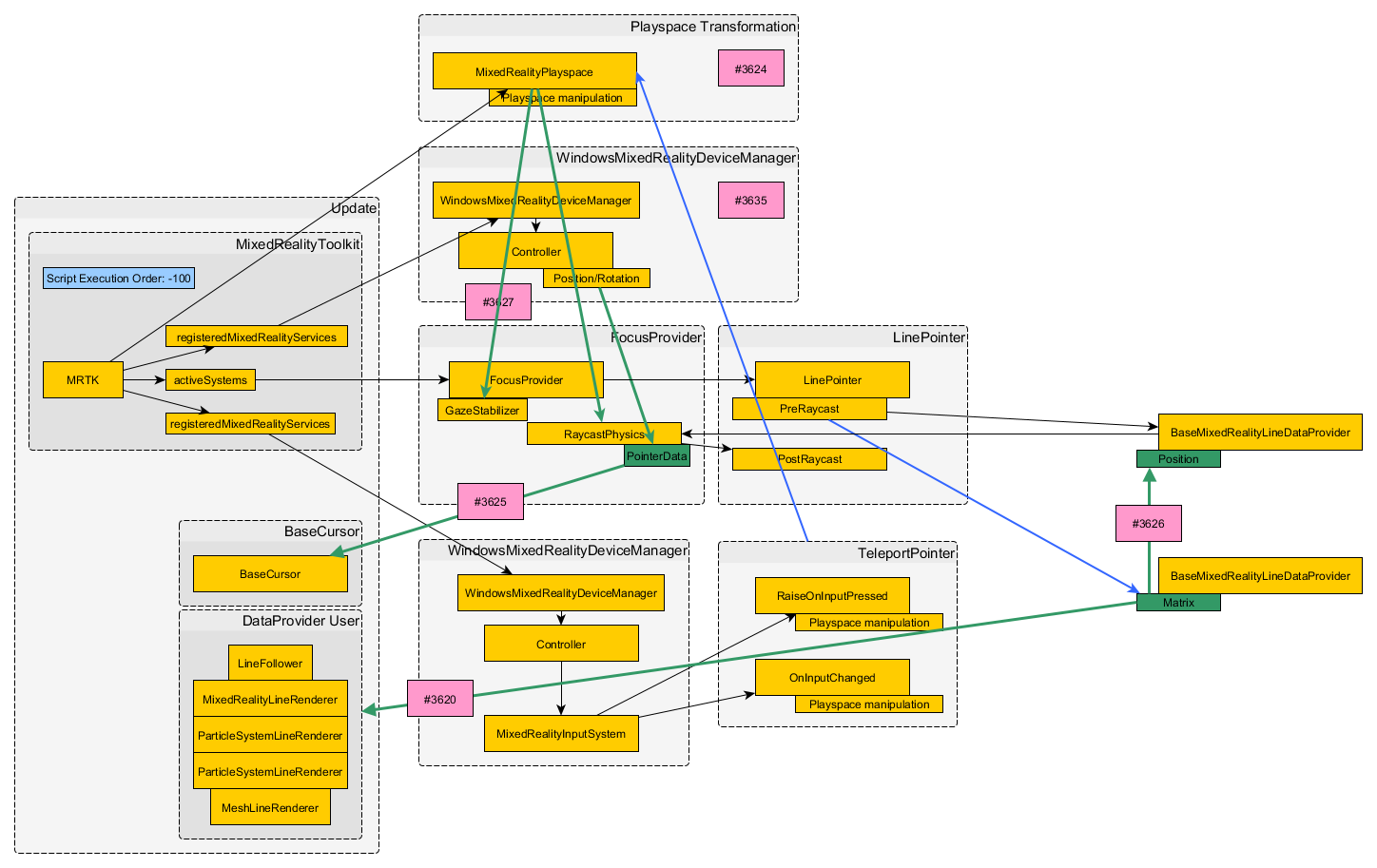

Some execution orders are not correct. There might be more, but so far I have found the following issues, which I am trying to bundle together here:

#3612, #3585, #3580

It all boils down to the following two problems:

- MRTK has two main service paths, activeServices and registeredMixedRealityServices. The former executes the FocusProvider; the latter executes the MixedRealityInputSystem.

Issue 1:

- FocusProvider: updates all LinePointers. In PreRaycast, positions are set on BaseMixedRealityLineDataProvider, but they are transformed by its localMatrix, which has not been set yet. That only happens in PostRaycast, when the component gets enabled.

Issue 2:

- FocusProvider: updates all LinePointers, which perform all their raycasts and update their hit info.

- MRTK: updates the MixedRealityInputSystem in registeredMixedRealityServices. During this phase, the TeleportPointer can perform movement and rotation, which makes all previously calculated raycasts obsolete for any further calculations.

Bottom line: raycasted PointerData, as well as the darn BaseMixedRealityLineDataProvider matrix (who needs that anyway?), can be stale.
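To make Issue 2 concrete, here is a toy model of it, purely illustrative: `Playspace`, `Pointer` and `run_frame` are hypothetical stand-ins (not MRTK types), positions are reduced to one dimension, and a "raycast" only records its world-space origin. The pointer caches its result first, then a teleport moves the playspace in the same frame, so the cached value no longer matches where the pointer really is.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Playspace:
    # Hypothetical stand-in for the playspace transform, reduced to an x offset.
    offset: float = 0.0


@dataclass
class Pointer:
    # Pointer origin in playspace-local coordinates (hypothetical).
    local_x: float
    # Where the last "raycast" started, cached in world space.
    cached_world_x: Optional[float] = None

    def raycast(self, playspace: Playspace) -> None:
        # Stand-in for a real raycast: record the ray origin in world space.
        self.cached_world_x = playspace.offset + self.local_x


def run_frame(pointer: Pointer, playspace: Playspace, teleport_by: float) -> bool:
    """Run one frame in the reported order; return True if the cached hit is stale."""
    pointer.raycast(playspace)       # 1. FocusProvider raycasts
    playspace.offset += teleport_by  # 2. TeleportPointer then moves the playspace
    actual_world_x = playspace.offset + pointer.local_x
    return pointer.cached_world_x != actual_world_x
```

Anything that reads `cached_world_x` later in that frame (pointer rendering, button events) works with a position that is off by the full teleport distance.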

Unity Editor Version

2018.3.8f1

Mixed Reality Toolkit Release Version

mrtk_development


The update order isn't random, actually. Updates are ordered by each service's priority.

But yes, it's true that all the systems have their updates called first, followed by the list of services.

@Alexees also great job with the graph! It really helps visualize the problem :)
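A small sketch of the ordering described above, under assumptions: `Service`, `update_order` and the priority values are made up, and it assumes a lower priority value updates earlier. It only illustrates that priority sorts entries within each list, while systems as a group still run before registered services.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Service:
    name: str
    priority: int  # assumption: a lower value means "update earlier"


def update_order(systems: List[Service], services: List[Service]) -> List[str]:
    """Return service names in the order their Update would be called."""
    # Each list is sorted by priority on its own...
    ordered_systems = sorted(systems, key=lambda s: s.priority)
    ordered_services = sorted(services, key=lambda s: s.priority)
    # ...but every system runs before any registered service.
    return [s.name for s in ordered_systems + ordered_services]
```

For example, `update_order([Service("FocusProvider", 1), Service("InputSystem", 0)], [Service("WindowsMixedRealityDeviceManager", -10)])` returns `["InputSystem", "FocusProvider", "WindowsMixedRealityDeviceManager"]`: the device manager runs last despite having the lowest priority value.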

I was referring to Unity's Update. The three groups I created under "random ordered Updates" all contain Unity Updates, not MRTK-driven updates.

Those DataProvider users can be fixed easily by changing their Update methods to LateUpdate.
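The LateUpdate fix relies on Unity's documented guarantee that LateUpdate is called only after all Update functions of the frame have run; the relative order of Update calls across scripts is otherwise not guaranteed (unless Script Execution Order is configured). The toy model below uses hypothetical `FrameLoop` and `fresh_frames` helpers and shuffles the Update order each frame to stand in for "no guaranteed order". It shows that a consumer reading data in LateUpdate always sees the current frame's values, whereas one reading in Update sometimes sees the previous frame's.

```python
import random
from typing import Callable, List


class FrameLoop:
    """Toy model of a Unity frame: every Update runs first, then every LateUpdate.
    Update calls are shuffled to model "no guaranteed order" between scripts."""

    def __init__(self, seed: int = 0) -> None:
        self.updates: List[Callable[[], None]] = []
        self.late_updates: List[Callable[[], None]] = []
        self._rng = random.Random(seed)

    def tick(self) -> None:
        updates = list(self.updates)
        self._rng.shuffle(updates)
        for update in updates:
            update()
        for late_update in self.late_updates:
            late_update()


def fresh_frames(frames: int, consumer_in_late_update: bool) -> List[bool]:
    """For each frame, report whether the consumer saw data produced that frame."""
    loop = FrameLoop()
    state = {"frame": 0, "data_frame": -1}
    results: List[bool] = []

    def producer_update() -> None:
        # e.g. MRTK refreshing pointer/line data in its own Update
        state["data_frame"] = state["frame"]

    def consumer() -> None:
        results.append(state["data_frame"] == state["frame"])

    loop.updates.append(producer_update)
    (loop.late_updates if consumer_in_late_update else loop.updates).append(consumer)

    for frame in range(frames):
        state["frame"] = frame
        loop.tick()
    return results
```

`fresh_frames(200, True)` is all `True`, while `fresh_frames(200, False)` contains `False` entries on every frame where the shuffled order puts the consumer before the producer.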

Gotcha. One last thing I also wanted to point out is that the events processed by the input system are not bound to an update loop. Once a button is registered as pressed in the data provider, it is immediately bubbled up to the input system, which then routes it to the appropriate GameObject in the presentation layer.

@StephenHodgson I double-checked. All InputEvents actually ARE bound to the MRTK Update loop. Now that I see that, I remember that some time ago a major decision was made about whether to let inputs bubble up on their own or to poll them. I guess the decision fell on the latter. I think @keveleigh was involved in this.

I updated the graph

One more issue entered the ring: the GazeStabilizer is responsible for smoothing the gaze, even after teleportation. It has no idea that a teleport happened, so it either needs to receive a transformation for its position data or do its calculations in local space.
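One way to do the "local space" option, sketched under stated assumptions: the playspace pose is simplified to a 2D position plus yaw, `Pose2D` and `LocalSpaceStabilizer` are hypothetical names rather than the real GazeStabilizer API, and the exponential smoothing is a stand-in for whatever filter the stabilizer actually uses. Samples are converted into playspace-local coordinates, smoothed there, and converted back with the current pose, so a teleport moves the smoothed gaze together with the user.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec2 = Tuple[float, float]


@dataclass
class Pose2D:
    """Hypothetical playspace pose: top-down position plus yaw in radians."""
    x: float = 0.0
    y: float = 0.0
    yaw: float = 0.0

    def to_local(self, p: Vec2) -> Vec2:
        # Undo the translation, then rotate by -yaw.
        dx, dy = p[0] - self.x, p[1] - self.y
        c, s = math.cos(-self.yaw), math.sin(-self.yaw)
        return (c * dx - s * dy, s * dx + c * dy)

    def to_world(self, p: Vec2) -> Vec2:
        # Rotate by yaw, then translate.
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return (c * p[0] - s * p[1] + self.x, s * p[0] + c * p[1] + self.y)


class LocalSpaceStabilizer:
    """Exponential smoothing performed in playspace-local coordinates."""

    def __init__(self, alpha: float = 0.2) -> None:
        self.alpha = alpha  # weight of the newest sample
        self._local: Optional[Vec2] = None

    def update(self, world_sample: Vec2, playspace: Pose2D) -> Vec2:
        sample = playspace.to_local(world_sample)
        if self._local is None:
            self._local = sample
        else:
            a = self.alpha
            self._local = (
                (1 - a) * self._local[0] + a * sample[0],
                (1 - a) * self._local[1] + a * sample[1],
            )
        # Convert back with the *current* pose, so a teleport carries the
        # smoothed value along instead of leaving it behind.
        return playspace.to_world(self._local)
```

If the user stands at local (1, 0) and the playspace then teleports to (10, 0) with a 90° turn, the sketch returns approximately (10, 1) on the very next sample. Averaging raw world-space samples with the same `alpha` would instead give about (2.8, 0.2), i.e. the gaze would trail behind the teleport.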

> All InputEvents actually ARE bound to the MRTK Update loop.

I'm not so certain about that. @SimonDarksideJ, thoughts here?

[edit]

Actually, reading from the controllers' data providers is, in fact, bound to the update loop. Sorry, and thanks for catching that.

I thought we made it so you could choose to either poll or receive immediate events? 💭

@StephenHodgson all done.

I think I have introduced two accidental errors that I will look into tomorrow. I will report back when I think all of these pull requests are final.

> All InputEvents actually ARE bound to the MRTK Update loop.

This is correct as of today. There was a time when they weren't, due to the dependency on the InteractionManager events, but we have since changed to polling because the InteractionManager events are crap.

That said, button events are still event-driven, at least for WMR. For OpenVR, everything is polled.

@SimonDarksideJ just out of curiosity, how are they crap?

@StephenHodgson @SimonDarksideJ The problem turned out to be a little more complicated. The actual main problem is that motion controller positioning, as well as the teleportation logic, is all handled in the WindowsMixedRealityDeviceManager, which runs AFTER the FocusProvider that does the raycasting. Of course this makes sense for button presses and such, because you want to be able to hit stuff, but for the initial raycast the controllers and the playspace should already be in position. The approach I tried with the PlayspaceTransformation (delaying it until the next frame) won't work here, because we want the controller to be accurate.

The WindowsMixedRealityDeviceManager also needs a PreRaycast step, where it can position its devices before the raycast step.

@StephenHodgson @SimonDarksideJ OK, you guys. I know I'm not part of your team, and what I did here has a large impact on a lot of classes. It's basically a huge bug fix, so I did not consider it a new feature.

I still think a lot of this was necessary. Several calls were not in the right order and things were not happening at the right time.

All the changes are spread across several pull requests at the moment. If you'd like, I can merge them into one large one.

A brief overview of what the changes do:

- Position-related changes on input devices are calculated in a pre-services step in the MixedRealityToolkit.

- Changes to the MixedRealityPlayspace are postponed to the next frame

Moving both of these actions to the beginning of the MixedRealityToolkit update cycle ensures that the GazeProvider can properly calculate its raycasts with all transformations applied.

- The GazeStabilizer now operates in playspace space, which ensures that teleport rotations are taken into account.

- The Playspace was extracted into a static class to hide its Transform, so the only way to transform it is through the deferred queuing method.

Now gazes, rays, teleports, and all that no longer pop for a frame or lag.
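The ordering summarized above can be sketched as follows. This is a minimal model under assumptions, not the code in the pull requests: `Playspace`, `Toolkit`, `queue_translate` and `apply_pending` are hypothetical names, the playspace is an instance rather than a static class so it is easy to test, and only translation is modeled. Playspace changes requested during a frame are queued and applied at the start of the next MixedRealityToolkit update, followed by the pre-services step for device poses, and only then by services such as the focus provider.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

Vec3 = Tuple[float, float, float]


class Playspace:
    """Hypothetical playspace wrapper. Its transform is private and only changes
    through queued requests that are applied at the start of a frame."""

    def __init__(self) -> None:
        self._position: Vec3 = (0.0, 0.0, 0.0)
        self._pending: Deque[Callable[[Vec3], Vec3]] = deque()

    @property
    def position(self) -> Vec3:
        return self._position

    def queue_translate(self, delta: Vec3) -> None:
        # Requested mid-frame (e.g. by a teleport); applied next frame.
        self._pending.append(
            lambda p: (p[0] + delta[0], p[1] + delta[1], p[2] + delta[2]))

    def apply_pending(self) -> None:
        while self._pending:
            self._position = self._pending.popleft()(self._position)


class Toolkit:
    """Hypothetical frame driver showing the proposed ordering."""

    def __init__(self, playspace: Playspace,
                 pre_service_steps: List[Callable[[], None]],
                 services: List[Callable[[], None]]) -> None:
        self.playspace = playspace
        self.pre_service_steps = pre_service_steps
        self.services = services

    def update(self) -> None:
        self.playspace.apply_pending()       # 1. last frame's playspace changes
        for step in self.pre_service_steps:  # 2. device poses (pre-services step)
            step()
        for service in self.services:        # 3. focus/raycasts, input events, ...
            service()
```

A teleport queued during frame N leaves `position` unchanged for the rest of that frame, and the focus provider's raycasts in frame N+1 already see the new position.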

> ok, you guys. I know I'm not part of your team

We're all one big happy family here, no us vs them :D and every view has value.

> how are they crap?

The InteractionManager is fine for in-time events (buttons, etc.). But continuous events (like position and rotation) only seem to work when wired up to a GO; I'm unsure as to why. So when we consumed the position/rotation events purely from C#, we saw lag. Switching to polling the InteractionManager resolved that.

> The actual main problem is that motion controller positioning as well as the teleportation logic is all handled in the WindowsMixedRealityDeviceManager, which is run AFTER the FocusProvider which does raycasting

I completely agree with your findings here; this is possibly a mistake in the order of events within the architecture. Currently the services run one after another, as whole services, in an order determined at design time.

But you highlight a potentially critical issue: position/rotation should be evaluated in a different cycle from other events, because they are consumed in different ways.

Thoughts, @StephenHodgson? If we agree, this would need a critical change, as developers have likely had to work around this sync issue.

I also agree with your other recommendations, with one exception:

> The Playspace got extracted to a static class to hide it's Transform, so the only way to transform it is to use the postponing queuing method

The playspace manager is a service, yes. But it has a physical representation in the scene which the service connects to. So transforming the playspace is only a matter of transforming the connected GO (unless I'm misunderstanding you)

Would also love to hear your insights @keveleigh / @Railboy

@SimonDarksideJ

> We're all one big happy family here, no us vs them :D and every view has value.

Nice to hear that :)

> But you highlight a potential critical issue, where Position / Rotation should be evaluated in a different cycle to other events due to the different ways they are consumed.

Pull request #3635 addresses that.

> The playspace manager is a service, yes. But it has a physical representation in the scene which the service connects to. So transforming the playspace is only a matter of transforming the connected GO

True, but the only way to transform it is to get hold of the Transform or GO, and the Playspace creates and maintains that itself. I merely wrapped all the relevant Transform calls (some are missing but could easily be added) in an attempt to prevent uncontrolled manipulation. But thinking about it (SerializeField references, transform.parent access from children, GameObject.Find...), this might be a little too much.

What do you think?

> But thinking of it (SerializedField reference, transform.parent access from children, GameObject.Find...)

Any find operation in the new framework should be avoided at all costs because of its expense. As you say, the Playspace maintains a reference to the GO, so it should be responsible for all updates.

A developer only needs to locate the playspace service (which is highly performant) and then ask it to update.

I thought this was how it currently works, based on @StephenHodgson's updates related to teleportation, so I'd like him to comment on that one.
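A minimal sketch of the "locate the service, then ask it to update" pattern, assuming a dictionary-backed registry keyed by type. `ServiceRegistry`, `PlayspaceService` and `move_by` are hypothetical names, not MRTK's API; the point is that callers never touch the playspace GameObject directly and never search the scene for it.

```python
from typing import Dict, Type, TypeVar, cast

T = TypeVar("T")


class ServiceRegistry:
    """Hypothetical type-keyed registry standing in for the service locator."""

    def __init__(self) -> None:
        self._services: Dict[type, object] = {}

    def register(self, service: object) -> None:
        self._services[type(service)] = service

    def get(self, service_type: Type[T]) -> T:
        # A single dictionary lookup; no scene search as with GameObject.Find.
        return cast(T, self._services[service_type])


class PlayspaceService:
    """Hypothetical service that owns the playspace object and is the only
    code allowed to move it."""

    def __init__(self) -> None:
        self._position = 0.0  # stands in for the owned GameObject's transform

    @property
    def position(self) -> float:
        return self._position

    def move_by(self, delta: float) -> None:
        self._position += delta
```

Callers write `registry.get(PlayspaceService).move_by(2.5)`; only the service mutates the object it owns.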

I just reviewed #3635, which seems a sensible change but raises concerns for existing apps. It also has other changes unrelated to the PR mixed in, suggesting it wasn't created from a clean branch of development (sorry).

> I know I'm not part of your team

That's not true; you're here, aren't you? You're def part of our team :)

Overall I think you've done a great job so far pointing out some of the things we may have glossed over. One important thing to think about as well is the Solvers and how they could (or should) have played into this, as their whole job is to better coordinate the order in which GameObjects are transformed in space relative to each other. I wonder whether that solution could help solve some of the issues we're seeing here.

I think adding more event hooks is okay as long as we clearly tell the story there.

Adding some Microsoft folks to try and get some more traction on this refactoring proposal. I know a great deal of work has been done around HoloLens 2 in terms of the input stack, which may or may not impact some of these components.

@Alexees, excellent catch. I'm pretty busy at the moment with RC1, but I should be able to have a closer look later this week. On first inspection, I would rather have the input system update all device managers before the focus manager updates. For that we would need to:

- Register device managers in the input system instead of the mixed reality toolkit.

- Have the input system call update on device managers

- Have a well-defined execution order for activeSystems in the mixed reality toolkit, so we can ensure the input system runs before the focus provider. Right now it seems to depend on whatever order the _Dictionary_ iterates in.
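The three steps above could look roughly like this. The names are hypothetical, and the explicit `execution_order` list is one assumed way to get a well-defined order. It matters in C# because `Dictionary<TKey,TValue>` documents its enumeration order as undefined. (Python dicts preserve insertion order, but the sketch still iterates an explicit list so the intended order is visible rather than incidental.)

```python
from typing import Callable, Dict, List


class InputSystem:
    """Hypothetical input system that owns and ticks its device managers."""

    def __init__(self, log: List[str]) -> None:
        self.log = log
        self.device_managers: List[str] = []

    def register_device_manager(self, name: str) -> None:
        self.device_managers.append(name)

    def update(self) -> None:
        for name in self.device_managers:
            self.log.append(f"{name}.update")  # poses and buttons read here
        self.log.append("InputSystem.update")


def run_frame(systems: Dict[str, Callable[[], None]],
              execution_order: List[str]) -> None:
    # Walk an explicit list instead of the registry itself, so the order never
    # depends on how the underlying map happens to enumerate its entries.
    for name in execution_order:
        systems[name]()
```

With `execution_order = ["InputSystem", "FocusProvider"]`, every device manager registered with the input system is ticked before the focus provider raycasts.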

> Register device managers in the input system instead of the mixed reality toolkit.

I don't think we need to change where they are registered, @luis-valverde-ms; that's the whole point of the locator: if you need a reference to something, there is only one place to look.

But altering the devices' update steps to separate rotation/position from buttons wouldn't be that hard. They already register with and update the InputSystem; we would just need to change the execution to multiple calls instead of the single call we have at the moment.

> Don't think we need to change where they are registered @luis-valverde-ms , that's the whole point of the locator, if you need a reference to something, there is only one place to look

I must be missing something, but I don't see the value in having a common interface to obtain a reference to an arbitrary service regardless of its type. We want more decoupling between different systems moving forward. In that context, I think it is good to have input-related services registered with the input system.

> We want more decoupling between different systems moving forward

That was my point. We would never embed the devices in the input system, as that would integrate them too tightly. It's better to keep them loosely coupled and add an extra execution layer that an input system may or may not take advantage of. If the devices pump out button events and everything else at different intervals, it would not impact the framework.

In other words, input isn't the ONLY place that would consume devices: networking, for example, or voice, or anything else that consumes device data but is not directly related to input per se.

@luis-valverde-ms, keep in mind that putting the whole input system first means the controllers' input events will use outdated raycasts.

I vote for this order:

- Translations

- Raycasts

- Buttons and such
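The voted order, combined with the idea of splitting each device's single update into several calls, could be sketched like this. `Device`, `Raycaster` and `run_frame` are hypothetical; real device managers would have to implement both halves, which is exactly the extra burden on implementers discussed in the replies that follow.

```python
from typing import List, Protocol


class Device(Protocol):
    """Hypothetical device interface with the single update split in two."""

    def update_pose(self) -> None: ...
    def update_buttons(self) -> None: ...


class Raycaster(Protocol):
    def raycast(self) -> None: ...


def run_frame(devices: List[Device], raycasters: List[Raycaster]) -> None:
    for device in devices:        # 1. translations: controller/playspace poses
        device.update_pose()
    for raycaster in raycasters:  # 2. raycasts against this frame's poses
        raycaster.raycast()
    for device in devices:        # 3. buttons and such, using this frame's hits
        device.update_buttons()
```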

@SimonDarksideJ, I'm not sure I follow. It may just be terminology, but I consider devices to be input, not to consume it. For me, it feels natural to group them under the input system and order that relative to other systems that consume it, like focus.

@Alexees, I'm not sure I agree with processing buttons after changing focus. When you press a button to, let's say, click on something via a pointer, you do it based on what you are currently seeing, i.e. the information displayed in the last frame, not on what the pointer is going to be pointing at in the next frame. I'm not sure it makes a big difference in practice, but I think it is conceptually simpler to tick all input types (poses and buttons) together.

Which brings me to my main point: keeping things as simple as possible. Tell me if I'm missing something, but I think the issue we're trying to address is that focus is ticked before the device managers. The simplest solution I can think of is ticking the device managers before focus. I'd rather avoid extending the device manager interface just for this, because it would force every device implementer to be aware of what the core services are and to decide what should be done before and after they tick.

@luis-valverde-ms, I think you're right. Thinking too much about all this made my head spin around the order of events.

> For me, it feels natural to group them under the input system and order that relative to other systems that consume it, like focus.

In RC1, data providers are registered and unregistered at the direction of their parent system. The flow is now:

- Registrar loads the system

- Registrar calls enable on the system

- System (ex: input) requests that the registrar load data providers

- [some time later] Registrar calls disable on the system

- System requests that the registrar unload data providers

Please note: in the case of the MixedRealityToolkit object, all configured services are loaded before they are enabled.
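The RC1 lifecycle described above can be modeled as follows. `Registrar`, `System`, and the provider names (including `SpatialObserver`) are hypothetical; the sketch only encodes the order of calls: every configured system is loaded before any is enabled, and each system asks the registrar to load its data providers when enabled and to unload them when disabled.

```python
from typing import List


class Registrar:
    """Hypothetical registrar mirroring the RC1 flow."""

    def __init__(self) -> None:
        self.log: List[str] = []
        self.loaded_providers: List[str] = []

    def start(self, systems: List["System"]) -> None:
        for system in systems:  # all configured services are loaded first...
            self.log.append(f"load {system.name}")
        for system in systems:  # ...and only then enabled
            system.enable()

    def stop(self, systems: List["System"]) -> None:
        for system in systems:
            system.disable()

    def load_data_providers(self, owner: str, names: List[str]) -> None:
        for name in names:
            self.loaded_providers.append(name)
            self.log.append(f"load {name} for {owner}")

    def unload_data_providers(self, owner: str, names: List[str]) -> None:
        for name in names:
            self.loaded_providers.remove(name)
            self.log.append(f"unload {name} for {owner}")


class System:
    """A system decides which data providers it needs; the registrar loads them."""

    def __init__(self, name: str, registrar: Registrar, providers: List[str]) -> None:
        self.name = name
        self.registrar = registrar
        self.providers = providers

    def enable(self) -> None:
        self.registrar.log.append(f"enable {self.name}")
        self.registrar.load_data_providers(self.name, self.providers)

    def disable(self) -> None:
        self.registrar.log.append(f"disable {self.name}")
        self.registrar.unload_data_providers(self.name, self.providers)
```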

It has been a while since this issue received updates. It covered quite a few problems, and it's not clear which were fixed and which weren't.

Closing this; the issues will likely pop up again if they still exist.
