MixedRealityToolkit-Unity: vNEXT Task: HoloLens Gesture Support

Overview

Add HoloLens gesture support: Tap, Hold, Manipulate, & Navigate.

(Hand simulation will be done separately. I'd like to discuss how to handle mouse support via profile.)

Acceptance criteria

- [x] User should be able to configure the gesture recognizer from the Input System Config profile.

- [x] User should be able to configure input actions for Tap, Hold, Manipulate, & Navigate in Input System Config Profile.

- [x] When user raises hand to the ready position, the cursor should update to ready state.

- [x] When user taps on a GameObject, the Input System raises a Click event.

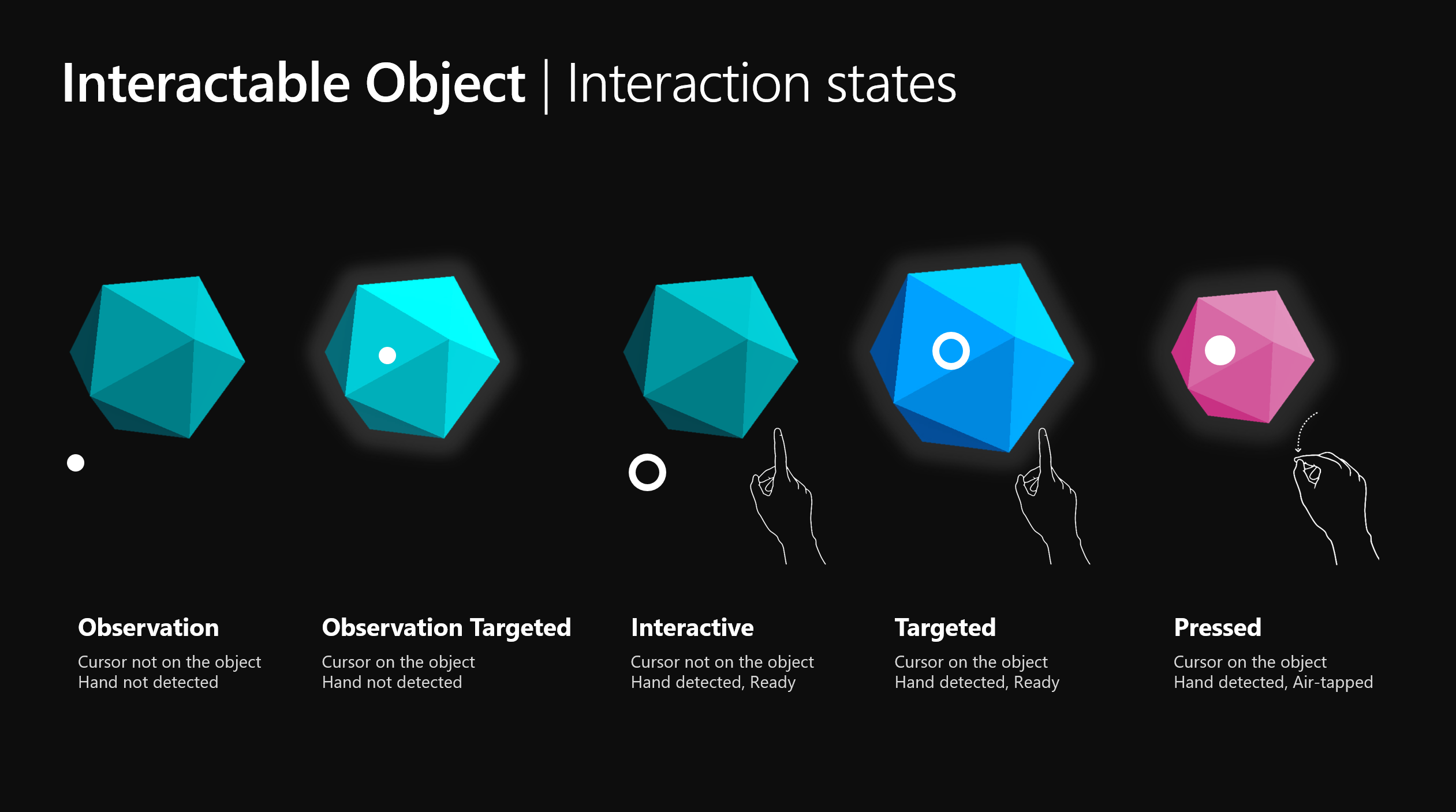

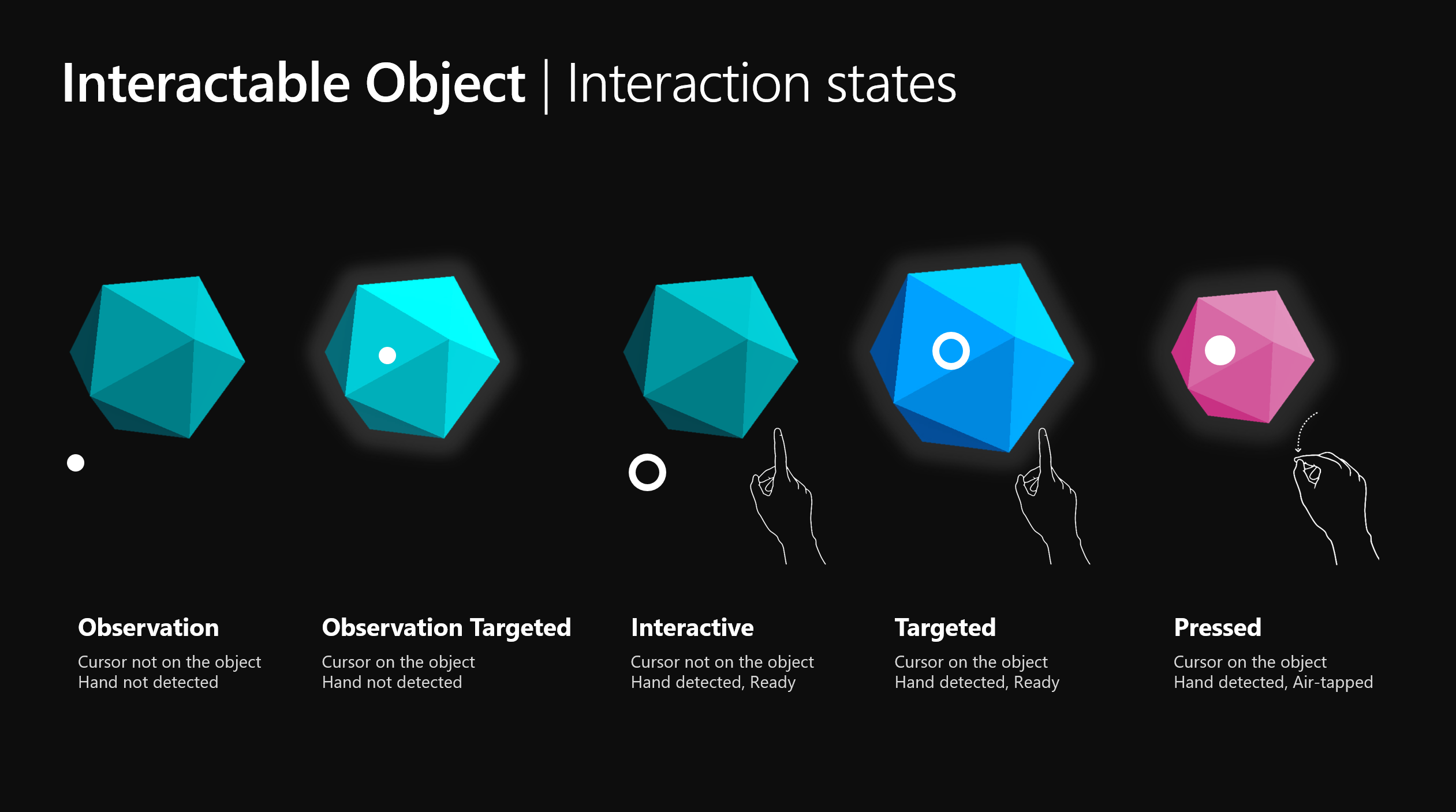

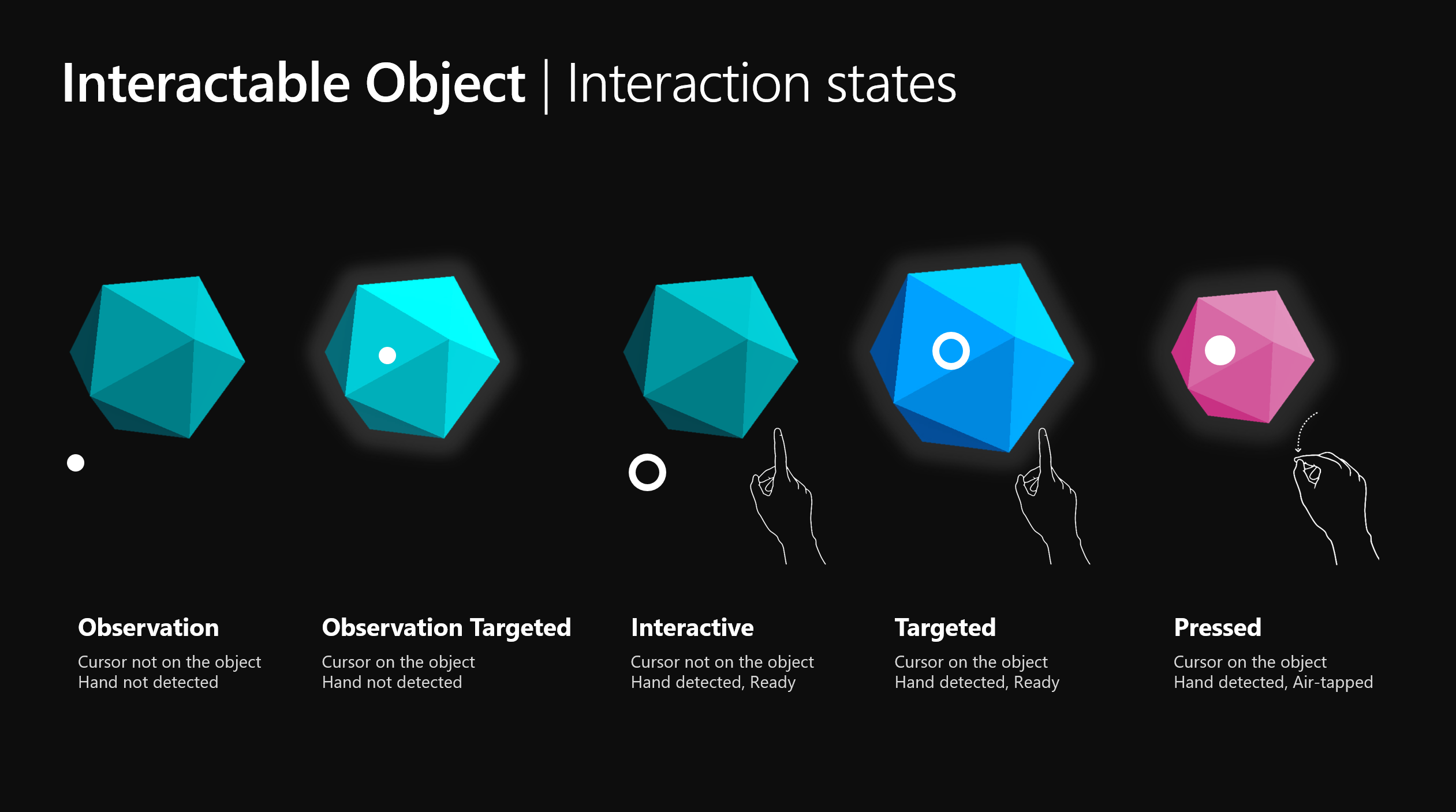

- [x] When user taps, the cursor should animate properly with interaction states & cursor shapes in HoloLens.

- [x] When user taps and holds a GameObject, the Input System raises a Hold Started event.

- [x] User should be able to enable/disable Navigation and Manipulation gesture recognizers.

- [x] System should intelligently disable a recognizer if its sibling has been enabled and the recognizer is running.

- [x] When user taps, holds, and moves their hand, the Input System raises the appropriate Manipulation events when Manipulation Gesture Recognizer is running.

- [x] When the user taps, holds, and moves their hand, the Input System raises the appropriate Navigation events when Navigation Gesture Recognizer is running.
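The sibling rule for the Navigation and Manipulation recognizers can be sketched as below. This is a minimal, language-agnostic illustration in Python, not the toolkit's implementation: `Recognizer`, `make_pair`, and the `enabled`/`running` flags are hypothetical names chosen for this example.

```python
from typing import Optional, Tuple


class Recognizer:
    """Hypothetical stand-in for a platform gesture recognizer."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.enabled = False
        self.running = False
        self.sibling: Optional["Recognizer"] = None

    def enable(self) -> None:
        # If the sibling is currently running, stop and disable it first,
        # so only one of the pair is ever capturing gestures.
        if self.sibling is not None and self.sibling.running:
            self.sibling.disable()
        self.enabled = True
        self.running = True

    def disable(self) -> None:
        self.running = False
        self.enabled = False


def make_pair() -> Tuple[Recognizer, Recognizer]:
    """Create Navigation and Manipulation recognizers linked as siblings."""
    navigation = Recognizer("Navigation")
    manipulation = Recognizer("Manipulation")
    navigation.sibling = manipulation
    manipulation.sibling = navigation
    return navigation, manipulation
```

With this shape, calling `enable()` on one recognizer while the other is running leaves exactly one of the pair active.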

All 6 comments

It's probably worth calling out that we should do this in the context of some platform agnostic gesture handling system. I know Leap Motion had gesture recognition support for a while (though I think it didn't make it into the new SDK rewrite, at least not yet). I wouldn't be surprised if Magic Leap has a similar event API as well. We're likely going to see numerous Tap/Hold/etc gesture event APIs as more hand tracking solutions find wider adoption.

Though it's outside the scope of this work item, I think we'll eventually want to implement our own simple gesture recognizer as well, for devices that offer articulated hand positions but not gesture recognition specifically.
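To make the "simple gesture recognizer" idea concrete, here is a rough sketch that derives Tap and Hold from articulated fingertip positions alone. Everything in it is an assumption for illustration: the frame format, the function names, the 2 cm pinch threshold, and the 0.5 s tap limit are not taken from any SDK. It also classifies a Hold only on release, whereas a real recognizer would raise a Hold Started event while the pinch is still held.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]
Frame = Tuple[float, Point, Point]  # (timestamp in seconds, thumb tip, index tip)

PINCH_DISTANCE_M = 0.02  # assumed: fingertips closer than 2 cm count as a pinch
MAX_TAP_SECONDS = 0.5    # assumed: pinches released within 0.5 s count as a Tap


def is_pinching(thumb_tip: Point, index_tip: Point,
                threshold: float = PINCH_DISTANCE_M) -> bool:
    """Return True when the thumb and index fingertips are nearly touching."""
    return math.dist(thumb_tip, index_tip) < threshold


def classify(frames: Iterable[Frame]) -> List[str]:
    """Turn a stream of fingertip frames into a list of "Tap"/"Hold" gestures."""
    gestures: List[str] = []
    pinch_started = None
    for timestamp, thumb_tip, index_tip in frames:
        pinching = is_pinching(thumb_tip, index_tip)
        if pinching and pinch_started is None:
            # Pinch begins: remember when it started.
            pinch_started = timestamp
        elif not pinching and pinch_started is not None:
            # Pinch released: short pinches are taps, long ones are holds.
            duration = timestamp - pinch_started
            gestures.append("Tap" if duration <= MAX_TAP_SECONDS else "Hold")
            pinch_started = None
    return gestures
```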

That's def planned. But I think we're also trying to figure out if we wanna include the WMR Gestures in the WMR Controller implementation or not bc they're so tightly coupled.

Oh interesting. I hadn't really thought about it from that direction, but in my past work with hand trackers, it has crossed my mind that it might be a good idea to have tracked hands be a kind of controller. You could imagine a system where a tap gesture comes from a "Tracked Left Hand" virtual controller. This would also blend more easily with physical controllers that also have hand tracking built in. I can also imagine lots of fingerless gestures that something like a capacitive Oculus Touch could offer as well, like a Wave, Thumbs Up, Fist, or Clap gesture. Those events would be just as natural coming from a hand tracking virtual controller, the same way a Tap gesture might come from a "HoloLens Left Hand" virtual controller.
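A rough sketch of what I mean by a hand acting as a virtual controller, in Python for brevity. The class and method names here (`VirtualHandController`, `GestureEvent`, `raise_gesture`) are made up for illustration and don't correspond to any existing toolkit API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class Handedness(Enum):
    LEFT = "Left"
    RIGHT = "Right"


@dataclass(frozen=True)
class GestureEvent:
    source: str   # name of the controller that raised the event
    gesture: str  # e.g. "Tap", "Wave", "Thumbs Up"


class VirtualHandController:
    """Hypothetical controller that represents a tracked hand as an input source."""

    def __init__(self, device: str, handedness: Handedness) -> None:
        self.name = f"{device} {handedness.value} Hand"
        self._listeners: List[Callable[[GestureEvent], None]] = []

    def subscribe(self, listener: Callable[[GestureEvent], None]) -> None:
        """Register a callback that receives every gesture from this hand."""
        self._listeners.append(listener)

    def raise_gesture(self, gesture: str) -> GestureEvent:
        """Build an event tagged with this controller's name and dispatch it."""
        event = GestureEvent(source=self.name, gesture=gesture)
        for listener in self._listeners:
            listener(event)
        return event
```

Handlers then only see a source name and a gesture name, so a Tap from a "HoloLens Left Hand" and a Thumbs Up from a physical controller with hand tracking would arrive through the same path.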

I'm not yet convinced tracked hands should be controllers, but it's something to consider.

Hands are already tracked as WMR Controllers.

Standard interaction states & cursor shapes in HoloLens:

@cre8ivepark

2633 should restore the first four states on HoloLens, but not yet the pressed state.

It also restores Interactive and Targeted on immersive devices.
