Kind: Cannot create cluster due to `docker exec cat /kind/version` failing

What happened:

Kind failed to create a cluster with

ERROR: failed to create cluster: failed to generate kubeadm config content: failed to get kubernetes version from node: failed to get file: command "docker exec --privileged flux-e2e-2-control-plane cat /kind/version" failed with error: exit status 1

Full logs at https://circleci.com/gh/fluxcd/flux/9822

What you expected to happen:

I expect the cluster to be created without errors

How to reproduce it (as minimally and precisely as possible):

Unfortunately it's not systematic. It only happens from time to time

It happens when running make e2e in https://github.com/fluxcd/flux/

Anything else we need to know?:

Although things have improved quite a bit since Kind 0.7.0 we are still getting some cluster creation errors from time to time. More info at https://github.com/fluxcd/flux/issues/2672

This may have to do with the fact that we create a few (4) Kind clusters in parallel. See https://github.com/fluxcd/flux/blob/cb319f2f7878f33369cf54139df26867aa6632ca/test/e2e/run.bash#L55

Environment:

- kind version: (use kind version): 0.7.0

- Kubernetes version: (use kubectl version): 1.14.10

- Docker version: (use docker info): 18.09.3

- OS (e.g. from /etc/os-release): Ubuntu 16.04.5 LTS

All 45 comments

Can you add -v 1 to your creation call? It will dump stack and more error output.

This error can happen if the node container exited for some reason.

How much resources are available where you are creating 4 in parallel?

Can you add -v 1 to your creation call? It will dump stack and more error output.

Will do

How much resources are available where you are creating 4 in parallel?

We are using CircleCI's machine executor with a large resource class which is supposed to have 4 cores and 15GB of ram

That should be enough RAM & CPU for four single node clusters, but disk I/O which isn't advertised there might be an issue.

@BenTheElder note that we only get errors from time to time, and only at cluster creation. I would expect the failures to be more systematic.

Also, things got much better with 0.7.0, which further suggests it may not be (at least not entirely) an IO performance problem.

There was a different issue previously that caused exec to have a race condition when capturing output. That's thoroughly fixed.

At startup we use the most disk, unpacking the images and the API server warming things up in etcd.

For reference, the previous bug was fixed by https://github.com/kubernetes-sigs/kind/pull/972; that fix shipped in v0.6.0+.

Can you add -v 1 to your creation call? It will dump stack and more error output.

would love to see logs if you spot this again with the -v 1 addition.

I've now seen something like this _once_ in our CI. the node container exited.

unfortunately the CI hung for another reason and we don't have more details, but either the node entrypoint failed or the container was killed for some reason.

I suspect some docker bug, though it is possible something flakes in the entrypoint, it seems unlikely as we don't do anything complex and I've only seen this once...

@BenTheElder I also see this in ingress-nginx prow jobs https://prow.k8s.io/view/gcs/kubernetes-jenkins/pr-logs/pull/ingress-nginx/4949/pull-ingress-nginx-e2e-1-16/1222680251373457408

@aledbf since you are on prow, can you switch to using --retain during create (also -v 1) and add an exit trap that runs kind export logs "${ARTIFACTS}/kind-logs/" before kind delete cluster, similar to https://github.com/kubernetes-sigs/kind/blob/3f3b305f148059ca7fd24402fcadd41b7b5883d2/hack/ci/e2e-k8s.sh#L29?

we need to capture why the command failed, it could just be a flake in the environment killing the container, or some actual kind bug but we can't tell. dumping logs into prow's uploaded artifacts will help.
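A minimal sketch of the exit-trap setup described above, assuming a POSIX shell CI script. The function names, the `CLUSTER_NAME` default, and the fallback `ARTIFACTS` path are illustrative assumptions; the `kind` subcommands and flags are the ones named in this thread.

```shell
# Sketch only: the function names and default values are assumptions.
ARTIFACTS="${ARTIFACTS:-./_artifacts}"   # set by prow; the fallback path is hypothetical
CLUSTER_NAME="${CLUSTER_NAME:-kind}"

# Export node logs first, then delete the cluster. Both steps are best-effort
# so that a failed export does not block the delete.
cleanup() {
  kind export logs "${ARTIFACTS}/kind-logs" --name "${CLUSTER_NAME}" || true
  kind delete cluster --name "${CLUSTER_NAME}" || true
}

# Register the trap before creating, so logs are exported even when creation
# fails. --retain keeps the node containers after a failed create.
create_cluster_retained() {
  trap cleanup EXIT
  kind create cluster --name "${CLUSTER_NAME}" --retain -v 1
}
```

Calling `create_cluster_retained` near the top of the job script should be enough for the exported logs to land in the uploaded artifacts directory when the job exits.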

would love to see logs if you spot this again with the -v 1 addition.

No _luck_ so far

I think we're running into the same issue (using gitlab-runners). I've applied the same verbosity setting and will report back if anything of interest shows up.

we can see something similar here https://prow.k8s.io/view/gcs/kubernetes-jenkins/pr-logs/pull/ingress-nginx/5028/pull-ingress-nginx-e2e-1-15/1225490651391463424 (thanks @aledbf !)

the node container is not running and things are going very slowly, I wonder if it might be a combination of overloaded host and https://github.com/kubernetes-sigs/kind/issues/1290#issuecomment-582269579

going to put out a sync patch today and see how that goes ...

unfortunately we didn't capture the node logs but we can definitely see that the node container was no longer running.

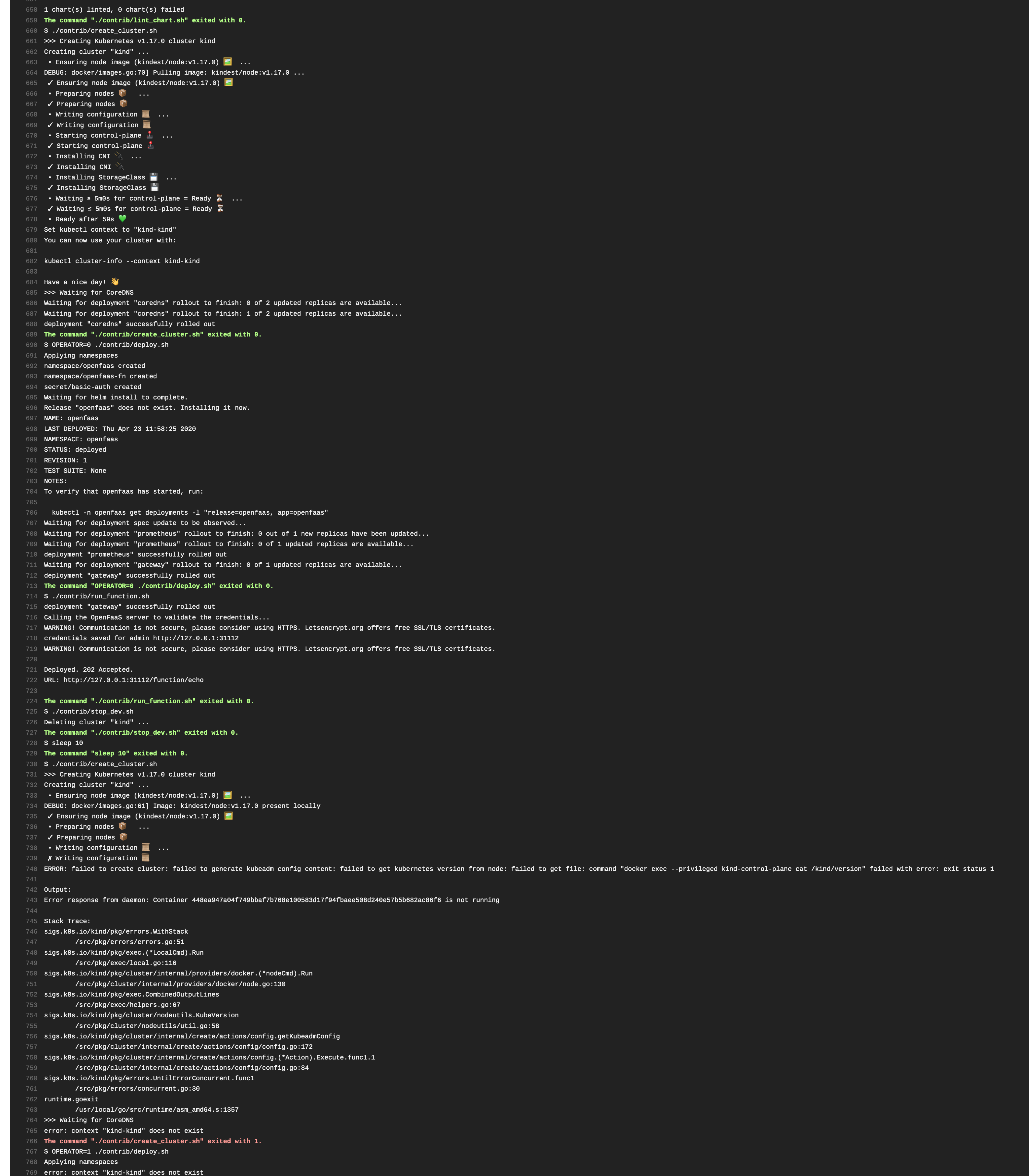

We finally hit the jackpot: https://circleci.com/gh/fluxcd/flux/10092

[WARNING CRI]: container runtime is not running: output: time="2020-02-06T19:22:09Z" level=fatal msg="failed to connect: failed to connect, make sure you are running as root and the runtime has been started: context deadline exceeded"

Absent further debug info, I'd have to guess spinning up 4 at once on that machine is sometimes causing a node to bring up too slowly and KIND is not expecting CRI to take this long to come up.

We can do some internal busy wait on this, it may also be the sync thing ... (need to explore that more ...). In the meantime you might want to consider bringing up 2 and then 2 more instead of 4 at once. Startup is generally the heaviest part.
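The "2 and then 2 more" suggestion could be sketched roughly as follows. The function name `create_in_batches`, the batch size argument, and the cluster names in the usage line are assumptions for illustration, not part of the flux test scripts.

```shell
# Sketch only: create the named clusters in batches, waiting for each batch to
# finish before starting the next, so at most batch_size clusters start at once.
# Note: POSIX sh has no local variables, so these names are global.
create_in_batches() {
  batch_size="$1"; shift
  running=0
  failed=0
  pids=""
  for name in "$@"; do
    kind create cluster --name "${name}" --retain -v 1 &
    pids="${pids} $!"
    running=$((running + 1))
    if [ "${running}" -ge "${batch_size}" ]; then
      # Wait for every cluster in this batch and remember any failure.
      for pid in ${pids}; do wait "${pid}" || failed=1; done
      pids=""
      running=0
    fi
  done
  # Wait for a final, partially filled batch.
  for pid in ${pids}; do wait "${pid}" || failed=1; done
  return "${failed}"
}
```

For example, `create_in_batches 2 e2e-1 e2e-2 e2e-3 e2e-4` would start two clusters, wait for both, then start the remaining two. The function returns non-zero if any creation failed.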

I'd have to guess spinning up 4 at once on that machine is sometimes causing a node to bring up too slowly and KIND is not expecting CRI to take this long to come up.

I think this guessed-theory may be spot-on, we use the exact same strategy in another project of ours (fluxcd/helm-operator) which shows the same behaviour at times.

We can do some internal busy wait on this, it may also be the sync thing ... (need to explore that more ...). In the meantime you might want to consider bringing up 2 and then 2 more instead of 4 at once. Startup is generally the heaviest part.

This sounds like a reasonable interim solution. cc: @2opremio

Are we sure it's the same root cause? The docker command is very different. Does it matter? (Sorry for my ignorance)

The two recent ones from fluxcd are not the same as the initial one here.

The initial one is most likely the node container not running (we are just cat-ing a file that exists in the container image), that shouldn't fail otherwise ... The container not running is either a bug in the entrypoint causing it to crash (so far we've not observed this but, plausible) or something killing the container from the host ..

The two recent ones appear to be the node container is running, but bringup fails because the container runtime is not ready. Starting the container runtime inside the node is very cheap and normally completes in a very small amount of time well under 1s. In this environment it is presumably longer for some reason (could be the sync thing, could just be an overloaded host).
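To illustrate the busy-wait idea from outside kind, here is a small polling helper. This is not kind's internal implementation. The helper name `wait_until`, the one-second interval, and the use of `crictl info` inside the node as a readiness probe are all assumptions.

```shell
# Sketch only: run a command repeatedly until it succeeds or the timeout
# (in seconds) elapses. The command's own output is discarded.
wait_until() {
  timeout_s="$1"; shift
  waited=0
  until "$@" >/dev/null 2>&1; do
    if [ "${waited}" -ge "${timeout_s}" ]; then
      echo "timed out after ${timeout_s}s waiting for: $*" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

Against a retained node, a probe might look like `wait_until 30 docker exec kind-control-plane crictl info`, which gives a rough idea of how long the container runtime takes to come up on a loaded host.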

I don't know if circleCI has an equivalent, but in kubernetes's CI we create clusters with --retain and do a kind export logs to a CI artifacts directory before the job exits. That gives us some useful info like node logs.

We can see the container runtime not running in kubeadm output though in the two recent failures, which is not something I'd normally see in kind logs anywhere.

Yet another failure https://circleci.com/gh/fluxcd/flux/10180

Our latest error (looks different from the previous examples posted)

ERROR: failed to create cluster: failed to generate kubeadm config content: failed to get kubernetes version from node: failed to get file: command "docker exec --privileged kind-control-plane cat /kind/version" failed with error: exit status 1

Output:

Error response from daemon: Container 461ecdc20840ce16af83293f6666691f12bcdb037882cc95be20ab12c642e49a is not running

Stack Trace:

sigs.k8s.io/kind/pkg/errors.WithStack

/src/pkg/errors/errors.go:51

sigs.k8s.io/kind/pkg/exec.(*LocalCmd).Run

/src/pkg/exec/local.go:116

sigs.k8s.io/kind/pkg/cluster/internal/providers/docker.(*nodeCmd).Run

/src/pkg/cluster/internal/providers/docker/node.go:130

sigs.k8s.io/kind/pkg/exec.CombinedOutputLines

/src/pkg/exec/helpers.go:67

sigs.k8s.io/kind/pkg/cluster/nodeutils.KubeVersion

/src/pkg/cluster/nodeutils/util.go:58

sigs.k8s.io/kind/pkg/cluster/internal/create/actions/config.getKubeadmConfig

/src/pkg/cluster/internal/create/actions/config/config.go:172

sigs.k8s.io/kind/pkg/cluster/internal/create/actions/config.(*Action).Execute.func1.1

/src/pkg/cluster/internal/create/actions/config/config.go:84

sigs.k8s.io/kind/pkg/errors.UntilErrorConcurrent.func1

/src/pkg/errors/concurrent.go:30

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1357

That's identical to the original error I reported in this issue.

are there errors in the docker daemon logs?

next to:

Error response from daemon: Container 461ecdc20840ce16af83293f6666691f12bcdb037882cc95be20ab12c642e49a is not running

Stack Trace:

We switched to a non-parallel approach for the Helm operator, and this has been mostly successful to get rid of most of the failures during bootstrap.

Errors like the original one mentioned in the issue do, however, still happen at times.

hi,

We encountered the same issue on my mac:

kind v0.7.0 go1.13.6 darwin/amd64

Client: Docker Engine - Community

Version: 19.03.5

ERROR: failed to create cluster: failed to generate kubeadm config content: failed to get kubernetes version from node: failed to get file: command "docker exec --privileged kind-worker4 cat /kind/version" failed with error: exit status 1

Output:

Error response from daemon: Container 46030848638d5695df3b1184d77fcb8502a1241548d2e9e8108201da6a3e11e7 is not running

Stack Trace:

sigs.k8s.io/kind/pkg/errors.WithStack

/src/pkg/errors/errors.go:51

sigs.k8s.io/kind/pkg/exec.(*LocalCmd).Run

/src/pkg/exec/local.go:116

sigs.k8s.io/kind/pkg/cluster/internal/providers/docker.(*nodeCmd).Run

/src/pkg/cluster/internal/providers/docker/node.go:130

sigs.k8s.io/kind/pkg/exec.CombinedOutputLines

/src/pkg/exec/helpers.go:67

sigs.k8s.io/kind/pkg/cluster/nodeutils.KubeVersion

/src/pkg/cluster/nodeutils/util.go:58

sigs.k8s.io/kind/pkg/cluster/internal/create/actions/config.getKubeadmConfig

/src/pkg/cluster/internal/create/actions/config/config.go:172

sigs.k8s.io/kind/pkg/cluster/internal/create/actions/config.(*Action).Execute.func1.1

/src/pkg/cluster/internal/create/actions/config/config.go:84

sigs.k8s.io/kind/pkg/errors.UntilErrorConcurrent.func1

/src/pkg/errors/concurrent.go:30

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1357

Worker4 suggests many nodes.

First question: do you need 5+ nodes actually...?

Second: do you have enough resources allocated for this? Docker for Mac is a VM with limited cpu etc.
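One way to see what the Docker daemon (and therefore the Docker for Mac VM) actually has available is to query `docker info`. A minimal sketch, where the function name `docker_resources` and the output layout are assumptions:

```shell
# Sketch only: print the CPU count and memory (GiB, rounded down) that the
# Docker daemon reports, using the NCPU and MemTotal fields of `docker info`.
docker_resources() {
  docker info --format '{{.NCPU}} {{.MemTotal}}' | {
    read -r cpus mem_bytes
    echo "cpus=${cpus} mem_gib=$((mem_bytes / 1024 / 1024 / 1024))"
  }
}
```

Comparing that output with the number of nodes in the cluster config gives a quick sanity check before creating a multi-node cluster.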


@BenTheElder Thanks, yes, I limited Docker to 6 CPU cores and I have tried reducing the number of nodes to 3.

We appear to be running into a similar (blocking) issue with OpenFaaS and the openfaas/faas-netes build: https://travis-ci.com/github/openfaas/faas-netes/builds/161616197

https://github.com/openfaas/faas-netes/pull/620

What's the fix?

We have to run two sets of tests, so the workflow in .travis.yml is:

Create

Test

Delete

Create

Test

Up until the beginning of the week it was passing on every build.

The problem goes away for us when we downgrade the kindest/node: version.

Currently working for us (on 0.7.0)

kindest/node:v1.14.6@sha256:464a43f5cf6ad442f100b0ca881a3acae37af069d5f96849c1d06ced2870888d

Prior to this we were using

v1.14.10@sha256:81ae5a3237c779efc4dda43cc81c696f88a194abcc4f8fa34f86cf674aa14977

...which seemed to have the problem.

1.14 is a pretty old version now :-/ Anyone else come up with a workaround to get past this?

@alexellis There isn't a single fix for this thread due to multiple different issues piled in here, travis going from passing to failing is new.

Can you file an issue with more details about your specific environment etc.?

From the log we know the node terminated before we could read the kubernetes version or start anything, which generally means the entrypoint ran into some issue (which is unusual...).

@nabadger that's a pretty old image, in what environment did this fix things...?

Best guess is it's the lack of sync in the entrypoint, which is also fixed by newer images. xref #1475

v0.8.0 is out with the sync fix, and better error output by default (without -v) amongst other things.

unfortunately it's difficult to tell what particular thing caused the container to fail at exit time

if anyone sees this in the future, try to capture kind export logs or at least the docker logs of the node container (you'll need to have set --retain).

Think I'm getting the same on my Raspberry Pi 4 (Buster)

./kind create cluster -v 1

Creating cluster "kind" ...

DEBUG: docker/images.go:58] Image: kindest/node:v1.18.2@sha256:7b27a6d0f2517ff88ba444025beae41491b016bc6af573ba467b70c5e8e0d85f present locally

✓ Ensuring node image (kindest/node:v1.18.2) 🖼

✓ Preparing nodes 📦

✗ Writing configuration 📜

ERROR: failed to create cluster: failed to generate kubeadm config content: failed to get kubernetes version from node: failed to get file: command "docker exec --privileged kind-control-plane cat /kind/version" failed with error: exit status 1

Command Output: Error response from daemon: Container d2f7c99a0a7424256491be5f5d1751cb11e55a51ca37c4c684a125fd1abdb19f is not running

Stack Trace:

sigs.k8s.io/kind/pkg/errors.WithStack

/src/pkg/errors/errors.go:51

sigs.k8s.io/kind/pkg/exec.(*LocalCmd).Run

/src/pkg/exec/local.go:124

sigs.k8s.io/kind/pkg/cluster/internal/providers/docker.(*nodeCmd).Run

/src/pkg/cluster/internal/providers/docker/node.go:146

sigs.k8s.io/kind/pkg/exec.CombinedOutputLines

/src/pkg/exec/helpers.go:67

sigs.k8s.io/kind/pkg/cluster/nodeutils.KubeVersion

/src/pkg/cluster/nodeutils/util.go:35

sigs.k8s.io/kind/pkg/cluster/internal/create/actions/config.getKubeadmConfig

/src/pkg/cluster/internal/create/actions/config/config.go:165

sigs.k8s.io/kind/pkg/cluster/internal/create/actions/config.(*Action).Execute.func1.1

/src/pkg/cluster/internal/create/actions/config/config.go:77

sigs.k8s.io/kind/pkg/errors.UntilErrorConcurrent.func1

/src/pkg/errors/concurrent.go:30

runtime.goexit

/usr/local/go/src/runtime/asm_arm.s:857

@carlskii the node images are not built for arm by default. this is not any of the above issues.

you'll need to build one yourself. there's details on building in the docs / user guide.

I'm going to close this out for now given that 0.8.0 / 0.8.1 is out with various fixes including more details in the errors, however for this particular error you need to try again with --retain (so it doesn't clean up after itself) and:

- check the docker container logs

- check docker inspect
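The two checks above might look like this for a retained node. The function name `node_exit_summary`, the default container name, and the choice of `State` fields are assumptions for illustration.

```shell
# Sketch only: summarize how a retained node container stopped, then show the
# tail of its logs. The default name matches a default single-node cluster.
node_exit_summary() {
  node="${1:-kind-control-plane}"
  docker inspect --format \
    'status={{.State.Status}} exit_code={{.State.ExitCode}} oom_killed={{.State.OOMKilled}} error={{.State.Error}}' \
    "${node}"
  # Entrypoint output; the last lines may show which step was running.
  docker logs --tail 50 "${node}" 2>&1
}
```

This only works if the cluster was created with --retain, since otherwise the node container is removed when creation fails.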

This ~always means the node container died, which is generally due to some problem with the host environment such as:

- not enough resources to run everything

- wrong architecture

- in older kind versions, you might have some other issues we've documented in known issues such as your host filesystem being incompatible with docker in docker (zfs, btrfs; these are fixed) or using docker installed via snap (still not recommended)
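For the wrong-architecture case, a rough check is to compare the node image's architecture with the host's. A sketch, assuming the helper names below and assuming the script runs on the same machine as the Docker daemon (which is only an approximation when the daemon is remote or inside a VM):

```shell
# Sketch only: map `uname -m` output to the architecture names Docker reports.
docker_arch_for() {
  case "$1" in
    x86_64|amd64) echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    armv7l|armv6l) echo arm ;;
    *) echo "$1" ;;
  esac
}

# Succeed only if the locally present image was built for the host architecture.
image_matches_host() {
  image="$1"
  host_arch="$(docker_arch_for "$(uname -m)")"
  image_arch="$(docker image inspect --format '{{.Architecture}}' "${image}")"
  [ "${host_arch}" = "${image_arch}" ]
}
```

For example, `image_matches_host kindest/node:v1.18.2 || echo "architecture mismatch"` would flag an amd64 node image on a 32-bit ARM host.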

Please file individual bugs when you encounter this in your environment and include as much detail as possible. This error just means the node container exited early so we are unable to do anything with it, and is probably a bug in your environment.

If you see a similar issue with 0.8.1 that is not using a node image built for the wrong architecture, please file an issue with your details.

There are multiple existing issues discussing CPU architectures.

Hi,

I had a similar issue:

ERROR: failed to create cluster: failed to generate kubeadm config content: failed to get kubernetes version from node: file should only be one line, got 2 lines

The cause of the error was that the DOCKER_CONFIG environment variable had previously been set to /home/$USER/.docker/config.

After I removed that variable the cluster creation worked.
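To try this workaround without changing the current shell's environment, the command can be run in a subshell with the variable unset. The helper name `without_docker_config` is an assumption for illustration.

```shell
# Sketch only: run a command with DOCKER_CONFIG removed from its environment.
# The subshell keeps the caller's own DOCKER_CONFIG untouched.
without_docker_config() {
  (
    unset DOCKER_CONFIG
    "$@"
  )
}
```

Usage would be `without_docker_config kind create cluster`.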

Cheers,

SilverTux

@SilverTux this should not be necessary if you update to the latest binaries, are you seeing this in 0.8.1?

I had the same issue

$ kind create cluster

Creating cluster "kind" ...

✓ Ensuring node image (kindest/node:v1.18.2) 🖼

✓ Preparing nodes 📦

✗ Writing configuration 📜

ERROR: failed to create cluster: failed to generate kubeadm config content: failed to get kubernetes version from node: failed to get file: command "docker exec --privileged kind-control-plane cat /kind/version" failed with error: exit status 1

Command Output: Error response from daemon: Container f2a2d9c8f9c2eca9aeec7f10249eb205b02c8a5f41e5bf1145b5a8e4b63da123 is not running

kind version

kind v0.8.1 go1.14.3 linux/amd64

I was seeing the same symptom as others:

"""

$ kind create cluster

Creating cluster "kind" ...

✓ Ensuring node image (kindest/node:v1.18.2) 🖼

✓ Preparing nodes 📦

✗ Writing configuration 📜

ERROR: failed to create cluster: failed to generate kubeadm config content: failed to get kubernetes version from node: failed to get file: command "docker exec --privileged kind-control-plane cat /kind/version" failed with error: exit status 1

Command Output: Error response from daemon: Container _ is not running

"""

My issue was docker installed via snap & not explicitly specifying image version

install docker via apt-get detailed on their page here (previously had installed via snap on ubuntu)

Explicitly specify image when running 'kind create' (tag latest on DockerHub not found): 'kind create cluster --image kindest/node:v1.18.2'

I had the same issue

You have the same _symptom_ which is that we fail to get data from the node because the node has exited.

This part: Command Output: Error response from daemon: Container f2a2d9c8f9c2eca9aeec7f10249eb205b02c8a5f41e5bf1145b5a8e4b63da123 is not running

That tells us that the node container is not running. That either means the entrypoint failed or your host killed it, both either due to some obscure bug we haven't found yet, or more likely an issue with your host environment.

Please file your own issue with much more details. This issue is non-specific and has discussed many different problems, as outlined above.

Resolution was to install docker via apt-get detailed on their page here

What docker did you have installed before?

Another note: I'm not seeing a kindest/node image with 'latest' tag-- I had to explicitly specify image when running 'kind create' like so: 'kind create cluster --image kindest/node:v1.18.2'

- There is intentionally no latest tag, it's not supported to use arbitrary images, we've made breaking changes e.g. to implement host reboot support

- Tags that are known are published in the release notes _along_ with their hashes, please specify the digests when using a non-default image to avoid any nasty surprises!
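A sketch of pinning a node image by digest. The tag and digest below are copied from the kind debug output earlier in this thread; the variable and function names are assumptions, and the value should be replaced with the one from the release notes of the kind version actually installed.

```shell
# Sketch only: image reference taken from the debug output quoted in this thread.
NODE_IMAGE="kindest/node:v1.18.2@sha256:7b27a6d0f2517ff88ba444025beae41491b016bc6af573ba467b70c5e8e0d85f"

# Succeed only if an image reference carries a sha256 digest.
has_digest() {
  case "$1" in
    *@sha256:*) return 0 ;;
    *) return 1 ;;
  esac
}

# Create a cluster from the pinned image; extra arguments are passed through.
create_pinned_cluster() {
  kind create cluster --image "${NODE_IMAGE}" "$@"
}
```

A CI script could call `has_digest "${NODE_IMAGE}"` as a guard so that a tag-only reference fails fast.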

https://kind.sigs.k8s.io/docs/user/known-issues/#docker-installed-with-snap

snap is in the known-issue document, the snap docker package has a number of issues, e.g. no access to temp directories. I don't recommend snap for docker and we don't really support this.

I'm going to lock this issue now so as to steer people to please file new issues with sufficient details to identify your problem(s), this issue is no longer tracking any specific bug, and we can better help each of you if you file issues with your specific environment details and logs.

The new issue templates will steer you towards including some of the initial information we need to know, such as the kind version you have installed.

Thanks!

A small note: we've worked around most of the snap issues for now if you're just managing clusters, but I still don't recommend snap for docker.

If you're seeing an issue similar to this, it means the node container exited early for some reason. That usually means the host environment is broken, but occasionally has meant we need to work-around e.g. less common filesystems with device mapper issues.

Please attempt to capture node logs with kind create cluster --retain, kind export logs, and file an issue with the logs uploaded. We'll try to identify the cause based on these.