Kibana: [alerting] When you rotate/change/lose your encrypted saved object key, alerts should go from enabled to disabled

Kibana version:

7.8.0, 7.9.0-BC6

Steps to reproduce:

Set your kibana.yml to have an encryption key:

xpack.encryptedSavedObjects.encryptionKey: 'asdfasfasdfasdfasdfasfasdfasfasdfafds'

Start Kibana, then load or create a custom detection rule from security solution and start it.

Change your encryption key to some other value:

xpack.encryptedSavedObjects.encryptionKey: 'asdfasfasdfasdfasdfasfasdfasfasdfafds-extra-stuff'

Restart Kibana

Wait for a few minutes to see these error messages in your log file:

server log [10:51:28.682] [error][encryptedSavedObjects][plugins] Failed to decrypt "apiKey" attribute: Unsupported state or unable to authenticate data

server log [10:51:28.683] [error][alerting][alerts][plugins][plugins] Executing Alert "95bbf5d9-399d-48fd-9c9d-913a4961c7fd" has resulted in Error: Unable to decrypt attribute "apiKey"

server log [10:51:28.684] [error][encryptedSavedObjects][plugins] Failed to decrypt "apiKey" attribute: Unsupported state or unable to authenticate data

server log [10:51:28.684] [error][alerting][alerts][plugins][plugins] Executing Alert "88610914-dc44-42ab-9150-144c582293db" has resulted in Error: Unable to decrypt attribute "apiKey"

server log [10:52:34.666] [error][encryptedSavedObjects][plugins] Failed to decrypt "apiKey" attribute: Unsupported state or unable to authenticate data

server log [10:52:34.666] [error][alerting][alerts][plugins][plugins] Executing Alert "e2c8beae-bdf6-43cb-a123-9ea1fb040539" has resulted in Error: Unable to decrypt attribute "apiKey"

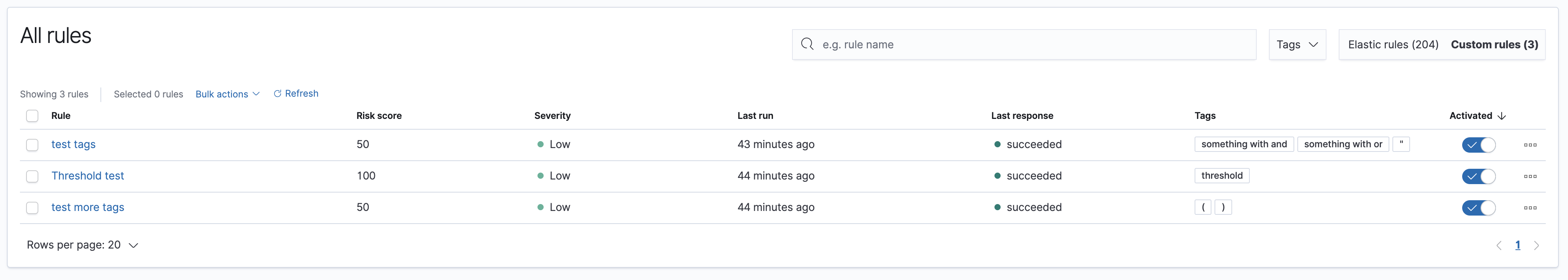

Go back to the detections page under the security solution and notice that your rules still show as enabled and running. Nothing in the UI tells the user that the rules are in a failed state and need to be disabled and re-enabled.

Query against your event log using dev tools:

GET .kibana-event-log-8.0.0/_search
{
  "size": 9000
}

The results do not appear to contain anything other than success entries.
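A narrower query can make this easier to confirm than paging through 9000 documents. The sketch below builds a search body that excludes successful executions. It assumes the event log documents carry an ECS-style `event.outcome` field, which nothing in this issue confirms, and `buildFailureQuery` is a hypothetical helper name.

```typescript
// Hypothetical helper: builds an Elasticsearch search body that excludes
// event log entries whose outcome is "success".
// Assumption: documents expose an ECS-style `event.outcome` keyword field.
interface SearchBody {
  size: number;
  query: {
    bool: {
      must_not: Array<{ term: { [field: string]: string } }>;
    };
  };
}

function buildFailureQuery(size: number): SearchBody {
  return {
    size,
    query: {
      bool: {
        must_not: [{ term: { "event.outcome": "success" } }],
      },
    },
  };
}

// JSON that could be pasted under `GET .kibana-event-log-8.0.0/_search`.
const failureQueryJson: string = JSON.stringify(buildFailureQuery(100), null, 2);
```

If that query returns no hits while the server log is full of decryption errors, it matches the behavior described here: the failures never reach the event log.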

The expectation from user feedback is that the alerts will move themselves into a disabled state, report errors in their event logs, and report to the security solution detections so that it can also show the errors and tell the user to re-enable the alert in order to re-create the API key.

On the detections page, even after running for a while, the rules still show a success from the last run. The only way to know that your rules have not been running is to look at Last Run, which will not have been updated for a period of time. No error messages are bubbled up to tell the user that they need to disable and re-enable the rule to get it running again.

Expectations are that the rule is disabled and that an error message is displayed which informs the user that the key is corrupted and that the rule should be turned back on to generate a new API key.
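To make the expected behavior concrete, here is a minimal sketch of a runner that disables a rule and records an error when its API key cannot be decrypted. Every name in it (`Rule`, `DecryptResult`, `runRule`) is hypothetical and it is not Kibana's alerting code.

```typescript
// Hypothetical sketch, not Kibana's alerting code.
type DecryptResult =
  | { kind: "ok"; apiKey: string }
  | { kind: "error"; message: string };

interface Rule {
  id: string;
  enabled: boolean;
  lastError?: string;
}

// Runs one rule. If the API key cannot be decrypted, the returned rule is
// disabled and carries an error message that a UI could display.
function runRule(rule: Rule, decrypt: (ruleId: string) => DecryptResult): Rule {
  if (!rule.enabled) {
    return rule;
  }
  const result = decrypt(rule.id);
  if (result.kind === "error") {
    return {
      id: rule.id,
      enabled: false,
      lastError:
        "API key could not be decrypted (" + result.message +
        "). Re-enable the rule to generate a new API key.",
    };
  }
  // A real executor would run the rule here using result.apiKey.
  return { id: rule.id, enabled: true };
}
```

The point of the sketch is only that the failure becomes visible state on the rule instead of a line in the server log.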

All 21 comments

Pinging @elastic/kibana-alerting-services (Team:Alerting Services)

Pinging @elastic/siem (Team:SIEM)

This seems like it makes sense - to disable an alert if its API key can't be decrypted.

This could be painful in a case where you had a lot of alerts, mistakenly restarted with a different key, realized that error, changed back to the old one, restarted, and everything's working again. If we disable-on-decrypt error, any alerts executed with the different key would be disabled and then have to be re-enabled. That's quite a stretch of an example, doubt it's worth worrying about, but thought I'd throw it out there.

This doesn't help with actions that are also no longer executable if their secrets are no longer decryptable (another recent issue). Actions don't have an "enabled" state like alerts.

Support for key rotation is coming as well: https://github.com/elastic/kibana/pull/72828.

Given the general issue that we have where we don’t want failed migrations to block Kibana from loading (which is why we reverted them in 7.9, which is now blocking 7.10 https://github.com/elastic/kibana/issues/74404), we probably want to address both these issues in a uniform manner.

I just threw up a draft PR (https://github.com/elastic/kibana/pull/74408) if anyone wants to take a gander at it. It would allow for an optional callback to the AlertType when an error is caught to bubble that back up to the registering plugin.

Draft PR for the disabling of the alert if it cannot decode its API key (https://github.com/elastic/kibana/issues/74393) for anyone that wants to look.

Support for key rotation is coming as well: #72828.

I think that's great, but I see it as somewhat orthogonal. The issue we still face is that users and/or bugs can leave the API key corrupted so that it cannot be decoded after an upgrade, and when we detect that an API key cannot be decoded we will want to deactivate the rule.

This doesn't help with actions that are also no longer executable if their secrets are no longer decryptable (another recent issue). Actions don't have an "enabled" state like alerts.

That's true ... and those potentially wouldn't be recoverable either if the key is lost. I think we have to plan for when the encryptedSavedObjects key is no longer recoverable permanently for any variety of reasons and alert the user through error messages that they are going to have to double check their settings before re-enabling.

Perhaps @dhurley14's PR should detect when the error is of a particular type, such as API key loss, and let the user know that if they cannot recover their key they will have to reset several pieces of state manually?

If we disable-on-decrypt error, any alerts executed with the different key would be disabled and then have to be re-enabled.

If we have to choose between two "evils", that feels like the lesser of the two. The reports we are getting from internal teams are that corrupted API keys give no feedback that would let us tell users their alerts have not been functioning, because the alerts are still in an enabled state, and that they have potentially missed signals for several days after an upgrade, which is unsettling to them.

Thanks for taking the time to look into a potential solution for this @dhurley14. Before deciding on a solution, I'd like the alerting team to have some time to experiment with the issues and get a better understanding of the underlying problem.

Am I right to assume these are the three scenarios?

- The key was rotated and the original is lost

- The kibana instance was using a dev key and moved to prod resulting in lost key

- The user has multiple kibana instances with different encryption keys by accident

Am I right to assume these are the three scenarios?

Yes, and two more mis-use cases if it counts which are not directly related to the encrypted saved object key but rather just the individual API key generically:

- User accidentally corrupts or overwrites an API key directly within Kibana

- User revokes/deletes an API key or all of them directly from the user management screens of Kibana

I posted some thoughts here that relate to how we might want to address this: https://github.com/elastic/kibana/pull/74416#discussion_r466265447

The moving parts I've already identified:

I want to make sure whichever solution we go with, it addresses the different cases we have:

- What happens when decryption fails in migration?

- What happens when it fails due to an operation in the AlertsClient?

- What happens when it fails as part of a task run?

- Do we want to actually disable at the Saved Object level or make it a "logical" disable, so that we can differentiate between an Alert that was disabled on purpose and an Alert disabled due to an inability to decrypt?

- How do we notify the user about this? We definitely need this to appear in the Event Log, so just disabling isn't enough. Do we need to notify the producer? Do we need to notify the consumer? How best to do this?
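One way to picture the "logical" disable from the list above is to keep the user's intent and the system's verdict in separate fields. This is only a sketch under that assumption; `AlertState`, `suspendedReason`, `shouldRun` and `describeState` are hypothetical names, not fields of the real alert saved object.

```typescript
// Hypothetical model: `enabled` records what the user asked for, while
// `suspendedReason` records a system-imposed stop such as a decryption failure.
type SuspendedReason = "decryption";

interface AlertState {
  enabled: boolean;
  suspendedReason?: SuspendedReason;
}

// An alert is scheduled only if the user enabled it and nothing suspended it.
function shouldRun(state: AlertState): boolean {
  return state.enabled && state.suspendedReason === undefined;
}

// Explains why an alert is or is not running, so a UI can tell the cases apart.
function describeState(state: AlertState): string {
  if (!state.enabled) {
    return "disabled by user";
  }
  if (state.suspendedReason !== undefined) {
    return "suspended: " + state.suspendedReason;
  }
  return "running";
}
```

With a split like this, restarting Kibana with the original key could clear `suspendedReason` without anyone having to re-enable alerts by hand, which speaks to the restart-with-the-wrong-key scenario raised earlier in the thread.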

Once we introduce code that naively disables an alert when it fails to decrypt, we'll need to support that _case_ going forward (we already have lots of _cases_ that we need to support and consider in every change we make [such as _alerts created before we required a non-ephemeral key_, _alerts created without a consumer prior to 7.7_, etc.], so let's not rush to add another _case_ to the list).

Understanding there is more discussion to be had - I'm curious if this PR https://github.com/elastic/kibana/pull/74408 in the interim could resolve the issue of at least signaling to the users that their rules are not running right now. It is probably not a good idea to push this for 7.9.0 but maybe 7.9.1? Just checking if this avenue is worth exploring further from my side.

Hi @dhurley14, I appreciate the efforts of putting a draft proposal together. I have a feeling the solution for this issue will be something the alerting team will take on. We’re going to discuss this internally early next week as we want to avoid adding quick workarounds that don't consider the concerns @gmmorris mentioned above. We’ll have some updates on this next week.

Hi @dhurley14,

We've discussed the issue internally today and have decided to go with the following approach:

- [7.10] Alert statuses #51099

With this issue, we're planning to add a status to each alert. This will be set to error whenever the alert fails to run and we'll make sure decryption failures are part of the error status. You will be able to query alerts by status using one of the find APIs (we're still discussing the implementation details but with this feature in mind).

With this, you will be able to know which rules are failing to run.

- [7.10] Display a banner to users when some alerts have failures #74778

This is specific to alert management, but a banner would be displayed whenever there are alerts that failed to run. This will use the same APIs provided by step 1, which can also be used in SIEM.

- Check alerts and actions periodically to see if they can be decrypted #74780

This will periodically check alerts to make sure they can be decrypted. This will be useful for alerts that have a long interval as we can display a banner even before they run next.
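The periodic check in the last item could look roughly like the sketch below. It is an illustration only: `StoredAlert`, `findUndecryptable` and the `tryDecrypt` callback are hypothetical stand-ins, and the real encrypted saved objects API is not shown.

```typescript
// Hypothetical shape of a stored alert; only the fields the check needs.
interface StoredAlert {
  id: string;
  encryptedApiKey: string;
}

// Returns the ids of alerts whose API key cannot be decrypted.
// `tryDecrypt` stands in for whatever decryption routine is available and
// is assumed to return null when decryption fails.
function findUndecryptable(
  alerts: StoredAlert[],
  tryDecrypt: (ciphertext: string) => string | null
): string[] {
  const failing: string[] = [];
  for (const alert of alerts) {
    if (tryDecrypt(alert.encryptedApiKey) === null) {
      failing.push(alert.id);
    }
  }
  return failing;
}
```

Run on a schedule, a non-empty result would be enough to raise the banner before a long-interval alert reaches its next execution.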

I think the first item will give you all the tools you need to capture all the scenarios discussed above and to create a UX in SIEM. Please let me know if you think otherwise.
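As a rough picture of how a consumer such as SIEM might use the first item, the sketch below derives a status from the last run and filters alerts by it. The find API was still being designed at this point, so `AlertSummary`, `statusFromRun` and `findByStatus` are hypothetical and only model the idea in memory.

```typescript
// Hypothetical status values; the thread only confirms an "error" status.
type AlertStatus = "ok" | "error";

interface AlertSummary {
  id: string;
  status: AlertStatus;
}

// Derives a status from the outcome of the most recent run. A decryption
// failure counts as a failed run.
function statusFromRun(succeeded: boolean): AlertStatus {
  return succeeded ? "ok" : "error";
}

// In-memory stand-in for querying alerts by status.
function findByStatus(alerts: AlertSummary[], status: AlertStatus): AlertSummary[] {
  return alerts.filter((alert) => alert.status === status);
}
```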

@mikecote First item sounds good to me thank you everyone.

One question though - is there an expectation the error message will be available if / when alerts fail to run? Either due to a failure within the alert executor or a failure occurring outside of alert executor like in the case of a decryption failure? It would be nice to have access to that message so we could display that in our banner on our rule details page. I also feel this flows into a discussion on alert monitoring in general but that may be beyond the scope of this more immediate ask.

@dhurley14

One question though - is there an expectation the error message will be available if / when alerts fail to run? Either due to a failure within the alert executor or a failure occurring outside of alert executor like in the case of a decryption failure? It would be nice to have access to that message so we could display that in our banner on our rule details page. I also feel this flows into a discussion on alert monitoring in general but that may be beyond the scope of this more immediate ask.

We are planning to capture a reason and message for the error. The reason field would contain a value like decryption, authorization, execution, etc. that you would be able to filter on. We are also planning to capture the message, but this may change if there are places where we'd expose information that shouldn't be exposed (e.g. sensitive information, API keys, etc.).
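A sketch of what a reason plus message could look like on the consuming side follows. The three reason values come from the comment above; everything else (`ExecutionError`, `redactSecrets`, `filterByReason`, and the redaction pattern) is an assumption for illustration and not the planned implementation.

```typescript
// Reason values taken from the comment above; the list may be incomplete.
type ErrorReason = "decryption" | "authorization" | "execution";

interface ExecutionError {
  alertId: string;
  reason: ErrorReason;
  message: string;
}

// Illustrative redaction: masks anything that looks like `apiKey=...` or
// `api_key: ...` before the message is shown to a user.
function redactSecrets(message: string): string {
  return message.replace(/(api[_-]?key\s*[:=]\s*)\S+/gi, "$1[redacted]");
}

// Keeps only the errors with the given reason.
function filterByReason(errors: ExecutionError[], reason: ErrorReason): ExecutionError[] {
  return errors.filter((error) => error.reason === reason);
}
```

A rule details page could then show only `decryption` errors in its banner and pass each message through a redaction step before rendering it.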

Been a while since we churned on this :-), here's the latest status as of 7.10, re: https://github.com/elastic/kibana/issues/74393#issuecomment-672114161

- [7.10] Alert statuses #51099 [MERGED in 7.10]

- [7.10] Display a banner to users when some alerts have failures #74778 [MERGED in 7.10]

- Check alerts and actions periodically to see if they can be decrypted #74780 [OPEN]

Did we still want to do point 3? Or do we think 1 + 2 will suffice for now?

- [7.10] Alert statuses #51099 [MERGED in 7.10]

- [7.10] Display a banner to users when some alerts have failures #74778 [MERGED in 7.10]

1 + 2 gets us to about 90% coverage so thank you for that :)

- Check alerts and actions periodically to see if they can be decrypted #74780 [OPEN]

The last 10% of coverage would come from the scenario I think @hmnichols mentioned during one of the RBAC meetings, where our users may not even log into the detections page to check if their rules are failing. Item 3 could feasibly get the security solution to a place where we could notify the users through an action with a summary of failures or something like that. I'm wondering if, with @YulNaumenko's PR https://github.com/elastic/kibana/pull/79056, we could build on top of this task and attach an action to it such that failures would optionally result in an email / Slack notification, etc., to notify the user to check in on their alerts / rules. What do you all think?

I'm wondering if, with @YulNaumenko's PR #79056, we could build on top of this task and attach an action to it such that failures would optionally result in an email / Slack notification, etc., to notify the user to check in on their alerts / rules. What do you all think?

Sounds interesting. An alert based on some internal Kibana state. Would we have an alert per different state thing that could be checked? Or could we have some general "Kibana state" alert, where maybe you could select the things you were interested in being informed about?

Perhaps we could start with an alert specifically for Kibana state, as it has a lot of potential "inner" things you could select on, see how it goes. Those "inner" things are probably all plugin ids, so not terribly user friendly. Maybe it triggers when any health indicator "goes down"?

I wonder how well this will work out in practice, in a multi-Kibana-instance deployment; we'd definitely want to send the Kibana server uuid with the alert variables, though I'm not sure having that provides any value to a customer (eg, I don't think it shows up in Cloud deployment info).

@Aris thoughts?

Item 3 could feasibly get the security solution to a place where we could notify the users through an action with a summary of failures or something like that. I'm wondering if, with @YulNaumenko's PR #79056, we could build on top of this task and attach an action to it such that failures would optionally result in an email / Slack notification, etc., to notify the user to check in on their alerts / rules. What do you all think?

From what I recall, we decided not to rely on our framework to notify when something is wrong, but rather to have a set of health APIs that a third-party service could monitor so that it can notify users itself if something is wrong. We are still planning to work on meta alerts in the future, but they wouldn't be a 100% solution any more than the health APIs are.

One example is if the encryption key is changed, the alerts will fail to run but also the email connector due to not being able to decrypt credentials to communicate with it.
