Kibana: Logstash Monitoring UI does not display 5.6 data unless a 6.x instance is also sending data

Kibana version: 6.4.1

Elasticsearch version: 6.4.1

Describe the bug: Logstash will not display in the monitoring UI unless at least one Logstash instance running 6.0+ is writing monitoring data. The UI seems to rely on the presence of data in the logstash_stats.pipelines:[] object.

Steps to reproduce:

- Start up Kibana & Elasticsearch 6.4.1

- Send monitoring data from Logstash 5.6.x

- Logstash will not be available in the Monitoring UI landing page

- Send monitoring data from Logstash 6.x. Both Logstash instances will be visible in the UI

cc: @chrisronline

@justinkambic Any thoughts on this?

@chrisronline I think I have a decent idea why this is happening, but I will reproduce and let you know when I've actually seen it.

It is probably not a quick fix.

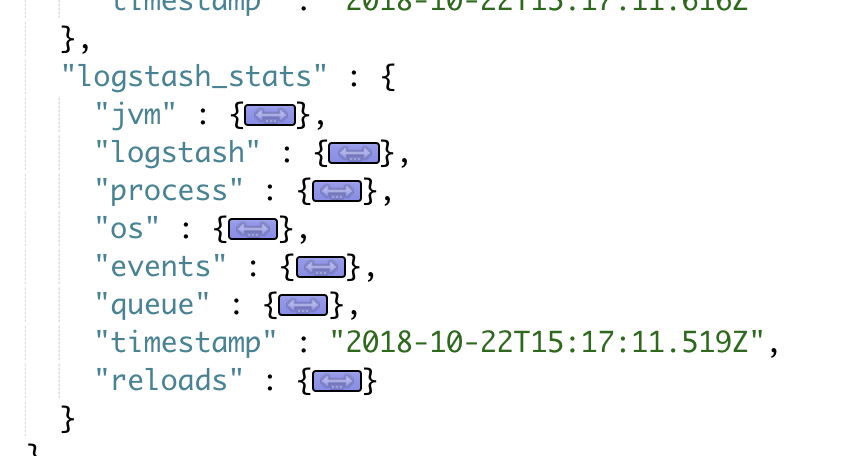

Ok - reasoning behind this is a change in the shape of the data LS is indexing across those versions. Here's a sample document from 5.6.0:

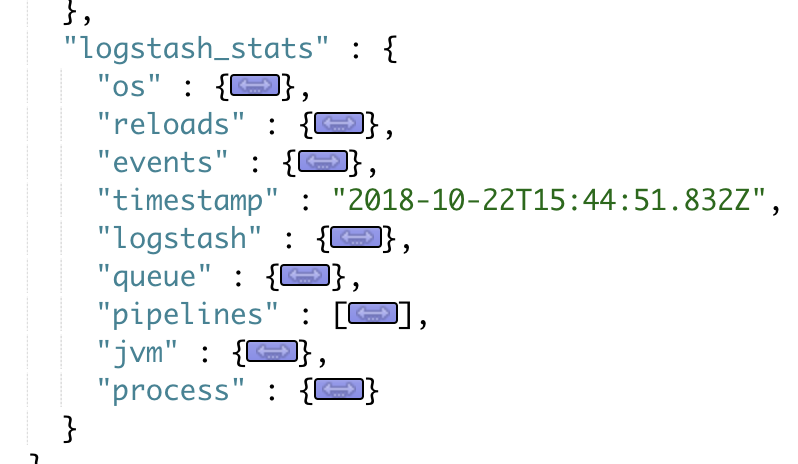

Here's a sample from 6.4.x:

The _pipelines_ field is not present in the older version's documents, and there is a series of aggs built from that field.

So, why are we not showing _anything_ until a pipeline is detected? In the past, we were attempting to solve the issue of data being out of sync between the cluster overview page and the dedicated Logstash views, as explained in https://github.com/elastic/x-pack-kibana/issues/4517

We attempted to solve that problem by implementing the change that's causing this issue in https://github.com/elastic/x-pack-kibana/pull/4989

Obviously when we implemented the changes, we didn't anticipate that older LS versions' data could lack the fields we were expecting the monitoring documents to have. Two obvious solutions to this issue I can think of are:

- Revert: Show the monitoring data before any pipeline counts are known. Not ideal because it exposes us to the same issue we were attempting to solve in the first place

- Update Query: Modify the parameters for the query to account for the issue.

cc @ycombinator

More background: Logstash began supporting multiple pipelines in 6.0.0, hence the absence of the field in the document above.

Based on that information, I think it makes sense to simply update the existing functionality to add another agg that finds any instance that is pre-6.0.0 and counts each one as 1 pipeline. The _logstash_stats.logstash_ field in the documents contains the LS version, and those documents notably lack the pipelines field, so there are some differentiating factors. Please poke holes in this solution.
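To make the proposal concrete, here is a rough client-side sketch of the counting rule, not the actual Kibana aggregation. The `LogstashStatsDoc` interface, the `uuid` and `version` sub-fields, and the `countPipelines` helper are all assumptions made for illustration, as is the choice to count distinct pipeline ids across 6.x instances.

```typescript
// Hypothetical, trimmed-down shape of a Logstash monitoring document.
// The `uuid` and `version` sub-field names are assumptions for this sketch.
interface LogstashStatsDoc {
  logstash_stats: {
    logstash: { uuid: string; version: string };
    pipelines?: Array<{ id: string }>; // absent in pre-6.0.0 documents
  };
}

// Counts pipelines, treating every pre-6.0.0 instance as exactly one pipeline.
function countPipelines(docs: LogstashStatsDoc[]): number {
  const pipelineIds = new Set<string>(); // distinct pipeline ids reported by 6.x instances
  const legacyInstances = new Set<string>(); // distinct pre-6.0.0 instances

  for (const doc of docs) {
    const { logstash, pipelines } = doc.logstash_stats;
    // parseInt stops at the first non-digit, so "5.6.0" parses to 5.
    if (parseInt(logstash.version, 10) < 6) {
      legacyInstances.add(logstash.uuid);
    } else {
      for (const pipeline of pipelines ?? []) {
        pipelineIds.add(pipeline.id);
      }
    }
  }

  return pipelineIds.size + legacyInstances.size;
}
```

A 5.6.x instance that has written several documents still contributes a single pipeline here, because it is keyed by instance rather than by document.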

Thanks for digging into this, @justinkambic ❤️.

One clarification: in your sample documents comment above, you said that a document from 6.5.0 doesn't have the pipelines field. Did you really mean 6.5.0 or an older version? If you really did mean 6.5.0 then it seems a bit odd to me that 6.4.x docs would have the field but then it was removed in 6.5.0. So just want to clarify before commenting further whether you really meant 6.5.0 in your comment or if it was just a typo.

Thanks @ycombinator I definitely did not mean to put 6.5.0; I've fixed it to say 5.6.0, which is the version mentioned in the repro steps (5.6.x). I totally missed that typo.

Thanks for clarifying, @justinkambic. I should've read the repro steps 🤦♂️.

From purely a UX perspective, what if we didn't show the "Pipelines" link in the Logstash panel on the Cluster Overview page if none of the docs had the pipelines field? Perhaps the missing aggregation could help here?

So, the thing that appealed to me as far as counting older version instances as 1 pipeline is that we can keep the UX unchanged while solving the bug. I spoke to @andrewvc and he didn't seem to think there was a problem with counting pre-6.0.0 LS as 1 pipeline per instance.

The alternative of hiding the pipelines link when the value of the _pipelines_ agg is 0 is obviously a better solution than the current UX. Would it be useful to do a hybrid of both solutions? It seems to me if we implement the first proposed fix it will make the second one redundant.

Ah, I get it now. I'm good with the proposal of counting pre-6.0.0 LS instances as 1 pipeline each (since that's accurately what's happening in LS). My only tweak to that solution would be to not use an actual version comparison check but base it on the schema instead (i.e. lack of pipelines field), if possible.
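As a rough illustration of the schema-based check, the sketch below classifies documents purely by whether the pipelines field is present, with no version parsing. It is a client-side analog only and not the query Kibana runs; the `MonitoringDoc` interface and both helper names are hypothetical.

```typescript
// Hypothetical minimal document shape; only the field relevant to the check is modeled.
interface MonitoringDoc {
  logstash_stats: {
    pipelines?: unknown[]; // present for 6.0+ instances, absent for older ones
  };
}

// Schema-based test: a document is "legacy" when the pipelines field is absent.
function lacksPipelinesField(doc: MonitoringDoc): boolean {
  return doc.logstash_stats.pipelines === undefined;
}

// Rough analog of a missing-style bucket count: how many docs have no pipelines field.
function countDocsMissingPipelines(docs: MonitoringDoc[]): number {
  return docs.filter(lacksPipelinesField).length;
}
```

Note that this sketch treats an empty `pipelines: []` as having the field. A server-side check in Elasticsearch may treat an empty array as having no value, so the two are not guaranteed to agree on that edge case.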

Totally agree - thank you for the recommendation of the missing agg, that's a great idea. I think the next step here is I can throw up a PR and we can continue discussing technical details there? Should be a small change after all.

Hi @justinkambic. A long delay here but I just wanted to see if by chance you ever put up a PR as mentioned in your last comment on this issue? Thanks!

@cachedout this seems to have gotten lost in the shuffle for me, I apologize. This issue was created right around the time we were ramping up on Uptime and I failed to prioritize it.

Things are a little less crazy now; I should be able to find some time to work on it this week! Thanks for the reminder.

@justinkambic No worries at all. Thanks!

@cachedout given that the latest version of Logstash (AFAIK) affected by this issue is 6.4, which isn't compatible with master Kibana/ES, @chrisronline and I are thinking this fix should be merged to the 6.7 branch only.

@justinkambic That seems like the right course of action to me.