Keras: Training works in keras 2.2.2 but not in 2.2.3 and 2.2.4

Hi, I'm training a semantic image segmentation model.

I've built a generator that yields:

(images, masks, sample_weights)

With a batch size of 8, these have the following shapes:

( (8, 256, 256, 3) , (8, 65536, 1) , (8, 65536) )

When compiling, I also set sample_weight_mode = "temporal".

This is the error I'm getting (it looks like these versions reshape the validation labels into a 1D vector):

InvalidArgumentError: Incompatible shapes: [524288] vs. [8,65536]

[[Node: metrics/acc/Equal = Equal[T=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:GPU:0"](metrics/acc/Reshape, metrics/acc/Cast)]]

[[Node: training/Adam/gradients/activation_1_1/concat_grad/Slice_1/_799 = _Recv[client_terminated=false, recv_device="/job:localhost/replica:0/task:0/device:GPU:1", send_device="/job:localhost/replica:0/task:0/device:CPU:0", send_device_incarnation=1, tensor_name="edge_2406_training/Adam/gradients/activation_1_1/concat_grad/Slice_1", tensor_type=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:GPU:1"]()]]

When I set batch_size=1 it works.
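A NumPy-only sketch of the shapes involved may help explain both the error and why batch_size=1 hides it. It assumes (the error message alone does not prove this) that the accuracy metric flattens the labels before comparing them with the argmax of the predictions, and that the comparison broadcasts the way NumPy does. The function names and default sizes are illustrative, taken from the shapes quoted above.

```python
import numpy as np


def metric_shapes(batch_size, n_pixels=65536, n_classes=22):
    """Shapes the accuracy metric would compare if it flattens the labels (assumption)."""
    y_true = np.zeros((batch_size, n_pixels, 1), dtype=np.int8)          # labels from the generator
    y_pred = np.zeros((batch_size, n_pixels, n_classes), dtype=np.int8)  # stand-in for the softmax output
    flat_true = y_true.reshape(-1)         # every axis collapsed into one
    pred_classes = y_pred.argmax(axis=-1)  # (batch_size, n_pixels)
    return flat_true.shape, pred_classes.shape


def can_broadcast(shape_a, shape_b):
    """True if two shapes are compatible under NumPy-style broadcasting."""
    try:
        np.broadcast(np.empty(shape_a, dtype=np.int8), np.empty(shape_b, dtype=np.int8))
        return True
    except ValueError:
        return False


print(metric_shapes(8))                  # ((524288,), (8, 65536))
print(can_broadcast(*metric_shapes(8)))  # False
print(can_broadcast(*metric_shapes(1)))  # True
```

With a batch of 8 the two shapes match the ones in the error message and cannot be broadcast. With a batch of 1, (65536,) and (1, 65536) are broadcast-compatible, which would explain why training runs in that case.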


Can you give us a script to reproduce?

Sure. Training is on the VOC dataset

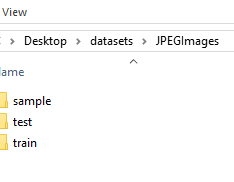

The training images in:

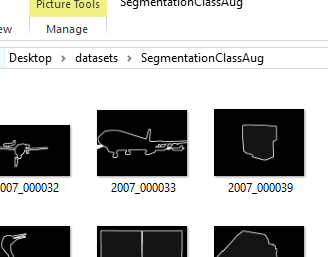

The masks in:

from collections import Counter

import numpy as np
from keras.applications import imagenet_utils
from keras.layers import (Activation, BatchNormalization, Concatenate, Conv2D,
                          Conv2DTranspose, Input, MaxPooling2D, Reshape)
from keras.models import Model
from keras.optimizers import Adam
from keras.preprocessing.image import ImageDataGenerator

# PATH (the dataset root directory) must be defined before running; it was not shown in the post.

def create_unet(image_size, n_classes):
    s = Input(image_size+(3,))
    # Encoder
    c1 = Conv2D(8, 3, activation='relu', padding='same') (s)
    c1 = BatchNormalization()(c1)
    c1 = Conv2D(8, 3, activation='relu', padding='same') (c1)
    p1 = MaxPooling2D() (c1)
    c2 = Conv2D(16, 3, activation='relu', padding='same') (p1)
    c2 = BatchNormalization()(c2)
    c2 = Conv2D(16, 3, activation='relu', padding='same') (c2)
    p2 = MaxPooling2D() (c2)
    c3 = Conv2D(32, 3, activation='relu', padding='same') (p2)
    c3 = Conv2D(32, 3, activation='relu', padding='same') (c3)
    p3 = MaxPooling2D() (c3)
    c4 = Conv2D(64, 3, activation='relu', padding='same') (p3)
    c4 = Conv2D(64, 3, activation='relu', padding='same') (c4)
    p4 = MaxPooling2D() (c4)
    c5 = Conv2D(128, 3, activation='relu', padding='same') (p4)
    c5 = Conv2D(128, 3, activation='relu', padding='same') (c5)
    # Decoder with skip connections
    u6 = Conv2DTranspose(64, 2, strides=(2, 2), padding='same') (c5)
    u6 = Concatenate(axis=3)([u6, c4])
    c6 = Conv2D(64, 3, activation='relu', padding='same') (u6)
    c6 = Conv2D(64, 3, activation='relu', padding='same') (c6)
    u7 = Conv2DTranspose(32, 2, strides=(2, 2), padding='same') (c6)
    u7 = Concatenate(axis=3)([u7, c3])
    c7 = Conv2D(32, 3, activation='relu', padding='same') (u7)
    c7 = Conv2D(32, 3, activation='relu', padding='same') (c7)
    u8 = Conv2DTranspose(16, 2, strides=(2, 2), padding='same') (c7)
    u8 = Concatenate(axis=3)([u8, c2])
    c8 = Conv2D(16, 3, activation='relu', padding='same') (u8)
    c8 = Conv2D(16, 3, activation='relu', padding='same') (c8)
    u9 = Conv2DTranspose(8, 2, strides=(2, 2), padding='same') (c8)
    u9 = Concatenate(axis=3)([u9, c1])
    c9 = Conv2D(8, 3, activation='relu', padding='same') (u9)
    c9 = BatchNormalization()(c9)
    c9 = Conv2D(8, 3, activation='relu', padding='same') (c9)
    c9 = BatchNormalization()(c9)
    # Per-pixel class scores, flattened to (H*W, n_classes)
    outputs = Conv2D(n_classes, 1) (c9)
    outputs = Reshape((image_size[0]*image_size[1], n_classes)) (outputs)
    outputs = Activation('softmax') (outputs)
    model = Model(inputs=[s], outputs=[outputs])
    return model

def preprocess_input(x):
    return imagenet_utils.preprocess_input(x, mode='tf')

def preprocess_mask(x):
    x = x.astype('int32')
    th = round(x.shape[0]*x.shape[1]*0.005) # classes covering less than 0.5% of the image are set to 255
    x[(x>20) & (x<255)] = 255
    ctr = Counter(x.flatten())
    for k in ctr.keys():
        if ctr[k]<th:
            x[x==k] = 255
    return x

def segmentation_generator(image_gen, mask_gen):
    while True:
        X = image_gen.next()
        y = mask_gen.next()
        y = np.reshape(y, (-1, np.prod(mask_gen.image_shape), 1))
        y = y.astype('int32')
        sample_weights = np.ones(y.shape)
        sample_weights[y==0] = .1
        sample_weights[y==255] = 0
        y[y==255]=21
        yield X, y, sample_weights[...,0]

data_trn_gen_args_mask = dict(preprocessing_function = preprocess_mask,
                              horizontal_flip=True,
                              rotation_range=45,
                              width_shift_range=0.01,
                              height_shift_range=0.01,
                              zoom_range=0.2,
                              validation_split = .2,
                              )

data_trn_gen_args_image = dict(preprocessing_function = preprocess_input,
                               horizontal_flip=True,
                               rotation_range=45,
                               width_shift_range=0.01,
                               height_shift_range=0.01,
                               zoom_range=0.2,
                               validation_split = .2,
                               channel_shift_range = .2
                               )

model = create_unet(image_size = (256,256), n_classes = 22)

model.compile(optimizer = Adam(), sample_weight_mode = "temporal",
              loss = 'sparse_categorical_crossentropy',
              metrics = ['acc'])

mask_datagen = ImageDataGenerator(**data_trn_gen_args_mask)
image_datagen = ImageDataGenerator(**data_trn_gen_args_image)

image_generator = image_datagen.flow_from_directory(PATH+'JPEGImages',
                                                    classes = ['train'],
                                                    class_mode=None,
                                                    batch_size=8, shuffle=True,
                                                    seed=29)

mask_generator = mask_datagen.flow_from_directory(PATH+'JPEGImages',
                                                  color_mode = 'grayscale',
                                                  classes = ['SegmentationClassAug'],
                                                  class_mode=None,
                                                  batch_size=8,
                                                  shuffle=True, # was shuffle=shuffle (undefined); must match image_generator
                                                  seed=29)

train_generator = segmentation_generator(image_generator, mask_generator)

h = model.fit_generator(train_generator, class_weight = None,
                        steps_per_epoch=1000,
                        epochs = 10, verbose=1,
                        max_queue_size=10,
                        workers=8, use_multiprocessing=True)
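To check what the generator actually hands to Keras, the label and weight step of segmentation_generator can be pulled out into a small function and run on a tiny mask, without the image pipeline. This is only a sketch of that step: the function name and keyword arguments are hypothetical, and the values (0.1 for background, 0 for the 255 "ignore" pixels, which are relabelled as class 21) are copied from the script above.

```python
import numpy as np


def labels_and_weights(mask_batch, ignore_value=255, ignore_class=21,
                       background_weight=0.1):
    """Turn a (batch, H, W, 1) mask batch into temporal labels and weights.

    Returns labels of shape (batch, H*W, 1) and weights of shape (batch, H*W),
    the layout expected with sample_weight_mode="temporal".
    """
    batch = mask_batch.shape[0]
    y = mask_batch.reshape(batch, -1, 1).astype('int32')  # astype copies, the input is untouched
    weights = np.ones(y.shape, dtype='float32')
    weights[y == 0] = background_weight   # down-weight background pixels
    weights[y == ignore_value] = 0.0      # ignored pixels contribute nothing
    y[y == ignore_value] = ignore_class   # keep labels inside [0, n_classes)
    return y, weights[..., 0]


# A single 2x2 mask: background, class 5, ignore, background
mask = np.array([[0, 5], [255, 0]]).reshape(1, 2, 2, 1)
y, w = labels_and_weights(mask)
print(y.shape, w.shape)  # (1, 4, 1) (1, 4)
print(y[0, :, 0])        # [ 0  5 21  0]
```

The labels keep a trailing axis of size 1 while the weights do not, which matches the (8, 65536, 1) and (8, 65536) shapes reported at the top of the thread.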

I have the same issue: it works with Keras 2.2.2, but not with 2.2.3 or 2.2.4.

Simple example:

import numpy as np
from keras.models import Model
from keras.layers import Input, Conv1D, Reshape, Activation

inp = Input((None, 12))
conv = Conv1D(48, kernel_size=1, activation='linear')(inp)
out = Reshape((-1, 3, 16))(conv)
act = Activation('softmax')(out)
model = Model(inputs=inp, outputs=act)
model.compile('adam', 'sparse_categorical_crossentropy', metrics=['acc'])
model.summary()

inp_data = np.random.rand(32, 10, 12)
out_data = np.random.randint(0, 16, size=(32, 10, 3, 1))
model.fit(inp_data, out_data)

Traceback:

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/home/tom/.ve/lib/python3.7/site-packages/keras/engine/training.py", line 1039, in fit
    validation_steps=validation_steps)
  File "/home/tom/.ve/lib/python3.7/site-packages/keras/engine/training_arrays.py", line 199, in fit_loop
    outs = f(ins_batch)
  File "/home/tom/.ve/lib/python3.7/site-packages/keras/backend/tensorflow_backend.py", line 2715, in __call__
    return self._call(inputs)
  File "/home/tom/.ve/lib/python3.7/site-packages/keras/backend/tensorflow_backend.py", line 2675, in _call
    fetched = self._callable_fn(*array_vals)
  File "/usr/lib/python3.7/site-packages/tensorflow/python/client/session.py", line 1399, in __call__
    run_metadata_ptr)
  File "/usr/lib/python3.7/site-packages/tensorflow/python/framework/errors_impl.py", line 526, in __exit__
    c_api.TF_GetCode(self.status.status))
tensorflow.python.framework.errors_impl.InvalidArgumentError: Incompatible shapes: [960] vs. [32,10,3]
  [[{{node metrics/acc/Equal}} = Equal[T=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:GPU:0"](metrics/acc/Reshape, metrics/acc/Cast)]]
  [[{{node loss/mul/_53}} = _Recv[client_terminated=false, recv_device="/job:localhost/replica:0/task:0/device:CPU:0", send_device="/job:localhost/replica:0/task:0/device:GPU:0", send_device_incarnation=1, tensor_name="edge_409_loss/mul", tensor_type=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:CPU:0"]()]]

It seems to be caused by the accuracy metric; without it, training works fine in the latest versions as well.

sparse_categorical_accuracy seems to cause the same issue
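If the metric is dropped from compile() as a stopgap, accuracy can still be computed outside Keras from the predicted probabilities. The following is a minimal NumPy sketch, not Keras's own implementation: the function name is made up, and it assumes the probabilities come from something like model.predict with the class axis last, and that the labels hold one class id per prediction (with or without a trailing axis of size 1).

```python
import numpy as np


def sparse_accuracy(y_true, y_prob, sample_weight=None):
    """Fraction of positions where argmax(y_prob) equals the integer label.

    y_true: integer labels, shape (..., ) or (..., 1)
    y_prob: class probabilities, shape (..., n_classes)
    sample_weight: optional weights with the same shape as the argmax output
    """
    pred_classes = np.asarray(y_prob).argmax(axis=-1)
    # Bring the labels to the prediction layout instead of flattening them
    labels = np.asarray(y_true).reshape(pred_classes.shape)
    hits = (labels == pred_classes).astype('float64')
    if sample_weight is None:
        return float(hits.mean())
    weights = np.asarray(sample_weight, dtype='float64')
    return float((hits * weights).sum() / weights.sum())


# Toy check: 2 samples, 3 positions each, 4 classes
pred = np.array([[1, 2, 3], [0, 0, 0]])
probs = np.eye(4)[pred]                    # one-hot "probabilities", shape (2, 3, 4)
labels = np.array([[1, 2, 0], [0, 1, 0]])  # 4 of the 6 positions match
print(sparse_accuracy(labels[..., None], probs))
```

Passing the same per-position weights used for training as sample_weight makes ignored positions (weight 0) drop out of the average.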

I hit the same problem when training with Keras 2.2.4.

I tested this function:

def test_mse_bug():
    inp = Input((None, 12))
    conv = Conv1D(48, kernel_size=1, activation='linear')(inp)
    out = Reshape((-1, 3, 16))(conv)
    act = Activation('softmax')(out)
    model = Model(inputs=inp, outputs=act)
    model.compile('adam', 'sparse_categorical_crossentropy', metrics=['acc'])
    model.summary()
    inp_data = np.random.rand(32, 10, 12)
    out_data = np.random.randint(0, 16, size=(32, 10, 3, 1))
    model.fit(inp_data, out_data)

It ran fine with the latest master and TF 1.9, so I think this bug has been fixed on the master branch since 2.2.4. Closing this issue. Feel free to post a script to reproduce if you think I made a mistake.

The issue happens with tf 1.11

My bad. Keras is currently tested against TF 1.9. I'll make a PR to update the tests for 1.11/1.12. Hopefully that will let us track this bug better. Thanks for the feedback. As a quick fix, I recommend using tf.keras. It has the same API, but it might not have this bug.

I added a test for this bug in #11602 with TF 1.12 and Python 3.6. There doesn't seem to be a bug. Could you upgrade TF and report back?

I haven't tested with 3.6 but with 3.5 and tf 1.12, the bug's still there.

Under python 3.5, I tested with tf 1.7 through 1.12, and the bug is there. So it seems the problem is with keras 2.2.3, 2.2.4 and python 3.5

It's very strange. In any case, if we can't reproduce the bug, it's going to be really hard to fix it. I'll give Python 3.5 a shot, but I don't think the Python version matters for this bug.
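Since the reports in this thread disagree on which Python, TensorFlow and Keras combinations trigger the error, it may help to attach exact versions to each report. Below is a small, hypothetical helper (not part of Keras) that reads installed package versions without importing the packages. It assumes Python 3.8 or newer for importlib.metadata, and that the PyPI distribution names are "keras", "tensorflow" and "tensorflow-gpu"; on the older interpreters mentioned above, printing keras.__version__ and tf.__version__ directly serves the same purpose.

```python
import sys
from importlib import metadata  # available from Python 3.8


def environment_report(packages=("keras", "tensorflow", "tensorflow-gpu", "numpy")):
    """Return a dict of the Python version and installed package versions.

    Packages that are not installed are reported as None.
    """
    report = {"python": "{}.{}.{}".format(*sys.version_info[:3])}
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = None
    return report


for name, version in environment_report().items():
    print(name, version)
```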

Having the same issue. Broke after update to 2.2.4 with pip.

It's caused by K.flatten in

def sparse_categorical_accuracy(y_true, y_pred):
    # flatten y_true in case it's in shape (num_samples, 1) instead of (num_samples,)
    return K.cast(K.equal(K.flatten(y_true),
                          K.cast(K.argmax(y_pred, axis=-1), K.floatx())),
                  K.floatx())

Master uses K.squeeze which is correct for n-dimensional shapes

Though the same bug remains in sparse_top_k_categorical_accuracy
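The difference between the two operations can be seen with NumPy stand-ins: reshape(-1) for K.flatten and np.squeeze(..., axis=-1) for K.squeeze(..., -1). This is only an illustration of the shape behaviour under that assumption, not the Keras code itself; the helper name is made up.

```python
import numpy as np


def flatten_vs_squeeze(label_shape):
    """Return (flattened shape, squeezed shape) for labels of the given shape."""
    y_true = np.zeros(label_shape)
    flattened = y_true.reshape(-1)           # collapses every axis, including batch
    squeezed = np.squeeze(y_true, axis=-1)   # only drops the trailing axis of size 1
    return flattened.shape, squeezed.shape


print(flatten_vs_squeeze((32, 1)))         # ((32,), (32,))
print(flatten_vs_squeeze((32, 10, 3, 1)))  # ((960,), (32, 10, 3))
```

For plain (num_samples, 1) labels both give the same result, so the problem only shows up with n-dimensional targets such as the (32, 10, 3, 1) labels in the minimal example, where flattening yields the 960-element vector from the traceback.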

The issue happens with tf1.9 and keras2.2.4

I have the same problem on Ubuntu 18.04. I have two Anaconda environments: the one with Python 3.5, TensorFlow 1.9, Keras 2.2.2 and CUDA 9.0 works fine, while the one with Python 3.6, Keras 2.2.4 and CUDA 9.2 does not. Simply switching between the environments makes the script work or fail. I can provide the script if so desired.

As @golbstein remarked, batch_size=1 makes it work.

I noticed by coincidence that the error is not always generated; it depends on the model being trained. Below is a script in which two models are defined. One (simple_model) trains, the other (char_model) gives the invalid argument error. You can choose the model in line 16 (the model_type variable): with model_type='rnn' the model runs fine, with model_type='char' it does not. The data used is the Kaggle dataset ner_dataset.csv.

#!/usr/bin/env python3
# -*- coding: utf-8 -*-

import random
import pandas as pd
import numpy as np
from sklearn.metrics import classification_report
from keras.preprocessing.sequence import pad_sequences
from keras.utils import to_categorical
from sklearn.model_selection import train_test_split
from keras.models import Model, Input
from keras.layers import CuDNNGRU, Embedding, Dense, TimeDistributed, Dropout
from keras.layers import Bidirectional, concatenate, SpatialDropout1D, GlobalMaxPooling1D

# model_type can be 'rnn' or 'char'
model_type = 'char'
max_len_char = 10
test_fraction = 0.25

class SentenceGetter(object):
    """
    get Sentences from the Kaggle dataset: ner_dataset.csv
    """
    def __init__(self, data):
        self.n_sent = 1
        self.data = data
        self.empty = False
        agg_func = lambda s: [(w, p, t) for w, p, t in zip(s["Word"].values.tolist(),
                                                           s["POS"].values.tolist(),
                                                           s["Tag"].values.tolist())]
        self.grouped = self.data.groupby("Sentence #").apply(agg_func)
        self.sentences = [s for s in self.grouped]

    def get_next(self):
        try:
            s = self.grouped["Sentence: {}".format(self.n_sent)]
            self.n_sent += 1
            return s
        except KeyError:
            return None

def unique_tokens(tokens, elements, idx):
    words = [word[idx] for tweet in tokens for word in tweet]
    for word in words:
        if not word in elements:
            elements.append(word)
    return elements

def create_input(token2idx, sentences, idx, padding, unknown):
    # Uses the module-level max_len computed further down
    X = [[token2idx[w[idx]] if w[idx] in token2idx else unknown for w in s]
         for s in sentences]
    X = pad_sequences(maxlen=max_len, sequences=X, padding="post", value=padding)
    return X

def create_char_seqs(sentences, char2idx, max_len, max_len_char):
    """ Creates a sequence of characters for each word
    Args:
    Returns:
    """
    X_char = []
    for sentence in sentences:
        sent_seq = []
        for i in range(max_len):
            word_seq = []
            for j in range(max_len_char):
                try:
                    word_seq.append(char2idx.get(sentence[i][0][j]))
                except IndexError:
                    # Past the end of the sentence or of the word: pad
                    word_seq.append(char2idx.get("<PAD>"))
            # for
            sent_seq.append(word_seq)
        # for
        X_char.append(np.array(sent_seq))
    # for
    return np.array(X_char)

def simple_model(n_words, max_len):
    """ Builds a model with an embedded layer and an RNN layer
    Args:
        n_words (int): number of unique words
        max_len (int): maximum length of sentences
    Returns:
        Compiled model
    """
    input = Input(shape=(max_len,))
    model = Embedding(input_dim=n_words, output_dim=50, input_length=max_len)(input)
    model = Dropout(0.1)(model)
    model = Bidirectional(CuDNNGRU(units=200, return_sequences=True))(model)
    #model = Bidirectional(CuDNNGRU(units=100, return_sequences=True))(model)
    out = TimeDistributed(Dense(n_tags, activation="softmax"))(model) # softmax output layer
    model = Model(input, out)
    model.compile(optimizer="adam", loss="categorical_crossentropy",
                  metrics=["accuracy"])
    model.summary()
    return model, "acc", "val_acc"

def char_model(n_words, max_len, n_chars, max_len_char):
    """ Builds a model for sequencing words and characters
    Args:
        n_words (int): number of unique words
        max_len (int): maximum length of sentences
        n_chars (int): number of characters
        max_len_char (int): maximum number of characters in a characterized word
    Returns:
        Compiled model
    """
    # Input and embedding for words
    word_in = Input(shape=(max_len,), name='word_input')
    emb_word = Embedding(input_dim=n_words, output_dim=50, input_length=max_len,
                         name='word_embedding')(word_in)
    # Input and embedding for chars
    char_in = Input(shape=(max_len, max_len_char,), name='char_input')
    emb_char = TimeDistributed(Embedding(input_dim=n_chars + 2, output_dim=10,
                                         input_length=max_len_char),#, mask_zero=True),
                               name='char_embedding')(char_in)
    # Character RNN
    char_enc = TimeDistributed(CuDNNGRU(units=20, return_sequences=False,
                                        name='char_GRU'))(emb_char)
    # Concatenate char and word sub systems
    x = concatenate([emb_word, char_enc])
    model = SpatialDropout1D(0.3)(x)
    model = Bidirectional(CuDNNGRU(units=100, return_sequences=True))(model)
    out = TimeDistributed(Dense(n_tags, activation="softmax"))(model) # softmax output layer
    model = Model([word_in, char_in], out)
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    model.summary()
    return model, "acc", "val_acc"

seed = 42
np.random.seed(seed)
random.seed(seed)

#test_model(model, word2idx, idx2tag, test_sentences)
#sys.exit()

# Read all data sets
data = pd.read_csv("data/ner_dataset.csv", encoding="latin1")
data = data.fillna(method="ffill")
getter = SentenceGetter(data)
sentences = getter.sentences
sentences = sentences[:5000]
print('Total sentences:', len(sentences))

# Compute max length of all sentences
max_len = 0
for sentence in sentences:
    if len(sentence) > max_len:
        max_len = len(sentence)
print('max_len:', max_len)
if max_len > 50:
    max_len = 50
    print('max_len set to', max_len)

# Create dictionary and inverse dictionary of words and tags
words = unique_tokens(sentences, ['<PAD>', '<UNK>'], 0)
n_words = len(words)
word2idx = {w: i for i, w in enumerate(words)}
idx2word = {v: k for k, v in word2idx.items()}
print('Words', n_words)

tags = unique_tokens(sentences, ['O'], 2)
n_tags = len(tags)
tag2idx = {t: i for i, t in enumerate(tags)}
idx2tag = {v: k for k, v in tag2idx.items()}
print(n_tags, 'tags:', tag2idx)

# Convert words and tags numpy arrays
X_word = create_input(word2idx, sentences, 0, word2idx['<PAD>'], word2idx['<UNK>'])
y_word = create_input(tag2idx, sentences, 2, tag2idx["O"], tag2idx["O"])

# Create train and validation set
X_word_train, X_word_val, y_word_train, y_word_val = train_test_split(X_word,
    y_word, test_size=test_fraction, random_state=seed)

# Create and fit model
epochs = 1
print('Type of model is:', model_type)
if model_type == 'rnn':
    model, acc_name, val_acc_name = simple_model(n_words, max_len)
    y_word_train = np.array([to_categorical(i, num_classes=n_tags) for i in y_word_train])
    y_word_val = np.array([to_categorical(i, num_classes=n_tags) for i in y_word_val])
    pred_list = [X_word_val]
    history = model.fit(X_word_train, y_word_train, batch_size=32, epochs=epochs,
                        validation_data=(pred_list, y_word_val), verbose=1)
    y_true = np.argmax(y_word_val, axis=2)
elif model_type == 'char':
    # Additionally compute character recognition sub system
    chars = set([w_i for w in words for w_i in w])
    n_chars = len(chars)
    char2idx = {c: i + 2 for i, c in enumerate(chars)}
    char2idx["<UNK>"] = 1
    char2idx["<PAD>"] = 0
    idx2char = {v: k for k, v in char2idx.items()}
    print(n_chars, 'chars:', char2idx)

    # Compute the character training and validation sets
    X_char = create_char_seqs(sentences, char2idx, max_len, max_len_char)

    # Create train and validation set
    X_char_train, X_char_val = train_test_split(X_char, test_size=test_fraction,
                                                random_state=seed)
    model, acc_name, val_acc_name = char_model(n_words, max_len, n_chars, max_len_char)
    X_char_train = np.array(X_char_train).reshape((len(X_char_train), max_len, max_len_char))
    X_char_val = np.array(X_char_val).reshape((len(X_char_val), max_len, max_len_char))
    y_word_train = np.array(y_word_train).reshape(len(y_word_train), max_len, 1)
    y_word_val = np.array(y_word_val).reshape(len(y_word_val), max_len, 1)
    #X_word_train = X_word_train.reshape(X_word_train.shape[0], X_word_train.shape[1], 1)
    #X_word_val = X_word_val.reshape(X_word_val.shape[0], X_word_val.shape[1], 1)
    pred_list = [X_word_val, X_char_val]
    history = model.fit([X_word_train, X_char_train], y_word_train,
                        validation_data=(pred_list, y_word_val),
                        batch_size=64, epochs=epochs,
                        verbose=1)
    y_true = y_word_val.reshape(y_word_val.shape[0:2])
else:
    raise ValueError('Unknown model type: ' + model_type)

I updated to tensorflow-gpu 1.12.0 and now all works ok

I have a similar issue using tf.keras. My keras version is 2.2.4, my tensorflow version is 1.12.0, and I use Python 3.6.

I train a simple RNN with one embedding layer, one GRU layer with return_sequences=True, and one Dense layer (wrapping the Dense layer in TimeDistributed or not makes no difference; I get the same error). My loss is sparse_categorical_crossentropy and my metric is sparse_categorical_accuracy.

The length of my sequences is 4, and there are 60 categories, so the shape of my input is (batch_size, 4, 60). The shape of my output is also (batch_size, 4, 60) since return_sequences=True.

The error I get is

InvalidArgumentError: Incompatible shapes: [batch_size] vs. [batch_size,4] [Op:Equal]

Note that, like in the posts above, the error does not occur when batch_size=1 or when I don't use any metric. In both cases the neural network trains without error.

Note also that if I set return_sequences=False, that is, if I only try to predict one category instead of a sequence and my output has shape (batch_size,60), then the network also trains without error.

EDIT: I managed to make my training work by replacing

model.compile(
    optimizer = tf.train.AdamOptimizer(),
    loss = 'sparse_categorical_crossentropy',
    metrics=['sparse_categorical_accuracy'])

with

model.compile(
    optimizer = tf.train.AdamOptimizer(),
    loss = 'sparse_categorical_crossentropy',
    metrics=[new_sparse_categorical_accuracy])

where I defined new_sparse_categorical_accuracy by copying the code from https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/keras/metrics.py, that is:

from tensorflow.python.ops import math_ops
from tensorflow.python.framework import ops
from tensorflow.python.keras import backend as K
from tensorflow.python.ops import array_ops

def new_sparse_categorical_accuracy(y_true, y_pred):
    y_pred_rank = ops.convert_to_tensor(y_pred).get_shape().ndims
    y_true_rank = ops.convert_to_tensor(y_true).get_shape().ndims
    # If the shape of y_true is (num_samples, 1), squeeze to (num_samples,)
    if (y_true_rank is not None) and (y_pred_rank is not None) and (len(K.int_shape(y_true)) == len(K.int_shape(y_pred))):
        y_true = array_ops.squeeze(y_true, [-1])
    y_pred = math_ops.argmax(y_pred, axis=-1)
    # If the predicted output and actual output types don't match, force cast them
    # to match.
    if K.dtype(y_pred) != K.dtype(y_true):
        y_pred = math_ops.cast(y_pred, K.dtype(y_true))
    return math_ops.cast(math_ops.equal(y_true, y_pred), K.floatx())
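The rank comparison in that function is what lets it accept labels both with and without a trailing axis. A NumPy mirror of just that step is sketched below, using the (batch_size, 4, 60) output shape from this post with an arbitrary batch of 8; align_labels is a made-up name and the code is an illustration of the logic, not the TensorFlow implementation.

```python
import numpy as np


def align_labels(y_true, y_prob):
    """Squeeze the last label axis only when labels and predictions have equal rank."""
    if y_true.ndim == y_prob.ndim:
        y_true = np.squeeze(y_true, axis=-1)
    return y_true


y_prob = np.zeros((8, 4, 60))                          # (batch_size, sequence length, categories)
print(align_labels(np.zeros((8, 4, 1)), y_prob).shape)  # (8, 4)
print(align_labels(np.zeros((8, 4)), y_prob).shape)     # (8, 4)

# Without the rank check, squeezing labels that have no trailing axis of size 1 fails
try:
    np.squeeze(np.zeros((8, 4)), axis=-1)
except ValueError as err:
    print("unconditional squeeze fails:", err)
```

In both accepted layouts the labels end up with the same shape as the argmax of the predictions, so the element-wise comparison never has to rely on broadcasting.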


bro that was great .....thanks for sharing

you r pure dope
