Keras: model.save and load giving different result

I am trying to save a simple LSTM model for text classification. The input of the model is padded vectorized sentences.

model = Sequential()

model.add(LSTM(40, input_shape=(16, 32)))

model.add(Dense(20))

model.add(Dense(8, activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

For saving I'm using the following snippet:

for i in range(50):
    from sklearn.cross_validation import train_test_split
    data_train, data_test, labels_train, labels_test = train_test_split(feature_set, dummy_y, test_size=0.1, random_state=i)
    accuracy = 0.0
    try:
        with open('/app/accuracy', 'r') as file:
            for line in file:
                accuracy = float(line)
    except Exception:
        print("error")
    model.fit(data_train, labels_train, nb_epoch=50)
    loss, acc = model.evaluate(feature_set, dummy_y)
    if acc > accuracy:
        model.save_weights("model.h5", overwrite=True)
        model.save('my_model.h5', overwrite=True)
        print("Saved model to disk.\nAccuracy:")
        print(acc)
        with open('/app/accuracy', 'w') as file:
            file.write('%f' % acc)

But whenever I'm trying to load the same model

from keras.models import load_model

model = load_model('my_model.h5')

I'm getting random accuracy, like an untrained model. I get the same result even when I load the weights separately.

If I set the weights

lstmweights=model.get_weights()

model2.set_weights(lstmweights)

as above, it works as long as model and model2 run in the same session (the same notebook session). If I serialize lstmweights and load them from a different place, I again get results like an untrained model. It seems that saving only the weights is not enough. So why is model.save not working? Is this a known issue?

All 265 comments

I'm having a similar problem, but it has to do with setting stateful=True. If I do that, the prediction from the original model is different from the prediction of the saved and reloaded model.

`# DEPENDENCIES
import numpy as np
from keras.models import Sequential
from keras.layers.core import Dense, Activation
from keras.layers.recurrent import LSTM

# TRAINING AND VALIDATION FILES
xTrain = np.random.rand(200,10)
yTrain = np.random.rand(200,1)
xVal = np.random.rand(100,10)
yVal = np.random.rand(100,1)

# ADD 3RD DIMENSION TO DATA
xTrain = xTrain.reshape(len(xTrain), 1, xTrain.shape[1])
xVal = xVal.reshape(len(xVal), 1, xVal.shape[1])

# CREATE MODEL
model = Sequential()
model.add(LSTM(200, batch_input_shape=(10, 1, xTrain.shape[2])
    #, stateful=True # With this line, the reloaded model generates different predictions than the original model
))
model.add(Dense(yTrain.shape[1]))
model.add(Activation("linear"))
model.compile(loss="mean_squared_error", optimizer="rmsprop")
model.fit(xTrain, yTrain,
          batch_size=10, nb_epoch=2,
          verbose=0,
          shuffle=False,  # was the string 'False', which is truthy and so enabled shuffling
          validation_data=(xVal, yVal))

# PREDICT RESULTS ON VALIDATION DATA
yFit = model.predict(xVal, batch_size=10, verbose=1)
print()
print(yFit)

# SAVE MODEL
model.save('my_model.h5')
del model

# RELOAD MODEL
from keras.models import load_model
model = load_model('my_model.h5')
yFit = model.predict(xVal, batch_size=10, verbose=1)
print()
print(yFit)

# DO IT AGAIN
del model
model = load_model('my_model.h5')
yFit = model.predict(xVal, batch_size=10, verbose=1)
print()
print(yFit)`

Same problem. Is there something wrong with the "save model" function?

I am having the exact same issue. Does anyone know exactly what the problem is?

Same issue using json format for saving a very simple model. I get different results on my test data before and after save/loading the model.

classifier = train(model, trainSet, devSet)

# TEST BEFORE SAVING
test(model, classifier, testSet)

# save model to json
model_json = classifier.to_json()
with open('../data/local/SICK-Classifier', "w") as json_file:
    json_file.write(model_json)

# load model from json
json_file = open('../data/local/SICK-Classifier', 'r')
loaded_model_json = json_file.read()
json_file.close()
classifier = model_from_json(loaded_model_json)

# TEST AFTER SAVING
test(model, classifier, testSet)

I crosschecked all these functions - they seem to be working properly.

model.save(), load_model(), model.save_weights() and model.load_weights()

I ran into a similar issue. After saving my model, the weights were changed and my predictions became random.

For my own case, it came down to how I was mixing vanilla Tensorflow with Keras. It turns out that Keras implicitly runs tf.global_variables_initializer if you don't tell it that you will do so manually. This means that in trying to save my model, it was first re-initializing all of the weights.

The flag to prevent Keras from doing this is _MANUAL_VAR_INIT in the tensorflow backend. You can turn it on like this, before training your model:

from keras.backend import manual_variable_initialization

manual_variable_initialization(True)

Hope this helps!

Hi @kswersky,

Thanks for your answer.

I am using Keras 2.0 with a Tensorflow 1.0 setup. I am building the model in Keras and using a Tensorflow pipeline for training and testing. When you load the Keras model, it might reinitialize the weights. I avoided tf.global_variables_initializer() and used load_weights('saved_model.h5'). The model then got the saved weights and I was able to reproduce the correct results. I did not have to do the _manual_var_init step. (It's a very good answer for Keras alone.)

Maybe I confused people who are just using Keras.

Use model.model.save() instead of model.save()

I'm stuck with the same problem. Is the only solution for now to move to Python 2.7?

@pras135 , if I do as you suggest I cannot perform model.predict_classes(x), AttributeError: 'Model' object has no attribute 'predict_classes'

Same issue here. When I load a saved model my predictions are random.

@lotempeledGong

model_1 = model.model.save('abcd.h5') # save the model as abcd.h5

from keras.models import load_model

model_1 = load_model('abcd.h5') # load the saved model

y_score = model_1.predict_classes(data_to_predict) # supply data_to_predict

Hope it helps.

@pras135 What you suggested is in the same session, and it does work. Unfortunately I need this to work in separate sessions, and if you do the following:

(in first python session)

model_1 = model.model.save('abcd.h5') # save the model as abcd.h5

(close python session)

(open second python session)

model_1 = load_model('abcd.h5') # load the saved model

y_score = model_1.predict_classes(data_to_predict) # supply data_to_predict

I receive the following error: AttributeError: 'Model' object has no attribute 'predict_classes'

@lotempeledGong That should not happen. Check if load_model means same as keras.models.load_model in your context. This should work just fine.

@deeiip thanks, but this still doesn't work for me. However this is not my main problem here. What I want eventually is to train a model, save it, close the python session and in a new python session load the trained model and obtain the same accuracy. Currently when I try to do this, the loaded model gives random predictions and it is as though it wasn't trained.

By the way, in case this is a versions issue- I'm running with Keras 2.0.4 with Tensorflow 1.1.0 backend on Python 3.5.

@lotempeledGong I'm facing exactly the same issue you refer here. However, I'm using Tensorflow 1.1 and TFlearn 0.3 on Windows10 with Python 3.5.2.

@deeiip have you solved this problem? If so, how?

@kswersky I added `from keras.backend import manual_variable_initialization` and `manual_variable_initialization(True)`, but then this error came up:

tensorflow.python.framework.errors_impl.FailedPreconditionError: Attempting to use uninitialized value Variable

[[Node: Variable/_24 = _Send[T=DT_FLOAT, client_terminated=false, recv_device="/job:localhost/replica:0/task:0/cpu:0", send_device="/job:localhost/replica:0/task:0/gpu:0", send_device_incarnation=1, tensor_name="edge_8_Variable", _device="/job:localhost/replica:0/task:0/gpu:0"](Variable)]]

[[Node: Variable_1/_27 = _Recv[_start_time=0, client_terminated=false, recv_device="/job:localhost/replica:0/task:0/cpu:0", send_device="/job:localhost/replica:0/task:0/gpu:0", send_device_incarnation=1, tensor_name="edge_10_Variable_1", tensor_type=DT_FLOAT, _device="/job:localhost/replica:0/task:0/cpu:0"]()]]

@HarshaVardhanP how did you avoid tf.global_variables_initializer() before loading the model?

@chenlihuang Now, I am using tf.global_variables_initializer(). It will init net variables. And use load_weights() to load the saved weights. This seems easier for me than using load_model().

@HarshaVardhanP can you give me some ideas at the Keras level? I don't care about the TF backend. I only use Keras layers, so how can I solve this problem, given that I didn't touch TF?

EDIT: I've noticed that loading my model doesn't give different results, so I guess I don't need the workaround.

Unfortunately, I've run into the same issue that many others on here seem to have encountered -- I've trained what seems to be an extremely powerful text classifier (based on cross-validation, at least, with a healthy-sized dataset), but upon loading a saved model -- either using load_model or model.load_weights -- my model's performance is now completely worthless when tested in a new session. There's absolutely no way this is the same model that I trained. I've tried every suggestion offered here, but to no avail. Super disheartening to see what appeared to be such good results and have no ability to actually use the classifier in other sessions/settings. I hope this gets addressed soon. (P.S. I'm running with Keras 2.0.4 with Tensorflow 1.1.0 backend on Python 3.5.)

@gokceneraslan @fchollet Many of us are facing this issue. Could you please take a look ?

Thanks!

I do not see any issue with model serialization using the save_model() and load_model() functions from the latest Tensorflow packaged Keras.

For example:

import numpy as np
import tensorflow.contrib.keras as keras

m = train_keras_cnn_model() # Fill in the gaps with your model
model_fn = "test-keras-model-serialization.hdf5"
keras.models.save_model(m, model_fn)
m_load = keras.models.load_model(model_fn)
m_load_weights = m_load.get_weights()
m_weights = m.get_weights()
assert len(m_load_weights) == len(m_weights)
for i in range(len(m_weights)):
    assert np.array_equal(m_load_weights[i], m_weights[i])
print("Model weight serialization test passed")

Hi @kevinjos

The issue is not with using the saved model in the same session. If I save a model from session 1, load it in session 2, and use exactly the same data to perform inference, the results are different.

To be more specific, the inference results from the session in which the model was built is much better compared to results from a different session using the same model.

How is tensorflow packaged keras different from vanilla keras itself ?

@Chandrahas1991 When I run a similar code as above with a fresh tf session I get the same results. Have you tried the suggestion offered by @kswersky to set a flag to prevent automatic variable initialization? Could the issue with serialization apply only to LSTM layers? Or more specifically stateful LSTM layers? Have you tried using only the Keras code packaged with TensorFlow?

import numpy as np
import tensorflow.contrib.keras as keras
from tensorflow.contrib.keras import backend as K

m = train_keras_cnn_model() # Fill in the gaps with your model
model_fn = "test-keras-model-serialization.hdf5"
m_weights = m.get_weights()
keras.models.save_model(m, model_fn)
K.clear_session()
m_load = keras.models.load_model(model_fn)
m_load_weights = m_load.get_weights()
assert len(m_load_weights) == len(m_weights)
for i in range(len(m_weights)):
    assert np.array_equal(m_load_weights[i], m_weights[i])
print("Model weight serialization test passed")

@kevinjos I got this error while working with CNN's and @kswersky's solution did not work for me.

I haven't tried with keras packed in tensorflow. I'll check it out. Thanks for the suggestion!

There are already tests in Keras to check if model saving/loading works. Unless you write a short and fully reproducible code (along with the data) and fully describe your working environment (keras/python version, backend), it's difficult to pinpoint the cause of all issues mentioned here.

I've just sent another PR to add more tests about problems described here. @deeiip can you check #7024 and see if it's similar to the code that you use to reproduce this? With my setup, (keras master branch, python 3.6, TF backend) I cannot reproduce any model save/load issues with either mlps or convlstms, even if I restart the session in between.

Some people mentioned reproducibility problems about stateful RNNs. As far as I know, RNN states are not saved via save_model(). Therefore, there must be differences when you compare predictions before and after saving the model, since states are reset. But keep in mind that this report is not about stateful RNNs.

Check whether the input data is consistent for the same data across multiple executions.
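
One way to make that check concrete is to fingerprint the input array in each session and compare the printed digests. This is only a sketch: the helper name array_digest is hypothetical (it is not part of Keras or NumPy), and it assumes the inputs are available as NumPy arrays.

```python
import hashlib

import numpy as np


def array_digest(array):
    """Return a SHA-256 hex digest covering an array's dtype, shape and raw bytes."""
    array = np.ascontiguousarray(array)
    h = hashlib.sha256()
    # Include dtype and shape so that a silent cast or reshape changes the digest.
    h.update(str(array.dtype).encode("utf-8"))
    h.update(str(array.shape).encode("utf-8"))
    h.update(array.tobytes())
    return h.hexdigest()


if __name__ == "__main__":
    x = np.arange(6).reshape(2, 3)
    print(array_digest(x))
```

If the digest printed for the evaluation data in the training session differs from the one printed in the new session, the difference in accuracy probably comes from preprocessing rather than from saving and loading.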

I am having this same problem, but only when I use an embedding layer and the Keras API. Before closing the session, the accuracy after running model.evaluate is >90%; after opening a new session and running model.evaluate on the exact same data, I get ~80% accuracy. I have tried saving the model using save_model(), and also saving the architecture to JSON, saving the weights, and then loading both. Both methods give the same results.

I use the same data with a sequential model without an embedding layer and API model without an embedding layer and both work as expected.

embedding_layer = Embedding(len(word_index) +1, 28, input_length = 28)

sequence_input = Input(shape=(max_seq_len,), dtype='int32')

embedded_sequences = embedding_layer(sequence_input)

x = Conv1D(128, 3, activation='relu')(embedded_sequences)

x = MaxPooling1D(3)(x)

x = Conv1D(128, 2, activation='relu')(x)

x = MaxPooling1D(2)(x)

x = Conv1D(128, 2, activation='relu')(x)

x = MaxPooling1D(2)(x) # global max pooling

x = Flatten()(x)

x = Dense(12, activation='relu')(x)

x = Dropout(0.2)(x)

preds = Dense(46, activation='softmax')(x)

model = Model(sequence_input, preds)

model.compile(loss='categorical_crossentropy',
              optimizer='adam',
              metrics=['acc'])

model.fit(x_train, y_train, validation_data=(x_val, y_val), epochs=1, batch_size=300)

I am using anaconda v3.5 and tf r1.1

Any news on that? Similar problem here.

I am training a U-Net architecture CNN. Training gives good results, and the evaluate function works fine after loading the model. But when I generate new predictions, they are completely random and uncorrelated with the ground truth. Loading the model or the weights separately does not help.

Keras 2.0.4 with Tensorflow 1.1.0 backend on Python 2.7.

It's difficult to help without a fully reproducible code.

I ran into the same issue (and am mixing Keras and Tensorflow), and I am already using manual_variable_initialization(True). I had to add the extra load_weights(..) call to get it to work.

def __init__(self):
    self.session = tf.Session()
    K.set_session(self.session)
    K.manual_variable_initialization(True)
    self.model = load_model(FILENAME)
    self.model._make_predict_function()
    self.session.run(tf.global_variables_initializer())
    self.model.load_weights(FILENAME) #### Added this line
    self.default_graph = tf.get_default_graph()
    self.default_graph.finalize()

For anyone experiencing this, can you check that the weights of the model are exactly the same right after training and after being reloaded? To machine precision.
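
A minimal sketch of such a check, assuming the two weight lists come from model.get_weights() on the trained model and on the reloaded one. The function name max_weight_difference is hypothetical and not part of Keras.

```python
import numpy as np


def max_weight_difference(weights_a, weights_b):
    """Return the largest absolute element-wise difference between two weight lists."""
    if len(weights_a) != len(weights_b):
        raise ValueError("different number of weight arrays")
    worst = 0.0
    for a, b in zip(weights_a, weights_b):
        a, b = np.asarray(a), np.asarray(b)
        if a.shape != b.shape:
            raise ValueError("shape mismatch: %s vs %s" % (a.shape, b.shape))
        if a.size:
            worst = max(worst, float(np.max(np.abs(a - b))))
    return worst


if __name__ == "__main__":
    # Stand-ins for model.get_weights() before saving and after reloading.
    before = [np.ones((2, 3)), np.zeros(3)]
    after = [np.ones((2, 3)), np.zeros(3)]
    print(max_weight_difference(before, after))
```

A result of exactly 0.0 means the weights survived the round trip bit for bit, in which case the cause is more likely in the input data or in layer state than in the saved file. To compare across two Python sessions, the weight list from the first session would have to be stored separately, for example with np.savez.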

I have the same issue. In my case if I use Lambda layer then the prediction results after loading are not correct. If I remove the Lambda layer, then the results after loading are the same as training.

EDIT: I checked my code again and found that the issue was caused by an undefined parameter in my lambda function. After I fixed it, the results are consistent.

Just switched to a GPU setup, and this issue came up. I haven't tried switching back to CPU only, but will let you know if it seems to be the issue. Additionally #4044 has been happening a lot, although I'm not sure if it's related. Tensorflow is only used to set the device (GPU) for each model.

Python 3.4.3, Keras 2.0.6, TensorFlow 1.2.0, TensorFlow-GPU 1.1.0

UPDATE: It seems to occur when training two models at the same time (i.e. train model1, test model1 with expected accuracy, train model2, test model1 with random predictions). I think this might be an issue with the load_model function, and I'm going to try using the instance method (load the model from json and then load its weights) to see if the same error occurs.

UPDATE 2: Instance methods didn't solve the issue.

UPDATE 3: I feel kinda silly. My code was the issue. 👎 Sorry for the spam.

I'm training an LSTM RNN for description generation using Keras (Tensorflow backend) with the MSCOCO dataset. During training the model reached 92% accuracy with a loss of 0.79. While the model was training, I also tested description generation at each epoch, and the model produced very good, meaningful descriptions when given a random word.

However after training I loaded the model using model.load_weights(WEIGHTS) method in Keras and tried to create a description by giving a random word as I've done before. But now model is not providing a meaningful description and it just outputs random words which has no meaning at all.

I checked weight values and they are same too.

My model parameters are:

10 LSTM layers

Learning rate: 0.04

Activation: Softmax

Loss Function: Categorical Cross entropy

Optimizer: rmsprop

My Tensorflow version: 1.2.1

Python: 3.5

Keras Version: 2.0.6

Does anyone have a solution for this ?

What I've tried here is:

md = config_a_model() # a pretty complicated convolutional NN.

md.save('model.h5')

md1 = config_a_model() # same config as above

md1.load_weights('model.h5')

md1.save('another_model.h5')

model.h5 is 176M and another_model.h5 is only 29M.

Python 2.7.6

tensorflow-gpu (1.2.1)

Keras 2.0.6

Did you try model.save_weights()? I am using model.save_weights(), model.load_weights() and model_from_json(), which are working fine.

@HarshaVardhanP Will try.. What's the difference between save() and save_weights() ?

Also I feel it's a recent issue for me since I upgraded Keras from 2.0.1 => 2.0.6 last weekend.

I didn't have such issue before, as previously the prediction results are much better. Now the prediction results are horrible. That's why I tested above model save->load->save again steps.

@rrki - I had this issue while loading Keras models into Tensorflow. model.save() didn't work for me for some reason. It's supposed to save the architecture and the weights. So I saved and loaded the architecture and the weights separately, which has been working fine for some time.

@HarshaVardhanP I've tried save_weights() and also keras.models.save_model()

They are basically all the same. I can't explain why the model file sizes differ so much. I tried printing every layer's summary(), and the network's architecture seems to be there. But predict() always gives random results...

@rrki we would need a specific example to find what is going wrong there, including a minimal model that reproduces the error, and some data to train it.

Otherwise, can you check if the weights of the model are the same, to machine precision, before and after reloading?

@Dapid

Thank you for the reply. Correct me if I'm misusing something...

import numpy as np

from keras.callbacks import TensorBoard

from keras.layers import Conv1D, MaxPooling1D

from keras.layers.advanced_activations import PReLU

from keras.layers.core import Dense, Activation, Dropout, Flatten

from keras.layers.normalization import BatchNormalization

from keras.layers.wrappers import TimeDistributed

from keras.legacy.layers import Merge

from keras.models import save_model, load_model, Sequential

def config_model():
    pRelu = PReLU()
    md1 = Sequential(name='md1')
    md2 = Sequential(name='md2')
    md1.add(BatchNormalization(axis=1, input_shape=(10, 520)))
    md2.add(BatchNormalization(axis=1, input_shape=(10, 520)))
    md1.add(Conv1D(512, 1, padding='causal', activation='relu', name='conv1_f1_s1'))
    md2.add(Conv1D(512, 3, padding='causal', activation='relu', name='conv1_f3_s1'))
    md1.add(TimeDistributed(Dense(512, activation='relu')))
    md2.add(TimeDistributed(Dense(512, activation='relu')))
    merge_1 = Sequential(name='merge_1')
    merge_1.add(Merge([md1, md2], mode='concat'))
    merge_1.add(MaxPooling1D(name='mxp_merge'))
    merge_1.add(Dropout(0.2))
    merge_1.add(Dense(512))
    merge_1.add(pRelu)
    merge_1.add(Flatten())
    md3 = Sequential(name='md3')
    md3.add(BatchNormalization(axis=1, input_shape=(10, 520)))
    md3.add(Conv1D(512, 1, padding='causal', activation='relu', name='conv1_f1_s1_2'))
    md3.add(MaxPooling1D())
    md3.add(Dense(256))
    md3.add(Flatten())
    model = Sequential(name='final_model')
    model.add(Merge([merge_1, md3], mode='concat'))
    model.add(Dense(2))
    model.add(Activation('softmax', name='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam',
                  metrics=['accuracy'])
    return model

if __name__ == '__main__':
    X = np.random.rand(2000, 10, 520)
    Y = np.random.rand(2000, 2)
    model = config_model()
    tbCallBack = TensorBoard(
        log_dir='tb_logs', histogram_freq=4, write_graph=True,
        write_grads=True, write_images=True)
    model.fit([X]*3, Y, epochs=10, batch_size=100, callbacks=[tbCallBack])
    model.save('model.h5')
    model2 = config_model()
    model2.load_weights('model.h5')
    model2.save('model2.h5')

Result:

-rw-r--r-- 1 root root 3762696 Aug 2 11:57 model2.h5

-rw-r--r-- 1 root root 23983824 Aug 2 11:57 model.h5

@Dapid I tried save_weights() and it looks correct from the model file sizes. (but why?)

I guess the problem I'm facing is, I trained a huge model which took one week, but in previous code I did model.save(). Now what should be the right way to load this model from disk?

@rrki your example doesn't work for me:

Using TensorFlow backend.

file.py:22: UserWarning: The `Merge` layer is deprecated and will be removed after 08/2017. Use instead layers from `keras.layers.merge`, e.g. `add`, `concatenate`, etc.

merge_1.add(Merge([md1, md2], mode='concat'))

Traceback (most recent call last):

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/tensorflow/python/framework/common_shapes.py", line 671, in _call_cpp_shape_fn_impl

input_tensors_as_shapes, status)

File "/usr/lib64/python3.5/contextlib.py", line 66, in __exit__

next(self.gen)

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/tensorflow/python/framework/errors_impl.py", line 466, in raise_exception_on_not_ok_status

pywrap_tensorflow.TF_GetCode(status))

tensorflow.python.framework.errors_impl.InvalidArgumentError: Dimensions must be equal, but are 5 and 512 for 'dense_3/add' (op: 'Add') with input shapes: [?,5,512], [1,512,1].

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "file.py", line 45, in <module>

model = config_model()

File "file.py", line 25, in config_model

merge_1.add(Dense(512))

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/keras/models.py", line 469, in add

output_tensor = layer(self.outputs[0])

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/keras/engine/topology.py", line 596, in __call__

output = self.call(inputs, **kwargs)

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/keras/layers/core.py", line 840, in call

output = K.bias_add(output, self.bias)

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/keras/backend/tensorflow_backend.py", line 3479, in bias_add

x += reshape(bias, (1, bias_shape[0], 1))

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/tensorflow/python/ops/math_ops.py", line 838, in binary_op_wrapper

return func(x, y, name=name)

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/tensorflow/python/ops/gen_math_ops.py", line 67, in add

result = _op_def_lib.apply_op("Add", x=x, y=y, name=name)

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/tensorflow/python/framework/op_def_library.py", line 767, in apply_op

op_def=op_def)

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/tensorflow/python/framework/ops.py", line 2508, in create_op

set_shapes_for_outputs(ret)

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/tensorflow/python/framework/ops.py", line 1873, in set_shapes_for_outputs

shapes = shape_func(op)

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/tensorflow/python/framework/ops.py", line 1823, in call_with_requiring

return call_cpp_shape_fn(op, require_shape_fn=True)

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/tensorflow/python/framework/common_shapes.py", line 610, in call_cpp_shape_fn

debug_python_shape_fn, require_shape_fn)

File "/home/david/.virtualenv/py35/lib/python3.5/site-packages/tensorflow/python/framework/common_shapes.py", line 676, in _call_cpp_shape_fn_impl

raise ValueError(err.message)

ValueError: Dimensions must be equal, but are 5 and 512 for 'dense_3/add' (op: 'Add') with input shapes: [?,5,512], [1,512,1].

@Dapid What's your environment?

I'm using

Python 3.4.3

tensorflow-gpu (1.2.1)

Keras (2.0.6) <- which follows instructions from ISSUE_TEMPLATE

How come I didn't get any errors except for those Merge layer warnings?

visualized via tensorboard...

Ah, now it works, it must have been the image_data_format in the json.

I'll take a look. Thanks for the example.

Anyone solved the problem?

I found a problem when I used a stateful RNN with this code:

open('my_model_architecture.json','w').write(json_string)

self.model.save_weights('my_model_weights.h5')

self.model.save('/home/tdk/models/LSTM_3layers_model_weights_2_Callback_%f.h5'%score)

temp = load_model('/home/tdk/models/LSTM_3layers_model_weights_2_Callback_%f.h5'%score)

y_load = temp.predict(self.validation_data[0])

temp2 = model_from_json(open('my_model_architecture.json').read())

temp2.load_weights('my_model_weights.h5')

y_load2 = temp2.predict(self.validation_data[0])

y_show = self.model.predict(self.validation_data[0])

y_show was different from y_load, and y_load was the same as y_load2.

When I set stateful to False, y_show and y_load are the same. However, when I open another Python session and try to get the prediction, it seems to be random, as @lotempeledGong described.

The other code is:

model = model_from_json(open('my_model_architecture.json').read())

model.load_weights('my_model_weights.h5')

a = model.predict(test_data)

np.savetxt("test_predict.txt",a)

I don't know how to solve it. Does anyone have an idea, or am I misusing something?

@rrki sorry, I forgot about this. The difference is the optimizer and gradients. model.save saves both; when you use model.load_weights, the optimizer in the file is ignored, so it is not written out when you save again.

If you have pytables installed you can use the CLI ptdump and pttree to inspect the content of the files.

In case anyone is still running into this problem, I was dealing with this for a while because I did not realize Python 3.3 and up has non-deterministic hashing between runs (https://stackoverflow.com/questions/27954892/deterministic-hashing-in-python-3). I was doing my own preprocessing through nltk, and then using the native Python hash method to convert words to integers before passing them to my Embedding layer, which ended up being the issue of non-determinism.
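
For anyone mapping words to integers this way, here is a minimal sketch of a replacement that gives the same index in every process. It swaps the built-in hash for hashlib.md5; the function name stable_word_index and the choice to reserve index 0 for padding are assumptions made for illustration, not something from the post above.

```python
import hashlib


def stable_word_index(word, vocab_size):
    """Map a word to an integer in [1, vocab_size) that is identical in every Python process."""
    # hashlib digests do not depend on PYTHONHASHSEED, unlike the built-in hash() for str.
    digest = hashlib.md5(word.encode("utf-8")).hexdigest()
    # Shift by one so that index 0 stays free (assumed here to be the padding index).
    return int(digest, 16) % (vocab_size - 1) + 1


if __name__ == "__main__":
    for w in ["keras", "model", "keras"]:
        print(w, stable_word_index(w, 10000))
```

Another option is to set the PYTHONHASHSEED environment variable to a fixed value before starting the interpreter, which makes the built-in hash repeatable between runs. Either way, different words can still collide on the same index.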

I still have this issue.

It makes no difference whether I load the weights or not.

Both weights are the same.

I just don't know what to do, since I already tried load_model and load_weights

I'm using tf and keras btw...

Facing exactly the same issue while saving and loading the model itself or the weights of it.

Both resulting in completely different results.

Python: v3.5.3

Tensorflow: v1.3.0

Keras: v2.0.8

Hi all,

I've just finished battling this problem, and more generally inconsistent results when using evaluate_generator (if I execute it multiple times in a row, the results vary). In my case the problem was the following: batch_size was not a divisor of number_of_samples! It took me ages to figure this one out:

steps = math.ceil(val_samples/batch_size)

Because batch_size was not a divisor of number_of_samples, I assume it took different samples to fill in the last step. Some small errors also occurred from using the workers variable; on a GPU it makes no sense to actually use it. Once I used a true divisor of val_samples, it worked like a charm and was reproducible, before and after loading!
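
To see quickly whether a given batch size leaves a partial final batch, the arithmetic can be sketched as below. The helper name evaluation_steps is hypothetical, and how a particular generator fills a short last batch depends on that generator, so this only flags the condition.

```python
import math


def evaluation_steps(num_samples, batch_size):
    """Return (steps, samples_in_last_batch) for one pass over num_samples."""
    steps = math.ceil(num_samples / batch_size)
    remainder = num_samples % batch_size
    # A remainder of zero means the final batch is a full one.
    last_batch = remainder if remainder else batch_size
    return steps, last_batch


if __name__ == "__main__":
    print(evaluation_steps(500, 32))  # (16, 20): the last batch holds only 20 samples
    print(evaluation_steps(500, 50))  # (10, 50): every batch is full
```

With 500 validation samples, a batch size of 50 divides evenly, while 32 leaves a last step with only 20 real samples for the generator to pad or wrap around.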

Unfortunately nothing that I tried helped.

Still face the same issue.

Even on a really simple example like this:

Train

model = Sequential()

model.add(Conv2D(16, (4, 4), padding='same', input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Flatten())

model.add(Dense(2))

model.add(Activation('softmax'))

optimizer = RMSprop()

model.compile(loss='categorical_crossentropy', optimizer=optimizer, metrics=['accuracy'])

model.fit(x_train,y_train,epochs=10)

print(model.evaluate(x_val, y_val))

model.save('test.h5', overwrite=True)

Output:

[1.0409752264022827, 0.67000000047683717]

Now loading the model back again

Test

```python

from keras.models import load_model

model = load_model('test.h5')

print(model.evaluate(x_val, y_val))

```

Output:

[0.72732420063018799, 0.26000000000000001]

_That's just for demonstration_

- Python: v3.5.3

- Tensorflow-GPU: v1.3.0

- Keras: v2.0.8

Edit:

Just to be sure, I also tried with my training dataset [aka: evaluate the model before and after saving with the same dataset that I used for training.]

Output 1: same session:

{'acc': 0.73999999999999999, 'loss': 0.57565217232704158}

Output 2: load_model / weights:

{'acc': 0.88403865378207269, 'loss': 0.59617107459932062}

@sanosay Thank you for providing a full example, but I cannot reproduce. Can you provide some data?

from keras.models import load_model, Sequential

from keras.layers import Conv2D, Activation, Flatten, Dense

from keras.optimizers import RMSprop

import numpy as np

x_train = np.random.randn(100, 10, 10, 2)

y_train = np.zeros((100, 2))

y_train[:, np.argmax(np.median(x_train, axis=(1, 2)), axis=1)] = 1.

x_val = np.random.randn(30, 10, 10, 2)

y_val = np.zeros((30, 2))

y_val[:, np.argmax(np.median(x_val, axis=(1, 2)), axis=1)] = 1.

model = Sequential()

model.add(Conv2D(16, (4, 4), padding='same', input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Flatten())

model.add(Dense(2))

model.add(Activation('softmax'))

optimizer = RMSprop()

model.compile(loss='categorical_crossentropy', optimizer=optimizer, metrics=['accuracy'])

model.fit(x_train,y_train,epochs=10)

print(model.evaluate(x_val, y_val))

model.save('test.h5', overwrite=True)

model = load_model('test.h5')

print(model.evaluate(x_val, y_val))

Gives:

[1.4226549863815308, 0.63333332538604736]

[1.4226549863815308, 0.63333332538604736]

Python: v3.5.4

Tensorflow: v1.3.0

Keras: v2.0.8

I'll test on the GPU.

@Dapid Unfortunately due to the nature of the dataset [medical] I have no license to upload them anywhere.

Dataset size is: 6002 images and 500 validation images

Images: 64x64x3

I also noticed something really strange [just now]:

Training a different model [same dataset] for different epoch numbers I get :

5 epochs

{'metrics':{'acc': 0.96867710763078974, 'loss': 0.10006937423370672}}

After

{'metrics': {'acc': 0.11596134621792736, 'loss': 0.73292944400320847}}

10 epochs:

Before:

{'metrics': {'acc': 0.98367210929690108, 'loss': 0.045077768838411421}}

After:

{'metrics': {'acc': 0.11596134621792736, 'loss': 1.1414862417133995}}

The accuracy remains the same, while loss is changing.

I tried the same model [and different models] on different machines, and having the same issue.

_Also tried the same with tensorflow and tensorflow-gpu, just in case_

What do you get with my synthetic data? Is it consistent? I can play with the sizes and number of images to see if I can get it to misbehave.

@Dapid That is indeed strange. I tried with your generated dataset [seed set to 0] and I can't reproduce it.

I also tried adjusting it to 1001 instead of 100 and 302 instead of 30 [to see if it's affected somehow by batch size etc]. No issue with the results.

I then tried with a different dataset (cifar10) and I get inconsistent results

Ok, CIFAR is good, I can see if it works funny for me.

Which model are you using on CIFAR?

from keras.models import load_model, Sequential

from keras.layers import Conv2D, Activation, Flatten, Dense

from keras.optimizers import RMSprop

import numpy as np

from keras.datasets import cifar10

(x_train, y_train), (x_val, y_val) = cifar10.load_data()

model = Sequential()

model.add(Conv2D(16, (4, 4), padding='same', input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Flatten())

model.add(Dense(10))

model.add(Activation('softmax'))

optimizer = RMSprop()

model.compile(loss='sparse_categorical_crossentropy', optimizer=optimizer, metrics=['accuracy'])

model.fit(x_train,y_train,epochs=10)

print(model.evaluate(x_val, y_val))

model.save('test.h5', overwrite=True)

model = load_model('test.h5')

print(model.evaluate(x_val, y_val))

[14.506285684204101, 0.10000000000000001]

[14.506285684204101, 0.10000000000000001]

I experience the same phenomenon. After saving and loading the weights, the loss value increases significantly.

Moreover, I don't get consistent behaviour when loading the weights. For the same model weight file, I get dramatically different results every time I load it in a different Keras session. Can it be something linked to numerical precision?

I'm using Keras 2.0.8. Unfortunately I don't know how to provide a minimal working example.

I'm also having the performance issues when saving and loading a model.

I'm facing the same issue, Any idea how to fix this?

I'm facing the same problem.

I have an LSTM layer in the model

I verified that the weights are loading properly by comparing them before and after with bcompare. But the output results are different after loading the weights.

Before introducing the LSTM layer my results were reproducible.

I'm having the same issue with the value of the loss function after reloading a model, as described by @darteaga. My model was saved via ModelCheckpoint with both save_best_only and save_weights_only set to False. It was then loaded with the keras.models.load_model function, and it gave a significantly higher loss value during the first training epochs.

I'm using python3 and keras 2.0.8. Any suggestion how to fix this would be highly appreciated.

I have the same issue. See attachment. Model was reloaded at epoch 51 as well as few times around 3-5. I do not have a LSTM layer.

My network is based on xception for transfer learning. From the following thread, the issue might be due to tensorflow with python3: https://github.com/tensorflow/tensorflow/issues/6683

@pickou: "I train and store the model in python2, and restore it using python3, it got terrible result. But when I restore the model using python2, the result is good. Train and store in python3, I got awful result."

I tried python 2.7.12 with tensorflow 1.2, 1.3 and 1.4 (master), Keras 2.1.1, Ubuntu 16.04 LTS and I still have the same issue with unexpected high loss value after reloading the model.

Chiming in. I'm running into the same problem, but it's GPU implementation only from what i've seen. I trained a model on both CPU and GPU. Saved them off. Then tested them in a cross.

Train v Test : Result

CPU v CPU : saved model and loaded model agree

CPU v GPU : saved model and loaded model disagree

GPU v CPU : saved model and loaded model disagree

GPU v GPU : saved model and loaded model disagree

I'm going to attempt the suggestions and see if they resolve the issues

I should be more specific. I train and save the model in one session, then open another session, load the saved model and test on the same data. I can't share the data unfortunately, but the model is a relatively simple sequential FFNN. No recurrence or memory neurons.

I did some more testing to see what was happening. I think this is an issue with jupyter notebook and keras/tensorflow interacting in a manner which is not predicted.

To test, I created and trained identical models in both an external file and in a jupyter notebook. I saved off their predictions, and the model itself using the save() method of Keras models. Then in another file/notebook I loaded the test data used in the training file/notebook, loaded the model from its respective training partner, loaded the saved predictions, and then used the loaded model to create an array of predictions based on the test data.

I took the difference of the respective predictions, and found that for the files created in an IDE and run via command line, the results are identical. The difference is zero (to machine tolerance) between the predictions created in the training file and the predictions created by loading the saved model.

For the Jupyter Notebook version though, this isn't true. There is significant difference between the training file predictions and the loaded model predictions.

Interesting note though, is that when you load the model trained and saved via command line and the predictions created via command line in a jupyter notebook and take the difference there, you find it is zero.

Using the model.model.save() method also results in incorrect results in a jupyter notebook.

Using keras.save_weights() generates the exact values found in model.save() in a jupyter notebook.

Using the manual_variable_initialization() method suggested by @kswersky may be a work around, but it seems like a bit of a clever hack for something that should work out of the box. I haven't gotten it to work using just Keras layers though.

@vickorian,

Thanks for your efforts.

If I understood correctly you connect the error with mixing saving/loading in

different programs (command line and jupyter for example).

If that is the case then my example is a counter example.

My layout was that I trained several models (~100), recorded some statistics

and then saved them. At a later point I wanted to record some more statistics

on them so I loaded them up. The strange thing is that not every re-loaded

model had its scores mixed up just some of them. In any case all this process

was done through an Anaconda Prompt on Windows.

No, the problem is with just the notebooks. If you save on a notebook, you'll get bad results

Hello guys,

I was facing the same issue until I set my ModelCheckpoint to save only weights (save_weights_only=True).

e.g.:

checkpoint = ModelCheckpoint(file_path, monitor='val_acc', verbose=1, save_best_only=True, mode='max', save_weights_only=True)

After this, I tested my best model using a python script through the terminal, and I got a good prediction.

I haven't run the model checkpointing at all. I have been just training model by creating files and running python from command line instead of using Notebooks.

Having the same issue. I trained a Keras LSTM model and saved the weights. When I start a new standalone process that reconstructs the model and loads the weights to check the evaluation/prediction result, it gets a different result every time it runs unless I fix the numpy random seed.

@chunsheng-chen will you try writing a stand-alone file and run it from command line to see if you get different results? Also, when do you fix the random seed? Is it immediately after importing numpy?

@vickorian,

My issue's methodology was exactly that:

- Ran file from command line.

- Fixed the numpy random seed after the imports, as the first statement in the main() function. E.g.:

import numpy as np

def main():
    np.random.seed(0)
    # Rest of code

if __name__ == '__main__':
    main()

@fmv1992

Interesting. Your models were still getting errors even when run from command line. My test setup is Ubuntu 16.04. I have the samba server set up so I can do further testing for my process on windows without much trouble.

The errors disappear when you set the random seed?

@vickorian,

They did not go away. I have set both numpy and python random seeds.

Unfortunately I'm developing at work so I feel uncomfortable to post the entire

code here. But the idea is that I have several functions of the type:

def myfunc(shape):

n_lines, n_columns = shape

model = Sequential()

model.add(Dense(

np.random.randint(1000, 2000),

input_dim=n_columns,

activation='sigmoid',

kernel_initializer='glorot_uniform',

bias_initializer='Zeros',

use_bias=True))

model.add(GaussianDropout(0.2))

model.add(Dense(1, activation='sigmoid'))

optimizer = SGD(lr=0.1, momentum=0.6, decay=1e-4, nesterov=True)

model.compile(loss='binary_crossentropy', optimizer=optimizer,

metrics=[auc2])

return model

which get trained in a for loop then saved with:

model.save(model_full_name)

After saving all of them get loaded and then evaluated. So no direct

evaluation after training. All go through the serialization/de-serialization

stage.

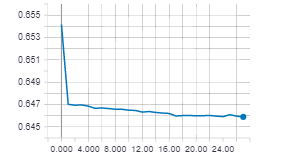

The puzzling results are in the attached image. See that many of the models

have a ROC AUC of ~ 0.5 and this is out of the trend of the overall plot. This

image brought me here.

I have tried several workarounds cited here and elsewhere but none of them

worked.

Also as you can see the loading error does not happen for 100% of the cases

(i.e. some models have reasonable ROC AUCs).

@fmv1992

It would be interesting to see the ROC AUC scores when tested before serialization. Is that possible?

I meet the same problem.

Keras: 2.0.4

Jupyter notebook

Tensorflow-gpu:Version: 1.3.0

Model:

def create_UniLSTMwithAttention(X_vocab_len, X_max_len, y_vocab_len, y_max_len, hidden_size, num_layers, return_probabilities = False):

# create and return the model for unidirectional LSTM encoder decoder

model = Sequential()

model.add(Embedding(X_vocab_len,300, input_length=X_max_len,

weights = [g_word_embedding_matrix],

trainable = False,mask_zero = True))

for _ in range(num_layers):

model.add(LSTM(hidden_size,return_sequences = True))

model.add(AttentionDecoder(hidden_size,y_vocab_len,return_probabilities = return_probabilities))

model.compile(loss='categorical_crossentropy',optimizer='adam',metrics=['accuracy'])

return model

Actions like:

- Model loss =1.2

- save_weights

- create model

- Restart kernel

- Create Model

- load_weights

- Loss = 2.4

@vickorian,

I'm trying to revive the code. Will post the results here as soon as I have

them.

However I think that the problem is pretty much "established" and I fail to see

how those results would help.

And unfortunately this issue is a real show stopper. If you are using Keras for

your homework assignment and training a 5 minute model this is not an issue.

However if you are on an enterprise setting this is a huge deal as the

models will necessarily be serialized to be transferred to a "production

environment" or something. And also the entire project reputation becomes

jeopardized by this behavior.

And please, please don't get me wrong. Keras seems to be a great project.

Indeed it is very unfortunate that this is happening. I want to be as helpful

as possible.

One thing really bothers me though: Why don't use the simple pickle module?

Best,

It seems that Keras will not overwrite an existing model file. I tried using early stopping and saving the model only after stopping. Make sure your model directory is empty before training. I had a similar issue and this solved my problem. Hope it helps.

@ryanzh13 Yes, I call save_weights after training stops and I make sure there is no other file in the directory. The training takes 8 hours and I can only pray the instance won't be corrupted.

@darcula1993 Have you tried save instead of save_weights? Another thing is to try the code on a smaller dataset and less epochs. Also, you can print out the model.summary() to see if the model parameters are the same before saving and after loading.

@ryanzh13 Since there is a custom layer, I didn't. I will give it a try when I finish the training and submit my assignment. I am already past the deadline.

I'm also having a problem saving my model or my weights. They "save" but when loaded back in the values are clearly garbage. For saving weights I tried reloading them into a replica model architecture and then doing a batch of predictions, results in junk values. I tried saving a full model with model.save() and reloading it and running a prediction gives junk values. The only time my prediction results look valid is while in the same run(session) and when not saving the whole model. If I try to save the whole model then even the same run predictions are junk. So something is happening when saving the model and/or loading the model. My model consists of regular Conv2d, max pooling, and dense layers. I'm guessing something is happening either to the weights or the training config data. I'm still new to all this so I'm not sure but the model consists of three things right: weights, training config, structure? I should also specify that I'm using the model.predict_generator() for my predictions.

Ubuntu 16.04 LTS

Tensorflow v1.4.1

Keras v2.1.2

Python 2.7 (anaconda)

@rsmith49 Thanks for your solution. I faced the same problem when training a text classifier using LSTM. I was stuck for a long time until I found that the problem was the word dict: I didn't enforce that the dict gives a word the same id in different sessions. The solution is either to dump the dict using pickle or to sort the words before assigning ids to them.
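The "dump the dict" option could look roughly like the sketch below. It uses no Keras at all; build_word_index and encode are hypothetical helpers made up for illustration, and mapping unknown words to 0 is an arbitrary choice, so adapt it to whatever your own pipeline does.

```python
import os
import pickle
import tempfile


def build_word_index(sentences):
    """Assign ids in first-seen order; id 0 is left free for padding."""
    word_index = {}
    for sentence in sentences:
        for word in sentence.split():
            if word not in word_index:
                word_index[word] = len(word_index) + 1
    return word_index


def encode(sentence, word_index, unknown_id=0):
    """Turn a sentence into ids using a previously stored mapping."""
    return [word_index.get(word, unknown_id) for word in sentence.split()]


# Training session: build the mapping once and store it next to the model file.
word_index = build_word_index(["the cat sat", "the dog barked"])
path = os.path.join(tempfile.mkdtemp(), "word_index.pkl")
with open(path, "wb") as f:
    pickle.dump(word_index, f)

# Prediction session: load the stored mapping instead of rebuilding it.
with open(path, "rb") as f:
    restored = pickle.load(f)

print(encode("the dog sat", restored))
```

The point is only that the prediction session reads the mapping from disk rather than recomputing it, so a word gets the same id it had when the model was trained.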

I also had the problem of constant output after loading a model at Keras 2.0.6.

I've upgraded to Keras 2.1.2 and used the preprocess_input and now it works.

Below is the output of the model with and without using the preprocess_input function.

Using preprocess_input at Keras 2.0.6 didn't work for me and I had to upgrade to Keras 2.1.2.

Output:

Without Preprocess:

[[ 0. 1. 0.]]

[[ 0. 1. 0.]]

With Preprocess:

[[ 5.07961657e-24 1.00000000e+00 3.09985791e-28]]

[[ 0.00508418 0.00213011 0.99278569]]

from keras.preprocessing.image import load_img, img_to_array

from keras.applications.imagenet_utils import preprocess_input

import matplotlib.pyplot as plt

from keras.models import Model, load_model

import numpy as np

def readImg(filename):

img = load_img(filename, target_size=(299, 299))

imgArray = img_to_array(img)

imgArrayReshaped = np.expand_dims(imgArray, axis=0)

imgProcessed = preprocess_input(imgArrayReshaped, mode='tf')

return img, imgProcessed

def readImgWithout(filename):

img = load_img(filename, target_size=(299, 299))

imgArray = img_to_array(img)

imgProcessed = np.expand_dims(imgArray, axis=0)

return img, imgProcessed

sidesModel = load_model('C:/Models/Xception.hdf5', compile=False)

img1, arr1 = readImgWithout('c:/Test/image1.jpeg')

img2, arr2 = readImgWithout('c:/Test/image2.jpeg')

prob1 = sidesModel.predict(arr1)

prob2 = sidesModel.predict(arr2)

print('Without Preprocess:')

print(prob1)

print(prob2)

img1, arr1 = readImg('c:/Test/image1.jpeg')

img2, arr2 = readImg('c:/Test/image2.jpeg')

prob1 = sidesModel.predict(arr1)

prob2 = sidesModel.predict(arr2)

print('With Preprocess:')

print(prob1)

print(prob2)

I was able to resolve this issue by adapting my preprocessing pipeline.

I tried saving the model, clearing the session, then loading the model, and then calling the prediction function on the training and validation sets from when I trained the model. This gave me the same accuracy.

If I imported exactly the same data, but preprocessed it again (in my case using a Tokenizer for a text classification problem) the accuracy dropped drastically. After some research, I assume this was because the Tokenizer assigns different ids to different tokens unless they are trained on exactly the same dataset. I was able to achieve my training accuracy (~.95) on newly imported data in a new session, provided I used the same Tokenizer to preprocess the text.

This may not be the underlying problem for all above cases, but I suggest checking your preprocessing pipeline carefully and observing if the issue remains.

I'm facing the same issue.

If I recreate the model (ResNext SE from https://github.com/titu1994/keras-squeeze-excite-network) from scratch and use load_weights and then use model.predict everything works as expected. If I use load_model first and then use load_weights on top of that (I have different sets of weights) the model predicts garbage.

I checked that in both cases the weights are the same (through model.get_weights).

I use Keras 2.1.3 and Tensorflow 1.4.0

This works:

K.clear_session()

model=SEResNext(**model_params)

model.compile(Adam(1e-4), 'binary_crossentropy', metrics=[tf.losses.log_loss])

model.load_weights('1694.hdf5')

pred=model.predict(train_set)

print(log_loss(y_true=train_y,y_pred=pred))

This doesn't:

K.clear_session()

model=keras.models.load_model(model_name,custom_objects={'log_loss': tf.losses.log_loss})

model.load_weights('1694.hdf5')

pred=model.predict(train_set)

print(log_loss(y_true=train_y,y_pred=pred))

After 2hrs of struggling, I found this inconsistency can be related to K.batch_set_value() not working if multiple python kernels (with tf imported) are running on the same machine, and resolved if all but one are closed.

@ludwigthebull I am pickling the tokenizer and loading it to tokenize the text I want to predict, but I'm still getting random predictions. With your pipeline, are you running the model on the same session? If not, can you let us know how are you saving and loading the model?

@dterg I am not running the model in the same session. I am loading and saving the model as an .h5 file using the standard model.save('model_name') and load_model('model_name') Keras functions. I should add that I am not pickling the Tokenizer but instead rebuilding the tokenizer each time I load data by having a large text file as the common reference for the tokenizer. This is inefficient, but I haven't gotten around to writing a function that pickles the Tokenizer for me (I don't think Keras has this option.) In your case, I would try to see if you can get the same predictions by loading the model in a new session, but instead of pickling the tokenizer, just recreating it in the new session by using a common text file for both the training and the prediction phase. It may be that your issue has to do with the way you are pickling the tokenizer. Hope that helps !

I'm struggling again with the same problem on a different model. Both models consist of a series of linear convolutional filters (same filter reused many times) followed by non-linear convolutional filters. While training, after each epoch I save weights using the checkpoint callback.

Early in the training process I can load the weights saved to disk and I get reliable and consistent results, but beyond some training saved filter coefficients lead to almost random values of the loss function (as if there had been no training at all). Moreover the loss value is different every time I load the weights in a different Keras session.

I don't know what to do. Most workarounds suggested here rely on having LSTM layers (I don't have any) or data preprocessing (I don't do it). I find the problems while saving both the full model or only the weights.

I have built a few similar models and I haven't found this problem in all models consistently. Hence I don't know how to provide a minimally working example. I would be willing to do any testing to help solving the bug.

It is currently a major issue for me because I rely on Keras for my research and after this bug I have found myself unable to continue working.

I am using Keras 2.1.1 under Python 3.5.2. I have found the problem both with the Tensorflow (1.2.0) and Theano (0.9.0) backends.

Please try loading the model first and then the weights, using:

Save code

serialize model to JSON

model_json = model.to_json()

with open("model.json", "w") as json_file:

json_file.write(model_json)

serialize weights to HDF5

model.save_weights("model.h5")

print("Saved model to disk")

Load code

load json and create model

json_file = open('model.json', 'r')

loaded_model_json = json_file.read()

json_file.close()

loaded_model = model_from_json(loaded_model_json)

load weights into new model

loaded_model.load_weights("model.h5")

print("Loaded model from disk")

reference:

https://machinelearningmastery.com/save-load-keras-deep-learning-models/

The problem is this: if you are using the Tokenizer from Keras, it assigns a unique index to each word, but if you then load the model and fit a tokenizer again, it assigns a different index to each word. The solution is to save the original word_index and load it back into the tokenizer.

I had the same problem. Turns out, the problem wasn't with my LSTM, but with my pre-trained word vectors. I preprocessed my corpus using FastText, and since it is a non-deterministic model, each run of Skip-Gram gives a different set of word vectors. Since we are dealing with LSTM, I'm pretty sure a lot of folks out there are doing some kind of word2vec. Make sure that your word vectors are the same each time. Hope this helps!

I have the same issue:

I am trying to load a saved model in order to use it for predictions after I restart the kernel. While saving the model seems to work, loading does not seem to work without issues. Here's what I have done:

I have retrained a VGG16 model using Keras:

vgg16_model = keras.applications.vgg16.VGG16()

model = Sequential()

for layer in vgg16_model.layers[:-1]:

model.add(layer)

model.layers.pop()

for layer in model.layers:

layer.trainable = False

model.add(Dense(26, activation='softmax'))

model.summary()

model.compile(Adam(lr=.0000025), loss='categorical_crossentropy', metrics=['accuracy'])

Next I have trained the model:

model.fit_generator(train_batches,validation_data=validation_batches,

epochs=85, verbose=1,callbacks=[tbCallBack,earlystopCallback])

and finally I am saving my model like so:

model.save("model.h5")

Now when I restart the kernel and load the model again using:

from keras.models import load_model

new_model = load_model("model.h5")

While the model does load, I get a warning telling me:

C:\Users...Anaconda2envs\tensorflow-gpu\lib\site-packages\kerasmodels.py:291:

UserWarning: Error in loading the saved optimizer state. As a result,

your model is starting with a freshly initialized optimizer.

warnings.warn('Error in loading the saved optimizer '

Furthermore, when I use the loaded model for predictions, I get wrong values (very different values from the one I trained) and it appears that the model has not been trained at all. However, when I check .get_weights I see that weights have been loaded.

I have also tried to save and load the model via json and weights only like so:

# serialize model to JSON

model_json = model.to_json()

with open("model.json", "w") as json_file:

json_file.write(model_json)

# serialize weights to HDF5

model.save_weights("model_weights.h5")

and loading:

#load json and create model

json_file = open('model.json', 'r')

loaded_model_json = json_file.read()

json_file.close()

loaded_model = model_from_json(loaded_model_json)

# load weights into new model (a separate name avoids shadowing keras.models.load_model)

loaded_model.load_weights("model_weights.h5")

While loading the model this way does not throw me an error message, I still get predictions much worse than my previously trained model. In order to ensure that the model loading works I did the following:

I checked the weights of the trained model and the loaded model and they appear to be the same.

Also checking the models summary "model.summary()" displays the same architecture.

So to my understanding, having the same architecture and the same weights should yield the same model i.e. results - but I can't figure out why this is not the case
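For anyone who wants to do the "weights appear to be the same" check mechanically rather than by eye, here is a minimal sketch. It assumes the weights are plain lists of NumPy arrays, which is what model.get_weights() returns; diff_weight_lists is a made-up helper name, and the arrays below are stand-ins rather than real model weights.

```python
import numpy as np


def diff_weight_lists(weights_a, weights_b, atol=0.0):
    """Compare two lists of arrays and return a list of (index, reason) tuples.

    An empty list means no differences were found. With atol=0.0 the
    comparison is exact.
    """
    problems = []
    if len(weights_a) != len(weights_b):
        problems.append((None, "different number of arrays: %d vs %d"
                         % (len(weights_a), len(weights_b))))
    for i, (a, b) in enumerate(zip(weights_a, weights_b)):
        a, b = np.asarray(a), np.asarray(b)
        if a.shape != b.shape:
            problems.append((i, "shape %s vs %s" % (a.shape, b.shape)))
        elif not np.allclose(a, b, rtol=0.0, atol=atol):
            problems.append((i, "max abs diff %g" % np.max(np.abs(a - b))))
    return problems


# Stand-in weights: kernel and bias of one small dense layer.
original = [np.ones((3, 2), dtype=np.float32), np.zeros(2, dtype=np.float32)]
identical = [w.copy() for w in original]
changed = [original[0], original[1] + 0.5]

print(diff_weight_lists(original, identical))
print(diff_weight_lists(original, changed))
```

If this reports no differences and the predictions still disagree, the cause is probably outside the weights (preprocessing, layer state, or how the model is rebuilt).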

What baffles me even further is that when I use model.get_weights() and model.set_weights() it works perfectly, e.g.:

#getting weights from the old model

weights = model.get_weights()

# setting weights of the new model

new_model.set_weights(weights)

Hence, my current workaround involves saving the weights as a NumPy (.npy) file and loading them once I restart my kernel

# saving

the_weights = model.get_weights()

np.save("weights_array", the_weights)

# loading upon restarting the kernel

the_weights = np.load('weights_array.npy')

new_model.set_weights(the_weights)

Maybe this is helpful for some of you as well!
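A possible variant of this workaround, in case np.save on the raw list gives trouble: the arrays in a weight list usually have different shapes, and recent NumPy versions are stricter about turning such a list into one array and about loading pickled object arrays. The sketch below stores each array under its own key with np.savez instead. save_weight_list and load_weight_list are hypothetical helper names, and the weights here are stand-ins for what model.get_weights() would return.

```python
import os
import tempfile

import numpy as np


def save_weight_list(path, weights):
    """Store each array under a zero-padded key so the order can be restored."""
    arrays = {"w%04d" % i: np.asarray(w) for i, w in enumerate(weights)}
    np.savez(path, **arrays)


def load_weight_list(path):
    """Load the arrays back in their original order."""
    with np.load(path) as data:
        return [data[key] for key in sorted(data.files)]


# Stand-in for model.get_weights(): arrays with different shapes.
weights = [np.arange(6, dtype=np.float32).reshape(3, 2),
           np.zeros(2, dtype=np.float32)]

path = os.path.join(tempfile.mkdtemp(), "weights.npz")
save_weight_list(path, weights)
restored = load_weight_list(path)
print([w.shape for w in restored])
```

After restarting the kernel and rebuilding the same architecture, the list returned by load_weight_list(path) would be passed to new_model.set_weights(...).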

Hi everyone

I had exactly the same issue previously. The saved Keras model works fine in the same session, but gives completely random results in another session.

However I finally found that it was this snippet of code that messed up the result.

data = open(filepath, encoding='utf-8').read().lower()

uniq = set(data)

# mapping

ch2idx = {word: idx for idx, word in enumerate(uniq)}

idx2ch = {idx: word for idx, word in enumerate(uniq)}

My model is using RNN to generate human's name, so I created a mapping from character to index.

However, according to this link, iterating over a set of strings can give a different order in each console/session since Python 3.3 (string hashing is randomized per process by default), so even if the model remains the same, this mapping will map ids to the wrong characters, and the result seems random.

For those who have the same issue as mine, please check that everything else your model depends on is unchanged between sessions. Even if your model is identical, other related variables can mess up your prediction results.
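One way to make the mapping above independent of set ordering is to sort the characters before enumerating them. This is a minimal sketch; build_char_maps is a name made up here, and the input string stands in for the contents of the names file.

```python
def build_char_maps(text):
    """Map characters to ids in sorted order so every session agrees."""
    chars = sorted(set(text.lower()))
    ch2idx = {ch: idx for idx, ch in enumerate(chars)}
    # Derive the inverse from ch2idx so the two maps cannot disagree.
    idx2ch = {idx: ch for ch, idx in ch2idx.items()}
    return ch2idx, idx2ch


# Stand-in for the contents of the names file.
data = "Anna\nBob\n"
ch2idx, idx2ch = build_char_maps(data)
print(ch2idx)
```

Sorting only helps as long as the training text is the same in both sessions. If the corpus can change, storing the mapping on disk alongside the model is the safer option.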

Is there any solution to this issue? I am running across the same issue, I am training a model on AWS. Every epoch takes about an hour to run, and I am not able to save the model. Is there any workaround to it?

I have also tried saving and loading weights, and the model as a json file, none of them seem to work.

Does the numpy workaround work? Sadly, I have to retrain my model and would really love to find a solution to this issue.

I solved this problem by saving the word_index in addition to the model. The reason is that when you call predict you also have to tokenize the sentence, and you have to apply the same indices as in the original word_index.

@alejandrods I am working on an image prediction model, so I don't think tokenizing applies here. I have checked for the order of output classes though, and they are the same through different runs.

Just to make things a little stranger - I'm training an image classifier using Xception with bottleneck features (saving in one session, then testing in another). When I save and load my classifier layers (a separate sequential model) things work well. When I have the classifier layers attached to the base network and only train those layers it also works. However in the latter case if I let any of the base network layers train then the results I get look like the weights have been randomly initialized.

One of the things I tried was to train the classifier layers only then let the last convolutional block train for one epoch at the end before saving - the results looked half way between randomly initialized and the bottleneck results. Training for any longer and it looks almost like a complete re-initialization. This is not a learning rate problem due to the 20% difference in accuracy score between validation accuracy during training and with reloading. It kinda looks like some weights are saving/loading and others are not.

Update:

I've compared the weights for each corresponding layer between the two networks and they are identical. This is the case both before and after calling model.evaluate_generator().

Update:

Well I have managed to get this to work by recompiling the model after every change (trainable flags, loading weights, etc..).

model.compile(optimizer=model.optimizer, loss=model.loss, metrics=model.metrics).

Although I don't think that this fully explains the problem I was seeing as the issue regressed after trying to tidy up the code a bit.

I am also experiencing same problem.

I trained a model on a machine with GPU using CudnnGRU, I saved the model weights. I then rebuilt a model with similar architecture but this time with GRU on machine with just CPU. I loaded the saved weights on to this new model and I see different prediction results.

Sadly, I cannot use the numpy work around as the shape of the weights change from CudnnGru to GRU.

I have same issue here.

Loading weight and evaluating model yields poor results.

What is interesting that if I start training again, after one epoch loss goes back to the correct value from previous training:

My setup:

- I'm not using any custom layers.

- Windows 10

- Using GPU

- Keras 2.1.3

- tensorflow-gpu 1.5.0

- python 3.6.4

The gotcha I found was that when using a sub-model in a Lambda layer (and nowhere else) the weights corresponding to the sub model were not saved. My hacky solution:

layer = L.Lambda(func_including_model)

layer.trainable_weights = included_model.trainable_weights

layer.non_trainable_weights = included_model.non_trainable_weights

I also needed to add updates for batch norm.

@mharradon that sounds serious, can you report a bug with an example?

I've opened a new issue with reproducing code: https://github.com/keras-team/keras/issues/9740

One possible trigger for this that I've found comes from the keras auto layer naming (i.e. if you don't explicitly give a layer a name then you get one that is indexed based on the number of times that layer has been created in that session). If you build your model(s) slightly differently or in a different order between train and test time code then these auto named layers may have a different name to what is stored in the weights file. This of course means that the load weights by name flag will be useless in this case, and also explains why the numpy save/load weights solution works for some people.

The safest way to avoid this case is to save the model architecture as well as the weights - then in the second session create the model directly from that save:

Saving Model

# Architecture

with open("model.json","w") as f:

    f.write(model.to_json())

# Weights

model.save_weights("model.hdf5")

Loading Model

# Architecture

with open("model.json","r") as f:

    json_str = f.read()

model = keras.models.model_from_json(json_str)

# Weights

model.load_weights("model.hdf5")

Hopefully this is helpful for some people.

This solution has only fixed one manifestation of this problem in my code. I'll post the solution to the other instance if I manage to work it out.

I have also found that fine-tuning batch-norm layers can also result in this problem (in Xception at least - haven't tested with the other BN models yet). My stop-gap solution is to freeze the BN layers using the method in #7085 while I get my head around why the BN is not working in my case.

I think someone said it before, but I'll comment anyway just in case someone has the same situation in the future.

I made a script with multiple calls to fit in order to train a model on multiple smallish files in batches. In order to save the state of the model for each next call to fit, I had to somehow save the model. I realized that saving and loading the model with model.save() and load_model() wasn't working, and I couldn't figure out why.

I tried saving the architecture and weights separately, which didn't work either, and finally decided to change my script in order to be able to train the model progressively without saving the model, but of course at the end of the session I would lose the progress.

Finally, I realized that the problem was just that Keras won't overwrite an existing file, so I would save the first model calling it 'Model.h5py' or whatever, and then load it in each call to fit, starting again from a very low accuracy each time. To solve this, I just made sure that the Model is saved differently each time, adding a counter to my script.

This is unlikely to be the root cause, because Keras does overwrite the old file in normal cases.

@KaitoHH Thank you for the solution. I ran into this issue and easily spent 3 hours on it. In the end it was caused by a Python set returning its elements in a different order in each session.

I'm having similar issues to the original problem where I am saving model weights, but when I load up the weights again, it's predicting essentially random probabilities. Has there been a fix to this yet? Thanks.

@enriqueav Thanks, I had the same problem, my sample list was in different order per session due to python set. Thus my LSTM would have its accuracy reduced to baseline when I loaded the weights.
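For anyone hitting this set-ordering variant, here is a minimal sketch, assuming the token-to-index mapping is built from a Python set somewhere in preprocessing. The helper names (`build_vocab`, `save_vocab`, `load_vocab`) and the `vocab.json` file are made up for illustration and are not part of Keras. The idea is to sort before assigning indices and to store the mapping next to the weights, so that the loading session reuses exactly the same indices.

```python
import json
import os
import tempfile


def build_vocab(tokens):
    """Map each distinct token to an index, in sorted order.

    Iterating over a set of strings can give a different order in each
    interpreter session (string hashing is randomized), so sort first.
    """
    return {token: index for index, token in enumerate(sorted(set(tokens)))}


def save_vocab(vocab, path):
    """Store the mapping so a later session can reuse it unchanged."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(vocab, f, ensure_ascii=False)


def load_vocab(path):
    """Read back the mapping written by save_vocab."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


if __name__ == "__main__":
    vocab = build_vocab(["the", "cat", "sat", "the", "mat"])
    path = os.path.join(tempfile.mkdtemp(), "vocab.json")  # hypothetical location
    save_vocab(vocab, path)
    # The loading session should use the stored mapping, not rebuild it.
    assert load_vocab(path) == vocab
    print(vocab)
```

Sorting alone makes the mapping reproducible for the same data; saving it as well also covers the case where the second session sees different data.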

I have a similar problem. I use the sample code from the Keras documentation, shown below, to save and reload the model and weights, but the reloaded model gives wrong results. The model has around 1000 layers, and I think the problem may be caused by a mismatch between weights and layers in newmodel.set_weights(weights). Is there any solution?

json_string = model.to_json()

weights = model.get_weights()

newmodel = model_from_json(json_string)

newmodel.set_weights(weights)

Hi guys, I had a similar issue with the TensorFlow backend for Keras, and the latest nightly build solved the problem. I'm not sure whether that helps with this issue.

I have re-encountered the same bug in a totally different model. The model is https://arxiv.org/abs/1703.09452, basically consisting of a series of 1D convolutional layers with skip connections (the full model is a GAN, but for the time being I'm just training the generator). So there are no recurrent layers, no preprocessing, nothing fancy.

One thing that I noticed, both with this model and the previous one, is that with a small amount of training the loss barely increases after saving and loading, but the more the model is trained, the more the loss increases after saving and loading. Could it be related to machine precision?

What I have tried so far:

- Giving an explicit name to the model layers, and loading by name (the increase in the loss seems to be even greater in this case)

- Setting PYTHONHASHSEED=0 as an environment variable.
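A note on the second item: Python reads PYTHONHASHSEED at interpreter start-up, so it has to be set before the process launches (setting `os.environ` inside a running script does not change hashing for that process). The sketch below is only an illustration; the helper name `set_order_in_fresh_interpreter` and the layer-name strings are made up. It starts fresh interpreters with a given seed and shows how each one orders a small set.

```python
import os
import subprocess
import sys


def set_order_in_fresh_interpreter(hash_seed):
    """Start a new Python process and return how it orders a small set."""
    # The seed must be in the environment before the interpreter starts.
    env = dict(os.environ, PYTHONHASHSEED=str(hash_seed))
    code = "print(list({'conv', 'prelu', 'add', 'reshape'}))"
    result = subprocess.run(
        [sys.executable, "-c", code],
        env=env,
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    # Same seed: the order is reproducible across processes.
    print(set_order_in_fresh_interpreter(0))
    print(set_order_in_fresh_interpreter(0))
    # Other seeds may (but need not) give a different order.
    print(set_order_in_fresh_interpreter(1))
```

If the order matters, sorting explicitly is more robust than relying on the seed.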

@darteaga do you have batchnorm layers? I found them to be problematic in #10784

@Dapid No, I don't have batchnorm layers... The only layers I have are:

- Conv2D

- PReLU

- Conv2DTranspose

- Add

- Reshape

- Conv1D

Sequential layers with skip connections.

Thank you very much.

Is there any known solution or workaround?

I can't publish the exact code or the data, but I'm willing to help in testing / debugging.

This is a stopper bug for me that I have hit twice with two different models. If unresolved, I'll need to switch to a different DL framework.

Can you check the weights of the original and re-loaded model? They should match exactly.

@Dapid Good idea. I have done the following test:

- Reloading the weights of the model

- Saving them again with save_weights()

Then I have compared the original and re-loaded model weights in the hdf5 file (with the tool h5diff) and I have found that they are _identical_.

@darteaga can you check it on the loaded model? It is possible that some weights are being transposed wrongly when reading from the HDF5 file.

@Dapid How can I do the test that you propose? If I look at the weights with model.weights I see TensorFlow tensors, and I don't know how to compare them (thank you very much, btw).

@darteaga

from keras import backend as K

K.batch_get_value(m.weights[0])
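Once the weights are available as NumPy arrays (for example from `model.get_weights()` on both the original and the reloaded model), they can be compared array by array. The helper below is a minimal sketch under that assumption; `compare_weights` is a made-up name, not a Keras function. It reports which arrays differ in count, shape or value, which may help spot a transposed or misassigned weight.

```python
import numpy as np


def compare_weights(weights_a, weights_b, atol=0.0):
    """Compare two lists of arrays, e.g. from model.get_weights().

    Returns a list of (index, reason) tuples, one per differing entry.
    An empty list means the two lists match (exactly, when atol is 0).
    """
    if len(weights_a) != len(weights_b):
        reason = "different number of arrays: %d vs %d" % (len(weights_a), len(weights_b))
        return [(None, reason)]

    problems = []
    for i, (a, b) in enumerate(zip(weights_a, weights_b)):
        a, b = np.asarray(a), np.asarray(b)
        if a.shape != b.shape:
            problems.append((i, "shape %s vs %s" % (a.shape, b.shape)))
        elif not np.allclose(a, b, rtol=0.0, atol=atol):
            problems.append((i, "max abs diff %g" % np.max(np.abs(a - b))))
    return problems


if __name__ == "__main__":
    original = [np.ones((2, 3), dtype="float32"), np.zeros(3, dtype="float32")]
    reloaded = [original[0].T.copy(), original[1] + 0.5]  # deliberately broken
    for index, reason in compare_weights(original, reloaded):
        print(index, reason)
```

With real models the call would look like `compare_weights(model.get_weights(), reloaded_model.get_weights())`.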

@Dapid Thanks. By looking at this, I discovered that in my case the issue this time is related to using multi_gpu_model, and the template and multi-gpu models apparently not sharing the weights. I am still investigating, and I will post the results here when I am done.

In my case the root cause of the problem was using multi_gpu_model. I have found a bug with cpu_relocation. I have opened another issue: https://github.com/keras-team/keras/issues/11313

I'll give a detailed description below.

keras: 2.2.2

tensorboard: 1.10.0

tensorflow-gpu: 1.10.1

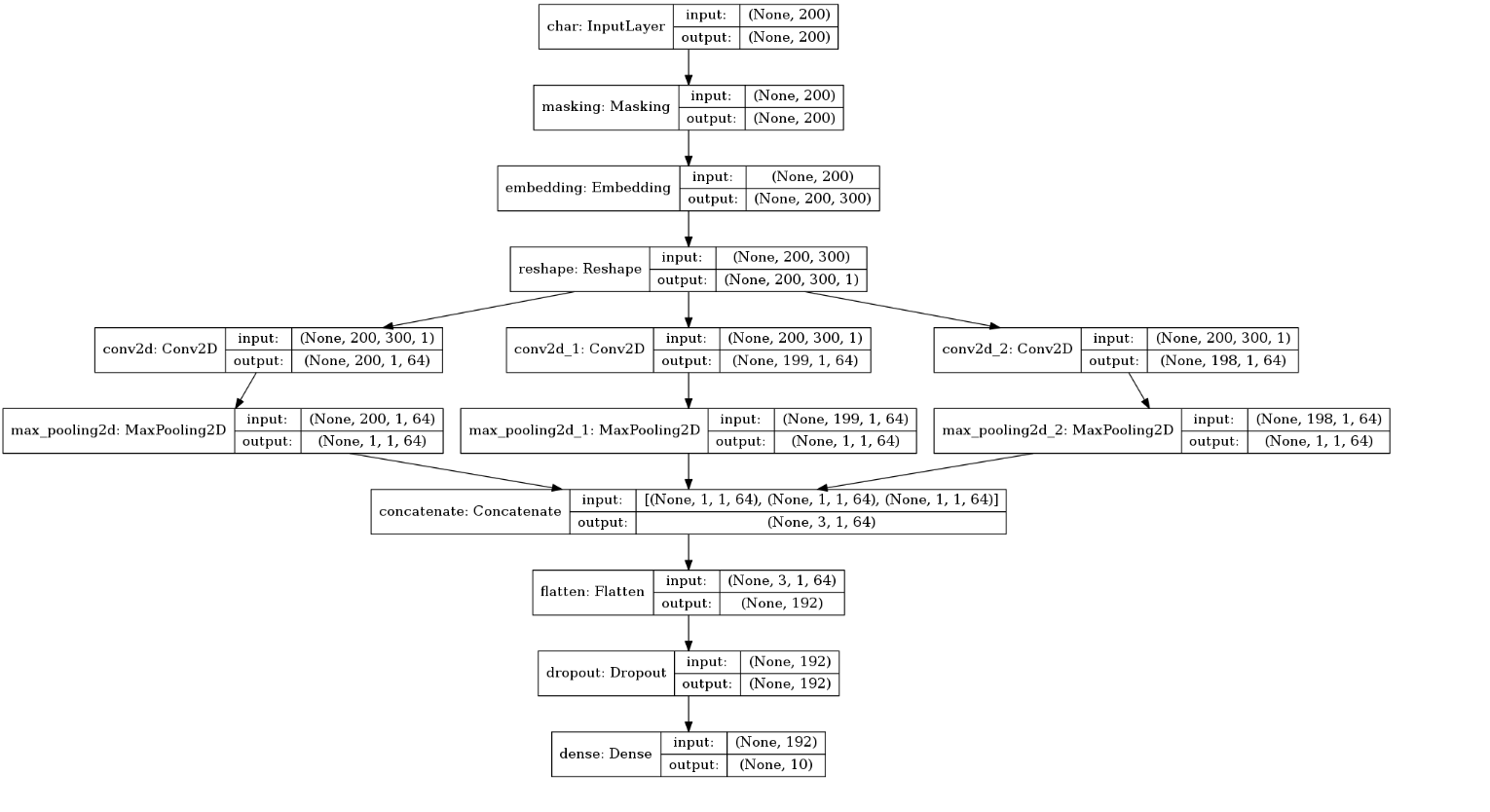

"RNN problem?"

I have the same problem with the TextCNN model below, used for text classification (which shows this is not an RNN-only problem):

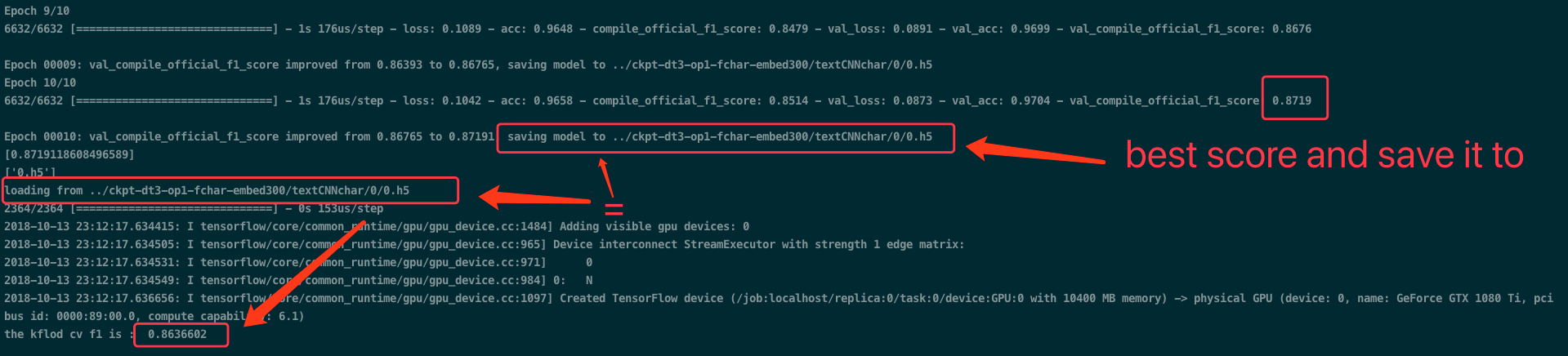

All layers are trainable. I saved my model at its best F1 score, 0.8719, then loaded that best model to predict on the same validation dataset, but got a different score: 0.8636.

"Your models have the different parameter."

I checked my parameters as @kevinjos suggested. Whenever a new best F1 score appears, I save the model weights as follows:

self.model.save_weights(save_path)

pickle.dump(self.model.get_weights(), open('./debug_best_weight.pkl', 'wb'))

Then, after model.fit ends, I check them:

self.model = load_model(save_path)

m_weights = self.model.get_weights()

m_best_weight = pickle.load(open('./debug_best_weight.pkl', 'rb'))

assert len(m_best_weight) == len(m_weights)

for i in range(len(m_weights)):

    assert np.array_equal(m_best_weight[i], m_weights[i])

print("Model weight serialization test passed")

The output is:

Model weight serialization test passed

Unfortunately, all the parameters are identical, yet I get a different score with the same model, the same parameters and the same validation dataset. So:

"You use a different function to calculate score"

So you might say I use a different function to calculate the F1 score, but I don't.

I use a custom callback and a custom F1 metric:

self.model.compile(loss='binary_crossentropy', optimizer='adam', metrics=[JZTrainCategory.compile_official_f1_score])

During training, I got a best score of 0.8719. When I then use the same score function as below, the result changes:

sess = tf.Session()

with sess.as_default():

    score = JZTrainCategory.compile_official_f1_score(K.constant(y_test), K.constant(oof_pred_)).eval()

The score is 0.8636; see the picture below:

"You write a wrong score function"

My score function runs correctly. Even if it were wrong, the saved model should give me the same wrong result. You can see my whole custom callback and the score function JZTrainCategory.compile_official_f1_score here:

import tensorflow.keras as keras

from tensorflow.keras import backend as K

import numpy as np

import warnings

import glob

import os

from tensorflow.keras.models import load_model

from tensorflow.keras.models import save_model

import pickle

class JZTrainCategory(keras.callbacks.Callback):

    def __init__(self, filepath, nb_epochs=20, nb_snapshots=1, monitor='val_loss', factor=0.1, verbose=1, patience=1,

                 save_weights_only=False,

                 mode='auto', period=1):

        super(JZTrainCategory, self).__init__()

        self.nb_epochs = nb_epochs

        self.monitor = monitor

        self.verbose = verbose

        self.filepath = filepath

        self.factor = factor

        self.save_weights_only = save_weights_only

        self.patience = patience

        self.r_patience = 0

        self.check = nb_epochs // nb_snapshots

        self.monitor_val_list = []

        if mode not in ['auto', 'min', 'max']:

            warnings.warn('ModelCheckpoint mode %s is unknown, '

                          'fallback to auto mode.' % (mode),

                          RuntimeWarning)

            mode = 'auto'

        if mode == 'min':

            self.monitor_op = np.less

            self.init_best = np.Inf

        elif mode == 'max':

            self.monitor_op = np.greater

            self.init_best = -np.Inf

        else:

            if 'acc' in self.monitor or self.monitor.startswith('fmeasure'):

                self.monitor_op = np.greater

                self.init_best = -np.Inf

            else:

                self.monitor_op = np.less

                self.init_best = np.Inf

    @staticmethod

    def compile_official_f1_score(y_true, y_pred):

        y_true = K.reshape(y_true, (-1, 10))

        y_true = K.cast(y_true, 'float32')

        y_pred = K.round(y_pred)

        tp = K.sum(y_pred * y_true)

        fp = K.sum(K.cast(K.greater(y_pred - y_true, 0.), 'float32'))

        fn = K.sum(K.cast(K.greater(y_true - y_pred, 0.), 'float32'))

        p = tp / (tp + fp)

        r = tp / (tp + fn)

        f = 2*p*r/(p+r)

        return f

    def on_batch_begin(self, batch, logs={}):

        return

    def on_batch_end(self, batch, logs={}):

        return

    def on_train_end(self, logs={}):

        print(self.monitor_val_list)

        return

    def on_train_begin(self, logs={}):

        self.init_lr = K.get_value(self.model.optimizer.lr)

        self.best = self.init_best

        return

    def on_epoch_begin(self, epoch, logs=None):

        return

    def on_epoch_end(self, epoch, logs=None):

        logs = logs or {}

        logs['lr'] = K.get_value(self.model.optimizer.lr)

        n_recurrent = epoch // self.check

        self.save_path = '{}/{}.h5'.format(self.filepath, n_recurrent)

        dir_path = '{}'.format(self.filepath)

        os.makedirs(dir_path, exist_ok=True)

        current = logs.get(self.monitor)

        if current is None:

            warnings.warn('Can save best model only with %s available, '

                          'skipping.' % (self.monitor), RuntimeWarning)

        else:

            if self.monitor_op(current, self.best):

                # if better result: save model

                if self.verbose > 0:

                    print('\nEpoch %05d: %s improved from %0.5f to %0.5f,'

                          ' saving model to %s'

                          % (epoch + 1, self.monitor, self.best,

                             current, self.save_path))

                self.best = current

                if self.save_weights_only:

                    self.model.save_weights(self.save_path)

                    pickle.dump(self.model.get_weights(), open('./debug_weight.pkl', 'wb'))

                else:

                    # save_model(self.model, self.save_path)

                    self.model.save(self.save_path)

            else:

                # if worse result: reload last best model saved

                self.r_patience += 1

                if self.verbose > 0:

                    if self.r_patience == self.patience:

                        print('\nEpoch %05d: %s did not improve from %0.5f' %

                              (epoch + 1, self.monitor, self.best))

                        if self.save_weights_only:

                            self.model.load_weights(self.save_path)

                        else:

                            self.model = load_model(self.save_path, custom_objects={'compile_official_f1_score': JZTrainCategory.compile_official_f1_score})

                        # set new learning rate

                        old_lr = K.get_value(self.model.optimizer.lr)

                        new_lr = old_lr * self.factor

                        K.set_value(self.model.optimizer.lr, new_lr)

                        print('\nReload model and decay learningrate from {} to {}\n'.format(old_lr, new_lr))

                        self.r_patience = 0

        if (epoch+1) % self.check == 0:

            self.monitor_val_list.append(self.best)

            self.best = self.init_best

            if (epoch+1) != self.nb_epochs:

                K.set_value(self.model.optimizer.lr, self.init_lr)

                print('At epoch-{} reset learning rate to mountain-top init lr {}'.format(epoch+1, self.init_lr))
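One thing that may be worth ruling out in a callback like this is the line `self.model = load_model(...)`. In plain Python terms, that assignment only rebinds the callback's own attribute to a new object; whatever object the training loop holds is not replaced. By contrast, `self.model.load_weights(...)` changes the existing object in place. The sketch below illustrates the difference with stand-in classes only (`ToyModel`, `RebindingCallback`, `InPlaceCallback` and `run` are invented for this example and are not Keras classes), so it shows the reference semantics, not a confirmed diagnosis of the callback above.

```python
class ToyModel:
    """Stand-in for a model; a single number plays the role of the weights."""

    def __init__(self, weight=0.0):
        self.weight = weight


class RebindingCallback:
    """Mimics `self.model = load_model(path)` inside a callback."""

    def set_model(self, model):
        self.model = model

    def restore(self, saved_weight):
        # Creates a new object; the trainer's model is left untouched.
        self.model = ToyModel(saved_weight)


class InPlaceCallback(RebindingCallback):
    """Mimics `self.model.load_weights(path)`: same object, new values."""

    def restore(self, saved_weight):
        self.model.weight = saved_weight


def run(callback):
    """Return (model held by the trainer, model held by the callback)."""
    trained = ToyModel()
    callback.set_model(trained)
    trained.weight = 5.0   # pretend some training happened
    callback.restore(1.0)  # pretend an earlier checkpoint was reloaded
    return trained, callback.model


if __name__ == "__main__":
    for callback in (RebindingCallback(), InPlaceCallback()):
        trained, seen = run(callback)
        print(type(callback).__name__, seen is trained, trained.weight, seen.weight)
```

If the two objects diverge like this, anything the callback later saves or adjusts through `self.model` would refer to the reloaded copy rather than the model being trained.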

More tests

I also tested model.load_weights; the results are similar, near 0.864, but they are still not equal.

I don't know how to fix this bug, and it makes me very uneasy. The difference is about 1 percentage point.

I hope someone can help. Thanks in advance. This has cost me a lot of time. Oh gosh.
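A possible explanation for a gap of this size, independent of saving and loading: a metric passed to `compile` is evaluated per batch and the per-batch values are averaged, whereas the check after training computes F1 once over the whole validation set. F1 is not a linear function of the counts, so the two numbers generally differ even with identical weights. The NumPy sketch below mirrors the tp/fp/fn arithmetic of the metric above on made-up data; `f1_score`, `batch_averaged_f1` and the toy arrays are illustrative assumptions, and the sketch does not prove that this is the cause in this particular case.

```python
import numpy as np


def f1_score(y_true, y_pred):
    """F1 from global tp/fp/fn counts, same arithmetic as the Keras metric above."""
    y_pred = np.round(y_pred)
    tp = np.sum(y_pred * y_true)
    fp = np.sum((y_pred - y_true) > 0)
    fn = np.sum((y_true - y_pred) > 0)
    p = tp / (tp + fp)
    r = tp / (tp + fn)
    return 2 * p * r / (p + r)


def batch_averaged_f1(y_true, y_pred, batch_size):
    """Size-weighted mean of per-batch F1 values."""
    scores, sizes = [], []
    for start in range(0, len(y_true), batch_size):
        stop = start + batch_size
        scores.append(f1_score(y_true[start:stop], y_pred[start:stop]))
        sizes.append(len(y_true[start:stop]))
    return float(np.average(scores, weights=sizes))


if __name__ == "__main__":
    # Toy data: the first batch is predicted perfectly, the second has false positives.
    y_true = np.array([1, 1, 1, 1, 1, 0, 0, 0], dtype="float32")
    y_pred = np.full(8, 0.9, dtype="float32")
    print("whole set     :", f1_score(y_true, y_pred))
    print("batch-averaged:", batch_averaged_f1(y_true, y_pred, batch_size=4))
```

A way to check this would be to compute the score on the full validation predictions inside the callback at the end of each epoch and monitor that value instead of the batch-averaged one.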

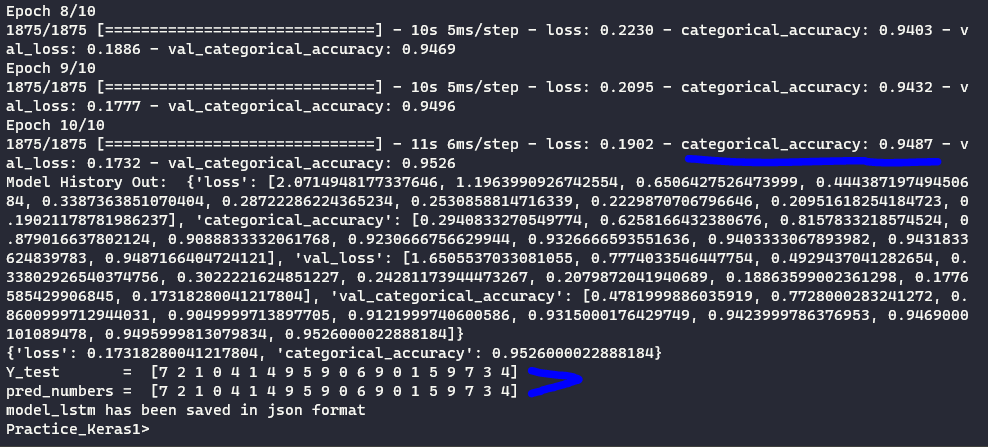

I was able to work around this problem by using TensorFlow Saver.

Training