Julia: BLAS threads should default to physical not logical core count?

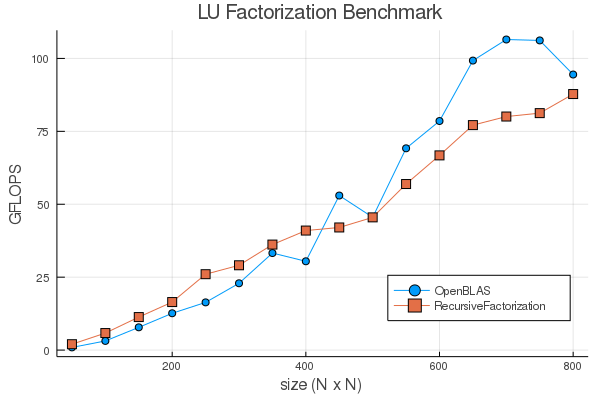

On an i7-8550u, OpenBLAS is defaulting to 8 threads. I was comparing to RecursiveFactorizations.jl, and saw the performance is like:

using BenchmarkTools

import LinearAlgebra, RecursiveFactorization

ccall((:openblas_get_num_threads64_, Base.libblas_name), Cint, ())

LinearAlgebra.BLAS.set_num_threads(4)

BenchmarkTools.DEFAULT_PARAMETERS.seconds = 0.5

luflop(m, n) = n^3÷3 - n÷3 + m*n^2

luflop(n) = luflop(n, n)

bas_mflops = Float64[]

rec_mflops = Float64[]

ns = 50:50:800

for n in ns

A = rand(n, n)

bt = @belapsed LinearAlgebra.lu!($(copy(A)))

rt = @belapsed RecursiveFactorization.lu!($(copy(A)))

push!(bas_mflops, luflop(n)/bt/1e9)

push!(rec_mflops, luflop(n)/rt/1e9)

end

using Plots

plt = plot(ns, bas_mflops, legend=:bottomright, lab="OpenBLAS", title="LU Factorization Benchmark", marker=:auto)

plot!(plt, ns, rec_mflops, lab="RecursiveFactorization", marker=:auto)

xaxis!(plt, "size (N x N)")

yaxis!(plt, "GFLOPS")

1 thread

4 threads

8 threads

Conclusion: the default that Julia chooses, 8 threads, is the worst, with 1 thread doing better. But using the number of physical cores, 4, is best.

So there were a lot of issues on Discourse and Slack #gripes where essentially "setting BLAS threads to 1 is better than the default!", but it looks like it's because the default should be the number of physical and not logical threads. I am actually very surprised it's not set that way, and so I was wondering why, and also where this default is set (I couldn't find it).

All 41 comments

Interestingly, on Linux I checked our benchmarking server and see:

processor : 15

vendor_id : GenuineIntel

cpu family : 6

model : 63

model name : Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz

stepping : 0

microcode : 0x3d

cpu MHz : 2394.455

cache size : 35840 KB

physical id : 30

siblings : 1

core id : 0

cpu cores : 1

apicid : 30

initial apicid : 30

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts nopl xtopology tsc_reliable nonstop_tsc eagerfpu pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm ssbd ibrs ibpb stibp fsgsbase tsc_adjust bmi1 avx2 smep bmi2 invpcid xsaveopt arat spec_ctrl intel_stibp flush_l1d arch_capabilities

bogomips : 4788.91

clflush size : 64

cache_alignment : 64

address sizes : 42 bits physical, 48 bits virtual

power management:

[crackauckas@pumas ~]$ julia

_

_ _ _(_)_ | Documentation: https://docs.julialang.org

(_) | (_) (_) |

_ _ _| |_ __ _ | Type "?" for help, "]?" for Pkg help.

| | | | | | |/ _` | |

| | |_| | | | (_| | | Version 1.2.0 (2019-08-20)

_/ |\__'_|_|_|\__'_| | Official https://julialang.org/ release

|__/ |

julia> ccall((:openblas_get_num_threads64_, Base.libblas_name), Cint, ())

8

and it's correct there, so this may be an issue with Windows.

This doesn't seem like a Windows-only problem.

julia> versioninfo(verbose=true)

Julia Version 1.3.0-rc2.0

Commit a04936e3e0 (2019-09-12 19:49 UTC)

Platform Info:

OS: Linux (x86_64-linux-gnu)

Ubuntu 19.04

uname: Linux 5.0.0-29-generic #31-Ubuntu SMP Thu Sep 12 13:05:32 UTC 2019 x86_64 x86_64

CPU: Intel(R) Core(TM) i7-6820HQ CPU @ 2.70GHz:

speed user nice sys idle irq

#1 2700 MHz 384385 s 2674 s 128341 s 7458186 s 0 s

#2 2700 MHz 381396 s 3631 s 125158 s 1466384 s 0 s

#3 2700 MHz 304236 s 3488 s 99122 s 1470836 s 0 s

#4 2700 MHz 421380 s 4702 s 137860 s 1454895 s 0 s

#5 2700 MHz 192528 s 2218 s 83784 s 1472282 s 0 s

#6 2700 MHz 267616 s 1661 s 82347 s 1470275 s 0 s

#7 2700 MHz 309143 s 1778 s 98877 s 1478079 s 0 s

#8 2700 MHz 287978 s 3788 s 85621 s 1470172 s 0 s

Memory: 15.530269622802734 GB (4015.9765625 MB free)

Uptime: 238530.0 sec

Load Avg: 0.39208984375 0.30615234375 0.16015625

WORD_SIZE: 64

LIBM: libopenlibm

LLVM: libLLVM-6.0.1 (ORCJIT, skylake)

Environment:

HOME = /home/scheme

OPTFLAGS = -load=/home/scheme/julia/usr/lib/libjulia.so

PATH = /home/scheme/.cargo/bin:/home/scheme/Downloads/VSCode-linux-x64/code-insiders:/sbin:/home/scheme/.cargo/bin:/home/scheme/Downloads/VSCode-linux-x64/code-insiders:/sbin:/home/scheme/.cargo/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin

TERM = screen-256color

WINDOWPATH = 2

julia> ccall((:openblas_get_num_threads64_, Base.libblas_name), Cint, ())

8

My Linux laptop has four physical cores.

Isn't 8 coming from here?

https://github.com/JuliaLang/julia/blob/318affa294e8d493b1b3edcc3e06df26c97eb4bb/base/Base.jl#L395-L403

See also

https://github.com/JuliaLang/julia/blob/318affa294e8d493b1b3edcc3e06df26c97eb4bb/base/sysinfo.jl#L56-L63

Ref: #27856

Here is the comparison of the 4 thread case on an Intel i7-6770HQ (Skull Canyon NUC) with using the Intel MKL

That shows? It seems RecursiveFactorizations is only faster when the BLAS is not well-tuned, but I don't understand how what you show is related @macd . Could you show the 8 thread version of MKL to see if MKL is also not smart with the setting being at logical?

Here are the MKL results as a function of number of threads on the Skull Canyon NUC. It has 4 cores and 8 threads. Clearly a stumble with 8 threads around n = 500, but it is still better than 4. Apparently Intel knows how to use the hyper threads more effectively.

That explains a lot. Thanks

So I have egg all over my face on this one. Just looked at the code in the window I left open this morning to see I incorrectly used an index rather than the indexed value for the number of threads. The above graph is then for 1,2,3,4 threads. The following graph shows no significant difference between 4 and 8 threads. (I never was an early morning person.)

Ahh yes, so it looks like it's just smart and doesn't actually run more threads or something like that. Since OpenBLAS doesn't do that, this would account for a good chunk of the difference given the default settings.

Can we easily detect the number of actual cores versus logical cores? We renamed the old Sys.CPU_CORES to Sys.CPU_THREADS fortunately, so we have the name Sys.CPU_CORES available for that value but I'm not sure how best to get it.

Is lscpu reliably on Linux machines?

It correctly identified 10 cores per socket and 2 threads per core:

> lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 46 bits physical, 48 bits virtual

CPU(s): 20

On-line CPU(s) list: 0-19

Thread(s) per core: 2

Core(s) per socket: 10

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 85

Model name: Intel(R) Core(TM) i9-7900X CPU @ 3.30GHz

Stepping: 4

CPU MHz: 3980.712

CPU max MHz: 4500.0000

CPU min MHz: 1200.0000

BogoMIPS: 6600.00

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 1024K

L3 cache: 14080K

NUMA node0 CPU(s): 0-19

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l3 cdp_l3 invpcid_single pti ssbd mba ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm mpx rdt_a avx512f avx512dq rdseed adx smap clflushopt clwb intel_pt avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts md_clear flush_l1d

> lscpu | grep "Core(s) per socket"

Core(s) per socket: 10

Some possibilities for figuring out the number of physical cores:

- https://en.wikipedia.org/wiki/CPUID

- https://c9x.me/x86/html/file_module_x86_id_45.html

- https://stackoverflow.com/a/46201896/659248:

smp_processor_id()

We may be able to borrow something from https://github.com/m-j-w/CpuId.jl

Another possible library for figuring out the number of cores (from @RoyiAvital):

Just as a note, we already do a bunch of cpuid stuff to support picking functions optimized for a feature-set at runtime e.g.

Perhaps extending that to pick out the number of cores instead of bundling a whole other library is more "clean".

I'm not very familiar with things this close to hardware. But, IIUC, CPUID is not the full story and I suppose you need to run the instruction on each core/socket. Does julia has a facility to do that in all supported platforms?

Also, I noticed that there is an issue tracked in libuv https://github.com/libuv/libuv/issues/1967 for adding the support for physical cores to uv_cpu_info (which is called via Sys.cpu_info). I guess it'd be ideal if it's supported by libuv.

BTW, It looks like uv_cpu_info just looks into OS's API (e.g., /proc/cpuinfo in Linux):

https://github.com/JuliaLang/libuv/blob/julia-uv2-1.29.1/src/unix/linux-core.c

https://github.com/JuliaLang/libuv/blob/julia-uv2-1.29.1/src/unix/darwin.c

https://github.com/JuliaLang/libuv/blob/julia-uv2-1.29.1/src/win/util.c

Maybe another option is to call such APIs directly from Julia? For example, parsing /proc/cpuinfo sounds very easy.

FYI: Meanwhile, I wrote a simple helper Python script to launch Julia with appropriate JULIA_CPU_THREADS: https://github.com/tkf/julia-t. It does something equivalent to lscpu -p | grep -v '^#' | sort --unique --field-separator=, --key=2 | wc -l to get the number of threads. It's useful to use this via Distributed.addprocs.

It seems MKL limits the maximum threads to the number of physical cores (i9-9900K (8 cores)):

julia> ccall((:mkl_get_max_threads, "libmkl_rt"), Int32, ())

8

julia> ccall((:mkl_set_num_threads, "libmkl_rt"), Cvoid, (Ptr{Int32},), Ref(Int32(16)))

julia> ccall((:mkl_get_max_threads, "libmkl_rt"), Int32, ())

8

julia> ccall((:mkl_set_num_threads, "libmkl_rt"), Cvoid, (Ptr{Int32},), Ref(Int32(4)))

julia> ccall((:mkl_get_max_threads, "libmkl_rt"), Int32, ())

4

Is this stuff done automatically in Julia 1.5?

Not that I'm aware of. No one ever figured out how to get the right number of threads.

I am sure that this has already been suggested, but what if the is a temporary solution of just taking half of the current number because that would be the right calculation in most cases?

Even if AMD processors count logical cores different than intel (maybe they don't?...), it seems like it might be better to choose a default which matches the higher market share for now so that the MKL vs. OpenBLAS is more speed is more comparible. Others can always increase the number of cores if they wish.

No one ever figured out how to get the right number of threads.

I think it just has to be handled via whatever the API given by the OS. For example, in Linux, you'd want to make it cgroup-aware rather than using the hardware information directly.

$ sudo docker run --cpuset-cpus=0-2 -i -t --rm julia

_

_ _ _(_)_ | Documentation: https://docs.julialang.org

(_) | (_) (_) |

_ _ _| |_ __ _ | Type "?" for help, "]?" for Pkg help.

| | | | | | |/ _` | |

| | |_| | | | (_| | | Version 1.4.1 (2020-04-14)

_/ |\__'_|_|_|\__'_| | Official https://julialang.org/ release

|__/ |

(@v1.4) pkg> add CpuId

...

julia> using CpuId

[ Info: Precompiling CpuId [adafc99b-e345-5852-983c-f28acb93d879]

julia> cpucores()

4

julia> cputhreads()

8

shell> cat /sys/fs/cgroup/cpuset/cpuset.cpus

0-2

julia> Sys.CPU_THREADS

8

Here, using Threads.nthreads() == 3 with julia -t sounds like the best approach.

Parsing /proc and /sys sounds easy to do for Linux. I'm not sure about other OSes, though.

(Edit: fix the example to use --cpuset-cpus=0-2 instead of --cpuset-cpus=0-3)

We (probably) don't want to make all of the CpuId package a dependency of Julia, so in order to use that, we would have to extract a minimal piece of it that lets us get the number of cores and use that.

Short of that, defaulting to half the number of CPU threads is probably better than saturating the cores. Of course, there are other computations than BLAS and they generally do benefit from hyperthreads, so maybe not. And if anyone has gone to the trouble of disabling hyperthreading, they're suddenly going to see their BLAS-intensive Julia code get half the FLOPS, so not great.

Having libuv support this would be nice but since that's a feature request issue and there's no PR to implement it, whereas we already have Julia code that implements using CPUID to detect the number of cores and threads, it seems better to do that for now. If libuv ever adds the feature, we can always switch to using that instead.

@KristofferC's observation that we already do a bunch of CPU detection stuff in C is actually the most promising avenue, imo: write C code that figures out the right number of CPU cores and threads and then expose that number as a C global or C function and set the relevant Julia globals and then use those. So someone "just" needs to write C code that does this now.

My point was that CPUID does not give us the correct information. I think it's better to parse /proc and/or /sys at least for Linux.

How does it not agree? cpucores() == 4 and cat /sys/fs/cgroup/cpuset/cpuset.cpus shows for CPUs with IDs 0, 1, 2 and 3.

Ah, sorry, my example was completely misleading (I used Julia too much and cannot think in 0-origin any more...). I should have used something other than --cpuset-cpus=0-3. If I use --cpuset-cpus=0-2 (as in the updated example now), cpucores() still returns 4 while cat /sys/fs/cgroup/cpuset/cpuset.cpus shows 0-2. I can see that julia process uses only 3 CPUs if I do something like LinearAlgebra.peakflops().

We can certainly handle Linux specially, but we need to do something on all platforms.

Maybe we should ship https://github.com/JuliaParallel/Hwloc.jl with Julia?

Hwloc is pretty heavyweight. Let's not add more binary dependencies for this. It seems like a very small piece of CpuId as a generic fallback to figure out the number of physical cores with special detection logic for specific platforms, (e.g. catting /proc or /sys on Linux) is the way to go. In the future as people propose better ways to figure out the right core counts on other platforms, we can add special cases for them.

Not sure if this is of any help - I just ran into this issue on CoCalc. They use KVM virtual machines (in my case with 4 cores and 8 hyperthreads), and Julia uses 8 OpenBLAS threads. On other Linux systems, I've seen Julia using the number of physical cores for OpenBLAS, but not on all systems.

It seems coupled to the output of nproc - on the CoCalc VM, for example, nproc is the same as nproc --all, on other systems I've seen nproc give the number of cores and nproc --all the number of hyperthreads - but not on all systems: I've also seen nproc == nproc --all on some standard, non-virtualized Linux boxes with hyperthreading. Haven't been able to figure out why.

Btw., would it be possible to increase the number of maximum OpenBLAS threads Julia uses? On my Epyc-2 server (64 physical cores, 128 hyperthreads, single socket), I get ccall((:openblas_get_num_threads64_, Base.libblas_name), Cint, ()) == 32. I would love to see how OpenBLAS+Julia scales to the full 64 cores.

I thought @staticfloat had a high-thread count openblas in BinaryBuilder. I'm not sure how to use it, but we should put those instructions in a prominent place.

Clearly the current default of 16 is too small.

Clearly the current default of 16 is too small.

Is it really 16? I do get 32 BLAS threads with Julia v1.4/v1.5 on that 64-core machine.

Yeah, it is 32 in BB but different in deps/blas.jl. The https://github.com/JuliaPackaging/Yggdrasil/tree/master/O/OpenBLAS collection also has the high core count version built. Not sure how to use it.

Is there a technical necessity to have a hard upper limit (don't know the technical depths, here), or could the user be allowed to set any `$OPENBLAS_NUM_THREADS$ value they like, in the future?

It is a build time option for OpenBLAS, and we try to be conservative because it leads to significant memory consumption to have a default that is too high. I don't know if recent versions have fixed this, and if we can set the number to something big - like 256.

Ah, I see! Grrr, why oh why does OpenBLAS need to know that at build time ... :-)

Relevant: https://github.com/xianyi/OpenBLAS/blob/develop/USAGE.md

While eventually we should integrate openblas threading with Julia's, I wonder if we can solve the current issue by moving to openmp. That will have the benefit of also playing nicely with the existing openmp compiled librares in BB.

What thread scheduler to use does not seem to have anything to do with buffer allocation. That should be something to be fixed separately.

I wonder if BLAS actually does some code generation based on the max. number of threads or so? I guess it's not a compile-time option on a whim?

No code generation. AFAICT it uses stack buffer for cheap and reentraint allocation. The easiest first step to try should be to just use VLA when supported.

Most helpful comment

Just as a note, we already do a bunch of cpuid stuff to support picking functions optimized for a feature-set at runtime e.g.

https://github.com/JuliaLang/julia/blob/cf544e6afab38351eeac049af1956153e24ec99c/src/processor_x86.cpp#L5-L28.

Perhaps extending that to pick out the number of cores instead of bundling a whole other library is more "clean".