Describe the bug

I have a web application running in a Pod with an Istio sidecar, and I get random 503 errors returned by the sidecar itself (Envoy); the requests never reach the web application. This happens even under very low load. In the logs below, a request to the /logout endpoint gets a 503 error and never reaches the web application.
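In the logs below, the request arrives at the sidecar with ':path' '//logout', while 'x-envoy-original-path' is '/api/classification/logout' and the route decorator is 'classification-helper-ui.preprod-cb.svc.cluster.local:8080/api/classification*', so the path was rewritten before it reached the pod. The doubled slash is what a literal prefix replacement produces. A minimal sketch, assuming (the routing config is not shown in this report) a VirtualService that matches the URI prefix /api/classification and rewrites it to /; `prefix_rewrite` is a hypothetical helper for illustration, not an Istio or Envoy API:

```python
def prefix_rewrite(path: str, match_prefix: str, new_prefix: str) -> str:
    """Literally replace a matched URI prefix, as a prefix rewrite does.

    No slash normalisation is applied, so a leading '/' left in the
    remainder of the path is kept as-is.
    """
    if not path.startswith(match_prefix):
        return path  # the route would not match; nothing to rewrite
    return new_prefix + path[len(match_prefix):]


original = "/api/classification/logout"
# Hypothetical route: prefix "/api/classification" rewritten to "/".
print(prefix_rewrite(original, "/api/classification", "/"))   # //logout
# Same route with a trailing slash on the match prefix.
print(prefix_rewrite(original, "/api/classification/", "/"))  # /logout
```

Envoy's documentation for `prefix_rewrite` warns about this trailing-slash case. The logs alone do not show whether the doubled slash is related to the intermittent 503 errors.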

Expected behavior

All requests are forwarded to the web application.

Steps to reproduce the bug

Run a simple test that invokes several endpoints of the web application under a very small load.
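A minimal sketch of such a low-load test: it sends each request in turn, one at a time with a pause in between, and tallies responses by (path, status) so that sporadic 503s stand out. The base URL, the request list, the pause, and the helper name `probe` are hypothetical placeholders, not the test actually used; the empty-body POST mirrors the logged /logout call (content-length 0, application/x-www-form-urlencoded).

```python
import time
import urllib.error
import urllib.request
from collections import Counter

# Hypothetical placeholders: substitute the real ingress host and endpoints.
BASE_URL = "http://pp-helpers.test.oami.eu"
REQUESTS = [("POST", "/api/classification/logout")]

# Ignore any http_proxy/https_proxy environment settings so requests go straight to the host.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def probe(base_url, requests, rounds=10, pause=0.5, timeout=5.0):
    """Send each (method, path) request `rounds` times, sequentially, and
    count the responses by (path, status code)."""
    counts = Counter()
    for _ in range(rounds):
        for method, path in requests:
            # An empty POST body makes urllib send Content-Length: 0 and the
            # default application/x-www-form-urlencoded content type.
            data = b"" if method == "POST" else None
            req = urllib.request.Request(base_url + path, data=data, method=method)
            try:
                with _OPENER.open(req, timeout=timeout) as resp:
                    status = resp.status
            except urllib.error.HTTPError as err:
                # Error responses (4xx/5xx), including a 503 from Envoy, raise HTTPError.
                status = err.code
                err.close()
            counts[(path, status)] += 1
            time.sleep(pause)  # keep the load very small
    return counts


if __name__ == "__main__":
    print(probe(BASE_URL, REQUESTS))
```

Any `(path, 503)` entry in the printed Counter corresponds to a request that failed in the way described above.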

Version

Istio --> 1.0.1

Kubernetes --> 1.11.3

Installation

istio.yaml: the generated file for installation without authentication enabled

Environment

Bare-metal servers, on-premises, OL7.5, Linux 4.17.5-1.el7.elrepo.x86_64 #1 SMP Sun Jul 8 10:40:01 EDT 2018 x86_64 x86_64 x86_64 GNU/Linux

Logs

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][debug][http] external/envoy/source/common/http/conn_manager_impl.cc:190] [C32] new stream

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=host value=pp-helpers.test.oami.eu

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=cookie value=CLASSIFSESSIONID=MjM2N2MyOWUtODFhOS00ZGVjLTkwNjktOTQ5NzhlZTc2ZDk1

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=content-type value=application/x-www-form-urlencoded

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=content-length value=0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=user-agent value=Apache-HttpClient/4.5.5 (Java/1.8.0_151)

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=x-forwarded-for value=10.133.0.44, 174.16.212.0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=x-forwarded-proto value=http

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=x-envoy-external-address value=174.16.212.0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=x-request-id value=82aeb468-9f91-9b30-9482-2aa3591e1979

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=x-envoy-decorator-operation value=classification-helper-ui.preprod-cb.svc.cluster.local:8080/api/classification*

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=x-b3-traceid value=e4ece8f07a5a7481

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=x-b3-spanid value=e4ece8f07a5a7481

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=x-b3-sampled value=1

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=x-istio-attributes value=Ck8KCnNvdXJjZS51aWQSQRI/a3ViZXJuZXRlczovL2lzdGlvLWluZ3Jlc3NnYXRld2F5LTVkOTk5Yzg3NTctZHY5cnMuaXN0aW8tc3lzdGVtCk4KE2Rlc3RpbmF0aW9uLnNlcnZpY2USNxI1Y2xhc3NpZmljYXRpb24taGVscGVyLXVpLnByZXByb2QtY2Iuc3ZjLmNsdXN0ZXIubG9jYWwKUwoYZGVzdGluYXRpb24uc2VydmljZS5ob3N0EjcSNWNsYXNzaWZpY2F0aW9uLWhlbHBlci11aS5wcmVwcm9kLWNiLnN2Yy5jbHVzdGVyLmxvY2FsClEKF2Rlc3RpbmF0aW9uLnNlcnZpY2UudWlkEjYSNGlzdGlvOi8vcHJlcHJvZC1jYi9zZXJ2aWNlcy9jbGFzc2lmaWNhdGlvbi1oZWxwZXItdWkKLQodZGVzdGluYXRpb24uc2VydmljZS5uYW1lc3BhY2USDBIKcHJlcHJvZC1jYgo2ChhkZXN0aW5hdGlvbi5zZXJ2aWNlLm5hbWUSGhIYY2xhc3NpZmljYXRpb24taGVscGVyLXVp

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:405] [C32] headers complete

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:309] [C32] completed header: key=x-envoy-original-path value=/api/classification/logout

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:426] [C32] message complete

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][debug][filter] src/envoy/http/mixer/filter.cc:60] Called Mixer::Filter : Filter

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][debug][filter] src/envoy/http/mixer/filter.cc:204] Called Mixer::Filter : setDecoderFilterCallbacks

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][debug][http] external/envoy/source/common/http/conn_manager_impl.cc:889] [C32][S16141632285256672176] request end stream

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][debug][http] external/envoy/source/common/http/conn_manager_impl.cc:490] [C32][S16141632285256672176] request headers complete (end_stream=true):

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':authority', 'pp-helpers.test.oami.eu'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':path', '//logout'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':method', 'POST'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'cookie', 'CLASSIFSESSIONID=MjM2N2MyOWUtODFhOS00ZGVjLTkwNjktOTQ5NzhlZTc2ZDk1'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'content-type', 'application/x-www-form-urlencoded'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'content-length', '0'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'user-agent', 'Apache-HttpClient/4.5.5 (Java/1.8.0_151)'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-forwarded-for', '10.133.0.44, 174.16.212.0'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-forwarded-proto', 'http'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-envoy-external-address', '174.16.212.0'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-request-id', '82aeb468-9f91-9b30-9482-2aa3591e1979'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-envoy-decorator-operation', 'classification-helper-ui.preprod-cb.svc.cluster.local:8080/api/classification*'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-b3-traceid', 'e4ece8f07a5a7481'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-b3-spanid', 'e4ece8f07a5a7481'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-b3-sampled', '1'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-istio-attributes', 'Ck8KCnNvdXJjZS51aWQSQRI/a3ViZXJuZXRlczovL2lzdGlvLWluZ3Jlc3NnYXRld2F5LTVkOTk5Yzg3NTctZHY5cnMuaXN0aW8tc3lzdGVtCk4KE2Rlc3RpbmF0aW9uLnNlcnZpY2USNxI1Y2xhc3NpZmljYXRpb24taGVscGVyLXVpLnByZXByb2QtY2Iuc3ZjLmNsdXN0ZXIubG9jYWwKUwoYZGVzdGluYXRpb24uc2VydmljZS5ob3N0EjcSNWNsYXNzaWZpY2F0aW9uLWhlbHBlci11aS5wcmVwcm9kLWNiLnN2Yy5jbHVzdGVyLmxvY2FsClEKF2Rlc3RpbmF0aW9uLnNlcnZpY2UudWlkEjYSNGlzdGlvOi8vcHJlcHJvZC1jYi9zZXJ2aWNlcy9jbGFzc2lmaWNhdGlvbi1oZWxwZXItdWkKLQodZGVzdGluYXRpb24uc2VydmljZS5uYW1lc3BhY2USDBIKcHJlcHJvZC1jYgo2ChhkZXN0aW5hdGlvbi5zZXJ2aWNlLm5hbWUSGhIYY2xhc3NpZmljYXRpb24taGVscGVyLXVp'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-envoy-original-path', '/api/classification/logout'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]:

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][debug][filter] src/envoy/http/mixer/filter.cc:122] Called Mixer::Filter : decodeHeaders

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][debug][filter] ./src/envoy/utils/header_update.h:46] Mixer forward attributes set: ClEKCnNvdXJjZS51aWQSQxJBa3ViZXJuZXRlczovL2NsYXNzaWZpY2F0aW9uLWhlbHBlci11aS03NmY5Y2RjZDRkLXJ6Nm45LnByZXByb2QtY2I=

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][debug][router] external/envoy/source/common/router/router.cc:252] [C0][S14382728320977988265] cluster 'outbound|9091||istio-policy.istio-system.svc.cluster.local' match for URL '/istio.mixer.v1.Mixer/Check'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][debug][router] external/envoy/source/common/router/router.cc:303] [C0][S14382728320977988265] router decoding headers:

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':method', 'POST'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':path', '/istio.mixer.v1.Mixer/Check'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':authority', 'mixer'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':scheme', 'http'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'te', 'trailers'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'grpc-timeout', '5000m'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'content-type', 'application/grpc'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-b3-traceid', 'e4ece8f07a5a7481'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-b3-spanid', 'df547f7d4992bf4e'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-b3-parentspanid', 'e4ece8f07a5a7481'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-b3-sampled', '1'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-istio-attributes', 'ClEKCnNvdXJjZS51aWQSQxJBa3ViZXJuZXRlczovL2NsYXNzaWZpY2F0aW9uLWhlbHBlci11aS03NmY5Y2RjZDRkLXJ6Nm45LnByZXByb2QtY2I='

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-envoy-internal', 'true'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-forwarded-for', '174.16.213.27'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-envoy-expected-rq-timeout-ms', '5000'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]:

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][debug][pool] external/envoy/source/common/http/http2/conn_pool.cc:97] [C236] creating stream

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][debug][router] external/envoy/source/common/router/router.cc:971] [C0][S14382728320977988265] pool ready

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http2] external/envoy/source/common/http/http2/codec_impl.cc:492] [C236] send data: bytes=85

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:326] [C236] writing 85 bytes, end_stream false

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http2] external/envoy/source/common/http/http2/codec_impl.cc:446] [C236] sent frame type=1

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][router] external/envoy/source/common/router/router.cc:871] [C0][S14382728320977988265] proxying 985 bytes

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:326] [C236] writing 994 bytes, end_stream false

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.958][95][trace][http2] external/envoy/source/common/http/http2/codec_impl.cc:446] [C236] sent frame type=0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.959][95][debug][grpc] src/envoy/utils/grpc_transport.cc:46] Sending Check request: attributes {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "inbound"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "x-envoy-original-path"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "/api/classification/logout"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "Apache-HttpClient/4.5.5 (Java/1.8.0_151)"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "e4ece8f07a5a7481"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "//logout"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "x-envoy-external-address"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "174.16.212.0"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "application/x-www-form-urlencoded"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "CLASSIFSESSIONID=MjM2N2MyOWUtODFhOS00ZGVjLTkwNjktOTQ5NzhlZTc2ZDk1"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "pp-helpers.test.oami.eu"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "82aeb468-9f91-9b30-9482-2aa3591e1979"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "10.133.0.44, 174.16.212.0"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "classification-helper-ui.preprod-cb.svc.cluster.local"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "preprod-cb"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "classification-helper-ui"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "kubernetes://classification-helper-ui-76f9cdcd4d-rz6n9.preprod-cb"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "origin.ip"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "kubernetes://istio-ingressgateway-5d999c8757-dv9rs.istio-system"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: words: "istio://preprod-cb/services/classification-helper-ui"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 3

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -19

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 17

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -6

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 18

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -11

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 19

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: 91

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 22

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: 92

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 25

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -4

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 131

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: 92

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 152

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -14

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 154

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -17

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 155

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -15

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 190

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -20

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 191

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -16

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 192

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -15

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 193

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -14

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 197

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -17

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: strings {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 201

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -1

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: int64s {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 151

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: 8080

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: bools {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 177

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: false

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: timestamps {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 24

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: seconds: 1538556462

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: nanos: 958551761

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: bytes {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: -18

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: "\256\020=8"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: bytes {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 150

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: "\000\000\000\000\000\000\000\000\000\000\377\377\256\020\325\033"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: string_maps {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 15

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: -7

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -8

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: -2

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -3

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 31

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -11

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 32

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: 91

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 33

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -6

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 55

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: 134

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 58

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -9

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 59

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -10

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 86

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -4

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 98

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -13

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 100

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: 92

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 102

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -12

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 121

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -5

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 122

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: 135

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: entries {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: key: 123

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: value: -5

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: global_word_count: 203

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: deduplication_id: "231e89c0-6dcb-4ada-a813-375cedb8a64427"

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]:

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.959][95][debug][filter] src/envoy/http/mixer/filter.cc:146] Called Mixer::Filter : decodeHeaders Stop

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.959][95][trace][http] external/envoy/source/common/http/conn_manager_impl.cc:719] [C32][S16141632285256672176] decode headers called: filter=0x115c9040 status=1

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.959][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:362] [C32] parsed 1253 bytes

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.959][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:232] [C32] readDisable: enabled=true disable=true

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.959][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:389] [C236] socket event: 2

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.959][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:457] [C236] write ready

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.959][95][trace][connection] external/envoy/source/common/network/raw_buffer_socket.cc:62] [C236] write returns: 1079

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.959][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:389] [C32] socket event: 2

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.959][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:457] [C32] write ready

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:389] [C236] socket event: 3

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:457] [C236] write ready

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:427] [C236] read ready

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][connection] external/envoy/source/common/network/raw_buffer_socket.cc:21] [C236] read returns: 103

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][connection] external/envoy/source/common/network/raw_buffer_socket.cc:21] [C236] read returns: -1

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][connection] external/envoy/source/common/network/raw_buffer_socket.cc:29] [C236] read error: 11

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][http2] external/envoy/source/common/http/http2/codec_impl.cc:277] [C236] dispatching 103 bytes

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][http2] external/envoy/source/common/http/http2/codec_impl.cc:335] [C236] recv frame type=1

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][debug][router] external/envoy/source/common/router/router.cc:583] [C0][S14382728320977988265] upstream headers complete: end_stream=false

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][debug][http] external/envoy/source/common/http/async_client_impl.cc:93] async http request response headers (end_stream=false):

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':status', '200'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'content-type', 'application/grpc'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-envoy-upstream-service-time', '1'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'date', 'Wed, 03 Oct 2018 08:47:42 GMT'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'server', 'envoy'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-envoy-decorator-operation', 'Check'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]:

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][http2] external/envoy/source/common/http/http2/codec_impl.cc:335] [C236] recv frame type=0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][http] external/envoy/source/common/http/async_client_impl.cc:101] async http request response data (length=45 end_stream=false)

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][http2] external/envoy/source/common/http/http2/codec_impl.cc:335] [C236] recv frame type=1

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][debug][client] external/envoy/source/common/http/codec_client.cc:94] [C236] response complete

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][main] external/envoy/source/common/event/dispatcher_impl.cc:126] item added to deferred deletion list (size=1)

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][debug][pool] external/envoy/source/common/http/http2/conn_pool.cc:189] [C236] destroying stream: 0 remaining

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][debug][http] external/envoy/source/common/http/async_client_impl.cc:108] async http request response trailers:

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'grpc-status', '0'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'grpc-message', ''

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]:

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][debug][grpc] src/envoy/utils/grpc_transport.cc:67] Check response: precondition {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: status {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: valid_duration {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: seconds: 46

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: nanos: 386564935

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: valid_use_count: 10000

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: referenced_attributes {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: attribute_matches {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: name: 155

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: condition: EXACT

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: attribute_matches {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: name: 201

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: condition: EXACT

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: attribute_matches {

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: name: 152

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: condition: EXACT

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: }

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]:

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][debug][filter] src/envoy/http/mixer/filter.cc:211] Called Mixer::Filter : check complete OK

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][http] external/envoy/source/common/http/conn_manager_impl.cc:1296] [C32][S16141632285256672176] continuing filter chain: filter=0x115c9040

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][http] external/envoy/source/common/http/conn_manager_impl.cc:719] [C32][S16141632285256672176] decode headers called: filter=0x12d6f310 status=0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][http] external/envoy/source/common/http/conn_manager_impl.cc:719] [C32][S16141632285256672176] decode headers called: filter=0x12d6fcc0 status=0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][debug][router] external/envoy/source/common/router/router.cc:252] [C32][S16141632285256672176] cluster 'inbound|8080||classification-helper-ui.preprod-cb.svc.cluster.local' match for URL '//logout'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][debug][router] external/envoy/source/common/router/router.cc:303] [C32][S16141632285256672176] router decoding headers:

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':authority', 'pp-helpers.test.oami.eu'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':path', '//logout'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':method', 'POST'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':scheme', 'http'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'cookie', 'CLASSIFSESSIONID=MjM2N2MyOWUtODFhOS00ZGVjLTkwNjktOTQ5NzhlZTc2ZDk1'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'content-type', 'application/x-www-form-urlencoded'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'content-length', '0'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'user-agent', 'Apache-HttpClient/4.5.5 (Java/1.8.0_151)'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-forwarded-for', '10.133.0.44, 174.16.212.0'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-forwarded-proto', 'http'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-envoy-external-address', '174.16.212.0'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-request-id', '82aeb468-9f91-9b30-9482-2aa3591e1979'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-b3-traceid', 'e4ece8f07a5a7481'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-b3-spanid', 'e4ece8f07a5a7481'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-b3-sampled', '1'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'x-envoy-original-path', '/api/classification/logout'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]:

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][debug][pool] external/envoy/source/common/http/http1/conn_pool.cc:89] [C502] using existing connection

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:232] [C502] readDisable: enabled=false disable=false

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.960][95][debug][router] external/envoy/source/common/router/router.cc:971] [C32][S16141632285256672176] pool ready

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:326] [C502] writing 546 bytes, end_stream false

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][http] external/envoy/source/common/http/conn_manager_impl.cc:719] [C32][S16141632285256672176] decode headers called: filter=0x111d3c20 status=1

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][main] external/envoy/source/common/event/dispatcher_impl.cc:126] item added to deferred deletion list (size=2)

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][main] external/envoy/source/common/event/dispatcher_impl.cc:126] item added to deferred deletion list (size=3)

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][debug][http2] external/envoy/source/common/http/http2/codec_impl.cc:501] [C236] stream closed: 0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][main] external/envoy/source/common/event/dispatcher_impl.cc:126] item added to deferred deletion list (size=4)

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][http2] external/envoy/source/common/http/http2/codec_impl.cc:292] [C236] dispatched 103 bytes

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][main] external/envoy/source/common/event/dispatcher_impl.cc:52] clearing deferred deletion list (size=4)

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:389] [C502] socket event: 2

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:457] [C502] write ready

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][connection] external/envoy/source/common/network/raw_buffer_socket.cc:62] [C502] write returns: 546

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:389] [C502] socket event: 3

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:457] [C502] write ready

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:427] [C502] read ready

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][connection] external/envoy/source/common/network/raw_buffer_socket.cc:21] [C502] read returns: 0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][debug][connection] external/envoy/source/common/network/connection_impl.cc:451] [C502] remote close

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][debug][connection] external/envoy/source/common/network/connection_impl.cc:133] [C502] closing socket: 0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:341] [C502] parsing 0 bytes

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][http] external/envoy/source/common/http/http1/codec_impl.cc:362] [C502] parsed 0 bytes

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][debug][client] external/envoy/source/common/http/codec_client.cc:81] [C502] disconnect. resetting 1 pending requests

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][debug][client] external/envoy/source/common/http/codec_client.cc:104] [C502] request reset

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][main] external/envoy/source/common/event/dispatcher_impl.cc:126] item added to deferred deletion list (size=1)

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][debug][router] external/envoy/source/common/router/router.cc:457] [C32][S16141632285256672176] upstream reset

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][debug][filter] src/envoy/http/mixer/filter.cc:191] Called Mixer::Filter : encodeHeaders 2

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][http] external/envoy/source/common/http/conn_manager_impl.cc:997] [C32][S16141632285256672176] encode headers called: filter=0x11d85140 status=0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][http] external/envoy/source/common/http/conn_manager_impl.cc:997] [C32][S16141632285256672176] encode headers called: filter=0x11305dc0 status=0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][debug][http] external/envoy/source/common/http/conn_manager_impl.cc:1083] [C32][S16141632285256672176] encoding headers via codec (end_stream=false):

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: ':status', '503'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'content-length', '57'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'content-type', 'text/plain'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'date', 'Wed, 03 Oct 2018 08:47:42 GMT'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: 'server', 'envoy'

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]:

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:326] [C32] writing 134 bytes, end_stream false

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][http] external/envoy/source/common/http/conn_manager_impl.cc:1157] [C32][S16141632285256672176] encode data called: filter=0x11d85140 status=0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][http] external/envoy/source/common/http/conn_manager_impl.cc:1157] [C32][S16141632285256672176] encode data called: filter=0x11305dc0 status=0

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][http] external/envoy/source/common/http/conn_manager_impl.cc:1170] [C32][S16141632285256672176] encoding data via codec (size=57 end_stream=true)

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:326] [C32] writing 57 bytes, end_stream false

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][debug][filter] src/envoy/http/mixer/filter.cc:257] Called Mixer::Filter : onDestroy state: 2

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][main] external/envoy/source/common/event/dispatcher_impl.cc:126] item added to deferred deletion list (size=2)

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][connection] external/envoy/source/common/network/connection_impl.cc:232] [C32] readDisable: enabled=false disable=false

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][debug][pool] external/envoy/source/common/http/http1/conn_pool.cc:122] [C502] client disconnected

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][main] external/envoy/source/common/event/dispatcher_impl.cc:126] item added to deferred deletion list (size=3)

preprod-cb/classification-helper-ui-76f9cdcd4d-rz6n9[istio-proxy]: [2018-10-03 08:47:42.961][95][trace][main] external/envoy/source/common/event/dispatcher_impl.cc:52] clearing deferred deletion list (size=3)
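The end of this log follows a recognizable pattern. The pool hands out `[C502]` ("using existing connection"), the 546-byte request is written, the next read returns 0, and Envoy logs "remote close", "request reset" and "upstream reset" before answering 503. The helper below is a hypothetical sketch, not an Istio or Envoy tool, for spotting that pattern in other debug logs. It only matches the message texts visible in this log.

```python
import re

# Matches "...] [C502] message" and "...] [C32][S161...] message" in Envoy
# debug/trace lines; group 1 is the connection id, group 2 the message text.
LINE_RE = re.compile(r"\] \[(C\d+)\](?:\[S\d+\])? (.*)$")


def reused_then_closed(lines):
    """Return connection ids that were taken from the pool with
    'using existing connection' and then saw 'remote close' before any
    'response complete' on the same connection."""
    reused = set()
    suspects = []
    for line in lines:
        match = LINE_RE.search(line)
        if not match:
            continue
        conn, message = match.group(1), match.group(2).strip()
        if message == "using existing connection":
            reused.add(conn)
        elif message == "response complete":
            reused.discard(conn)  # request finished normally on this connection
        elif message == "remote close" and conn in reused:
            suspects.append(conn)  # upstream closed while a request was in flight
            reused.discard(conn)
    return suspects


log = [
    "[2018-10-03 08:47:42.960][95][debug][pool] external/envoy/source/common/http/http1/conn_pool.cc:89] [C502] using existing connection",
    "[2018-10-03 08:47:42.961][95][debug][connection] external/envoy/source/common/network/connection_impl.cc:451] [C502] remote close",
]
print(reused_then_closed(log))  # ['C502']
```

The same sequence shows up for `[C6024]` in the 1.0.4 log posted later in this thread.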


Not sure if this is related: https://github.com/istio/istio/issues/8270

Still facing sporadic 503s, possibly related to the HPA autoscaling the control plane and impacting applications. Does this make sense?

Most probably fixed with #9805 for #9480.

It won't be fixed, by the way. Sporadic 503s are a common issue in Istio at the moment, due to the delays in pushing mesh config out to all Envoys.

The fixes you have linked are specific to an issue in 1.0.3, but the OP is on 1.0.1.

One option suggested in the istio-user groups is to disable policy checks:

# disablePolicyChecks disables mixer policy checks.

# Will set the value with same name in istio config map - pilot needs to be restarted to take effect.

disablePolicyChecks: true

Reopening and relating to #7665

I keep running into this issue...

Using Istio 1.0.4 here.

I’m struggling with random 503s in a dead-simple application, even when just fetching the JS needed to display the page. After setting the proxy logging level to debug, I can spot this:

[2019-02-19 09:52:50.754][35][debug][http] external/envoy/source/common/http/conn_manager_impl.cc:190] [C5963] new stream

[2019-02-19 09:52:50.754][35][debug][filter] src/envoy/http/mixer/filter.cc:60] Called Mixer::Filter : Filter

[2019-02-19 09:52:50.754][35][debug][filter] src/envoy/http/mixer/filter.cc:204] Called Mixer::Filter : setDecoderFilterCallbacks

[2019-02-19 09:52:50.754][35][debug][http] external/envoy/source/common/http/conn_manager_impl.cc:889] [C5963][S17073327596826725699] request end stream

[2019-02-19 09:52:50.754][35][debug][http] external/envoy/source/common/http/conn_manager_impl.cc:490] [C5963][S17073327596826725699] request headers complete (end_stream=true):

':authority', 'pp-helpers.test.oami.eu'

':path', '/client.min.js?version=1.7.0'

':method', 'GET'

'user-agent', 'Apache-HttpClient/4.5.6 (Java/1.8.0_151)'

'x-forwarded-for', '10.133.0.44, 10.136.106.52'

'x-forwarded-proto', 'http'

'x-envoy-external-address', '10.136.106.52'

'x-request-id', '817c0d38-f489-9023-9f46-706f504357d5'

'x-envoy-decorator-operation', 'helpers-frontend.preprod-cb.svc.cluster.local:80/*'

'x-b3-traceid', 'b421de1126053c6d'

'x-b3-spanid', 'b421de1126053c6d'

'x-b3-sampled', '1'

'x-istio-attributes', 'Ck8KCnNvdXJjZS51aWQSQRI/a3ViZXJuZXRlczovL2lzdGlvLWluZ3Jlc3NnYXRld2F5LTY5OTZkNTY2ZDQtYmNtamYuaXN0aW8tc3lzdGVtCkYKE2Rlc3RpbmF0aW9uLnNlcnZpY2USLxItaGVscGVycy1mcm9udGVuZC5wcmVwcm9kLWNiLnN2Yy5jbHVzdGVyLmxvY2FsCksKGGRlc3RpbmF0aW9uLnNlcnZpY2UuaG9zdBIvEi1oZWxwZXJzLWZyb250ZW5kLnByZXByb2QtY2Iuc3ZjLmNsdXN0ZXIubG9jYWwKSQoXZGVzdGluYXRpb24uc2VydmljZS51aWQSLhIsaXN0aW86Ly9wcmVwcm9kLWNiL3NlcnZpY2VzL2hlbHBlcnMtZnJvbnRlbmQKLQodZGVzdGluYXRpb24uc2VydmljZS5uYW1lc3BhY2USDBIKcHJlcHJvZC1jYgouChhkZXN0aW5hdGlvbi5zZXJ2aWNlLm5hbWUSEhIQaGVscGVycy1mcm9udGVuZA=='

'content-length', '0'

[2019-02-19 09:52:50.754][35][debug][filter] src/envoy/http/mixer/filter.cc:122] Called Mixer::Filter : decodeHeaders

[2019-02-19 09:52:50.754][35][debug][filter] src/envoy/http/mixer/filter.cc:211] Called Mixer::Filter : check complete OK

[2019-02-19 09:52:50.754][35][debug][router] external/envoy/source/common/router/router.cc:252] [C5963][S17073327596826725699] cluster 'inbound|80||helpers-frontend.preprod-cb.svc.cluster.local' match for URL '/client.min.js?version=1.7.0'

[2019-02-19 09:52:50.754][35][debug][router] external/envoy/source/common/router/router.cc:303] [C5963][S17073327596826725699] router decoding headers:

':authority', 'pp-helpers.test.oami.eu'

':path', '/client.min.js?version=1.7.0'

':method', 'GET'

':scheme', 'http'

'user-agent', 'Apache-HttpClient/4.5.6 (Java/1.8.0_151)'

'x-forwarded-for', '10.133.0.44, 10.136.106.52'

'x-forwarded-proto', 'http'

'x-envoy-external-address', '10.136.106.52'

'x-request-id', '817c0d38-f489-9023-9f46-706f504357d5'

'x-b3-traceid', 'b421de1126053c6d'

'x-b3-spanid', 'b421de1126053c6d'

'x-b3-sampled', '1'

'content-length', '0'

[2019-02-19 09:52:50.754][35][debug][pool] external/envoy/source/common/http/http1/conn_pool.cc:89] [C6024] using existing connection

[2019-02-19 09:52:50.754][35][debug][router] external/envoy/source/common/router/router.cc:971] [C5963][S17073327596826725699] pool ready

[2019-02-19 09:52:50.755][35][debug][connection] external/envoy/source/common/network/connection_impl.cc:451] [C6024] remote close

[2019-02-19 09:52:50.755][35][debug][connection] external/envoy/source/common/network/connection_impl.cc:133] [C6024] closing socket: 0

[2019-02-19 09:52:50.755][35][debug][client] external/envoy/source/common/http/codec_client.cc:81] [C6024] disconnect. resetting 1 pending requests

[2019-02-19 09:52:50.755][35][debug][client] external/envoy/source/common/http/codec_client.cc:104] [C6024] request reset

[2019-02-19 09:52:50.755][35][debug][router] external/envoy/source/common/router/router.cc:457] [C5963][S17073327596826725699] upstream reset

[2019-02-19 09:52:50.755][35][debug][filter] src/envoy/http/mixer/filter.cc:191] Called Mixer::Filter : encodeHeaders 2

[2019-02-19 09:52:50.755][35][debug][http] external/envoy/source/common/http/conn_manager_impl.cc:1083] [C5963][S17073327596826725699] encoding headers via codec (end_stream=false):

':status', '503'

'content-length', '57'

'content-type', 'text/plain'

'date', 'Tue, 19 Feb 2019 09:52:50 GMT'

'server', 'envoy'

[2019-02-19 09:52:50.755][35][debug][filter] src/envoy/http/mixer/filter.cc:257] Called Mixer::Filter : onDestroy state: 2

[2019-02-19 09:52:50.755][35][debug][pool] external/envoy/source/common/http/http1/conn_pool.cc:122] [C6024] client disconnected

[2019-02-19 09:52:50.755][35][debug][filter] src/envoy/http/mixer/filter.cc:273] Called Mixer::Filter : log

In the target application, I don’t see any 503s whatsoever; everything is always 200:

“GET /client.min.js?version=1.7.0 HTTP/1.1” 200 8774206

Can anyone help me understand what’s going on? If I remove Istio and route through the NGINX Ingress Controller instead, everything works perfectly.

Thanks!

I see the same issue with Istio service mesh sidecars, the Istio IngressGateway, and the Ambassador API Gateway. I believe the root cause is a race condition between the TCP keepalive setting and the stream idle timeout. Istio pools HTTP connections under the covers. If a connection is idle for 5 minutes, the TCP keepalive should fire to keep the connection alive. But the stream idle timeout is also 5 minutes, so a race condition exists.
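The race can be reduced to a timeline for a single pooled keep-alive connection. The sketch below is a deliberately simplified model of that general pattern: a connection is reused just as the other side closes it. It is not Envoy's actual logic, and the timeout and delay values are illustrative assumptions, not Istio defaults.

```python
def pooled_request_outcome(idle_s: float, upstream_idle_timeout_s: float,
                           close_notice_delay_s: float) -> str:
    """Classify a request sent on a pooled connection that sat idle for idle_s.

    upstream_idle_timeout_s: the upstream closes the connection after this much
        idle time (illustrative value).
    close_notice_delay_s: how long it takes the proxy to observe that close
        (FIN still in flight or not yet processed).
    """
    if idle_s < upstream_idle_timeout_s:
        return "reused"          # still open on both ends: request succeeds
    if idle_s < upstream_idle_timeout_s + close_notice_delay_s:
        return "reset"           # written into a connection the upstream just closed
    return "new_connection"      # close already noticed: pool opens a fresh connection


for idle in (30.0, 60.002, 61.0):
    print(idle, pooled_request_outcome(idle, 60.0, 0.005))
```

Only requests that land in the narrow window between the close and the moment the proxy notices it fail. That fits rare, seemingly random 503s under low load. In this model, keeping the client-side idle timeout shorter than the upstream's means the reset window is never reached.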

I've commented more about it here:

https://github.com/istio/istio/issues/9906

We were able to correct the issue by setting the spec->http->retries->attempts field on the VirtualService manifest. In Istio v1.1, they've introduced the retryOn configuration setting to Envoy, so you can retry only on 5xx errors. They've also introduced the ability to set the stream idle timeout. I haven't tested this hypothesis against the snapshot builds of 1.1; we'll resume testing when 1.1 goes GA.
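As a sketch of the retry workaround, the helper below builds a VirtualService manifest with `spec.http[].retries` set and prints it as JSON, which `kubectl apply -f -` accepts. The resource names `httpbin` and `httpbin-gateway` are hypothetical, and the `perTryTimeout` of `2s` is only an illustrative value. The host `httpbin.org` and the destination `httpbin.default.svc.cluster.local:8000` are taken from the load-test logs in this thread. `retryOn` is only available from Istio 1.1. In Envoy's retry policies, `5xx` also covers an upstream that disconnects or resets without responding, which is what a `UC` 503 is.

```python
import json


def virtual_service_with_retries(name, hosts, gateways, dest_host, dest_port,
                                 attempts=3, per_try_timeout="2s", retry_on=None):
    """Build a networking.istio.io/v1alpha3 VirtualService dict with a retry policy."""
    retries = {"attempts": attempts, "perTryTimeout": per_try_timeout}
    if retry_on is not None:
        retries["retryOn"] = retry_on  # Istio 1.1+ only
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "VirtualService",
        "metadata": {"name": name},
        "spec": {
            "hosts": hosts,
            "gateways": gateways,
            "http": [{
                "route": [{"destination": {"host": dest_host,
                                           "port": {"number": dest_port}}}],
                "retries": retries,
            }],
        },
    }


if __name__ == "__main__":
    vs = virtual_service_with_retries(
        name="httpbin",                 # hypothetical resource name
        hosts=["httpbin.org"],
        gateways=["httpbin-gateway"],   # hypothetical Gateway name
        dest_host="httpbin.default.svc.cluster.local",
        dest_port=8000,
        retry_on="5xx",
    )
    print(json.dumps(vs, indent=2))     # e.g. pipe into: kubectl apply -f -
```

Retries re-send the request, so they deserve care on non-idempotent calls such as the `POST //logout` in the original report.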

After some research, I think I spotted the issue for my use case: it's related to the HAProxy I use as the load balancer in front of the Istio Ingress Gateway. When I bypass HAProxy and use a NodePort directly, it works perfectly.

I presume there is some misalignment in the low-level TCP configuration between HAProxy and the Envoy running inside the Ingress Gateway (or something similar), but I still need to figure out what's going on.

Any help in this regard would be highly appreciated.

This is something the OpenShift folks might have some thoughts on as OpenShift by default uses an HAProxy ingress. @knrc @jwendell ?

@christian-posta I'm not aware of this impacting us as of yet but I'll keep an eye on it, the race mentioned above does seem to be a likely suspect. @emedina if you have a reproducer then I can take a look to see how it behaves on our system.

@knrc @christian-posta I'm going through testing to figure out the right configuration, but as an initial teaser, changing `option httpclose` to `option http-server-close` seems to lower the number of 503s, although they still appear.

I also did some basic testing on Google Cloud with httpbin and fortio, running a minimal load test (10 qps using 10 threads for 10m) against the /html and /json endpoints, and I get 503 errors:

Code 200 : 5998 (100.0 %)

Code 503 : 2 (0.0 %)
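fortio prints these percentages with one decimal, so a handful of failures shows up as "0.0 %". The plain arithmetic below, using the counts from this run, makes the actual rate visible.

```python
def error_rate_percent(ok_count: int, error_count: int) -> float:
    """Share of failed calls, in percent, of all calls made."""
    return 100.0 * error_count / (ok_count + error_count)


ok, errors = 5998, 2
rate = error_rate_percent(ok, errors)
print(f"{rate:.1f} %")                        # one decimal, as fortio shows it: 0.0 %
print(f"{rate:.3f} %")                        # 0.033 %
print(f"1 in {(ok + errors) // errors} calls")  # 1 in 3000 calls
```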

I know that Google Cloud may not be using the latest Istio, but I'm wondering how Google can provide this functionality when a basic test fails so easily.

And I presume this issue is still there, because I keep getting it even with the latest 1.1-rc.

More feedback...

I tried 1.1.0-rc.0 in a clean lab environment running v1.13.2, and it still throws sporadic 503s with a simple load test using fortio against two httpbin endpoints:

kubectl exec -ti fortio-deploy-5588f84b45-5xx5v -- fortio load -qps 10 -t 600s -c 10 -httpbufferkb 8569 -H "Host: httpbin.org" http://10.138.132.8:32500/json

kubectl exec -ti fortio-deploy-5588f84b45-5xx5v -- fortio load -qps 10 -t 600s -c 10 -httpbufferkb 8569 -H "Host: httpbin.org" http://10.138.132.8:32500/html

As you can see, I'm using the NodePort to call the Ingress Gateway, so there is no external impact from HAProxy (just in case). Additionally, this is definitely a very low-demand load test running for 10m. However, there are several 503 errors:
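For scale, consider `-qps 10 -t 600s -c 10`. This sketch assumes fortio treats `-qps` as the total target rate shared by all `-c` connections. The assumption matches the 6000 calls (5998 + 2) reported for the 10m run earlier in this thread. Under it, each connection sends only about one request per second.

```python
def expected_calls(qps: float, duration_s: float, connections: int):
    """Total calls and calls per connection for a fortio run, assuming -qps is
    the aggregate target rate split evenly across the -c connections."""
    total = round(qps * duration_s)
    return total, total // connections


duration = 600
total, per_conn = expected_calls(qps=10, duration_s=duration, connections=10)
print(f"{total} calls, {per_conn} per connection, {per_conn / duration:.1f} calls/s each")
```

The same formula gives 18000 calls for the 30m (`-t 1800s`) runs, which matches the totals reported for those runs in this thread.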

From the endpoint /html:

08:58:38 W http_client.go:679> Parsed non ok code 503 (HTTP/1.1 503)

08:58:42 W http_client.go:679> Parsed non ok code 503 (HTTP/1.1 503)

08:58:45 W http_client.go:679> Parsed non ok code 503 (HTTP/1.1 503)

09:06:01 W http_client.go:679> Parsed non ok code 503 (HTTP/1.1 503)

From the endpoint /json:

09:05:47 W http_client.go:679> Parsed non ok code 503 (HTTP/1.1 503)

09:05:56 W http_client.go:679> Parsed non ok code 503 (HTTP/1.1 503)

09:06:00 W http_client.go:679> Parsed non ok code 503 (HTTP/1.1 503)

The logs from the Ingress Gateway are:

# kail -l app=istio-ingressgateway --since 10m | grep ' 503 '

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T08:58:38.611Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 2 - "174.16.249.64" "fortio.org/fortio-1.3.1" "ad930b2f-b08c-99bf-93b3-e93d4d3aa475" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:33416 -

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T08:58:42.641Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 19 - "174.16.249.64" "fortio.org/fortio-1.3.1" "447f4df1-99fe-9efa-9302-f2084120baaa" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:33421 -

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T08:58:45.874Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 6 - "174.16.249.64" "fortio.org/fortio-1.3.1" "13c3e002-6f69-9575-a642-3e130cc1aeea" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:33415 -

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T09:05:47.855Z] "GET /json HTTP/1.1" 503 UC "-" 0 57 15 - "174.16.249.64" "fortio.org/fortio-1.3.1" "96cb8b74-7a29-9829-8bde-30d58b70d353" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:33554 -

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T09:05:55.955Z] "GET /json HTTP/1.1" 503 UC "-" 0 57 26 - "174.16.249.64" "fortio.org/fortio-1.3.1" "dce86649-0f9e-946f-b15a-4e48300f45b8" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:33561 -

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T09:05:59.924Z] "GET /json HTTP/1.1" 503 UC "-" 0 57 146 - "174.16.249.64" "fortio.org/fortio-1.3.1" "fb921a6f-2f04-993b-9e45-25349c3962f1" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:33550 -

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T09:06:01.426Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 0 - "174.16.249.64" "fortio.org/fortio-1.3.1" "e821b8b9-4273-9814-bb3a-e13d6749a35d" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:33419 -
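In these access-log lines, the field right after the status code holds Envoy's response flags. `UC` means upstream connection termination: the upstream connection closed and Envoy returned a 503 itself. Every 503 above carries that flag. Below is a minimal sketch for tallying status and flags over a larger log. It assumes exactly the field layout shown above and skips the quoted field after the flags without interpreting it.

```python
import re
from collections import Counter

# Positional pattern for the access-log lines above; non-matching lines are ignored.
ACCESS_RE = re.compile(
    r'\[(?P<start>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]+" '
    r'(?P<status>\d{3}) (?P<flags>\S+) "[^"]*" '
    r'(?P<rx>\d+) (?P<tx>\d+) (?P<duration>\d+) \S+ '
    r'"[^"]*" "[^"]*" "[^"]*" "[^"]*" "(?P<upstream>[^"]*)"'
)


def count_by_status_and_flags(lines):
    """Count (status, response_flags) pairs across access-log lines."""
    counts = Counter()
    for line in lines:
        match = ACCESS_RE.search(line)
        if match:
            counts[(int(match["status"]), match["flags"])] += 1
    return counts


sample = [
    'istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T08:58:38.611Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 2 - "174.16.249.64" "fortio.org/fortio-1.3.1" "ad930b2f-b08c-99bf-93b3-e93d4d3aa475" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:33416 -',
]
print(count_by_status_and_flags(sample))  # Counter({(503, 'UC'): 1})
```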

No apparent issues in httpbin itself:

# kubectl logs -f httpbin-54f5bb4957-sgt4v

[2019-02-20 16:32:08 +0000] [1] [INFO] Starting gunicorn 19.9.0

[2019-02-20 16:32:08 +0000] [1] [INFO] Listening at: http://0.0.0.0:80 (1)

[2019-02-20 16:32:08 +0000] [1] [INFO] Using worker: gevent

[2019-02-20 16:32:08 +0000] [10] [INFO] Booting worker with pid: 10

And eventually the fortio load test concludes reporting the issues:

For the endpoint `/json`:

Code 200 : 5997 (100.0 %)

Code 503 : 3 (0.1 %)

For the endpoint `/html`:

Code 200 : 5996 (99.9 %)

Code 503 : 4 (0.1 %)

This scenario can be reproduced consistently and always throws 503 errors.

I also increased the number of replicas to 3 for the httpbin service and 2 for the Ingress Gateway to rule out capacity issues, but I'm still getting 503 errors on each load test.

And another 30m run showing, again, 503 errors:

kubectl exec -ti fortio-deploy-5588f84b45-5xx5v -- fortio load -qps 10 -t 1800s -c 10 -httpbufferkb 8569 -H "Host: httpbin.org" http://10.138.132.8:32500/json

Code 200 : 17995 (100.0 %)

Code 503 : 5 (0.0 %)

kubectl exec -ti fortio-deploy-5588f84b45-5xx5v -- fortio load -qps 10 -t 1800s -c 10 -httpbufferkb 8569 -H "Host: httpbin.org" http://10.138.132.8:32500/html

Code 200 : 17993 (100.0 %)

Code 503 : 7 (0.0 %)

# kail -l app=istio-ingressgateway | grep ' 503 '

istio-system/istio-ingressgateway-5979b99885-ljzdl[istio-proxy]: [2019-02-24T09:44:10.421Z] "GET /json HTTP/1.1" 503 UC "-" 0 57 0 - "174.16.249.64" "fortio.org/fortio-1.3.1" "b47f435f-063d-9e38-aff3-ac1202ea58d1" "httpbin.org" "174.16.71.77:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.111.24:80 174.16.249.64:39987 -

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T09:44:46.453Z] "GET /json HTTP/1.1" 503 UC "-" 0 57 2 - "174.16.249.64" "fortio.org/fortio-1.3.1" "537415ba-d9e4-9eae-85e8-6b24a632f924" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:39990 -

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T09:44:48.216Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 0 - "174.16.249.64" "fortio.org/fortio-1.3.1" "a5b4e97c-87dc-9232-8c77-53ea0be5d685" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:40045 -

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T09:44:49.215Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 2 - "174.16.249.64" "fortio.org/fortio-1.3.1" "3181b1bc-9776-94db-96d0-44074f0b8b3e" "httpbin.org" "174.16.111.12:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:40046 -

istio-system/istio-ingressgateway-5979b99885-ljzdl[istio-proxy]: [2019-02-24T09:45:21.238Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 1 - "174.16.249.64" "fortio.org/fortio-1.3.1" "c5996f8a-3b5e-95ae-9825-f4530254ed95" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.111.24:80 174.16.249.64:41439 -

istio-system/istio-ingressgateway-5979b99885-ljzdl[istio-proxy]: [2019-02-24T09:45:26.469Z] "GET /json HTTP/1.1" 503 UC "-" 0 57 0 - "174.16.249.64" "fortio.org/fortio-1.3.1" "5c2fa8aa-ab1a-90ed-9262-f61e75dfe1d6" "httpbin.org" "174.16.111.12:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.111.24:80 174.16.249.64:41364 -

istio-system/istio-ingressgateway-5979b99885-ljzdl[istio-proxy]: [2019-02-24T09:45:50.250Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 6 - "174.16.249.64" "fortio.org/fortio-1.3.1" "723d6e0c-c8bd-98ab-b947-9d39bc0af8f9" "httpbin.org" "174.16.71.77:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.111.24:80 174.16.249.64:41458 -

istio-system/istio-ingressgateway-5979b99885-ljzdl[istio-proxy]: [2019-02-24T09:46:44.507Z] "GET /json HTTP/1.1" 503 UC "-" 0 57 0 - "174.16.249.64" "fortio.org/fortio-1.3.1" "2fdb1ce0-6eff-9fd6-bec2-7a258fb3b26a" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.111.24:80 174.16.249.64:41487 -

istio-system/istio-ingressgateway-5979b99885-ljzdl[istio-proxy]: [2019-02-24T10:05:55.152Z] "GET /json HTTP/1.1" 503 UC "-" 0 57 3 - "174.16.249.64" "fortio.org/fortio-1.3.1" "417990a5-de4c-943a-874c-eefc6ee38927" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.111.24:80 174.16.249.64:57217 -

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T10:05:52.933Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 115 - "174.16.249.64" "fortio.org/fortio-1.3.1" "32045583-0442-94fd-9bf0-2148c9dc0f18" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:57243 -

istio-system/istio-ingressgateway-5979b99885-ljzdl[istio-proxy]: [2019-02-24T10:06:38.945Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 1 - "174.16.249.64" "fortio.org/fortio-1.3.1" "dbde8e93-5d86-9d5a-b041-c80ecc27119e" "httpbin.org" "174.16.249.69:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.111.24:80 174.16.249.64:57274 -

istio-system/istio-ingressgateway-5979b99885-rxd66[istio-proxy]: [2019-02-24T10:09:17.031Z] "GET /html HTTP/1.1" 503 UC "-" 0 57 2 - "174.16.249.64" "fortio.org/fortio-1.3.1" "f1cc53c4-5918-93c8-8334-cea08c51c423" "httpbin.org" "174.16.71.77:80" outbound|8000||httpbin.default.svc.cluster.local - 174.16.71.101:80 174.16.249.64:57198 -

For now, a valid workaround is to use the retry mechanism configured through HTTPRetry in the VirtualService and, once 1.1 is released, its retryOn setting with 5xx.

Testing this with 1.1.0-rc.0 and just a single retry (attempts: 1) results in no 503s at all:

# kubectl exec -ti fortio-deploy-5588f84b45-5xx5v -- fortio load -qps 10 -t 1800s -c 10 -httpbufferkb 8569 -H "Host: httpbin.org" http://10.138.132.8:32500/json

Code 200 : 18000 (100.0 %)

# kubectl exec -ti fortio-deploy-5588f84b45-5xx5v -- fortio load -qps 10 -t 1800s -c 10 -httpbufferkb 8569 -H "Host: httpbin.org" http://10.138.132.8:32500/html

Code 200 : 18000 (100.0 %)
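To put rough numbers on what a single retry buys, here is a toy Python model. It is not Envoy's implementation. It assumes that `attempts` counts retries rather than total tries, which is how Istio's HTTPRetry documents the field. It also assumes each try fails independently with probability `p_fail`. That second assumption is a simplification: in the real case the retry goes out on a fresh connection and is much less likely to fail. `send_with_retries` and `count_503s` are hypothetical helpers.

```python
"""Toy model of the retry workaround (not Envoy's implementation).

Assumptions: `attempts` counts retries (attempts=1 means up to two tries
in total), and every try fails independently with probability p_fail.
"""
import random


def send_with_retries(send, attempts):
    """Call send() once, then retry up to `attempts` times while it returns 503."""
    status = send()
    for _ in range(attempts):
        if status != 503:
            break
        status = send()
    return status


def count_503s(n_requests, p_fail, attempts, seed=0):
    """Count requests that still end in 503 after retries (hypothetical helper)."""
    rng = random.Random(seed)

    def send():
        # Simulated upstream: returns a 503 (e.g. a "503 UC") with probability p_fail.
        return 503 if rng.random() < p_fail else 200

    return sum(send_with_retries(send, attempts) == 503 for _ in range(n_requests))
```

With an illustrative `p_fail=0.01` over the 18000 requests of one fortio run, the expected number of 503s is 18000 × 0.01 = 180 without a retry. With one retry it falls to 18000 × 0.01² ≈ 1.8. Under this model, that drop is consistent with the 100% success shown above. It does not, however, identify the root cause.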

Any reason you're on the first RC of 1.1? There were a lot of issues in the early releases, including 1.0 regressions. Not sure whether any are specific to this issue, though.


@jaygorrell, please take a look at the issue from the beginning, not just my last comment. You will see that this has been happening since 1.0.1.

No, I haven't tried each and every 1.1 snapshot, but 1.0.1, 1.0.4, 1.0.5, 1.0.6 and the latest release, 1.1.0-rc.0, all show the same issue.

My apologies, I didn't realize an RC was out and read that as snapshot.0. Sorry about that.

@emedina This is exactly the behavior we see. We have retries turned on as a workaround, but it's clearly not a long-term solution.

the OpenShift folks might have some thoughts on as OpenShift by

@christian-posta In my testing, I experience the problem with Istio Ingressgateway, Istio service mesh sidecars, and Ambassador API Gateway (also built atop Envoy). I do not see this error at all when using an Nginx Ingress Gateway.

Curious whether the Mixer policy deployment is enabled in your environment? Note: Mixer will be part of your request flow even if you are not using any Mixer policy. We do have a PR in flight to disable Mixer policy by default.

@dankirkpatrick I am not sure this is the same as the TCP keep-alive issue. See my comment here: https://github.com/istio/istio/issues/9906#issuecomment-467992199

Long story short, for TCP keep-alive situations before 1.1, retries seem to make sense.

However, the issue that @emedina demonstrates seems different. The connection is kept busy by a constant stream of 10 rps. The only scenario in which the OP would hit the same TCP keep-alive issue is if the fortio client ended up using persistent HTTP connections to one of the replicas, but that seems unlikely.

FYI, I'm trying to reproduce this locally to investigate.

At first glance, this looks like a good use case for the new retry work: https://github.com/istio/istio/pull/10566

@knrc has a PR out for this and should be added to the assignees once he's inducted into the GitHub org.

@knrc's PR https://github.com/istio/istio/pull/12188 for 1.1

For anyone who has this issue for 1.0, please look at @Stono's tips at https://github.com/istio/istio/issues/12183

@duderino I'm not sure this should be assigned to me ... I'm OOO next week.

We shouldn't need retries when no pod is going down. Also, retries wouldn't work for POST.

Retries do work for POST; that's what 100-continue is for. They don't work after the body has been sent, but in this case (the TCP connection already closed) they work very well. All browsers do this.

My test

kubectl -n fortio-control exec -it cli-fortio-7675dfcf59-ztdmn -- fortio load -qps 10 -t 600s -c 10 -httpbufferkb 8569 http://httpbin.httpbin.svc.cluster.local:8000/json

22:02:23 W http_client.go:679> Parsed non ok code 503 (HTTP/1.1 503)

22:04:18 W http_client.go:679> Parsed non ok code 503 (HTTP/1.1 503)

Note the two timestamps (22:02:23 and 22:04:18).

The attached tcpdump was captured with:

# remember to start injector with global.proxy.enableCoreDump=true

tcpdump -n -s0 -i lo -w /tmp/tcpdump.out port 80

There are two RSTs around that time, and if you follow the streams you can see that both TCP connections end with Envoy sending the request.

All other connections are terminated with normal FIN/FIN.

I think this is 'fixed' for 1.1, but we didn't reach consensus, so I'll try to capture the objections and alternatives for the record.

The problem: Envoy, acting as a client, borrows from its connection pool a connection that the peer has already closed. Since this is a routine occurrence, we don't want to lose the request and return a 503 to the downstream client.
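Here is a minimal Python sketch of that race. It is not Envoy code, and it rests on two assumptions. First, the upstream closes a keep-alive connection after a fixed idle period. Second, the client only learns about the close when it writes the next request onto that connection. This collapses the real timing window between the peer's FIN and the client's reuse into a simple rule. `Pool`, `Conn` and `retry_on_reset` are illustrative names, not Envoy APIs. The `retry_on_reset` branch mimics Approach 2 below by retrying on a fresh connection.

```python
"""Toy model of the stale pooled-connection race (not Envoy code).

Assumptions: the upstream closes a keep-alive connection once it has been
idle for `upstream_idle_close` seconds, and the client only notices when it
writes the next request onto that connection.
"""
from dataclasses import dataclass


@dataclass
class Conn:
    last_used: float
    closed_by_peer: bool = False


class Pool:
    def __init__(self, upstream_idle_close):
        self.upstream_idle_close = upstream_idle_close
        self.idle = []  # connections returned to the pool after each request

    def _peer_closes_idle(self, now):
        # The peer silently closes connections that sat idle for too long.
        for conn in self.idle:
            if now - conn.last_used >= self.upstream_idle_close:
                conn.closed_by_peer = True

    def request(self, now, retry_on_reset=False):
        self._peer_closes_idle(now)
        conn = self.idle.pop() if self.idle else Conn(last_used=now)
        if conn.closed_by_peer:
            if not retry_on_reset:
                return 503  # surfaces downstream as "503 UC"
            conn = Conn(last_used=now)  # Approach 2: retry on a fresh connection
        conn.last_used = now
        self.idle.append(conn)  # return the connection to the pool
        return 200
```

Consider `Pool(upstream_idle_close=60)` with requests at t=0 and t=100. The second request lands on a connection the peer has already closed, so it returns 503. With `retry_on_reset=True`, the same request comes back as 200.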

Approach 1: Envoy should notice the reset/close when sending the request to the upstream and automatically borrow a new connection from the pool or initiate a new connection. AFAIK Envoy does not support this today, so we can't get this into Istio 1.1. @PiotrSikora @htuch @jplevyak @alyssawilk

Approach 2: @knrc's https://github.com/istio/istio/pull/12188 which was built upon work done by @nmittler: Configure Envoy to retry these requests. This covers the connection pool race condition, but is imperfect because it may retry additional cases we don't want to retry. This is the current solution going into 1.1.

Approach 3: @rshriram's abandoned https://github.com/istio/istio/pull/12227, which set max requests per connection to 1. This certainly avoids the race condition, but it also completely disables connection pooling. Several of us (@costinm @knrc @mandarjog @duderino) were not comfortable doing this without substantial performance testing, and that testing would delay 1.1 too much.

Approach 4: We could instead set an idle timeout for connections using the mechanism introduced in https://github.com/envoyproxy/envoy/pull/2714. The idea is to close idle connections and remove them from the connection pool before the peer is likely to close them. The peer can still close them for other reasons (e.g., overload), but in theory this would reduce the number of 503s without potentially retrying requests we don't want retried. My concern with this one is that it's not clear to me that the idle timeout applies only to sockets with no active requests on them. If there were active requests with infrequent activity (e.g., long polls), we might lose those too.
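Approach 4 can be sketched with a single pooled connection and a list of request times. The function below is hypothetical and assumes both sides close connections purely on idle time. It counts how many requests would be written onto a connection the peer has already closed. If the client idle timeout is shorter than the upstream's, the client always evicts the connection first. The sketch treats only the gap between requests as idle time, so it does not capture the long-poll concern above.

```python
"""Sketch of Approach 4 (client-side idle timeout), single pooled connection.

Assumption: both the client and the upstream close connections purely on
idle time; all names and numbers are illustrative, not Envoy settings.
"""


def count_stale_reuses(request_times, upstream_idle_close, client_idle_timeout=None):
    """Count requests that land on a connection the peer already closed."""
    last_used = None
    stale = 0
    for t in request_times:
        if last_used is not None:
            idle = t - last_used
            if client_idle_timeout is not None and idle >= client_idle_timeout:
                pass  # client evicted the connection first and opens a fresh one
            elif idle >= upstream_idle_close:
                stale += 1  # reused a peer-closed connection -> "503 UC"
        last_used = t  # either the reused or the freshly opened connection
    return stale
```

With requests at t = 0, 10, 100, 105, 200 and an upstream idle close of 60 s, the gaps of 90 s and 95 s each hit a stale connection. A client idle timeout of 55 s evicts the connection before either of those requests.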

So Approach 2 IMHO is the least risky option for 1.1, but it's arguable.

@knrc reports he still sees the occasional 503 (about 7 in one hour at 10 rps, i.e. 7 out of roughly 36,000 requests, which means a probability of about 0.0002 per request) and is continuing to investigate. Demoting to P1 to cover the remaining work.
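The per-request probability quoted above is plain arithmetic. Here it is written out; the per-day figure assumes a steady 10 rps around the clock.

```python
# Numbers from the report above: 7 errors in one hour at 10 requests/s.
errors_per_hour = 7
requests_per_hour = 10 * 3600  # 36,000 requests

p_error = errors_per_hour / requests_per_hour  # about 1.94e-4, i.e. ~0.0002
errors_per_day = errors_per_hour * 24  # 168 per day, assuming a steady 10 rps
```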

Belatedly agreed: Approach 1 still has inherent races. Approach 2 does carry some risk, especially for non-idempotent requests, but is the best option for avoiding 503s. Approach 3 has historically been super expensive for the proxy I've run, so I agree with the folks who had performance concerns. I'd say Approach 4 has a higher risk of not getting it right the first time, but may be safer if you need to avoid spurious retries.

Update for today's call

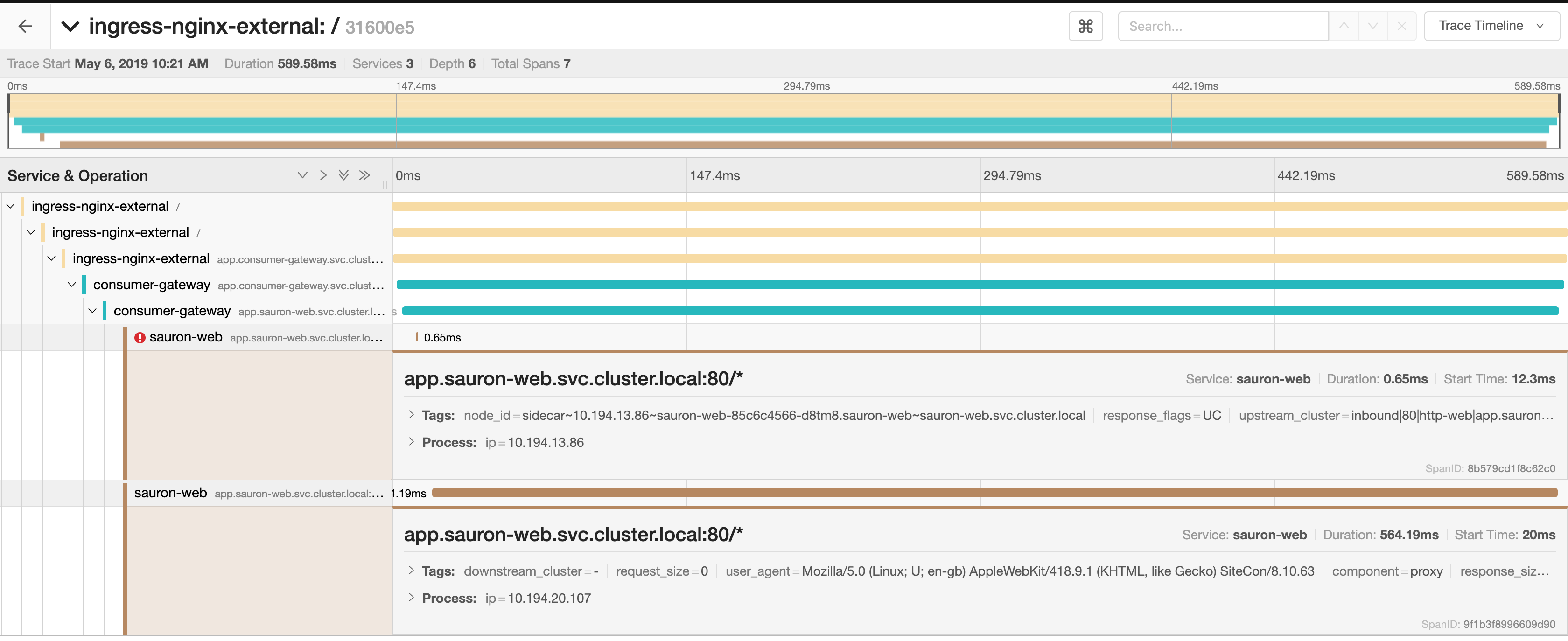

I have traces from both the ingressgateway and httpbin sides. The error happens when the sidecar returns RST/ACK in response to the request (a GET in this case) without trying to talk to the application. The ingressgateway then returns a 503 to the caller.

I'm still investigating the reason behind the RST/ACK from the sidecar.

BTW, I can now reproduce these after approximately 10-15 minutes.

I don't know if it helps debugging, but with everything we do in https://github.com/istio/istio/issues/12183, we only ever see the 503s being reported by the destination, not the source. That suggests to me that the retries and outlier detection are protecting the source from the problem.

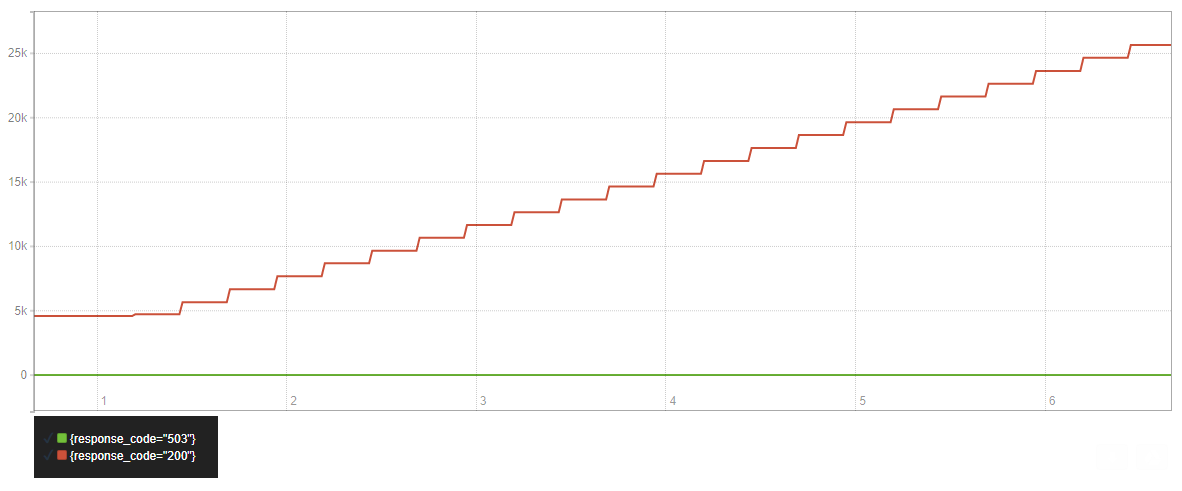

Also having sporadic 503 errors. In my case it's coming from a simple health check. The 503 does not come from the application. It comes from the istio-proxy. Found this when my pods were failing health checks, yet the application logs were 100% devoid of 503s. The istio-proxy logs showed about 2.9% of responses were 503 UC, the rest are 200.

All 503 responses had the UC flag.

From the envoy docs:

UC: Upstream connection termination in addition to 503 response code.
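To get a figure like the 2.9% above from your own istio-proxy logs, here is a rough Python sketch that tallies access-log lines by status code and response flag. The regular expression assumes the default access-log layout seen in the logs earlier in this thread (`"METHOD PATH PROTOCOL" STATUS FLAGS ...`). A custom log format would need a different pattern. Lines that do not match, such as `[warning]` config messages, are skipped.

```python
import re
from collections import Counter

# Assumed layout (default Istio/Envoy access log, as seen in this thread):
# [timestamp] "METHOD PATH PROTOCOL" STATUS RESPONSE_FLAGS ...
ACCESS_LOG = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<flags>\S+)'
)


def summarize(lines):
    """Return (matched_line_count, Counter keyed by (status, flags))."""
    counts = Counter()
    total = 0
    for line in lines:
        m = ACCESS_LOG.search(line)
        if not m:
            continue  # not an access-log line, e.g. a [warning] config message
        total += 1
        counts[(m["status"], m["flags"])] += 1
    return total, counts
```

Any iterable of lines will do, for example the output of `kubectl logs <pod> -c istio-proxy` saved to a file. `counts[("503", "UC")] / total` then gives the share of 503 UC responses.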

Running Istio 1.0.6

GKE 1.10.x

PS: This is the only deployment that is throwing 503s like this. However, I may have a malformed IP address in a ServiceEntry.

The malformed IP address happens to be the FQDN of a service that's on the same cluster but outside the mesh. The service does exist, and the FQDN is correct. Resolution is set to DNS. Not sure why this is being read as an IP address.

Logs

[2019-03-07 20:10:30.719][17][warning][config] bazel-out/k8-opt/bin/external/envoy/source/common/config/_virtual_includes/grpc_mux_subscription_lib/common/config/grpc_mux_subscription_impl.h:70] gRPC config for type.googleapis.com/envoy.api.v2.ClusterLoadAssignment rejected: malformed IP address: api-pricing.pricing.svc.cluster.local. Consider setting resolver_name or setting cluster type to 'STRICT_DNS' or 'LOGICAL_DNS'

...

This line shows up in the logs 2,141 times
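To catch this kind of problem before Envoy does, a hypothetical pre-flight check in Python can flag endpoint addresses that are not literal IPs. That is exactly what the rejected ClusterLoadAssignment above complains about. The sketch leaves out how the addresses are pulled from your ServiceEntry objects. The hostname in the test is the one from the log.

```python
import ipaddress


def non_ip_addresses(addresses):
    """Return the entries that are not literal IPv4/IPv6 addresses.

    Hypothetical helper: Envoy rejects an EDS ClusterLoadAssignment whose
    endpoint address is a hostname ("malformed IP address").
    """
    bad = []
    for addr in addresses:
        try:
            ipaddress.ip_address(addr)
        except ValueError:
            bad.append(addr)
    return bad
```

Any hostname this reports cannot be passed through as a static endpoint address. As the Envoy message itself suggests, it needs DNS-based resolution (a STRICT_DNS or LOGICAL_DNS cluster) instead.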

Upgrading to Istio 1.1.0 has given us a more resilient installation, in that the 503 responses are being handled such that they are less disruptive to our applications.

Istio 1.1.0

Kubernetes: 1.13.4_1515

However, we are still seeing a continuous stream of 503 responses across all of our pods, making them all appear unhealthy.

It would be highly preferable to see these eliminated so that we can see a correct health status for our pods.

I'm also intrigued to see many thousands of messages from istio-proxy like the one below. If there is anything further I can try or provide to help track down this issue, I'm happy to do so.

[2019-03-29 09:26:31.637][18][warning][misc] [external/envoy/source/common/protobuf/utility.cc:129] Using deprecated option 'envoy.api.v2.route.Route.per_filter_config'. This configuration will be removed from Envoy soon. Please see https://github.com/envoyproxy/envoy/blob/master/DEPRECATED.md for details.