Ingress-nginx: Nginx ingress keeps reloading and dropping connections

Is this a request for help?

I don't know. Either it is a bug or I am misunderstanding some concept

What keywords did you search in NGINX Ingress controller issues before filing this one? (If you have found any duplicates, you should instead reply there.):

- Nginx ingress "Backend successfully reloaded."

- nginx ingress "Configuration changes detected, backend reload required."

- nginx ingress random reloads

- nginx ingress random restarts

Is this a BUG REPORT or FEATURE REQUEST? (choose one):

Bug report

NGINX Ingress controller version:

I tried images:

- quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.18

- quay.io/aledbf/nginx-ingress-controller:0.413

Kubernetes version (use kubectl version):

Client Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.2", GitCommit:"bb9ffb1654d4a729bb4cec18ff088eacc153c239", GitTreeState:"clean", BuildDate:"2018-08-08T16:31:10Z", GoVersion:"go1.10.3", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"10+", GitVersion:"v1.10.5-gke.4", GitCommit:"6265b9797fc8680c8395abeab12c1e3bad14069a", GitTreeState:"clean", BuildDate:"2018-08-04T03:47:40Z", GoVersion:"go1.9.3b4", Compiler:"gc", Platform:"linux/amd64"}

Environment:

- Cloud provider or hardware configuration: GKE

What happened:

- Make curl request on endpoint which responds exactly after 120 seconds.

- I can see the request arrives at the destination service which processes it.

- During waiting for response I see in logs following:

I0824 17:16:57.102312 9 controller.go:171] Configuration changes detected, backend reload required.

I0824 17:16:57.644812 9 controller.go:187] Backend successfully reloaded.

2018/08/24 17:16:57 [warn] 1086#1086: *4706 a client request body is buffered to a temporary file /tmp/client-body/0000000016, client: ::1, server: , request: "POST /configuration/backends HTTP/1.1", host: "localhost:18080"

I0824 17:16:57.648475 9 controller.go:204] Dynamic reconfiguration succeeded.

- At this point the connection is dropped and I can see in curl following:

Empty reply from server

* Connection #0 to host 127.0.0.1 left intact

curl: (52) Empty reply from server

What you expected to happen:

I expected to get the results.

How to reproduce it (as minimally and precisely as possible):

Already described.

Anything else we need to know:

- The timeouts are correctly set to 900s, I can see them in nginx.conf.

- Nginx ingress was installed with Helm

- I edited the deployment to set

--enable-dynamic-configuration=falsewith no effect.

All 10 comments

@knyttl please post the ingress controller pod logs to see exactly why the connection is being closed

We've also seen very similar symptoms with our Nginx ingress. The symptoms were correlated with a pod, that was failing to start. Just in case someone's experiencing this issue.

I'll try to reproduce if anyone's curious, but deleting the pod/service stopped the behaviour from happening.

I'll try to reproduce if anyone's curious, but deleting the pod/service stopped the behaviour from happening.

This is fixed in https://github.com/kubernetes/ingress-nginx/pull/3338 (avoid reloads for 503, endpoints not available)

Closing. Please update to 0.21.0. If the issue persists please reopen the issue.

Still present with 0.21.0 but wondering if it's an issue or a wrong way of using it:

It's a multi namespace environment (multi-projects)

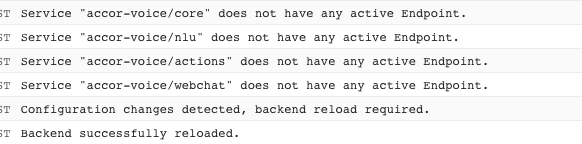

The ingress-nginx deployment is on the default namespace. When a new deployment is made on any other namespace it is restarted (see image) and shows the does not have valid endpoints

Should there be a single nginx-ingress deployment by namespace?

have the same issue. whenever I deploy a new App instanse in another namespace with a new host added to ingress, it reloads the backend and reset my long polling connection in other name space.

For me it was solved with

worker-shutdown-timeout: 900s

in nginx controller configmap

I believe I hit the same issue, I have jaeger ingress controller which is mapped to a specific fqdn, but reguallry I found this is deleted, and the default jaeger ingress is created which is has no fqdn, is my understanding the that adding worker-shutdown-timeout: 900s to the nginx controller will solve this issue?

Increasing worker-shutdown-timeout generally speaking is dangerous as doing that means the new changes in the cluster will take longer to be reflected in Nginx configuration.

If you would like to avoid connection resets, it is better to deploy a dedicated ingress-nginx for the app that requires long running connection. That way (assuming you're using recent ingress-nginx) you minimize the possibility of Nginx reloads.

Increasing worker-shutdown-timeout generally speaking is dangerous as doing that means the new changes in the cluster will take longer to be reflected in Nginx configuration.

I think the correct statement would be "might take longer" - it will take longer only if there is some open connection. As soon as the connection is closed, the worker will shutdown. That is desired.

Most helpful comment

Still present with 0.21.0 but wondering if it's an issue or a wrong way of using it:

It's a multi namespace environment (multi-projects)

The ingress-nginx deployment is on the

defaultnamespace. When a new deployment is made on any other namespace it is restarted (see image) and shows thedoes not have valid endpointsShould there be a single nginx-ingress deployment by namespace?