Incubator-mxnet: Some problems with support for the Volta GPU architecture (V100 and CUDA 9 / cuDNN v7)

1 Not all Volta GPU features are supported

I found that MXNet calls the SgemmEx API when the inputs and outputs are FP16: the data is FP16, but the computation is done in FP32. The same is true of cudnn_convolution.

2 How can we get 3.5x faster than Pascal?

After I switched to the real Hgemm API and set the cuDNN convolution compute type to FP16, the VGG network ran up to 2x faster than on Pascal. So which network did the MXNet team train to achieve the 3.5x speedup?

3 Tensor mode

In convolution, MXNet enables tensor ops only by setting the math mode. According to the API documentation, however, using tensor ops also requires selecting a suitable forward and backward algorithm, such as CUDNN_CONVOLUTION_FWD_ALGO_IMPLICIT_PRECOMP_GEMM, and supplying input and output data that meet the requirements.

Environment

Hardware: V100-PCIE-16G

Software: Mxnet-0.12 CUDA-9.0 CUDNN-7

Network: VGG

All 5 comments

You don't need (and in most cases you don't want) to set the fp16 compute type to use TensorCore. It does its internal accumulation in fp32, which is why it is exposed via functions like sgemmEx. The cuDNN convolution algorithm is selected via the cudnnFind function (it is very beneficial to set the MXNET_CUDNN_AUTOTUNE_DEFAULT environment variable to 2).
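For anyone who launches training from a Python script, here is a minimal sketch of setting that variable. It assumes the variable should be in place before MXNet is imported, which is the conservative choice; the import itself is left commented out so the snippet also runs on a machine without MXNet.

```python
import os

# Assumption: setting the variable before importing MXNet guarantees it is
# visible when the cuDNN convolution operators are created.
os.environ["MXNET_CUDNN_AUTOTUNE_DEFAULT"] = "2"

# import mxnet as mx  # uncomment where MXNet is installed

print(os.environ["MXNET_CUDNN_AUTOTUNE_DEFAULT"])
```

Exporting the variable in the shell before starting the training script should have the same effect.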

You can look at the fp16 examples in example/image-classification. The typical benchmark that we run with synthetic data (I assume a single GPU here) is:

python example/image-classification/train_imagenet.py --gpu 0 --batch-size 128 --num-epochs 1 --disp-batches 100 --network resnet-v1 --num-layers 50 --dtype float16 --benchmark 1

On Pascal you would need to set the batch size to 64 or 96 and dtype to float32.
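To keep the two configurations side by side, the command can be generated from a small helper. This is only a sketch: the function name `benchmark_command` and the `SETTINGS` table are made up for illustration, the Pascal batch size defaults to 64 (96 can be passed explicitly), and all flags are copied from the command above.

```python
# Hypothetical helper: per-architecture defaults taken from this comment.
SETTINGS = {
    "volta": {"batch_size": 128, "dtype": "float16"},
    "pascal": {"batch_size": 64, "dtype": "float32"},
}

def benchmark_command(arch, batch_size=None):
    """Return the benchmark command as an argument list for the given GPU."""
    cfg = SETTINGS[arch]
    size = cfg["batch_size"] if batch_size is None else batch_size
    return [
        "python", "example/image-classification/train_imagenet.py",
        "--gpu", "0",
        "--batch-size", str(size),
        "--num-epochs", "1",
        "--disp-batches", "100",
        "--network", "resnet-v1",
        "--num-layers", "50",
        "--dtype", cfg["dtype"],
        "--benchmark", "1",
    ]

print(" ".join(benchmark_command("volta")))
print(" ".join(benchmark_command("pascal", batch_size=96)))
```

The returned list can be passed to `subprocess.run` from the MXNet source directory.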

@ptrendx

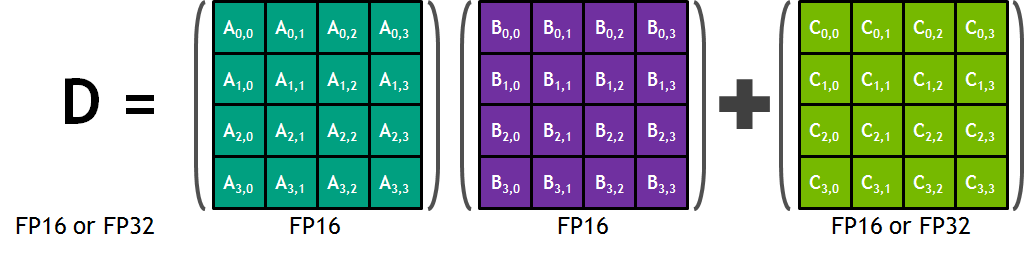

I think, Tensor op internal calculation method is FP16, not FP32. You can refer to the following document diagram.

It is either FP16 or FP32, and the difference in performance between those 2 is pretty negligible, but the accumulation precision is important for training.

I am from NVIDIA, by the way ;-).
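A small CPU-only illustration of why the accumulation precision matters. This is a simulation under stated assumptions, not what the hardware literally does: it rounds the running sum to FP16 or FP32 after every addition using the standard `struct` module, with FP16 inputs in both cases. The helper names are made up for the example.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def dot(a, b, round_acc):
    """Dot product of FP16 inputs; the accumulator is rounded by round_acc."""
    acc = 0.0
    for x, y in zip(a, b):
        # The product of two FP16 values is exact in a Python float.
        prod = to_fp16(x) * to_fp16(y)
        acc = round_acc(acc + prod)
    return acc

ones = [1.0] * 4096
fp16_acc = dot(ones, ones, to_fp16)
fp32_acc = dot(ones, ones, to_fp32)
print(fp16_acc, fp32_acc)  # 2048.0 4096.0
```

With FP16 accumulation the sum stalls at 2048, because 2049 is not representable in FP16 and rounds back to 2048. With FP32 accumulation the result is the exact 4096.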

@ptrendx

I got it. Thank you for your reply.

But why don't you handle the FP16 case? I found that without Tensor mode, MXNet still takes FP16 input and computes in FP32. In the convolution op, only the compute type needs to be changed to FP16.

I have tested tensor mode in cuBLAS. Both Sgemm and Hgemm work well, and Hgemm gets a bigger speedup than Sgemm.

@ptrendx

OK, I got a 3.33x speedup for V100 with tensor ops over P40 on the ResNet-50 network.

Thank you for your assistance.
