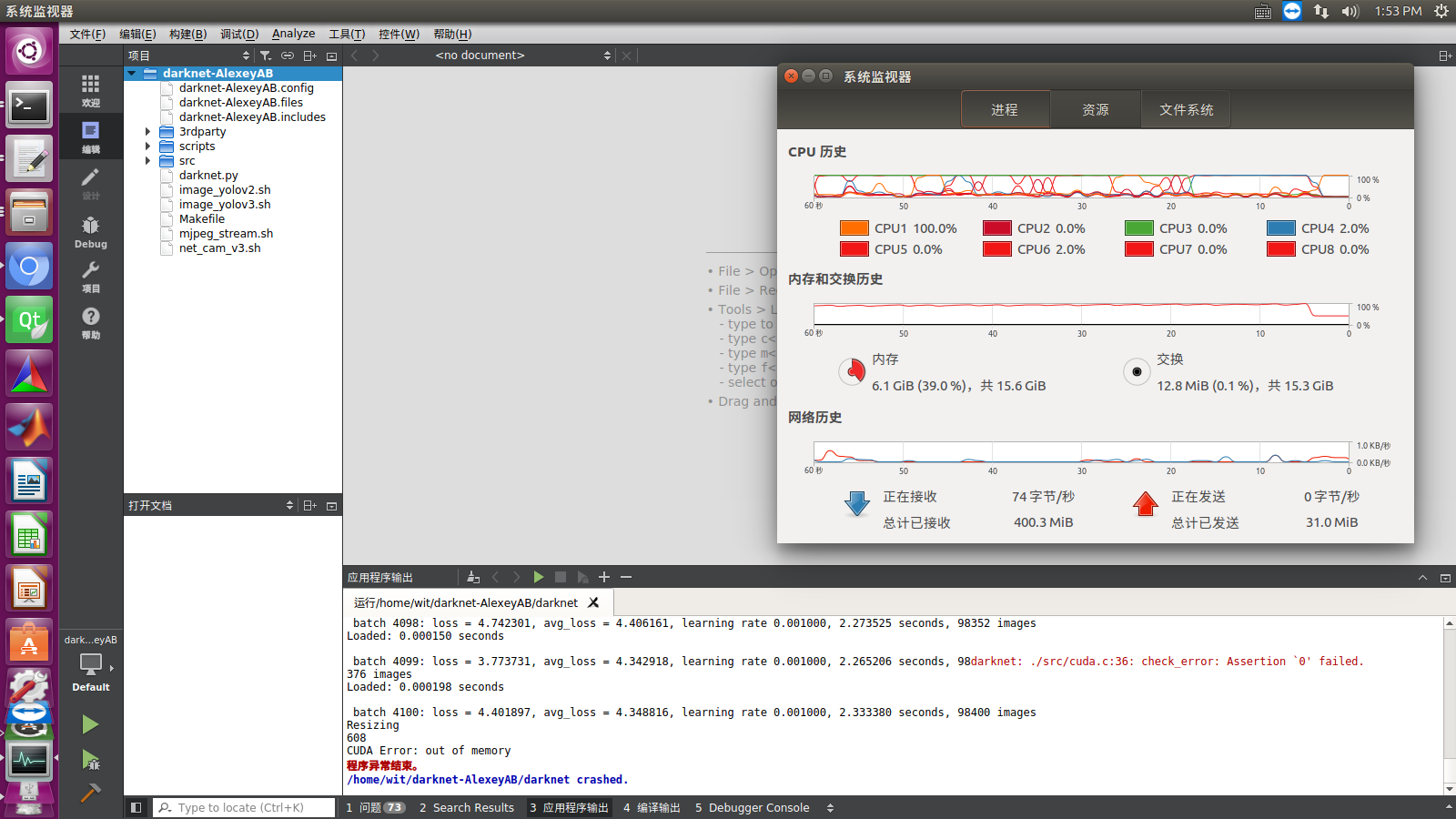

While running detector.c::train_detector, video memory fluctuates between 3000+ MB and 5000+ MB. That's acceptable for a GTX 1070. But RAM and swap memory keep growing until they are full.

So every 600-700 batches the program is killed for lack of memory, and I have to resume training from the auto-saved weights.

I've checked the malloc/realloc/calloc calls inside while(get_current_batch(net) < net.max_batches){ ... }. Those are all freed, so the memory problem does not seem to come from those pointers.

I'm not very familiar with pthread operations. Has anyone checked whether the pthread usage leaks?
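For readers with the same pthread question, here is a minimal sketch of the usual rule: a joinable thread keeps its stack and bookkeeping allocated until it is joined (or detached). The names load_worker and run_and_join are hypothetical and are not taken from darknet. The sketch only illustrates the pattern and does not claim that this is where the leak in this issue comes from.

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical worker standing in for a data-loading thread. */
static void *load_worker(void *arg)
{
    int *out = arg;
    *out = 42; /* pretend some work was done */
    return NULL;
}

/* Starts n joinable threads one after another and joins each of them.
   Returns how many threads were created, joined, and produced a result. */
int run_and_join(int n)
{
    int joined = 0;
    assert(n >= 0);
    for (int i = 0; i < n; ++i) {
        pthread_t tid;
        int result = 0;
        if (pthread_create(&tid, NULL, load_worker, &result) != 0)
            break;
        /* Without this join (or a pthread_detach), the resources of the
           finished thread are never released. */
        if (pthread_join(tid, NULL) != 0)
            break;
        if (result == 42)
            ++joined;
    }
    return joined;
}
```

Calling run_and_join(8) in a loop should leave memory usage flat. If the pthread_join call is removed, each iteration leaves one thread's resources behind. Build with -pthread.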


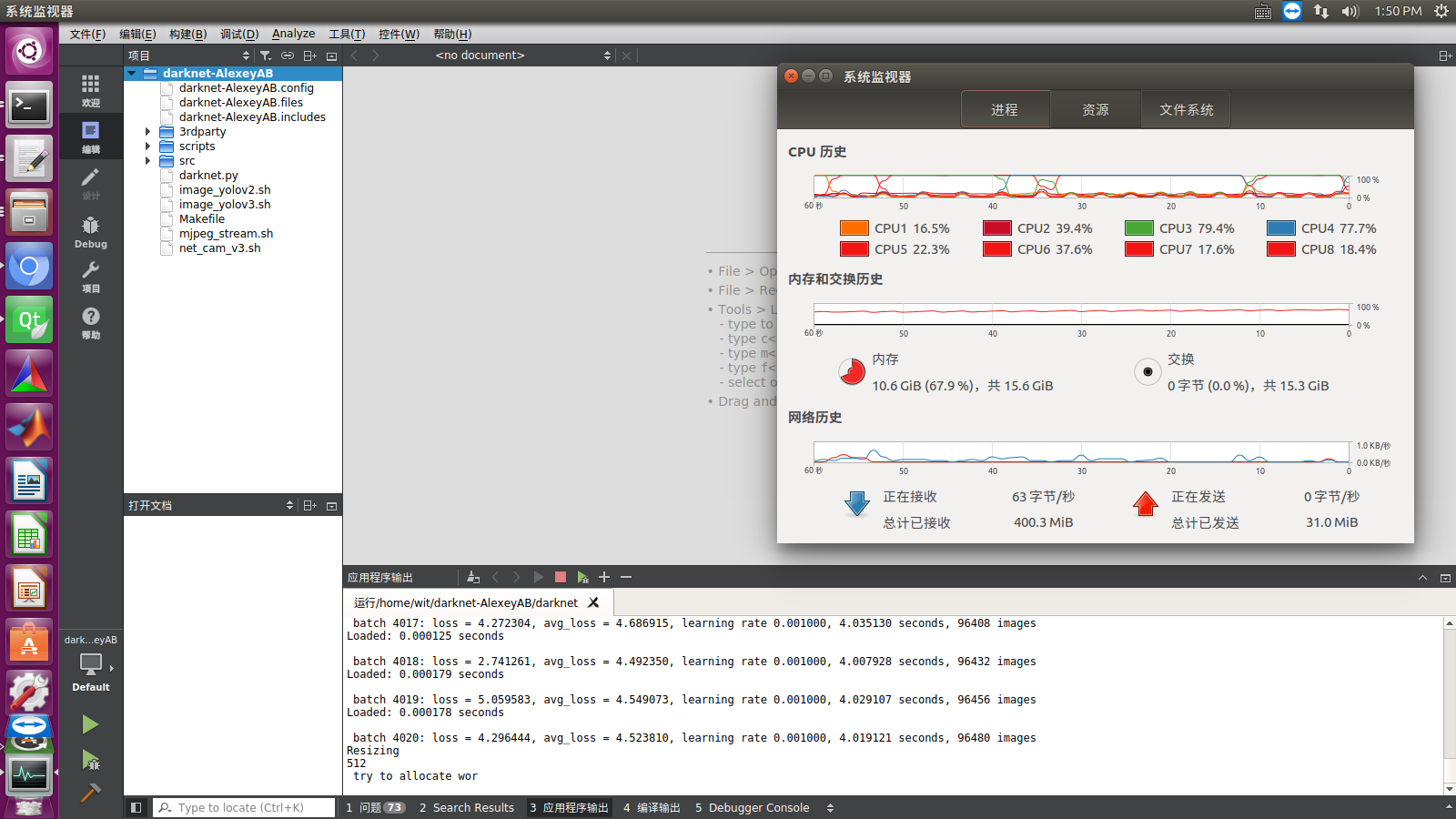

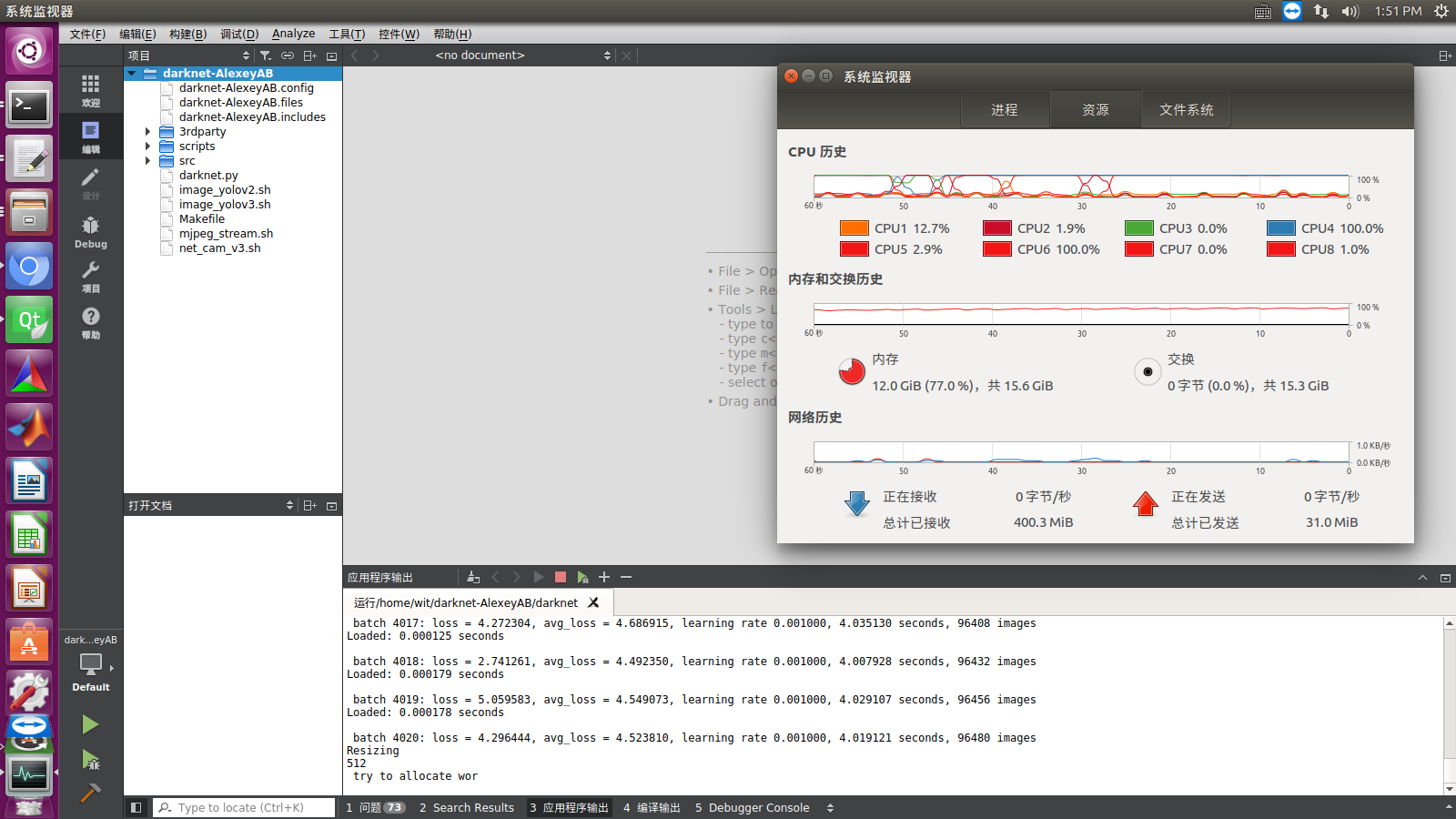

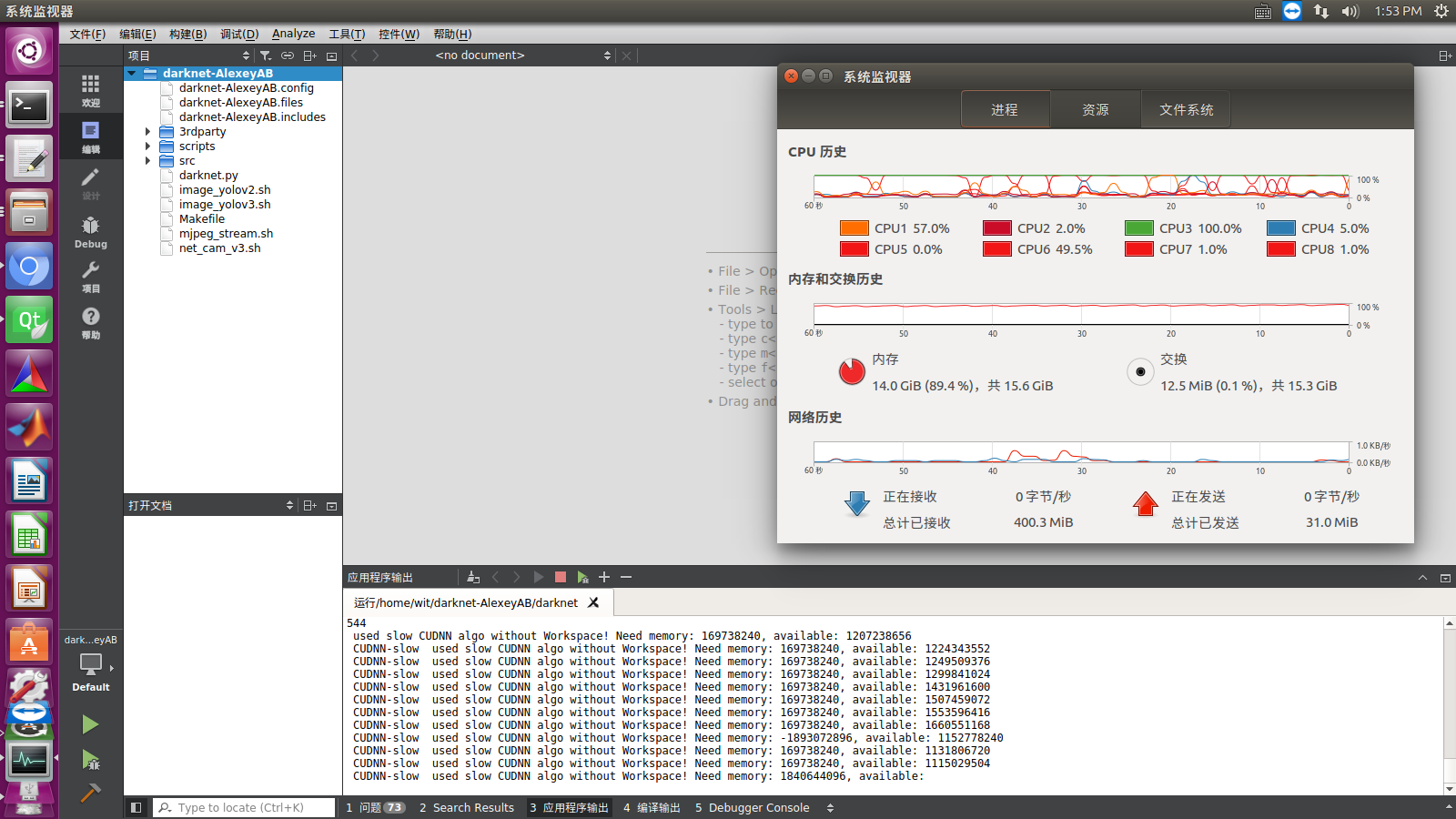

But the RAM and swap memory keep growing until it's full.

Can you show a screenshot?

Do you use Windows or Linux?

- What params do you use in the Makefile on Linux? (Or did you compile x64 & Release on Windows?)

- How much RAM do you have?

@AlexeyAB

In the screenshots, my colleagues are also using the graphics card, so there was not enough GPU memory to keep the run going until RAM filled up, but it is clear that RAM usage keeps rising.

Ubuntu 14.04 x64, already ran sudo apt-get upgrade.

Now I've summarized it with valgrind (5400+ lines; same project, same data, but a different batch and subdivisions for my own computer).

valgrind-24078.log

Over 100 batches, it reports:

==24078== LEAK SUMMARY:

==24078== definitely lost: 4,875 bytes in 210 blocks

==24078== indirectly lost: 1,253,175,880 bytes in 2,407 blocks

==24078== possibly lost: 666,536,579 bytes in 17,809 blocks

==24078== still reachable: 772,658,855 bytes in 610,188 blocks

==24078== suppressed: 0 bytes in 0 blocks

==24078== Reachable blocks (those to which a pointer was found) are not shown.

==24078== To see them, rerun with: --leak-check=full --show-leak-kinds=all

It seems that the leak is still caused by the XXalloc calls.
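A note on reading the summary above: valgrind reports a block as "indirectly lost" when the only pointers to it live inside another block that is itself lost. A small "definitely lost" figure next to a large "indirectly lost" figure is therefore what one would expect if small structs that own large buffers are dropped without being freed. The sketch below shows that ownership pattern with a hypothetical batch_t type. It is not darknet's data structure and it is only one possible explanation of these numbers.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical container: a small struct that owns a large buffer. */
typedef struct {
    float *vals;
    size_t n;
} batch_t;

/* Allocates the struct and its zero-initialized buffer.
   Returns NULL if either allocation fails. */
batch_t *batch_new(size_t n)
{
    batch_t *b = malloc(sizeof *b);
    if (!b)
        return NULL;
    b->vals = calloc(n, sizeof *b->vals);
    if (!b->vals) {
        free(b);
        return NULL;
    }
    b->n = n;
    return b;
}

/* Frees the inner buffer first, then the struct itself.
   If the caller drops b without calling this, valgrind counts the struct
   as definitely lost and vals as indirectly lost. */
void batch_free(batch_t *b)
{
    if (!b)
        return;
    free(b->vals);
    free(b);
}
```

With this layout, forgetting batch_free once per iteration loses only sizeof(batch_t) bytes "definitely" but the whole buffer "indirectly", which matches the shape of the summary.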

@aimhabo Thanks!

I just fixed some memory leaks for: training and test. Try to update your code from GitHub and recompile.

Now I can't find any memory leaks by using MS Visual Studio 2015 _CrtSetDbgFlag(_CRTDBG_ALLOC_MEM_DF | _CRTDBG_LEAK_CHECK_DF);

If it doesn't help, then try to train with different options:

- Is there a problem if you train with random=0 in your cfg-file?

- Is there a problem if you train with OPENCV=0 in your Makefile?

- Is there a problem if you train with CUDNN=0 in your Makefile?

Also, valgrind detected some leaks inside CUDA, in the thread-local storage allocation _dl_allocate_tls, which I think are not true memory leaks:

==24078== 368 bytes in 1 blocks are definitely lost in loss record 1,363 of 2,106

==24078== at 0x4C2CC70: calloc (in /usr/lib/valgrind/vgpreload_memcheck-amd64-linux.so)

==24078== by 0x4012EE4: allocate_dtv (dl-tls.c:296)

==24078== by 0x4012EE4: _dl_allocate_tls (dl-tls.c:460)

==24078== by 0x36103D92: allocate_stack (allocatestack.c:589)

==24078== by 0x36103D92: pthread_create@@GLIBC_2.2.5 (pthread_create.c:500)

==24078== by 0x65CA2A5E: ??? (in /usr/lib/x86_64-linux-gnu/libcuda.so.384.81)

==24078== by 0x65C59BA9: ??? (in /usr/lib/x86_64-linux-gnu/libcuda.so.384.81)

==24078== by 0x65C5AFCE: ??? (in /usr/lib/x86_64-linux-gnu/libcuda.so.384.81)

==24078== by 0x65B8C5CB: ??? (in /usr/lib/x86_64-linux-gnu/libcuda.so.384.81)

==24078== by 0x65CC765A: cuDevicePrimaryCtxRetain (in /usr/lib/x86_64-linux-gnu/libcuda.so.384.81)

==24078== by 0x3569B72F: ??? (in /usr/local/cuda-9.0/lib64/libcudart.so.9.0.176)

==24078== by 0x3569B951: ??? (in /usr/local/cuda-9.0/lib64/libcudart.so.9.0.176)

==24078== by 0x3569D017: ??? (in /usr/local/cuda-9.0/lib64/libcudart.so.9.0.176)

==24078== by 0x3568F11D: ??? (in /usr/local/cuda-9.0/lib64/libcudart.so.9.0.176)

==24078==

@AlexeyAB

Can we have some versioning to know what has changed and when?

@dexception What do you mean?

You can compare any range of commits by using, for example, TortoiseGit on Windows.

Or you can click on individual commits to see the changes online: https://github.com/AlexeyAB/darknet/commits/master

@AlexeyAB Also, network.c is missing #include "upsample_layer.h". It compiles with gcc, but g++ complains about a missing declaration.

I haven't tested it yet. I can do so in a few days.

it works!
