Azure-docs: Blob Module deployment fails; 3 possible improvements

Today I tried to add the new blob storage support to my already running IoT Edge device on Ubuntu 18.04.

I did not get it running.

I would like to share my findings. I tried to contact Greg Manyak; I talked with him last week in Barcelona, but I do not have his email address. Is he the right contact person?

So I used the procedure at https://docs.microsoft.com/en-us/azure/iot-edge/how-to-store-data-blob

This is one of the hardest documents to read compared to the other documents regarding IoT Edge. My colleague encountered similar issues with this tutorial.

First, it says I have to use mcr.microsoft.com/azure-blob-storage:latest. This naming convention is strange. As far as I know, the edgeAgent cannot automatically update the module when “latest” changes in the repository. It seems I can only update to a newer version by removing the current image from the local repository. As far as I know, using “latest” was not recommended when IoT Edge was in public preview.

Isn’t it better to give it a real version number, as with all other modules?

Secondly, the explanation of the container create options is not very clear. The examples given are hard to understand. What I came up with was:

{

"Env":[

"LOCAL_STORAGE_ACCOUNT_NAME=localblobstorageaccountname",

"LOCAL_STORAGE_ACCOUNT_KEY=pY[64 long]hozVm5QJtL8Jg=="

],

"HostConfig":{

"Mounts": [

{"Target": "/var/opt/msblob", "Source": "msBlob", "Type": "volume"}

],

"Binds":[

"msBlob"

],

"PortBindings":{

"11002/tcp":[{"HostPort":"11002"}]

}

}

}

I hope I have added the right values for the environment variables.
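For reference, a minimal Python sketch of how a value for LOCAL_STORAGE_ACCOUNT_KEY could be generated. It assumes the module expects a base64-encoded 64-byte key, as the "64 long" and "64 bytes long" placeholders in this thread suggest; the helper name make_account_key is hypothetical and not part of any Azure tooling.

```python
import base64
import secrets


def make_account_key(num_bytes: int = 64) -> str:
    """Return num_bytes random bytes, base64-encoded as ASCII text."""
    # Assumption: any base64-encoded 64-byte value is accepted as the key.
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


if __name__ == "__main__":
    # Print a line that can be pasted into the "Env" list of the create options.
    print(f"LOCAL_STORAGE_ACCOUNT_KEY={make_account_key()}")
```

With 64 input bytes the encoded key is 88 characters long and ends in "==", which matches the shape of the keys quoted in this thread.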

Regarding the volume, I added the extra “Mounts”. This should create the right volume automatically. I took it from: https://docs.microsoft.com/en-us/azure/iot-edge/tutorial-store-data-sql-server

This way, I can have a volume without creating it on the device itself. I think this could actually work 😊

These options are accepted in the Azure Portal. But after deployment, I get the following exceptions in the edgeAgent:

2018-10-21 10:18:05.058 +00:00 [INF] - Executing command: "Create module blob"

2018-10-21 10:19:51.012 +00:00 [ERR] - Executing command for operation ["create"] failed.

System.Threading.Tasks.TaskCanceledException: A task was canceled.

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpBufferedStream.ReadLineAsync(CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpBufferedStream.cs:line 50

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpRequestResponseSerializer.SetResponseStatusLine(HttpResponseMessage httpResponse, HttpBufferedStream bufferedStream, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpRequestResponseSerializer.cs:line 120

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpRequestResponseSerializer.DeserializeResponse(HttpBufferedStream bufferedStream, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpRequestResponseSerializer.cs:line 67

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpUdsMessageHandler.SendAsync(HttpRequestMessage request, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpUdsMessageHandler.cs:line 37

at System.Net.Http.HttpClient.FinishSendAsyncUnbuffered(Task1 sendTask, HttpRequestMessage request, CancellationTokenSource cts, Boolean disposeCts) at Microsoft.Azure.Devices.Edge.Agent.Edgelet.GeneratedCode.EdgeletHttpClient.CreateModuleAsync(String api_version, ModuleSpec module, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Edgelet/generatedCode/EdgeletHttpClient.cs:line 269 at Microsoft.Azure.Devices.Edge.Agent.Edgelet.ModuleManagementHttpClient.Execute[T](Func1 func, String operation)

at Microsoft.Azure.Devices.Edge.Agent.Edgelet.ModuleManagementHttpClient.CreateModuleAsync(ModuleSpec moduleSpec) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Edgelet/ModuleManagementHttpClient.cs:line 89

at Microsoft.Azure.Devices.Edge.Agent.Core.LoggingCommandFactory.LoggingCommand.ExecuteAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/LoggingCommandFactory.cs:line 61

2018-10-21 10:19:51.151 +00:00 [ERR] - Executing command for operation ["Command Group: (

[Create module blob]

[Start module blob]

)"] failed.

System.Threading.Tasks.TaskCanceledException: A task was canceled.

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpBufferedStream.ReadLineAsync(CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpBufferedStream.cs:line 50

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpRequestResponseSerializer.SetResponseStatusLine(HttpResponseMessage httpResponse, HttpBufferedStream bufferedStream, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpRequestResponseSerializer.cs:line 120

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpRequestResponseSerializer.DeserializeResponse(HttpBufferedStream bufferedStream, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpRequestResponseSerializer.cs:line 67

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpUdsMessageHandler.SendAsync(HttpRequestMessage request, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpUdsMessageHandler.cs:line 37

at System.Net.Http.HttpClient.FinishSendAsyncUnbuffered(Task1 sendTask, HttpRequestMessage request, CancellationTokenSource cts, Boolean disposeCts) at Microsoft.Azure.Devices.Edge.Agent.Edgelet.GeneratedCode.EdgeletHttpClient.CreateModuleAsync(String api_version, ModuleSpec module, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Edgelet/generatedCode/EdgeletHttpClient.cs:line 269 at Microsoft.Azure.Devices.Edge.Agent.Edgelet.ModuleManagementHttpClient.Execute[T](Func1 func, String operation)

at Microsoft.Azure.Devices.Edge.Agent.Edgelet.ModuleManagementHttpClient.CreateModuleAsync(ModuleSpec moduleSpec) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Edgelet/ModuleManagementHttpClient.cs:line 89

at Microsoft.Azure.Devices.Edge.Agent.Core.LoggingCommandFactory.LoggingCommand.ExecuteAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/LoggingCommandFactory.cs:line 61

at Microsoft.Azure.Devices.Edge.Agent.Core.Commands.GroupCommand.ExecuteAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/commands/GroupCommand.cs:line 36

at Microsoft.Azure.Devices.Edge.Agent.Core.LoggingCommandFactory.LoggingCommand.ExecuteAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/LoggingCommandFactory.cs:line 61

2018-10-21 10:19:51.181 +00:00 [ERR] - Step failed in deployment 74, continuing execution. Failure when running command Command Group: (

[Create module blob]

[Start module blob]

). Will retry in 00s.

2018-10-21 10:19:51.185 +00:00 [INF] - Plan execution ended for deployment 74

2018-10-21 10:19:51.208 +00:00 [ERR] - Edge agent plan execution failed.

System.AggregateException: One or more errors occurred. (A task was canceled.) ---> System.Threading.Tasks.TaskCanceledException: A task was canceled.

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpBufferedStream.ReadLineAsync(CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpBufferedStream.cs:line 50

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpRequestResponseSerializer.SetResponseStatusLine(HttpResponseMessage httpResponse, HttpBufferedStream bufferedStream, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpRequestResponseSerializer.cs:line 120

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpRequestResponseSerializer.DeserializeResponse(HttpBufferedStream bufferedStream, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpRequestResponseSerializer.cs:line 67

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpUdsMessageHandler.SendAsync(HttpRequestMessage request, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpUdsMessageHandler.cs:line 37

at System.Net.Http.HttpClient.FinishSendAsyncUnbuffered(Task1 sendTask, HttpRequestMessage request, CancellationTokenSource cts, Boolean disposeCts) at Microsoft.Azure.Devices.Edge.Agent.Edgelet.GeneratedCode.EdgeletHttpClient.CreateModuleAsync(String api_version, ModuleSpec module, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Edgelet/generatedCode/EdgeletHttpClient.cs:line 269 at Microsoft.Azure.Devices.Edge.Agent.Edgelet.ModuleManagementHttpClient.Execute[T](Func1 func, String operation)

at Microsoft.Azure.Devices.Edge.Agent.Edgelet.ModuleManagementHttpClient.CreateModuleAsync(ModuleSpec moduleSpec) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Edgelet/ModuleManagementHttpClient.cs:line 89

at Microsoft.Azure.Devices.Edge.Agent.Core.LoggingCommandFactory.LoggingCommand.ExecuteAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/LoggingCommandFactory.cs:line 61

at Microsoft.Azure.Devices.Edge.Agent.Core.Commands.GroupCommand.ExecuteAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/commands/GroupCommand.cs:line 36

at Microsoft.Azure.Devices.Edge.Agent.Core.LoggingCommandFactory.LoggingCommand.ExecuteAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/LoggingCommandFactory.cs:line 61

at Microsoft.Azure.Devices.Edge.Agent.Core.PlanRunners.OrderedRetryPlanRunner.ExecuteAsync(Int64 deploymentId, Plan plan, CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/planrunners/OrdererdRetryPlanRunner.cs:line 86

--- End of inner exception stack trace ---

at Microsoft.Azure.Devices.Edge.Agent.Core.PlanRunners.OrderedRetryPlanRunner.<>c.b__7_0(List 1 f) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/planrunners/OrdererdRetryPlanRunner.cs:line 114 at Microsoft.Azure.Devices.Edge.Agent.Core.PlanRunners.OrderedRetryPlanRunner.ExecuteAsync(Int64 deploymentId, Plan plan, CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/planrunners/OrdererdRetryPlanRunner.cs:line 115 at Microsoft.Azure.Devices.Edge.Agent.Core.Agent.ReconcileAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/Agent.cs:line 176 ---> (Inner Exception #0) System.Threading.Tasks.TaskCanceledException: A task was canceled. at Microsoft.Azure.Devices.Edge.Util.Uds.HttpBufferedStream.ReadLineAsync(CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpBufferedStream.cs:line 50 at Microsoft.Azure.Devices.Edge.Util.Uds.HttpRequestResponseSerializer.SetResponseStatusLine(HttpResponseMessage httpResponse, HttpBufferedStream bufferedStream, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpRequestResponseSerializer.cs:line 120 at Microsoft.Azure.Devices.Edge.Util.Uds.HttpRequestResponseSerializer.DeserializeResponse(HttpBufferedStream bufferedStream, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpRequestResponseSerializer.cs:line 67 at Microsoft.Azure.Devices.Edge.Util.Uds.HttpUdsMessageHandler.SendAsync(HttpRequestMessage request, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpUdsMessageHandler.cs:line 37 at System.Net.Http.HttpClient.FinishSendAsyncUnbuffered(Task1 sendTask, HttpRequestMessage request, CancellationTokenSource cts, Boolean disposeCts)

at Microsoft.Azure.Devices.Edge.Agent.Edgelet.GeneratedCode.EdgeletHttpClient.CreateModuleAsync(String api_version, ModuleSpec module, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Edgelet/generatedCode/EdgeletHttpClient.cs:line 269

at Microsoft.Azure.Devices.Edge.Agent.Edgelet.ModuleManagementHttpClient.ExecuteT

at Microsoft.Azure.Devices.Edge.Agent.Edgelet.GeneratedCode.EdgeletHttpClient.CreateModuleAsync(String api_version, ModuleSpec module, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Edgelet/generatedCode/EdgeletHttpClient.cs:line 269

at Microsoft.Azure.Devices.Edge.Agent.Edgelet.ModuleManagementHttpClient.ExecuteT in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/planrunners/OrdererdRetryPlanRunner.cs:line 114

at Microsoft.Azure.Devices.Edge.Agent.Core.PlanRunners.OrderedRetryPlanRunner.ExecuteAsync(Int64 deploymentId, Plan plan, CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/planrunners/OrdererdRetryPlanRunner.cs:line 115

at Microsoft.Azure.Devices.Edge.Agent.Core.Agent.ReconcileAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/Agent.cs:line 176

at Microsoft.Azure.Devices.Edge.Agent.Core.Agent.ReconcileAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/Agent.cs:line 187

---> (Inner Exception #0) System.Threading.Tasks.TaskCanceledException: A task was canceled.

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpBufferedStream.ReadLineAsync(CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpBufferedStream.cs:line 50

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpRequestResponseSerializer.SetResponseStatusLine(HttpResponseMessage httpResponse, HttpBufferedStream bufferedStream, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpRequestResponseSerializer.cs:line 120

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpRequestResponseSerializer.DeserializeResponse(HttpBufferedStream bufferedStream, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpRequestResponseSerializer.cs:line 67

at Microsoft.Azure.Devices.Edge.Util.Uds.HttpUdsMessageHandler.SendAsync(HttpRequestMessage request, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-util/src/Microsoft.Azure.Devices.Edge.Util/uds/HttpUdsMessageHandler.cs:line 37

at System.Net.Http.HttpClient.FinishSendAsyncUnbuffered(Task1 sendTask, HttpRequestMessage request, CancellationTokenSource cts, Boolean disposeCts) at Microsoft.Azure.Devices.Edge.Agent.Edgelet.GeneratedCode.EdgeletHttpClient.CreateModuleAsync(String api_version, ModuleSpec module, CancellationToken cancellationToken) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Edgelet/generatedCode/EdgeletHttpClient.cs:line 269 at Microsoft.Azure.Devices.Edge.Agent.Edgelet.ModuleManagementHttpClient.Execute[T](Func1 func, String operation)

at Microsoft.Azure.Devices.Edge.Agent.Edgelet.ModuleManagementHttpClient.CreateModuleAsync(ModuleSpec moduleSpec) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Edgelet/ModuleManagementHttpClient.cs:line 89

at Microsoft.Azure.Devices.Edge.Agent.Core.LoggingCommandFactory.LoggingCommand.ExecuteAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/LoggingCommandFactory.cs:line 61

at Microsoft.Azure.Devices.Edge.Agent.Core.Commands.GroupCommand.ExecuteAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/commands/GroupCommand.cs:line 36

at Microsoft.Azure.Devices.Edge.Agent.Core.LoggingCommandFactory.LoggingCommand.ExecuteAsync(CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/LoggingCommandFactory.cs:line 61

at Microsoft.Azure.Devices.Edge.Agent.Core.PlanRunners.OrderedRetryPlanRunner.ExecuteAsync(Int64 deploymentId, Plan plan, CancellationToken token) in /home/vsts/work/1/s/edge-agent/src/Microsoft.Azure.Devices.Edge.Agent.Core/planrunners/OrdererdRetryPlanRunner.cs:line 86<---

2018-10-21 10:19:52.284 +00:00 [INF] - Updated reported properties

2018-10-21 10:19:57.963 +00:00 [INF] - Plan execution started for deployment 74

2018-10-21 10:19:57.984 +00:00 [INF] - Plan execution ended for deployment 74

^C

I have no clue what the reason for this exception is.

So I have these questions:

• Can you confirm the container create options configuration of my blob module is correct?

• Do you agree adding the creation of a new volume in the create options is a good idea?

• Do you agree the use of “latest” as version indication in the name of the module is less optimal?

If there are any questions or if I am doing everything wrong 😊 or if there is better documentation to test, please send me a message.

Regards,

Sander

Document Details

⚠ Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.

- ID: 07a44a28-a19c-f68e-167c-5e8ad91605e0

- Version Independent ID: 33eacdff-4f0a-c543-d8f9-e7b208bab015

- Content: Azure Blob Storage on Azure IoT Edge devices

- Content Source: articles/iot-edge/how-to-store-data-blob.md

- Service: iot-edge

- GitHub Login: @kgremban

- Microsoft Alias: kgremban

All 28 comments

@sandervandevelde Thanks for the feedback! We are currently investigating and will update you shortly.

@sandervandevelde

Ad. "Can you confirm the container create options configuration of my blob module is correct?"

Looking at the Docker documentation, the Mounts option is a newer way of creating binds that replaces the Binds, Volumes, and Tmpfs options. It is more verbose and versatile. I do not see a reason to use both options at the same time – just use one of them, whichever you like.

When using Mounts option:

Target is the container path, in our case /blobroot or C:/BlobRoot

Source is volume name OR host path, in this case msBlob OR /var/opt/msblob

Type is “volume” if msBlob is used, OR “bind” if /var/opt/msblob is used

Example:

"HostConfig":{

"Mounts": [

{"Target": "/blobroot", "Source": "msBlob", "Type": "volume"}

],

"PortBindings":{

"11002/tcp":[{"HostPort":"11002"}]

}

}

When using Binds option:

Example:

"HostConfig":{

"Binds":[

"msBlob:/blobroot"

],

"PortBindings":{

"11002/tcp":[{"HostPort":"11002"}]

}

}

Ad. "Do you agree adding the creation of a new volume in the create options is a good idea?"

Yes, you should create a custom volume and keep using it after possible redeployments if you want to keep using the blobs created before redeployment. Docker creates a volume for you if you specify the name of a non-existing volume.

Ad. "Do you agree the use of “latest” as version indication in the name of the module is less optimal?"

At the time of releasing this module, the IoT team recommended we use the :latest tag.

Let me know if it helps. We'll look into improving the doc so it's clearer how the binds options should be used.
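The correspondence between a Binds entry and a Mounts entry described in this comment can be sketched in Python as follows. This is only an illustration under stated assumptions: the function name bind_to_mount is hypothetical, only Linux-style paths are handled (a Windows path such as C:/BlobRoot contains a colon and would need different parsing), and the rule "source starts with / means host path" is a simplification.

```python
def bind_to_mount(bind: str) -> dict:
    """Convert a 'source:target' Binds entry into an equivalent Mounts entry."""
    source, sep, target = bind.partition(":")
    if not sep or not source or not target:
        raise ValueError(f"expected 'source:target', got {bind!r}")
    # Drop an optional trailing mode such as ':ro' (not used in this thread).
    target = target.split(":", 1)[0]
    # A source starting with '/' is treated as a host path (bind mount);
    # anything else is treated as a named volume.
    mount_type = "bind" if source.startswith("/") else "volume"
    return {"Target": target, "Source": source, "Type": mount_type}


if __name__ == "__main__":
    print(bind_to_mount("msBlob:/blobroot"))
    print(bind_to_mount("/srv/containerdata:/blobroot"))
```

A bare name such as "msBlob", with no container path after it, has no target and is rejected by this sketch.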

Thanks for the information.

I tried it again on Ubuntu 18.04 with a stable IoT Edge runtime (running 1.0.3 modules for agent and hub).

I deployed: mcr.microsoft.com/azure-blob-storage:latest

I used configuration as seen in the documentation:

{

"Env": [

"LOCAL_STORAGE_ACCOUNT_NAME=blobaccount",

"LOCAL_STORAGE_ACCOUNT_KEY=64 bytes long "

],

"HostConfig": {

"Binds": [

"/srv/containerdata:/blobroot"

],

"PortBindings": {

"11002/tcp": [

{

"HostPort": "11002"

}

]

}

}

}

But the blob module is not loaded on the edge:

2018-10-23 22:23:50.431 +00:00 [INF] - Executing command: "Create module blob"

2018-10-23 22:24:10.392 +00:00 [INF] - Executing command: "Start module blob"

2018-10-23 22:24:12.403 +00:00 [INF] - Plan execution ended for deployment 82

2018-10-23 22:24:12.813 +00:00 [INF] - Updated reported properties

2018-10-23 22:24:17.912 +00:00 [INF] - Module 'blob' scheduled to restart after 10s (05s left).

2018-10-23 22:24:18.122 +00:00 [INF] - Updated reported properties

2018-10-23 22:24:23.270 +00:00 [INF] - Module 'blob' scheduled to restart after 10s (00s left).

2018-10-23 22:24:28.377 +00:00 [INF] - Plan execution started for deployment 82

2018-10-23 22:24:28.381 +00:00 [INF] - Executing command: "Command Group: (

[Stop module blob]

[Start module blob]

[Saving blob to store]

)"

2018-10-23 22:24:28.381 +00:00 [INF] - Executing command: "Stop module blob"

2018-10-23 22:24:28.392 +00:00 [INF] - Executing command: "Start module blob"

2018-10-23 22:24:30.156 +00:00 [INF] - Executing command: "Saving blob to store"

2018-10-23 22:24:30.157 +00:00 [INF] - Plan execution ended for deployment 82

2018-10-23 22:24:35.380 +00:00 [INF] - Module 'blob' scheduled to restart after 20s (16s left).

2018-10-23 22:24:35.636 +00:00 [INF] - Updated reported properties

On the portal, I get Backoff code 132.

Docker info returns:

uno2271gsv@uno2271gsv-UNO-2271G-E2xAE:~$ docker info

Containers: 6

Running: 5

Paused: 0

Stopped: 1

Images: 6

Server Version: dev

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Native Overlay Diff: true

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file logentries splunk syslog

Swarm: inactive

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 468a545b9edcd5932818eb9de8e72413e616e86e

runc version: 69663f0bd4b60df09991c08812a60108003fa340

init version: fec3683

Security Options:

apparmor

seccomp

Profile: default

Kernel Version: 4.15.0-34-generic

Operating System: Ubuntu 18.04.1 LTS

OSType: linux

Architecture: x86_64

CPUs: 1

Total Memory: 3.747GiB

Name: uno2271gsv-UNO-2271G-E2xAE

ID: R4HP:HCE5:QGE5:V7EL:V2O4:LZBS:XFYH:DUJF:DPTB:I3PV:4IZX:GWEW

Docker Root Dir: /var/lib/docker

Debug Mode (client): false

Debug Mode (server): false

Username: svelde

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

WARNING: No swap limit support

@sandervandevelde Do you see anything in docker logs for the container (docker logs

Hello, thanks for looking into this.

The blob module is not downloaded yet. So "docker logs blob -f" results in an empty line.

The log of edgeHub shows nothing regarding the new module.

The log of edgeAgent only shows the scheduled restarts:

2018-10-24 22:17:22.393 +00:00 [INF] - Module 'blob' scheduled to restart after 02m:40s (02s left).

2018-10-24 22:17:27.543 +00:00 [INF] - Plan execution started for deployment 84

2018-10-24 22:17:27.547 +00:00 [INF] - Executing command: "Command Group: (

[Stop module blob]

[Start module blob]

[Saving blob to store]

)"

2018-10-24 22:17:27.547 +00:00 [INF] - Executing command: "Stop module blob"

2018-10-24 22:17:27.558 +00:00 [INF] - Executing command: "Start module blob"

2018-10-24 22:17:29.343 +00:00 [INF] - Executing command: "Saving blob to store"

2018-10-24 22:17:29.344 +00:00 [INF] - Plan execution ended for deployment 84

2018-10-24 22:17:34.634 +00:00 [INF] - Module 'blob' scheduled to restart after 05m:00s (04m:56s left).

2018-10-24 22:17:34.796 +00:00 [INF] - Updated reported properties

2018-10-24 22:17:39.954 +00:00 [INF] - Module 'blob' scheduled to restart after 05m:00s (04m:50s left).
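The restart delays in the edgeAgent logs in this thread (10s, 20s, later 02m:40s and 05m:00s) are consistent with a doubling backoff capped at five minutes. A minimal Python sketch of that pattern follows; it is inferred from these log lines only, not taken from the edgeAgent source, and the function name and default values are hypothetical.

```python
def restart_delay(attempt: int, base_s: int = 10, cap_s: int = 300) -> int:
    """Seconds to wait before restart number `attempt` (0-based)."""
    # Double the delay on every attempt, but never exceed the cap.
    return min(base_s * 2 ** attempt, cap_s)


if __name__ == "__main__":
    for attempt in range(7):
        minutes, seconds = divmod(restart_delay(attempt), 60)
        print(f"attempt {attempt}: {minutes:02d}m:{seconds:02d}s")
```

Under these assumptions the fifth delay is 160 seconds (02m:40s) and every later delay is 300 seconds (05m:00s), matching the values logged above.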

The image has actually been downloaded to my machine (just before adding the module to the configuration, I removed it from the local repository):

REPOSITORY TAG IMAGE ID CREATED SIZE

mcr.microsoft.com/azure-blob-storage latest 4e115b95db60 29 hours ago 214MB

The command "sudo ls -R /srv/containerdata" shows only a single line, "/srv/containerdata:", so the directory is empty.

Just to make sure I actually can download and start modules, I added mcr.microsoft.com/azureiotedge-simulated-temperature-sensor:1.0 to the iot edge. That tempSensor was downloaded and added without any issue.

Are there other places or logs to investigate?

Regards,

Sander

@sandervandevelde It looks like the module/container is successfully created, but during startup or soon after it crashes, leaving no trace in the docker logs. If it had not been created successfully, I imagine there would be a specific error entry in the edgeAgent logs. What is the output of docker ps -a, and what happens if you try to start this container manually (docker start blob)? If that doesn't hint at what the issue may be, you could try creating a container with an overridden entry point and starting the blob service manually:

$ docker run -it --rm -e "LOCAL_STORAGE_ACCOUNT_NAME=mytestaccount" -e "LOCAL_STORAGE_ACCOUNT_KEY=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==" --entrypoint bash mcr.microsoft.com/azure-blob-storage:latest

root@283855933f02:/azure-blob-storage# dotnet PlatformBlob.dll /blobroot

If there is an issue you should see errors on the output. If after half a minute the output is empty it means the service was able to start successfully. If this works I would look for an issue with edge runtime itself.

I'm sorry I'm not helping much. I'm not able to reproduce your issue - I used Ubuntu Server 18.04, edge runtime 1.0.3, azure-blob-storage:latest, and the config you posted above - it works fine for me.

Hello Magdalena,

I really appreciate your help. I would like to see this working too. I run IoT Edge on an Advantech Uno 2271G with Ubuntu 18.04 on it.

So before I reinstall my runtime, I have performed all the steps you described.

See the file blob.txt with the output.

It ends with this "Illegal instruction (core dumped)". If I knew where it is dumped, I would have added it too.

I will wait another day before reinstalling the runtime so I can check out other things if you are still interested.

Regards,

Sander

Some extra environment info:

uno2271gsv@uno2271gsv-UNO-2271G-E2xAE:~$ uname -a

Linux uno2271gsv-UNO-2271G-E2xAE 4.15.0-34-generic #37-Ubuntu SMP Mon Aug 27 15:21:48 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

uno2271gsv@uno2271gsv-UNO-2271G-E2xAE:~$ docker version

Client:

Version: 18.06.0-dev

API version: 1.37

Go version: go1.10.2

Git commit: daf021fe

Built: Mon Jun 25 21:07:53 2018

OS/Arch: linux/amd64

Experimental: false

Orchestrator: swarm

Server:

Engine:

Version: dev

API version: 1.38 (minimum version 1.12)

Go version: go1.10.3

Git commit: 09f5e9d

Built: Wed Sep 19 16:55:45 2018

OS/Arch: linux/amd64

Experimental: false

uno2271gsv@uno2271gsv-UNO-2271G-E2xAE:~$

Afterburner: I tried the same settings on a Windows 10 device running Linux containers and the same settings are accepted ([Initialize] Initialize MetaStore. Status:OK on this Windows device). So the issue on my Linux device doesn't seem to be related to the settings.

@sandervandevelde Hi, Sander, could you please do the following inside container

- run "ulimit -c unlimited"

- run "dotnet PlatformBlob.dll /blobroot"

See if a dump can be generated in the current directory. I found this info at this link:

https://stackoverflow.com/questions/47606532/illegal-instructions-core-dumped-error-on-launch-of-my-application-in-ubuntu-1

Also, could you check if the log file exists /blobroot/logs/platformblob.log? Thanks!

Hello qibozhu,

I tried your suggestions:

root@549c639e2d66:/azure-blob-storage# ulimit -c unlimited

root@549c639e2d66:/azure-blob-storage# dotnet PlatformBlob.dll /blobroot

Illegal instruction (core dumped)

Neither /blobroot nor /blobroot/logs/platformblob.log exists.

I also failed to find any core dump. I even tried to catch all core dumps in a special folder, as described in http://www.randombugs.com/linux/core-dumps-linux.html (the 1.2 part).

I wiped my IoT Edge runtime and tested with a new one (added a new IoT Edge registration in the portal). Still the same outcome (illegal instruction, no further logging found).

I got the same issue on a completely new XUbuntu image on another device:

name: blob

URI: mcr.microsoft.com/azure-blob-storage:latest

{

"Env": [

"LOCAL_STORAGE_ACCOUNT_NAME=blobaccount",

"LOCAL_STORAGE_ACCOUNT_KEY=iU6uTvlFrS [skip] oOVSW040nAOfPg=="

],

"HostConfig": {

"Binds": [

"/srv/containerdata:/blobroot"

],

"PortBindings": {

"11002/tcp": [

{

"HostPort": "11002"

}

]

}

}

}

I got the same behavior as above on the other device.

Can you confirm this is a sound configuration?

Is it this issue https://medium.com/@nprch_12/docker-exited-132-e38f9dd2cd0d?

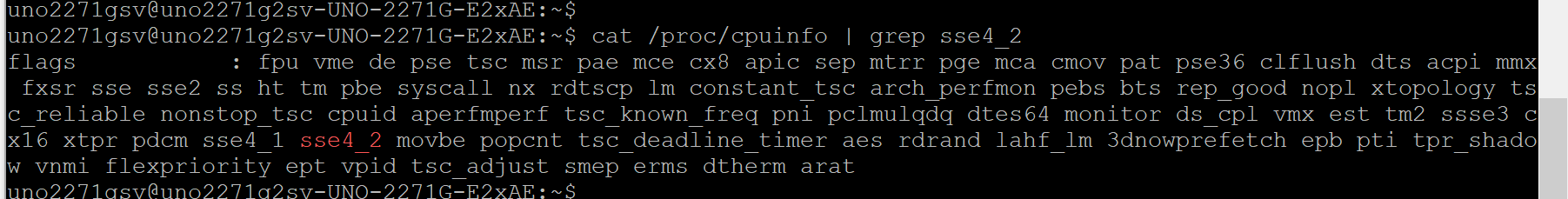
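The backoff code 132 reported in the portal fits the "Illegal instruction (core dumped)" message seen earlier in this thread: by the usual shell convention, an exit status above 128 means the process was terminated by signal number (status - 128), and signal 4 is SIGILL on Linux. A small Python sketch of that decoding; the function name describe_exit_code is hypothetical.

```python
import signal


def describe_exit_code(code: int) -> str:
    """Name the signal behind an exit code that follows the 128+N convention."""
    if code > 128:
        try:
            return signal.Signals(code - 128).name
        except ValueError:
            # The number does not correspond to a signal known on this platform.
            return f"unknown signal {code - 128}"
    return f"exit status {code}"


if __name__ == "__main__":
    print(describe_exit_code(132))
```

This only identifies the signal; it does not say which instruction the CPU rejected.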

Does sse4_2 show up in the output of cat /proc/cpuinfo | grep sse4_2?

Hello sudhirap,

The processor is an older model Atom CPU (an E3815 I read from the manual).

But yes, it seems sse4_2 is part of it:

uno2271gsv@uno2271g2sv-UNO-2271G-E2xAE:~ cat /proc/cpuinfo | grep sse4_2

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid aperfmperf tsc_known_freq pni pclmulqdq dtes64 monitor ds_cpl vmx est tm2 ssse3 cx16 xtpr pdcm sse4_1 sse4_2 movbe popcnt tsc_deadline_timer aes rdrand lahf_lm 3dnowprefetch epb pti tpr_shadow vnmi flexpriority ept vpid tsc_adjust smep erms dtherm arat

uno2271gsv@uno2271g2sv-UNO-2271G-E2xAE:~
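The same check can be scripted for more than one flag at a time. The Python sketch below parses the "flags" line of /proc/cpuinfo text and reports which wanted flags are absent. The function names are hypothetical, SAMPLE is a shortened excerpt of the flags line above, and "avx" is only an illustrative second flag: this thread does not establish which instructions the blob module actually requires.

```python
def cpu_flags(cpuinfo_text: str) -> set:
    """Return the CPU flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def missing_flags(cpuinfo_text: str, wanted) -> set:
    """Return the wanted flags that the CPU does not report."""
    return set(wanted) - cpu_flags(cpuinfo_text)


# Shortened excerpt of the flags line posted above.
SAMPLE = "flags : fpu vme sse sse2 ssse3 sse4_1 sse4_2 movbe popcnt aes rdrand"

if __name__ == "__main__":
    print(sorted(missing_flags(SAMPLE, {"sse4_2", "avx"})))
```

On a real device, the text would come from reading /proc/cpuinfo instead of the SAMPLE string.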

@sandervandevelde Thanks for your patience with this. Your configuration looks good. It may be a product bug. I prepared a new container image, could you please give it a try and let me know if it works for you?

Image URI: mcr.microsoft.com/azure-blob-storage:1.0.1-linux-amd64

Simple check:

$ docker run -it --rm -e "LOCAL_STORAGE_ACCOUNT_NAME=mytestaccount" -e "LOCAL_STORAGE_ACCOUNT_KEY=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==" --entrypoint bash mcr.microsoft.com/azure-blob-storage:1.0.1-linux-amd64

root@283855933f02:/azure-blob-storage# dotnet PlatformBlob.dll /blobroot

If it works it should display just a couple of log lines like this:

[2018-11-02 19:16:20.050] [backend] [info] [Tid:6] [MetaStore.cc:95] [Initialize] Initialize MetaStore. Status:OK

[2018-11-02 19:16:20.123] [backend] [info] [Tid:6] Info: Hook TriggerReportLoad event.

Hello Magdalena,

No problem, I am curious about what's happening too.

I tried it on both Linux machines:

It failed on XUbuntu (I tested the deployment from the portal first):

uno2271gsv@uno2271g2sv-UNO-2271G-E2xAE:~$ sudo docker run -it --rm -e "LOCAL_STORAGE_ACCOUNT_NAME=mytestaccount" -e "LOCAL_STORAGE_ACCOUNT_KEY=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==" --entrypoint bash mcr.microsoft.com/azure-blob-storage:1.0.1-linux-amd64

root@64b1a431e17c:/azure-blob-storage# dotnet PlatformBlob.dll /blobroot

Illegal instruction (core dumped)

root@64b1a431e17c:/azure-blob-storage#

On Ubuntu 18.04 it fails too (here, the new blob image was not available yet):

uno2271gsv@uno2271gsv-UNO-2271G-E2xAE:~$ sudo docker run -it --rm -e "LOCAL_STORAGE_ACCOUNT_NAME=mytestaccount" -e "LOCAL_STORAGE_ACCOUNT_KEY=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==" --entrypoint bash mcr.microsoft.com/azure-blob-storage:1.0.1-linux-amd64

Unable to find image 'mcr.microsoft.com/azure-blob-storage:1.0.1-linux-amd64' locally

1.0.1-linux-amd64: Pulling from azure-blob-storage

802b00ed6f79: Already exists

c22b53ca7b3e: Already exists

45df784dcb8d: Already exists

36a24f5840c3: Already exists

00c6c7c563d3: Pull complete

b95e79f826bc: Pull complete

8ca9920cba47: Pull complete

1acdae1095c6: Pull complete

cc5a1e958282: Pull complete

9fc1d9264f32: Pull complete

Digest: sha256:8ffa46f55327790555c33c98cfdd1fdf3895e5d94157f9a96794e5be8b817222

Status: Downloaded newer image for mcr.microsoft.com/azure-blob-storage:1.0.1-linux-amd64

root@0d3cb6b65eaf:/azure-blob-storage# dotnet PlatformBlob.dll /blobroot

Illegal instruction (core dumped)

root@0d3cb6b65eaf:/azure-blob-storage#

I contacted the manufacturer too. Can you DM me on Twitter @svelde?
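An "Illegal instruction" crash is often, though not always, a sign that a binary uses CPU instructions the processor does not support. The thread does not confirm that this is the cause here, so the following is only a sketch for comparing a CPU's reported flags against a list of flags you suspect are required. The function name `missing_cpu_flags` and the choice of flags to test are assumptions.

```shell
# Sketch: report which CPU feature flags from a wanted list are absent.
# Assumption: the crash might relate to an instruction set the CPU lacks;
# the thread does not state the root cause.
missing_cpu_flags() {
  # $1: space-separated flags the CPU reports (the "flags" line of /proc/cpuinfo)
  # remaining arguments: the flags to look for
  local have=" $1 "
  local flag
  local missing=""
  shift
  for flag in "$@"; do
    case "$have" in
      *" $flag "*) ;;                      # flag is present
      *) missing="$missing $flag" ;;       # flag is absent
    esac
  done
  echo "${missing# }"
}

# Example use on the device itself (the flag list is only an illustration):
#   missing_cpu_flags "$(grep -m1 '^flags' /proc/cpuinfo | cut -d: -f2)" sse4_2 avx avx2
```

An empty result means every flag in the list is reported by the CPU. A non-empty result is a hint worth passing on to the image maintainers, not proof of the cause.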

@sandervandevelde Thanks for trying it out. At this point I don't see anything else we can try. We need to get that core dump to understand what the issue is.

@sandervandevelde I hope this helps if you want to collect the core dump:

According to this SO answer, core dump location is controlled by the host system.

- Check your host setting for core dump location or change it to something easy, like /cores (the linked answer explains how to do it)

- Start our image with additional core settings and an additional volume binding for the dump (change /cores below if you are using something else for the core dump location)

docker run --ulimit core=99999999999:99999999999 -v blobdump:/cores -e "LOCAL_STORAGE_ACCOUNT_NAME=mytestaccount" -e "LOCAL_STORAGE_ACCOUNT_KEY=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==" mcr.microsoft.com/azure-blob-storage:1.0.1-linux-amd64

- Find the dump on your host. First, check the location of your blobdump volume data.

$ sudo docker volume inspect blobdump

[

{

"CreatedAt": "2018-11-05T23:02:51Z",

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/blobdump/_data",

"Name": "blobdump",

"Options": null,

"Scope": "local"

}

]

The core dump should be in the Mountpoint location, e.g. /var/lib/docker/volumes/blobdump/_data.
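The two lookups in the steps above can be sketched as small helpers. On Linux the host's core dump location is set by `/proc/sys/kernel/core_pattern`; a value starting with `|` means dumps are piped to a helper program instead of being written to a path, which is common on Ubuntu and may be why the dump does not show up where expected. The function names below are made up for illustration.

```shell
# Sketch: interpret the host's core_pattern setting.
core_dir_from_pattern() {
  # $1: contents of /proc/sys/kernel/core_pattern
  case "$1" in
    '|'*) echo "piped" ;;        # handed to a helper program, not written to a path
    /*)   dirname "$1" ;;        # absolute path template: print its directory
    *)    echo "." ;;            # relative template: written to the process's working directory
  esac
}

# Sketch: pull the Mountpoint value out of `docker volume inspect` JSON on stdin.
volume_mountpoint() {
  sed -n 's/.*"Mountpoint": *"\([^"]*\)".*/\1/p'
}

# Example use on the host:
#   core_dir_from_pattern "$(cat /proc/sys/kernel/core_pattern)"
#   sudo docker volume inspect blobdump | volume_mountpoint
```

Docker can also print the mount point directly with `docker volume inspect --format '{{ .Mountpoint }}' blobdump`, which avoids parsing JSON by hand.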

It took some effort to get to the coredump and zip it, but:

Behold the coredump.zip

So what's next? :-)

@sandervandevelde Magdalena is out of office and she will be back in the middle of next week. It appears that we need her Linux machine where she created the container image to debug the dump. We will get back to you late next week. Sorry for the delay.

Hello @qibozhu ,

I can leave the same setup untouched until then. Meanwhile, if you need more information or if you want me to do some tests, just let me know.

Please DM me on Twitter @svelde regarding the device used.

@sandervandevelde Thanks for the dump, it was helpful in pinpointing the issue. I believe it should be fixed with this image: mcr.microsoft.com/azure-blob-storage:1.0.2-linux-amd64. Please try it out (e.g. like here) and let me know if it works.

Success!

@mbialecka Hello, the module finally keeps running:

uno2271gsv@uno2271g2sv-UNO-2271G-E2xAE:~$ sudo docker logs -f blob

[2018-11-15 18:26:00.005] [backend] [info] [Tid:1] [MetaStore.cc:95] [Initialize] Initialize MetaStore. Status:OK

[2018-11-15 18:26:00.114] [backend] [info] [Tid:1] Info: Hook TriggerReportLoad event.

Glad we got it working at last.

We will now proceed to close this thread. If there are further questions regarding this matter, please tag me in your reply. We will gladly continue the discussion and we will reopen the issue.

I can also confirm it works on another Linux setup (XUbuntu 18.04, Azure IoT Edge 1.0.4) on an Advantech UNO 2271G-AE.

We've updated our multi-arch manifests, and both the :latest and :1.0 tags should now point to the 1.0.2 version for the Linux amd64 platform.
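Since tags such as :latest and :1.0 can be re-pointed like this, a deployment that needs a known version may want to reference a full tag instead. A minimal sketch for spotting re-pointable tags in an image reference follows. The function names are made up, the list of "floating" tags is taken only from this thread, and digest references (`name@sha256:...`) are not handled.

```shell
# Sketch: extract the tag from an image reference such as registry/name:tag.
image_tag() {
  local ref="$1"
  local last="${ref##*/}"      # drop registry/path so a registry port is not read as a tag
  case "$last" in
    *:*) echo "${last##*:}" ;;
    *)   echo "latest" ;;      # Docker assumes :latest when no tag is given
  esac
}

# Sketch: return 0 when the tag is one this thread describes as re-pointable.
is_floating_tag() {
  case "$(image_tag "$1")" in
    latest|1.0) return 0 ;;
    *) return 1 ;;
  esac
}

# Example use:
#   is_floating_tag mcr.microsoft.com/azure-blob-storage:latest && echo "tag may move"
```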

@sandervandevelde @JeeWeetje Can you please educate us about your blob storage scenarios? What workload needs this module and what is your end-to-end goal?

At this point, I am evaluating the capabilities of Blob storage on the Edge. I was hoping to fill blobs directly with messages using the routes. Once a certain limit is reached, I was hoping to send the blobs to the cloud, as an affordable way of persisting large amounts of raw data locally and then uploading them.

I also want to check whether this could be used together with a camera for cognitive services, to send certain images to the cloud for further training of my model there (building a larger training set).