Amphtml: I2I: OWNERS-bot Reviewer Selection Algorithm

OWNERS Reviewer Selection Algorithm

Overview

We’d like the OWNERS bot to automatically assign reviewers to PRs to give them OWNERS coverage.

Current state

No reviewers are assigned. The bot leaves a comment suggesting reviewers. If any owner exists who can approve all files in the PR, they are suggested; otherwise, the bot lists possible owners for each file.

Concerns

- It must provide a reviewer set that has full ownership coverage over the files in the PR

- It should not spam high-level owners just because they can approve the set of all files

- It should not add too many reviewers to a single PR, since then each one may not feel like they have much responsibility over it or may ignore it

Proposed Algorithm

Description

Inputs: a map from files needing approval to the nearest ownership subtree (using this ownership tree structure)

- Select the set of ownership rules at the greatest depth in the tree and union all owners; this is the set of potential reviewers.

- For each potential reviewer, count the number of files they can approve, and select whichever potential reviewer could cover the most files.

- Remove all files from the set that can be approved by the new reviewer, and repeat until all files in the PR have at least one owner in the reviewer set.

Summarized as: Greedily request reviews from the deepest owner who owns the most files until all files are covered.

Pseudo-code

Written in Python due to its clean syntax for list/set operations.

# Constructing the filename-to-subtree map

def buildFileTreeMap(filenames, ownersTree):

return {filename: ownersTree.atPath(filename) for filename in filenames}

# Part 1

def reviewersForTrees(deepestTrees):

reviewers = set()

for tree in deepestTrees:

for rule in tree.rules:

reviewers.add(*rule.owners)

return reviewers

def findPotentialReviewers(fileTreeMap):

nearestTrees = fileTreeMap.values()

maxDepth = max(tree.depth for tree in nearestTrees)

deepestTrees = filter(nearestTrees, lambda tree: tree.depth == maxDepth)

return reviewersForTrees(deepestTrees)

# Part 2

def filesOwnedByReviewer(fileTreeMap, reviewer):

return [filename for filename, tree in fileTreeMap.items()

if tree.hasOwner(reviewer)]

def reviewersWithMostFiles(reviewerFilesMap):

mostFilesOwned = max(reviewerFilesMap.values().map(len))

return filter(reviewerFileMap.items,

lambda reviewer, files: len(files) == mostFilesOwned)

def pickBestReviewer(fileTreeMap):

reviewerSet = findPotentialReviewers(fileTreeMap)

reviewerFilesMap = {reviewer: filesOwnedByReviewer(fileTreeMap, reviewer)

for reviewer in reviewerSet}

return choice(reviewerWithMostFiles(reviewerFilesMap))

# Part 3

def pickReviweers(fileTreeMap):

reviewers = []

while len(fileTreeMap):

nextReviewer, coveredFiles = pickBestReviewer(fileTreeMap)

reviewers.append(nextReviewer)

for filename in coveredFiles:

del fileTreeMap[filename]

return reviewers

Analysis

This implementation will address all concerns listed above. It will avoid spamming high-level owners where possible, since it will try to loop in the lowest-level owners first. It will not add too many reviewers to most PRs; see below analysis on why. It will always add reviewers until the PR has full ownership coverage.

It can be useful to think of PRs in terms of ownership “zones” where an ownership zone is defined as the set of all files directly under a particular OWNERS file. The most naive algorithm would add one reviewer from each OWNERS zone, meaning no PR could ever need more reviewers than the number of ownership zones it touches. The zone count is therefore the “worst possible” number of reviewers an algorithm could select.

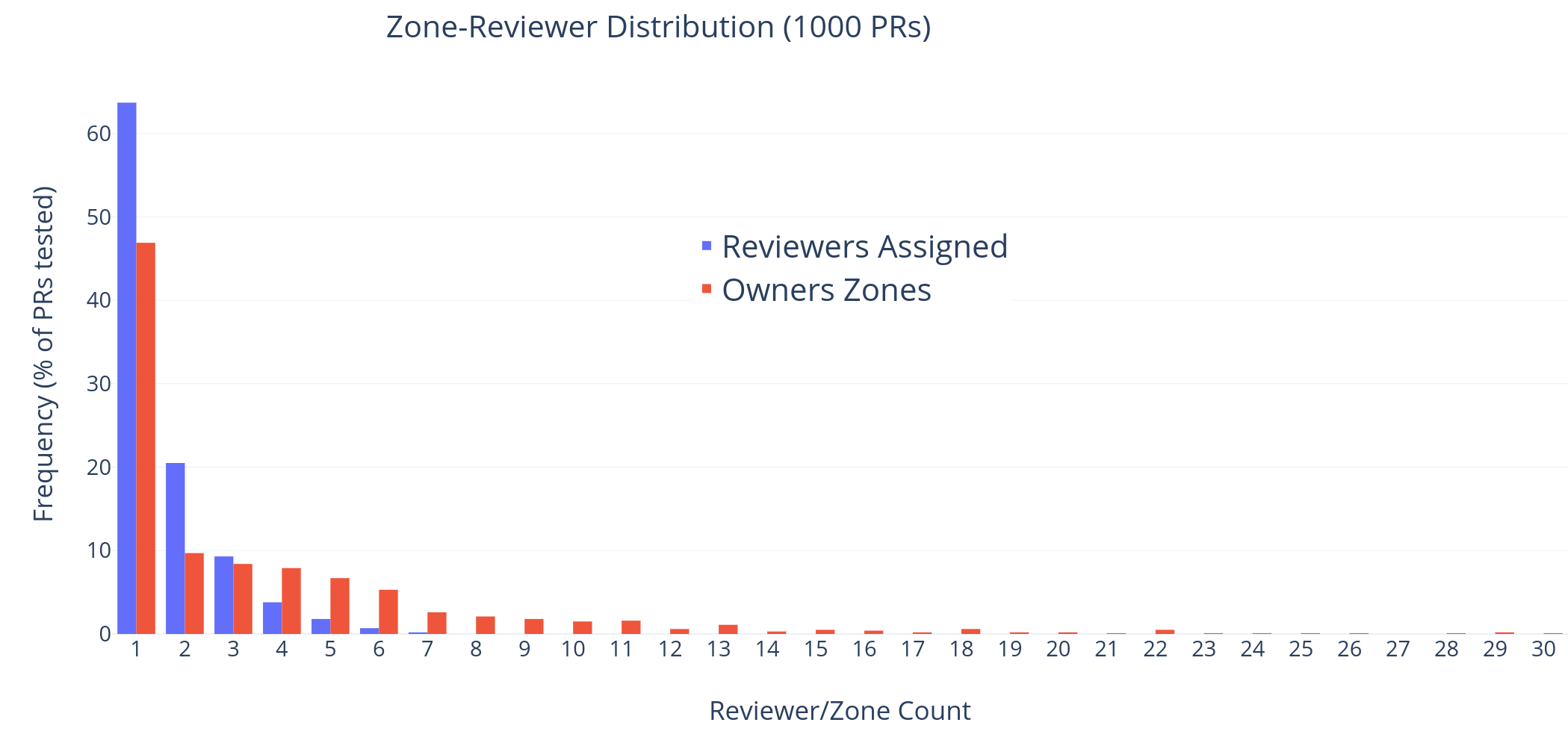

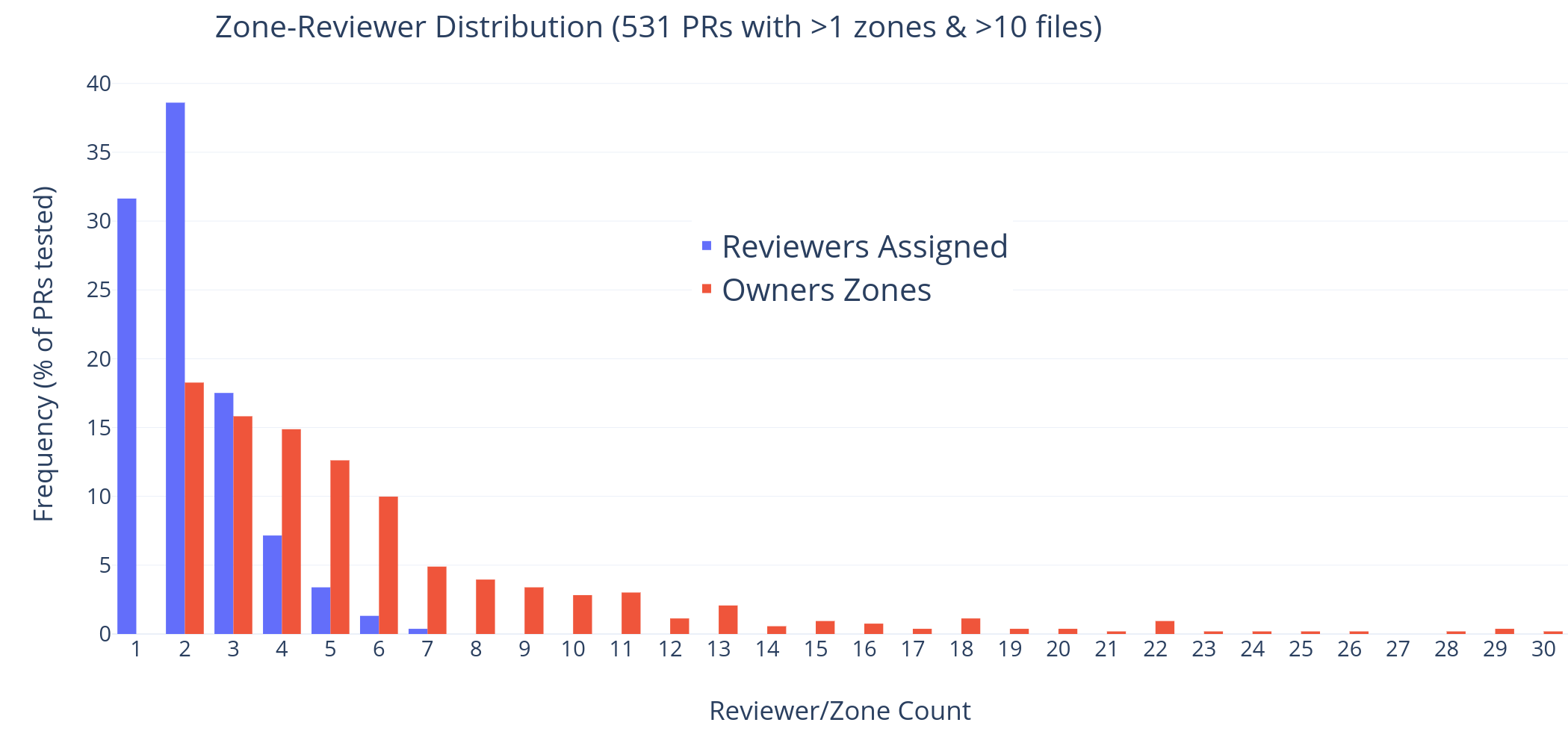

I ran a script to scrape the last 1000 PRs and determine for each the number of ownership zones and the number of reviewers assigned by the proposed selection algorithm. It skipped processing for all PRs with only 1 zone or <10 files, as these would be fairly uninteresting. The plots below show the zone- and reviewer-count distribution; the first shows the distribution with the “trivial” PRs included, while the second excludes that ~47% of the PRs.

The distributions reveal that while PRs vary in size, with larger PRs sometimes having 20+ ownership zones, the selection algorithm never suggested more than 7 reviewers. For ~84% of all PRs (or ~70% of all non-trivial PRs), three or fewer reviewers are needed for full ownership approval; for ~93% of all PRs (or ~89% of all non-trivial PRs), at most four reviewers are needed for full ownership approval.

The third distribution plot below shows the frequency of zone and reviewer counts for PRs with varying numbers of files, where frequency is represented by the size of the marker. It can be seen that while the number of ownership zones fluctuates with some correlation to the size of the PR, the number of reviewers required for ownership coverage is consistently far lower, and does not seem to trend upward with PR size for real PRs. On average, the selection algorithm produces a reviewer set with one-third as many reviewers as ownership zones.

Evaluation & Adjustments

Upon manual inspection of some of the PRs with higher numbers of suggested reviewers, most were actually reviewed by a single top-level owner, such as jridgewell or dvoytenko. On one hand, this could be considered a better selection, since it lowers the number of required reviewers versus the bottom-up approach. On the other, there may be many cases where we’d rather not depend on the top-level owners to approve all such large PRs; or, when they are needed, we may prefer they don’t have to review all changes in the PR. There are a couple adaptations we could make to better handle PRs which necessarily require high-level approval.

1) Auto-backoff the least useful reviewers

Suppose the algorithm builds up a set of 6 reviewers, but we’ve decided we want no more than 3 reviewers. In all but rare degenerate cases, there are some reviewers in the suggested set whose ownership is a subset of another reviewer added later on. Progressively removing the reviewers who provide no incremental coverage over the rest of the reviewer set could reduce the size of the reviewer set and eliminate redundant reviewers.

Pros:

- Smaller reviewer set

Cons:

- Puts more files under the review of higher-level owners

- Does not utilize lower-level owners who may have a better understanding of code changes to their specific directories

- More complex to understand the final reviewer set

2) Incrementally add reviewers

One line of reasoning behind keeping the reviewers list small is that, when many reviewers are added at once, each one may not feel responsibility for the PR or may ignore it. Instead of adding all reviewers at once, the bot could add one or two reviewers at a time, adding the next reviewer each time an approval is granted.

Pros:

- Allows owners to iteratively approve the files under their ownership

- Allows author to make code review changes for lower-level files before involving high-level owners

- Avoids notifying high-level owners until they are needed

Cons:

- Reviewers will generally try to review the whole PR unless told otherwise (and may need to, for context)

- May solicit unnecessary reviews from lower-level owners when high-level owners may review it anyway

- Adds complexity to the bot (the need to monitor for reviews and update the reviewer selection)

3) Give up

If the reviewer selection algorithm produces more than some hard limit (say, four reviewers), just give up on automatically assigning reviewers and instead leave a comment with information about suggested reviewers and who might approve what. Since most of these “hard” PRs end up being reviewed by a single top-level owner in practice, this lets the author determine the best course of action and who to involve.

Pros:

- Doesn’t make bad guesses

- Let’s complex, wide-ranging PRs get blanked-approved by a high-level owner whose approval would likely be solicited anyway

- Provides suggested reviewers the author can choose to add or ignore

Cons:

- Author may be expecting reviewer(s) to be auto-assigned

- Puts the responsibility on the author to determine who should be added

/cc @ampproject/wg-approvers

All 5 comments

Update: After running the analysis on the last 2600 PRs, there were only 14 PRs requiring more than 3 reviewers.

Graphs were not re-created with the new data, as they would add very little and are harder to read since the lower numbers quickly dominate, making it tough to scale in a way that is easy to see

Response to questions in design review:

OWNERS.yaml syntax example file: https://github.com/ampproject/amp-github-apps/blob/master/owners/OWNERS.example.yaml

@honeybadgerdontcare : Looks like your needs would be covered by (ignore backslash, added to prevent tagging):

- One rule in

amphtml/validator/OWNERS.yaml:- @ampproject/wg-infra - One rule in

amphtml/OWNERS.yaml:- "**/validator-*.protoascii": @ampproject/wg-infra

@alanorozco : Do you see a need that would not be satisfied with this syntax?

@rcebulko It would be @ampproject/wg-caching for validator related items. And yes, those rules will cover what we need.

@rcebulko LGTM!

This has been reviewed, implemented, and deployed. Looks like this I2I can be closed. Nice work @rcebulko!

Most helpful comment

Update: After running the analysis on the last 2600 PRs, there were only 14 PRs requiring more than 3 reviewers.

Graphs were not re-created with the new data, as they would add very little and are harder to read since the lower numbers quickly dominate, making it tough to scale in a way that is easy to see