Webpack-dev-server: Hard to catch error on proxying in Node.js 10

- Operating System: macOs 10.14.1

- Node Version: 10.15.0

- NPM Version: yarn 1.13.0

- webpack Version: 4.23.1

- webpack-dev-server Version: 3.1.14

- [X] This is a bug

- [ ] This is a modification request

Code

The bug I'm reporting here showed up after switching to Node.js v10.x. I believe there are differences in how the core net module raises exceptions, or in its policy toward unhandled error events.

To repro, you need to proxy a connection that uses WebSockets, allow it to connect using Chrome, then restart the target server of the proxy, for example with Nodemon. I'd like to avoid creating a minimal repo for this if possible, since it's a bit complex and there seems to be a nice trail of tickets/changelogs.

This will cause the WDS process to die due to unhandled error event from ECONNRESET with the following stack trace:

[HPM] Error occurred while trying to proxy request /foo from localhost:9090 to https://localhost:3333 (ECONNREFUSED) (https://nodejs.org/api/errors.html#errors_common_system_errors)

events.js:167

throw er; // Unhandled 'error' event

^

Error: read ECONNRESET

at TLSWrap.onStreamRead (internal/stream_base_commons.js:111:27)

Emitted 'error' event at:

at emitErrorNT (internal/streams/destroy.js:82:8)

at emitErrorAndCloseNT (internal/streams/destroy.js:50:3)

at Object.apply (/Users/kieran/work/Atlas/ui/node_modules/harmony-reflect/reflect.js:2064:37)

at process._tickCallback (internal/process/next_tick.js:63:19)

Before upgrading to Node.js 10, I believe something similar was happening but the exception wasn't fatal.

After some debugging/ticket searching it seems that what changed is that it is now an error for the https module to encounter an exception without a registered handler, which impacts the behavior of your dependency node-http-proxy, like this ticket describes.
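For readers wondering why a missing handler is fatal: Node's EventEmitter throws when an 'error' event is emitted and no listener is registered. The sketch below shows this with a plain EventEmitter standing in for the socket. The names fakeSocket and reset, and the hand-built ECONNRESET error, are illustrative assumptions and are not taken from the WDS code. On a real socket the emit comes from an internal callback rather than from inside a try/catch in user code, which is presumably why it ends the process.

```javascript
const { EventEmitter } = require('events');

// Stand-in for a socket: any EventEmitter treats 'error' the same way.
const fakeSocket = new EventEmitter();
const reset = Object.assign(new Error('read ECONNRESET'), { code: 'ECONNRESET' });

// Without a listener, emit('error') throws the error object itself.
let thrown = null;
try {
  fakeSocket.emit('error', reset);
} catch (err) {
  thrown = err;
}
console.log('without listener, thrown:', thrown && thrown.code);

// With a listener, the same emit is delivered to the handler instead.
let handled = null;
fakeSocket.on('error', (err) => {
  handled = err;
});
fakeSocket.emit('error', reset);
console.log('with listener, handled:', handled && handled.code);
```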

Debugging the error, I discovered that the WDS proxy config is really http-proxy-middleware config, so it is possible to suppress the error and keep the server up by adding an onError handler to the proxy config (noted here for future readers). However, this was fairly difficult to debug, and ideally this package would register some sort of handler for this exception so that it isn't fatal.

Willing to submit a PR; the change is very straightforward. It involves modifying this code:

// Proxy websockets without the initial http request

// https://github.com/chimurai/http-proxy-middleware#external-websocket-upgrade

websocketProxies.forEach(function (wsProxy) {

this.listeningApp.on('upgrade', wsProxy.upgrade);

}, this);

To be like:

// Proxy websockets without the initial http request

// https://github.com/chimurai/http-proxy-middleware#external-websocket-upgrade

websocketProxies.forEach(function (wsProxy) {

this.listeningApp.on('upgrade', (req, socket, ...args) => {

socket.on('error', (err) => console.error(err));

return wsProxy.upgrade(req, socket, ...args);

};

}, this);

Expected Behavior

The server should stay alive when a client (on either side of the proxy) disconnects.

Actual Behavior

Server dies due to no handler for ECONNRESET

For Bugs; How can we reproduce the behavior?

Use Node 10.

All 68 comments

@kmannislands Can you create a minimal reproducible test repo? It is hard to fix without one, and it may never be fixed without one (so the issue will be closed)

@evilebottnawi Minimal repro is more effort here than submitting a PR.

I modified my ticket to include the specific code that needs to be changed and how. Not sure what your policy on exception handling/logging is.

I'm sure you'll have others trip over this as they go to 10.x

@kmannislands A fatal error shouldn't crash webpack-dev-server, so we will accept a PR, but we need a test case or a minimal reproducible test repo to ensure we fix this problem and avoid regressions in the future

@kmannislands we need a minimal reproducible test repo for manual testing (this is hard to test); it would speed up solving the problem

Can confirm we are also experiencing this error proxying websockets when the server doesn't gracefully respond to the connection or it closes abruptly. Only happens on Node 10.x

@mjrussell can you create minimum reproducible test repo?

Friendly ping guys

Yes it's still an issue.

No I can't produce a minimal repro, concurrency is hard.

Please just fix it :heart:

@cha0s it is hard to fix something without any details, can you provide a screenshot of the problem?

@evilebottnawi It seems @kmannislands provided the console output of the error, so pretty much equivalent to a screenshot. Basically, you start the Webpack bundler with proxying, and if any client disconnects it crashes the whole process for the reason mentioned in the original issue, above:

Happens on Node v10.x.

@mathieumg Only Node v10.x? Node.js v12 has been released today so I want to know what occurs using v12. Thanks.

@hiroppy I presume it will be the same on v12, but I can install it and test it too. Either way, this still needs to be fixed for v10.

@hiroppy A separate bug prevents me from testing it in v12, but I'm not on the latest version of the Dev Server either:

Starting the development server...

internal/buffer.js:928

class FastBuffer extends Uint8Array {}

^

RangeError: Invalid typed array length: -4095

at new Uint8Array (<anonymous>)

at new FastBuffer (internal/buffer.js:928:1)

at Handle.onStreamRead [as onread] (internal/stream_base_commons.js:165:17)

at Stream.<anonymous> (/Users/mathieumg/project/frontend/node_modules/handle-thing/lib/handle.js:120:12)

at Stream.emit (events.js:201:15)

at endReadableNT (/Users/mathieumg/project/frontend/node_modules/readable-stream/lib/_stream_readable.js:1010:12)

at processTicksAndRejections (internal/process/task_queues.js:83:17)

error Command failed with exit code 1.

info Visit https://yarnpkg.com/en/docs/cli/run for documentation about this command.

see https://github.com/nodejs/node/issues/24097

OK, let's talk about this in another issue.

@mathieumg Could you submit a reproducible repo?

Yes I can try to muster up a repro repo when I have a spare second sometime this week!

I tried reproducing this error in a minimal repo, but I can't. Tried with NodeJS 10, 11 and 12. NodeJS 12 doesn't work with WDS though (getting a weird error message different than the one posted above - maybe because I'm on windows).

Maybe you guys can use the repo I created. I have definitely seen this behavior in our project using NodeJS 11.x as well:

IIRC, this also may have depended on a specific chrome version. Perhaps chrome has changed its behavior again.

To clarify my original ticket, this is non-blocking because WDS allows users to pass through config to the proxy package. So if anyone reading this is blocked, all you need to do is add an error handler on upgrade to the WDS config in your webpack config:

{

// ... other config

devServer: {

proxy: {

'/your_path': {

target: 'https://localhost:XXX',

ws: true,

// Add this:

onError(err) {

console.log('Suppressing WDS proxy upgrade error:', err);

},

},

},

},

}

hm, i think we should add logger by default as described above, feel free to send a PR, it is easy to fix

This has just started happening to me but I'm unsure of the change that caused it.

- Operating System: macOs 10.14.5

- Node Version: 10.15.3

- NPM Version: 6.9.0

- webpack Version: 4.31.0

- webpack-dev-server Version: 3.3.1

The onError addition above doesn't make a difference; I still get the crash with:

events.js:174

throw er; // Unhandled 'error' event

^

Error: read ECONNRESET

at TCP.onStreamRead (internal/stream_base_commons.js:111:27)

Emitted 'error' event at:

at emitErrorNT (internal/streams/destroy.js:82:8)

at emitErrorAndCloseNT (internal/streams/destroy.js:50:3)

at process._tickCallback (internal/process/next_tick.js:63:19)

It happens at the point I try to open a websocket through the proxy (it doesn't always happen):

proxy: {

"/api": {

target: "http://localhost:8181",

},

'/api/realtime': {

target: "ws://localhost:8181",

ws: true

},

"/auth": {

target: "http://localhost:8181",

},

}

@markmcdowell can you create minimum reproducible test repo?

I got the same error. What actually helped me out was:

process.on('uncaughtException', function (err) {

console.error(err.stack);

console.log("Node NOT Exiting...");

});
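A catch-all handler like the one above also hides unrelated bugs. A narrower variant, sketched here as an assumption rather than a recommended fix, ignores only errors whose code is ECONNRESET and still exits on anything else. isConnectionReset and handleUncaught are hypothetical helper names, and the exit parameter exists only so the behaviour can be checked without ending the process.

```javascript
// Hypothetical predicate: which uncaught errors are safe to log and ignore.
function isConnectionReset(err) {
  return Boolean(err) && err.code === 'ECONNRESET';
}

// Returns true if the error was ignored, false if the process was asked to exit.
function handleUncaught(err, exit = process.exit) {
  if (isConnectionReset(err)) {
    console.error('Ignoring connection reset:', err.message);
    return true;
  }
  console.error(err && err.stack ? err.stack : err);
  exit(1);
  return false;
}

process.on('uncaughtException', (err) => handleUncaught(err));
```

Node's own documentation describes 'uncaughtException' as a crude last resort, so a handler attached to the socket itself remains the cleaner option where it is available.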

Found the solution on StackOverflow. Thanks to Ross the Boss.

Friendly ping, somebody still have the problem? Please create reproducible test repo, thanks

@evilebottnawi I tried to force install latest webpack-dev-server version (I'm using create-react-app) and it seems that error is still there.

I might have a try in making an example (it needs some setup work), although personally @scipper solution is fine for me.

@alexkuz will be great if you help to reproduce the problem, because @scipper solution is not good, I think we don't catch some errors, so we need fix it

@evilebottnawi ok, here it is (based on CRA):

https://github.com/alexkuz/wds-ws-proxy-example

To reproduce:

- install deps with yarn install

- run server with node src/server.js

- open dev app with yarn start

Server returns 502 error instead of proper WS response (turns out that's what Nginx does on my server when I'm for example restarting container with my actual WS server), which leads to ECONNRESET error.
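A backend with the behaviour described above can be approximated with Node's built-in http module. This is a rough sketch and not the code from the linked repo: createBadGatewayBackend is a hypothetical name, and whether it triggers the same ECONNRESET on a given setup is not verified here.

```javascript
const http = require('http');

// Hypothetical stand-in for a WebSocket backend behind a gateway that is
// restarting: every upgrade request is answered with 502 and then closed.
function createBadGatewayBackend() {
  const server = http.createServer((req, res) => {
    // Plain HTTP requests get a normal response.
    res.end('ok');
  });

  server.on('upgrade', (req, socket) => {
    // Keep this process alive if the peer resets the connection first.
    socket.on('error', () => {});
    socket.end('HTTP/1.1 502 Bad Gateway\r\nConnection: close\r\n\r\n');
  });

  return server;
}
```

Calling createBadGatewayBackend().listen(port) with a port of your choice, and pointing the proxy target at it, should give the dev server a target that refuses every WebSocket handshake.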

@alexkuz your problem is not related to the original problem :confused: What is the use case? Why do you use this configuration? You can send a fix, but it is not high priority, because it is an edge case.

Why not use the real host for ws instead of proxying?

your problem is not related to the original problem 😕

you may be right, I haven't read original issue very carefully tbh, sorry 😬 The error looks very similar though so it might be related.

Why not use the real host for ws instead of proxying?

You know what, I just tried to connect directly and it works 😅 I configured this a long time ago and I'm not sure why (or if) it really didn't work before. Something with CORS, maybe?

Still, I think catching that 502 error wouldn't hurt, but yeah, it's probably a low priority issue.

Ah, I think the idea was to not use server url explicitly in source code, it was easier to setup proxy than providing url via env variable or something.

Something with CORS, maybe?

I think not, a problem in ws implementation, i think you can look at here https://github.com/websockets/ws/issues/1692

@alexkuz Yes, anyway feel free to send a PR, i think it should be easy to fix :+1:

Update: i think env variable is better to use in your case, it is more flexible and faster

Issue still exists in Node 13. And isn't specific to websockets, happens even without websocket use.

Is an issue for me as well with proxied websockets to our backend server... this is bad because restarting the backend server always kills webpack dev server...

The only solution that worked for us for now is the one catching the exception (thanks @scipper!)

@ueler Can you create reproducible test repo?

We had the same problem and worked around the problem by downgrading webpack-dev-server to v3.9.0 instead of using v3.11.0

In our project adding transportMode as 'ws' helped.

@rameshsunkara Please create reproducible test repo

Same issue https://github.com/chimurai/http-proxy-middleware/issues/44#issuecomment-655948463

Somebody can create reproducible test repo or steps to reproduce?

It is too hard, it requires a connectable test Websocket server and can be started or stopped.

@yoyo837 Just write your steps

Can you imagine going a year and a half commenting over and over expecting people to be able to reproduce some obscure race condition instead of just taking the first comment which had code completely laid out to fix this? A year and a half ago.

There's no way you can convince me that all of these comments over the last year and a half constituted less effort than just rolling in the fix.

I worked around this long ago by simply avoiding this broken functionality. I feel for anyone still dealing with this after a year and a half.

@evilebottnawi

"webpack": "^4.43.0",

"webpack-dev-server": "^3.11.0",

proxy config in webpack options:

proxy: {

'/v3/websocket/socketServer.do': {

target: 'http://localhost:8080/',

changeOrigin: true,

ws: true,

},

'/v3': {

target: 'http://localhost:8080/',

changeOrigin: true,

},

},

Steps:

- Start java or any other server (websocket server)

- Start dev server (websocket client)

- Open the browser using the dev URL, and the websocket code in it will connect to the server

- Shut down the server forcefully, the client attempts to reconnect at 3 second intervals

- Don't close the browser or tabs and wait a few minutes or more time

- The dev server crashes

@cha0s why be toxic? If you read, you will see that there were different problems, I ask this to understand in detail and fix all possible problems, and not just take and close the issue. And it’s very disappointing to hear such words when you try fix and improve your work

@yoannmoinet big thanks!

/cc @Loonride Maybe you have time to look at this. I will have time only on the next week

Will look later, I'm working on many things

Thanks for helping, no rush

I apologize for my tone. I will just say that it is always possible for perfect to be the enemy of good enough. It's better (for your loving users) to fix and then keep the issue open to perfect the fix if you believe the very first comment's fix is wrong. I haven't seen a good argument for why it is the wrong fix, and I suspect it will be the ultimate resolution.

@cha0s because any line of code should be tested, it can have side effects when you adding lines without test, like regressions, new problems and etc, so I ask developers provide more information for tests

The case I'm facing seems pretty typical. I have an Angular client app served by webpack-dev-server (which is the standard Angular proxy config). I use the proxy to expose the backend to the client app. Recently I added a WebSocket connection (in particular to handle Apollo GraphQL subscriptions over WebSockets), which is proxied.

During development on each backend restart the proxy dies due to this issue. After manually applying proposed change from the issue description to Server.js the proxy stays alive and client is able to reconnect on subsequent backend restarts.

For anyone still coming across this issue, the initial fix code didn't work for me. There was a missing parenthesis; the correct code is:

websocketProxies.forEach((wsProxy) => {

this.listeningApp.on('upgrade', (req, socket, ...args) => {

socket.on('error', (err) => console.error(err));

return wsProxy.upgrade(req, socket, ...args);

});

}, this);
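The corrected change can be exercised outside webpack-dev-server. The following is a minimal sketch, assuming only that the proxy's upgrade function takes (req, socket, head). withSocketErrorHandler, fakeProxy and fakeSocket are hypothetical names used for illustration and are not part of WDS or http-proxy-middleware.

```javascript
const { EventEmitter } = require('events');

// Hypothetical helper: wraps an upgrade handler so that every upgraded
// socket gets an 'error' listener before the proxy touches it.
function withSocketErrorHandler(upgrade, log = console.error) {
  return function onUpgrade(req, socket, ...args) {
    socket.on('error', (err) => log(err));
    return upgrade(req, socket, ...args);
  };
}

// Simulated usage with stand-ins for the proxy and the socket.
const seen = [];
const fakeProxy = { upgrade: (req) => 'upgraded ' + req.url };
const fakeSocket = new EventEmitter();
const handler = withSocketErrorHandler(fakeProxy.upgrade, (err) => seen.push(err.message));

const result = handler({ url: '/ws' }, fakeSocket, Buffer.alloc(0));

// With the listener attached, this is logged instead of thrown.
fakeSocket.emit('error', new Error('read ECONNRESET'));
console.log(result, seen);
```

In Server.js the wiring would presumably be this.listeningApp.on('upgrade', withSocketErrorHandler(wsProxy.upgrade)), though that is untested here.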

This error can be caught using the onProxyReqWs event in the config:

devServer: {

proxy: {

'/my-websocket-path': {

target: 'wss://my.ip',

xfwd: true,

ws: true,

secure: false,

onProxyReqWs: (proxyReq, req, socket, options, head) => {

socket.on('error', function (err) {

console.warn('Socket error using onProxyReqWs event', err);

});

}

}

}

}

Awesome workaround! thanks @maknapp for sharing!

This error can be caught using the onProxyReqWs event in the config:

I can reproduce this error consistently by setting the target to nginx to then proxy_pass to nowhere (e.g. a destination that is being restarted).

events {}

http {

proxy_http_version 1.1;

# This is intended to point to nothing so that

# the request to /ws fails.

upstream _serverThatDoesNotExist {

server 127.0.0.1:7070;

}

server {

listen 7071 default;

root /var/www;

location /ws {

proxy_pass http://_serverThatDoesNotExist;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "Upgrade";

}

}

}

devServer: {

proxy: {

'/ws': {

target: 'ws://127.0.0.1:7071',

xfwd: true,

ws: true,

secure: false,

onProxyReqWs: (proxyReq, req, socket, options, head) => {

socket.on('error', function (err) {

console.warn('Socket ERROR using onProxyReqWs', err);

});

}

}

}

}

A websocket repeatedly attempting to connect to the webpack-dev-server will produce the following every time:

Error: read ECONNRESET at TLSWrap.onStreamRead (internal/stream_base_commons.js:209:20)

If the proxy target is set directly to something that does not exist (e.g. a destination that is being restarted), it will mostly get a connect error (which is caught by default) and sometimes get this same read error. It seems there is some race condition somewhere between connecting, reading, and the default error handling.

@maknapp Maybe do you want to send a fix, we can add default error handler

Should this be fixed on http-proxy-middleware instead of here? It already has a default error handler and seems like the more appropriate place to add another.

Yes and no. We have our own logger and we need the ability to set the output level in this case, so I think we need to set up our own logger here

Hi guys - I have the following webpack config, and intermittently WDS crashes with the below error.

devServer: {

proxy: {

'/api': 'http://gatekeeper',

ws: true,

},

contentBase: commonPaths.outputPath,

compress: true,

hot: true,

host: '0.0.0.0',

public: 'cloud.coreweave.test',

(I am running WDS inside of a container, inside of a minikube cluster/host, hence the public configuration option.)

[gatekeeper-frontend] Version: webpack 4.46.0

[gatekeeper-frontend] Time: 347ms

[gatekeeper-frontend] Built at: 03/04/2021 2:50:54 AM

[gatekeeper-frontend] 1 asset

[gatekeeper-frontend] Entrypoint main = main.js

[gatekeeper-frontend] [./src/App.jsx] 2.97 KiB {main} [built]

[gatekeeper-frontend] [./src/index.jsx] 705 bytes {main} [built]

[gatekeeper-frontend] + 302 hidden modules

[gatekeeper-frontend] ℹ 「wdm」: Compiled successfully.

[gatekeeper-frontend] events.js:291

[gatekeeper-frontend] throw er; // Unhandled 'error' event

[gatekeeper-frontend] ^

[gatekeeper-frontend]

[gatekeeper-frontend] Error: read ECONNRESET

[gatekeeper-frontend] at TCP.onStreamRead (internal/stream_base_commons.js:209:20)

[gatekeeper-frontend] Emitted 'error' event on Socket instance at:

[gatekeeper-frontend] at emitErrorNT (internal/streams/destroy.js:100:8)

[gatekeeper-frontend] at emitErrorCloseNT (internal/streams/destroy.js:68:3)

[gatekeeper-frontend] at processTicksAndRejections (internal/process/task_queues.js:80:21) {

[gatekeeper-frontend] errno: -104,

[gatekeeper-frontend] code: 'ECONNRESET',

[gatekeeper-frontend] syscall: 'read'

[gatekeeper-frontend] }

[gatekeeper-frontend] error Command failed with exit code 1.

Is there anything _obviously wrong_ with my configuration here?

@mecampbellsoup Have you tried this solution: https://github.com/webpack/webpack-dev-server/issues/1642#issuecomment-733826290 ?

By the way, when you say "intermittently", this should typically occur when the WS proxy connection drops (e.g. when the target proxy server restarts). Networking issues shouldn't be the norm, but could also be the culprit.

Ideally WDS would have this default error handler and we wouldn't have to write a custom one just to ignore the error - in fact, this is an expected error and it should never crash the server.

It seems to just happen at random - it seems like perhaps my WS client (in the browser) is disconnecting for some reason, kicking off the following state changes in my WDS server (the WS proxy backend):

cloud gatekeeper-frontend-66ffbc7dc4-vjkmw 0/1 Error 1 2m36s

cloud gatekeeper-frontend-66ffbc7dc4-vjkmw 0/1 CrashLoopBackOff 1 2m41s

cloud gatekeeper-frontend-66ffbc7dc4-vjkmw 0/1 Running 2 2m54s

cloud gatekeeper-frontend-66ffbc7dc4-vjkmw 1/1 Running 2 2m59s

cloud gatekeeper-frontend-66ffbc7dc4-vjkmw 0/1 Error 2 3m57s

cloud gatekeeper-frontend-66ffbc7dc4-vjkmw 0/1 CrashLoopBackOff 2 4m1s

cloud gatekeeper-frontend-66ffbc7dc4-vjkmw 0/1 Running 3 4m29s

cloud gatekeeper-frontend-66ffbc7dc4-vjkmw 1/1 Running 3 4m39s

After restarting, things generally work again for a while, until the next occurrence just a few short minutes later...

I added onError and onProxyReqWs handlers to my devServer.proxy config but the errors don't seem to be caught.

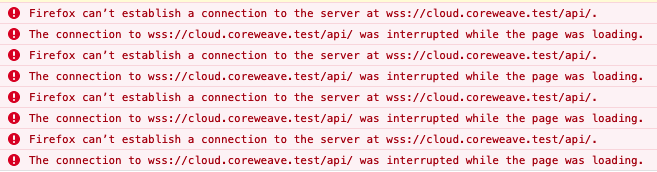

In the browser I see a recurring loop of these errors which I'm assuming are related:

Here is the request being made that is failing in this loop (copied from browser devtools as fetch request):

await fetch("wss://cloud.coreweave.test/api/", {

"credentials": "include",

"headers": {

"User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.16; rv:86.0) Gecko/20100101 Firefox/86.0",

"Accept": "*/*",

"Accept-Language": "en-US,en;q=0.5",

"Sec-WebSocket-Version": "13",

"Sec-WebSocket-Protocol": "graphql-ws",

"Sec-WebSocket-Extensions": "permessage-deflate",

"Sec-WebSocket-Key": "3wj29T4h7RxPqAErFrJ2wg==",

"Pragma": "no-cache",

"Cache-Control": "no-cache"

},

"method": "GET",

"mode": "cors"

});

Once again here is my current WDS configuration:

devServer: {

proxy: {

'/api': 'http://gatekeeper',

ws: true,

onProxyReqWs: (proxyReq, req, socket, options, head) => {

socket.on('error', function (err) {

console.warn('Socket error using onProxyReqWs event', err);

});

},

onError(err) {

console.log('Suppressing WDS proxy upgrade error:', err);

},

},

contentBase: commonPaths.outputPath,

compress: true,

hot: true,

inline: true,

host: '0.0.0.0',

public,

},

@mecampbellsoup Maybe you can create a simple reproducible test repo? I will take a look. I think we fixed it in master, I just want to check

When I run this app outside of minikube, I don't seem to have any WS connection issue or disconnects, so creating a reproducible test repo is going to require a docker compose or minikube deploy script... if you need this I'll need some time.

@mecampbellsoup Yep, will be great

@alexander-akait @rafaelsales, as with so many things, this turned out to be a user error 😢

In short, my WDS proxy config was invalid. Compare the following two:

Bad

proxy: {

'/api': process.env.PROXY_URI,

ws: true,

onError: err => {

console.log('Suppressing WDS proxy upgrade error:', err);

},

},

Good

proxy: {

'/api': {

target: process.env.PROXY_URI,

ws: true,

onError: err => {

console.log('Suppressing WDS proxy upgrade error:', err);

},

},

},

I blame JS/WDS for letting me pass an invalid but correct-looking proxy configuration object!
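The mistake in the "Bad" config can be caught mechanically. Below is a small sketch of such a check, assuming the object form of devServer.proxy where every top-level key is meant to be a context path. findMisplacedProxyOptions is a hypothetical helper, and PROXY_OPTION_NAMES is a hand-picked list based on the configs quoted in this thread, not an exhaustive list of http-proxy-middleware options.

```javascript
// Option names seen in this thread's configs; deliberately not exhaustive.
const PROXY_OPTION_NAMES = [
  'target', 'ws', 'onError', 'onProxyReqWs', 'changeOrigin',
  'secure', 'xfwd', 'pathRewrite', 'headers',
];

// Hypothetical check: a top-level key that is an option name rather than a
// context path almost certainly belongs one level deeper.
function findMisplacedProxyOptions(proxy) {
  return Object.keys(proxy).filter((key) => PROXY_OPTION_NAMES.includes(key));
}

const bad = { '/api': 'http://gatekeeper', ws: true, onError() {} };
const good = { '/api': { target: 'http://gatekeeper', ws: true, onError() {} } };

console.log(findMisplacedProxyOptions(bad));  // [ 'ws', 'onError' ]
console.log(findMisplacedProxyOptions(good)); // []
```

Running a check like this at the top of a webpack config would flag the misplaced ws and onError keys before the dev server starts.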

Unfortunately, we cannot validate invalid parameters for 3rd-party modules :disappointed: But I will look at how we can improve it, thanks for reporting

After spending 5 hours I found how to reproduce the problem

Thanks for your hard works from community.

Hi @alexander-akait

I just published [email protected] which fixed a graceful shutdown issue. Curious if it fixes this issue.

If it doesn't, I'm interested in the reproducible setup. Maybe it's something HPM can fix (if issue can be reproduced)

Spend some hours on it without luck...

@chimurai Yep, it is an interesting problem. I reproduced it using IE11/IE10, proxied websockets and sockjs. I don't know why it happens; anyway, I will try to reproduce it again and give feedback on the next version

Just hit this as well.

Operating System: macOs 11.2.3

Node Version: v14.15.3

NPM Version: 7.5.2

"webpack": "^4.44.2",

"webpack-dev-server": "^3.11.0",

I have this proxying

{

// ws:localhost:3002 --> ws:localhost:8000

context: ["/a/"],

target: ampTarget,

pathRewrite: { "^/a": "" },

ws: true,

headers: {

"X-Remix-Endpoint": `http://localhost:${port}/a`,

},

},

and I hit the crash immediately when I try e.g. http://192.168.0.148:3002/... in my browser
