Virtual-environments: vs2017-win2016 random dll library load errors

Summarizing my comments from #691 CC @miketimofeev

Describe the bug

Yesterday (7 April 2020) we started getting some random failures. The code did not change on our side, but something seems to have changed on the image. Comparing logs, we noticed the following.

Running the same pipeline for the same commit twice behaves differently; sometimes we cannot load DLLs.

Area for Triage:

C/C++, Python

Virtual environments affected

- [ ] macOS 10.15

- [ ] Ubuntu 16.04 LTS

- [ ] Ubuntu 18.04 LTS

- [x] Windows Server 2016 R2

- [ ] Windows Server 2019

Expected behavior

I expect two builds of the same commit running on vs2017-win2016 to work the same.

Actual behavior

When we call the dir command we get slightly different outputs:

- Failing Build 🔴

2020-04-08T04:51:40.9092142Z Volume in drive D is Temporary Storage

2020-04-08T04:51:40.9093101Z Volume Serial Number is 4099-6268

...

2020-04-08T04:51:42.0603173Z rootdir: d:\a\1\s\src

- Passed Build 🟢 (for the same commit)

2020-04-08T04:51:13.6285940Z Volume in drive D is Temp

2020-04-08T04:51:13.6286720Z Volume Serial Number is 7AAE-11A5

...

2020-04-08T04:51:14.7481347Z rootdir: D:\a\1\s\src

At least drive D seems to be slightly different. rootdir is now also lowercase, but Windows paths do not differentiate between lowercase and uppercase, right?
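On the case question: Windows file systems are case-insensitive by default, so the lowercase drive letter on its own should not change which directory is meant. A minimal Python sketch of a case-insensitive comparison of the two rootdir values from the logs above, using the standard-library `ntpath` module (the helper name `same_windows_path` is hypothetical, and the comparison is purely on strings, it does not touch the file system):

```python
import ntpath


def same_windows_path(a: str, b: str) -> bool:
    """Compare two Windows-style paths, ignoring case and redundant separators."""
    # normpath collapses things like "a\\.\\b"; normcase lowercases the result
    # and turns forward slashes into backslashes.
    return ntpath.normcase(ntpath.normpath(a)) == ntpath.normcase(ntpath.normpath(b))


if __name__ == "__main__":
    failing_rootdir = r"d:\a\1\s\src"  # from the failing build log
    passing_rootdir = r"D:\a\1\s\src"  # from the passing build log
    print(same_windows_path(failing_rootdir, passing_rootdir))
```

If the two values compare equal, the difference in case is probably a symptom of a different machine setup rather than the cause of the DLL load failures.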

We consistently get dll loading problems when drive D is Temporary Storage.

Could someone shed a bit of light on this?

All 19 comments

@FrancescElies I took the liberty of sending an email to the address on your profile so we can get some more details and try to figure out why your builds started failing. I hope that's ok. Thanks.

Hi thanks for your reply, I got the email :)

Hello, @FrancescElies

Just to double-check: could you please add the current path to PATH? For example, in PowerShell:

$env:PATH = "$(pwd);$env:PATH"

@al-cheb I will give it a try on Tuesday (14 April). This will be a long weekend. I hope that's fine.

@al-cheb prepending current working path to PATH environment variable helps, we added the workaround to most of our tasks, thanks!
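For steps that run Python rather than PowerShell, the same workaround can be sketched as follows. This is only an illustration under assumptions: the helper name `prepend_to_path` is hypothetical, and the demo works on a sample dictionary instead of the real environment so that it has no side effects.

```python
import os


def prepend_to_path(directory: str, env=None, sep: str = os.pathsep) -> str:
    """Put `directory` first in env["PATH"], dropping any later duplicate of it."""
    env = os.environ if env is None else env
    entries = [e for e in env.get("PATH", "").split(sep) if e]
    # Keep the existing order, but make sure `directory` appears exactly once, first.
    entries = [directory] + [e for e in entries if e != directory]
    env["PATH"] = sep.join(entries)
    return env["PATH"]


if __name__ == "__main__":
    # Sample environment; pass no `env` argument to modify os.environ instead.
    sample_env = {"PATH": r"C:\Windows\System32;C:\Windows"}
    print(prepend_to_path(r"D:\a\1\s\src", sample_env, sep=";"))
```

In a real job the call would be `prepend_to_path(os.getcwd())`, which only affects the current process and its children. Note also that since Python 3.8, DLL dependencies of extension modules on Windows are no longer resolved through PATH; `os.add_dll_directory` exists for that case.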

We are still having some problems though, this time with DotNetCoreCLI@2 task

- I am seeing

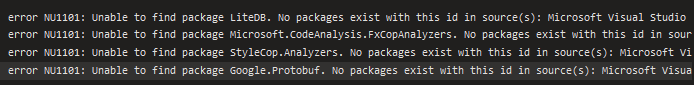

\NSIS\ was unexpected at this time.I can seeC:\Program Files (x86)\NSIS\was added to path, I have the feeling this is related to the PATH problem but for the moment I cannot tell. This fails in the new infrastructure machines and the old ones. - It seems like the task is having problems finding packages `error NU1101: Unable to find package Google.Protobuf

- We use a prebuild event that calls

taskkillbut'taskkill' is not recognized as an internal or external command, this is to be found inC:\Windows\System32.

So far I am working around some of the problems as follows.

# The following solves some problems but not all of them
- powershell: |
    Write-Host "##vso[task.prependpath]$env:nugetDependencyPath"
    Write-Host "##vso[task.prependpath]$env:csprojTwoPath"
    Write-Host "##vso[task.prependpath]$env:csprojOnePath"
    Write-Host "##vso[task.prependpath]$env:system32Path"
  env:
    system32Path: C:\Windows\System32
    nugetDependencyPath: $(Build.SourcesDirectory)\NuGetConfigRepositoryPath\LiteDB\4.1.4
    csprojTwoPath: $(Build.SourcesDirectory)\myproj\apps\a\csproj
    csprojOnePath: $(Build.SourcesDirectory)\myproj\apps\another\csproj

# the gist of our task
- task: DotNetCoreCLI@2
  inputs:
    command: 'publish'
    publishWebProjects: false
    projects: 'myproj/apps/subpath/*/*.csproj'
    arguments: --configuration $(buildConfig) --framework netcoreapp3.1 --runtime $(runtime)

Will post again if I find something else.

@FrancescElies, Could you please try to add dot?

Write-Host "##vso[task.prependpath]."

Write-Host "##vso[task.prependpath]." did not do the trick, was worth trying though.

At the moment I am trying to use a script instead of a DotNetCoreCLI@2 task 🤞

My previous attempt did not work.

At the moment I am printing the following info for every running job.

@al-cheb Is there any way to know exactly which image every job is running on? Since some jobs are failing and others aren't, it would be great for us to print this kind of info. At the moment I feel kind of blind.

@FrancescElies, you can find the image info in the C:\imagedata.json file.

We have done some extra monitoring and saw the following: two builds (I can share the links via email) from different commits, with totally inoffensive changes (a renamed task displayName).

One failed with error NU1101 in the dotnet publish command and the other one passed.

Is this related to this issue or something else? In our builds, the time this issue appeared is highly correlated with the PATH issue being discussed here.

Error

ImageData

[
  {
    "group": "Operating System",
    "detail": "Microsoft Windows Server 2019\n10.0.17763\nDatacenter"
  },
  {
    "group": "Virtual Environment",
    "detail": "Environment: windows-2019\nVersion: 20200331.1\nIncluded Software: https://github.com/actions/virtual-environments/blob/win19/20200331.1/images/win/Windows2019-Readme.md"
  }
]
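To make image differences between jobs easier to spot in logs, the file can be reduced to a few key fields. A minimal Python sketch, assuming the file always has the shape shown above (a list of objects with "group" and "detail" keys); the helper name `image_summary` is hypothetical, and the embedded sample is the data posted above, used when C:\imagedata.json is not present:

```python
import json
import os

# Sample copied from the data above; the raw string keeps "\n" as a JSON escape.
SAMPLE = r'''[
  {"group": "Operating System",
   "detail": "Microsoft Windows Server 2019\n10.0.17763\nDatacenter"},
  {"group": "Virtual Environment",
   "detail": "Environment: windows-2019\nVersion: 20200331.1\nIncluded Software: https://github.com/actions/virtual-environments/blob/win19/20200331.1/images/win/Windows2019-Readme.md"}
]'''


def image_summary(raw: str) -> dict:
    """Flatten the imagedata JSON into a small dict of comparable fields."""
    details = {entry["group"]: entry["detail"] for entry in json.loads(raw)}
    summary = {"OS": " ".join(details.get("Operating System", "").splitlines())}
    # The "Virtual Environment" detail is a block of "Key: value" lines.
    for line in details.get("Virtual Environment", "").splitlines():
        key, sep, value = line.partition(": ")
        if sep:
            summary[key] = value
    return summary


if __name__ == "__main__":
    path = r"C:\imagedata.json"
    if os.path.exists(path):
        # utf-8-sig tolerates a byte-order mark if the file has one.
        with open(path, encoding="utf-8-sig") as handle:
            raw = handle.read()
    else:
        raw = SAMPLE
    for key, value in image_summary(raw).items():
        print(f"{key}: {value}")
```

Printing this at the start of every job gives one line per field, so the Version value of a failing and a passing run can be compared directly.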

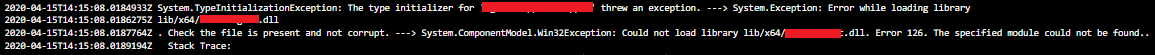

I think I face a similar issue, with the same symptoms and the same timeline, but when executing VSTests in releases.

In my case, it is always the same dll that is not found :

Where it is actually available, we can see download traces in previous steps.

Everything was working fine on agent image '20200316.1', but the problem appears on image '20200331.1' in my case. Neither the code nor the release was changed in between.

I can provide more information privately if needed.

@mguirao, could you please add a dot to PATH as the first step and provide logs?

- powershell: |
    Write-Host "##vso[task.prependpath]."

@FrancescElies Could you provide a minimal repo to reproduce?

> In my case, it is always the same dll that is not found :

@mguirao did you try to add current path before you call that command as @al-cheb suggested? https://github.com/actions/virtual-environments/issues/692#issuecomment-611469154, that solved the dll loading problems for us.

Edit: sorry, I should have refreshed the page, I did not see you already replied 😅

> @FrancescElies Could you provide a minimal repo to reproduce?

That's a fair request, I'm sorry but at the moment I can't spend effort on that.

Not sure if it helps but I provided two links via email testing the same code (only changing a displayName in a task), I know they are not exactly the same commit but that change in my opinion should not have such a side effect.

I feel like, even though C:\imagedata.json is the same, something else not related to our repo is going on.

Questions:

- If I print C:\imagedata.json and they are the same in two different builds, can I be sure the environment is the same? Do I need to check something else?

- With regard to NuGet Error NU1101 I don't really get what that means (I am not a dotnet dev).

@FrancescElies we've deployed the image with the fix. Could you please check the behavior?

Thanks for the update, we will do that soon, I'll report back

@FrancescElies I'm going to close the issue since there are no new issues after the fix. Feel free to ping me if you have any concerns.

Thank you!

I have not yet had the time to undo the prependpath changes and check, but I'll report back if I find anything by then.

Thanks for your support!