Transformers: codecov invalid reports due to inconsistent code coverage outputs (non-idempotent test-suite)

Currently PRs get a codecov report

- Observing various commits - especially pure doc commits - It's either not working right or it needs to be configured.

e.g., this PR has 0.00% change to the code:

https://github.com/huggingface/transformers/pull/6315/files

yet, codecov found -0.51% decrease in coverage - this makes no sense. (edit: it updates itself with other commits to master, now it shows -0.31%)

- Does it have to send a notification and not just comment on the PR?

It appears that it can be finetuned, and notifications sent only if a desired threshold is passed: https://docs.codecov.io/docs/notifications#standard-notification-fields - so that it actually flags an issue when there is one.

Here is a ready conf file from a random project: https://github.com/zulip/zulip/blob/master/.codecov.yml

except perhaps adjusting threshold to 1%? (edited) and not sure whether we want it to comment by default.

All 34 comments

Here is another gem - PR to remove a single comment from a test file https://github.com/huggingface/transformers/pull/6338 - guess what codecov's report was - it will increase coverage by 0.32%! Sounds like its output would make a pretty good RNG.

Pinging @thomasrockhu

We've seen such reports for a while now, could you explain why these diffs in coverage happen, or provide a link that explains why? Thank you!

I took at look at #6338 because that is extremely strange.

-- Codecov --

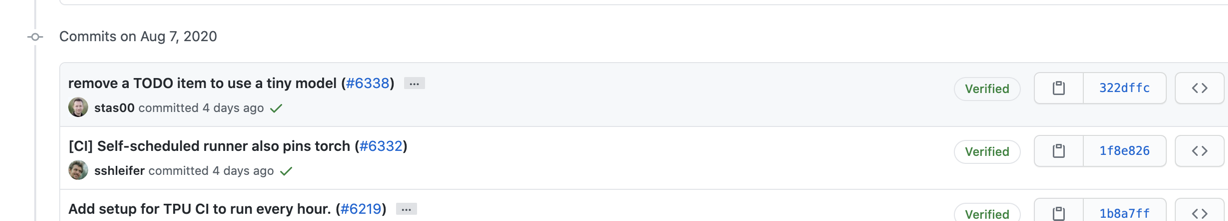

First, I took a look at the commits to make sure we were comparing against the right commits SHAs here: https://codecov.io/gh/huggingface/transformers/pull/6338/commits

which matches roughly to the commit stack on master (merging commits changes the SHA, I'm fairly sure, but the commit messages are consistent) https://github.com/huggingface/transformers/commits/master?after=3f071c4b6e36c4f2d4aee35d76fd2196f82b7936+34&branch=master

So, I thought that maybe we read the coverage reports wrong. I focused on this file src/transformers/modeling_tf_electra.py, because it had the most changes. Going into the build tab of the base commit and the head commit, I noticed that the coverage reports uploaded to Codecov show different coverages

Base commit

Head commit

To further confirm, I went into the CircleCI builds and compared the coverage generated by running python -m pytest -n 8 --dist=loadfile -s ./tests/ --cov | tee output.txt

I don't know the codebase well enough here, but my suspicion is that your test suite is not idempotent

As for notifications, could I get some more details here? One thing to note is target and threshold are not the same. Target is the coverage percentage to hit (like 80% of the total project), while threshold is the "wiggle" room (if set to 1%, it allows a 1% drop from the target to be considered acceptable)

As for notifications, could I get some more details here?

My thinking was that the project could set a threshold so that when it's crossed codecov makes itself heard, say -1% decrease would raise a flag. That way codecov becomes a useful ally and not something that most start ignoring because it's always there. but that's just IMHO.

To further confirm, I went into the CircleCI builds and compared the coverage generated by running python -m pytest -n 8 --dist=loadfile -s ./tests/ --cov | tee output.txt

Thank you for pointing out how we could use coverage data to explain this discrepancy, @thomasrockhu

I don't know the codebase well enough here, but my suspicion is that your test suite is not idempotent

Is there a tool, that can narrow down which tests cause the idempotent behavior? Other then doing a binary search, which often fails in such complex situation of many tests.

Thank you!

If you are talking about a notification not in GitHub (comments and status checks), you could do something like this in the codecov.yml file

coverage:

notify:

{{ notification_provider (e.g. slack) }}:

default:

threshold: 1%

This should only notify in cases of a 1% drop. (https://docs.codecov.io/docs/notifications#threshold)

Is there a tool, that can narrow down which tests cause the idempotent behavior? Other then doing a binary search, which often fails in such complex situation of many tests.

Unfortunately, if there is one, we are not aware of it. I wish we could be a little more helpful here right now.

Is there a tool, that can narrow down which tests cause the idempotent behavior? Other then doing a binary search, which often fails in such complex situation of many tests.

Unfortunately, if there is one, we are not aware of it. I wish we could be a little more helpful here right now.

So, the brute force approach would be to run groups of tests on the same code base, comparing the coverage before and after, narrowing it down to the smallest group of tests that cause the coverage to vary - Am I correct?

Probably to make an intelligent guess instead of the brute force, I'd look at the covebot reports for PRs that had no changes in code and yet wild swings were reported in some files. And from those files, consistently reported at the top, deduct the suspect tests.

edit: I looked a bit and most likely this issue has to do with TF tests, as most of the time the large coverage changes get reported in src/transformers/modeling_tf_*py, when the changes have nothing to do with TF.

@thomasrockhu, I run and re-run a bunch of tests, comparing the coverage reports and I can't reproduce the suggested possible lack of idempotency in the test suite.

However, if I look at for example https://codecov.io/gh/huggingface/transformers/pull/6505 it says it doesn't have a base to compare to, yet it produces a (invalid) codecov report https://github.com/huggingface/transformers/pull/6505#issuecomment-674440545. So to me it tells that something else is broken. i.e. it's not comparing that PR to the base, but comparing it to some totally unrelated nearest code branch that codecov happened to have the coverage file for. Does it make sense?

Hi @stas00, basically what that's saying is that in this PR, GitHub told us the parent was 24107c2 (https://codecov.io/gh/huggingface/transformers/pull/6505/commits). Unfortunately, we did not receive coverage reports or the CI might have failed. So we took the next parent from the master branch

This is an unfortunate consequence of not having coverage for a base commit.

Thank you for confirming that, @thomasrockhu.

Unfortunately, we did not receive coverage reports or the CI might have failed. So we took the next parent from the master branch

Given that the report is misleading then, would it be possible to let the user configure codecov to not provide any report in such situation or a note that a report couldn't be generated? And perhaps make that the default?

Or, perhaps, there should be a special internal action triggered that will go back to the base hash, run a CI on it, generate the coverage report, and now codecov can compare the 2 reports it was awesomely designed for. If that is possible at all.

It oddly seems to happen a lot, here is just a sample of a few recent PRs.

- https://codecov.io/gh/huggingface/transformers/pull/6494?src=pr&el=desc

- https://codecov.io/gh/huggingface/transformers/pull/6505?src=pr&el=desc

- https://codecov.io/gh/huggingface/transformers/pull/6504?src=pr&el=desc

- https://codecov.io/gh/huggingface/transformers/pull/6511?src=pr&el=desc

I did find a few recent ones that were fine, i.e. there was a base coverage report.

Given that the report is misleading then, would it be possible to let the user configure codecov to not provide any report in such situation or a note that a report couldn't be generated? And perhaps make that the default?

@stas00, unfortunately this is not possible in the Codecov UI. It is possible on the comments sent by Codecov in PRs via require_base.

Or, perhaps, there should be a special internal action triggered that will go back to the base hash, run a CI on it, generate the coverage report, and now codecov can compare the 2 reports it was awesomely designed for. If that is possible at all.

We depend on users to make this determination. We often find that users will use blocking status checks to enforce a failed commit which would imply that Codecov receives a coverage report.

It oddly seems to happen a lot, here is just a sample of a few recent PRs.

Looking at these PRs, they all depend on the same commit 24107c2c83e79d195826f18f66892feab6b000e9 as their base, so it makes sense that it would be breaking for those PRs.

Thank you very much, @thomasrockhu! Going to try to fix this issue by adding require_base=yes as you suggested: https://github.com/huggingface/transformers/pull/6553

Thank you for your awesome support!

For sure, let me know if there's anything else I can do to help!

@thomasrockhu, could you please have another look at the situation of this project?

After applying https://github.com/huggingface/transformers/pull/6553 it should now not generate invalid reports when the base is missing - this is good.

However, the problem of code coverage diff when there should be none is still there. e.g. here are some recent examples of pure non-code changes:

- https://codecov.io/gh/huggingface/transformers/pull/6650/changes

- https://codecov.io/gh/huggingface/transformers/pull/6650/changes

- https://codecov.io/gh/huggingface/transformers/pull/6629/changes

- https://codecov.io/gh/huggingface/transformers/pull/6649/changes

I did multiple experiments and tried hard to get the test suite to behave in a non-idempotent way, but I couldn't get any such results other than very minor 1-line differences in coverage. This was done on the same machine. I'm not sure how to approach this issue - perhaps CI ends up running different PRs on different types of hardware/different libraries - which perhaps could lead to significant discrepancies in coverage.

If changes in hardware and system software libraries could cause such an impact, is there some way of doing a fingerprinting of the host setup so that we know the report came from the same type of setup?

Thank you!

Apologies here @stas00 this got lost. Do you have a more recent pull request to take a look at so I can dig into the logs?

Yes, of course, @thomasrockhu.

Here is a fresh one: https://codecov.io/gh/huggingface/transformers/pull/6852 (no code change)

Let me know if it'd help to have a few.

@stas00 this is really strange. I was focusing in on src/transformers/trainer.py

Most recently on master, this commit is showing much lower coverage than normal (13.55% vs ~50%)

I'm comparing it to the commit right after

The CI build for the first, shows that there are fewer tests run by 1

Compared to the a497de run.

Maybe there's something here?

Thank you, @thomasrockhu!

This is definitely a great find. I'm requesting to add -rA to pytest runs https://github.com/huggingface/transformers/pull/6861

then we can easily diff which tests aren't being run. I will follow up once this is merged and we have new data to work with.

OK, the -rA report is active now, so we can see and diff the exact tests that were run and skipped.

Have a look at these recent ones with no code changes:

- https://github.com/huggingface/transformers/pull/6861

- https://github.com/huggingface/transformers/pull/6867

I double checked that same number of tests were run in both, but codecov report is reporting huge coverage differences.

This is odd:

- https://codecov.io/gh/huggingface/transformers/pull/6861/changes

- https://codecov.io/gh/huggingface/transformers/pull/6867/changes

It seems to be reporting a huge number of changes, which mostly cancel each other.

@thomasrockhu? Please, let me know when you can have a look at it - otherwise your logs will be gone again and the provided examples per your request will be unusable again. Thanks.

Hi @stas00, apologies I took a look a few days ago, but I really couldn't find a good reason or another step to figure out what is going on in your testing setup. I'll take a look again today.

@thomasrockhu, thank you for all your attempts so far. As you originally correctly guessed transformers tests suite is not idempotent. I finally was able to reproduce that first in a large sub-set of randomly run tests and then reduced it to a very small sub-set. So from here on it's totally up to us to either sort it out or let codecov go.

reproducing the problem

note: this is not the only guilty sub-test, there are others (I have more sub-groups that I haven't reduced to a very small sub-set yet), but it's good to enough to demonstrate the problem and see if we can find a solution.

Step 1. prep

pip install pytest-flakefinder pytest-randomly

note: make sure you pip uninstall pytest-randomly when you're done here, since it'll randomize your tests w/o asking you - i.e. no flags to enable it - you installed it, all your tests suites are now random.

why randomize? because pytest -n auto ends up running tests somewhat randomly across the many processors

flakefinder is the only pytest plugin that I know of that allows repetition of unittests, but this one you can leave around - it doesn't do anything on its own, unless you tell it to.

Case 1. multiprocess

We will run 2 sub-tests in a random order:

export TESTS="tests/test_benchmark_tf.py::TFBenchmarkTest::test_inference_encoder_decoder_with_configs \

tests/test_benchmark_tf.py::TFBenchmarkTest::test_trace_memory"

pytest $TESTS --cov --flake-finder --flake-runs=5 | tee k1; \

pytest $TESTS --cov --flake-finder --flake-runs=5 | tee k2; \

diff -u k1 k2 | egrep "^(\-|\+)"

and we get:

--- k1 2020-09-11 20:00:32.246210967 -0700

+++ k2 2020-09-11 20:01:31.778468283 -0700

-Using --randomly-seed=1418403633

+Using --randomly-seed=1452350401

-src/transformers/benchmark/benchmark_tf.py 152 62 59%

-src/transformers/benchmark/benchmark_utils.py 401 239 40%

+src/transformers/benchmark/benchmark_tf.py 152 50 67%

+src/transformers/benchmark/benchmark_utils.py 401 185 54%

-src/transformers/configuration_t5.py 32 16 50%

+src/transformers/configuration_t5.py 32 4 88%

-src/transformers/modeling_tf_t5.py 615 526 14%

+src/transformers/modeling_tf_t5.py 615 454 26%

-src/transformers/modeling_tf_utils.py 309 214 31%

+src/transformers/modeling_tf_utils.py 309 212 31%

-TOTAL 32394 24146 25%

+TOTAL 32394 23994 26%

-================== 10 passed, 3 warnings in 71.87s (0:01:11) ===================

+======================= 10 passed, 3 warnings in 58.82s ========================

Whoah! Non-Idempotent test suite it is! A whooping 1% change in coverage over no change in code.

Saving the seeds I'm now able to reproduce this at will by adding the specific seeds of the first run:

export TESTS="tests/test_benchmark_tf.py::TFBenchmarkTest::test_inference_encoder_decoder_with_configs \

tests/test_benchmark_tf.py::TFBenchmarkTest::test_trace_memory"

pytest $TESTS --cov --flake-finder --flake-runs=5 --randomly-seed=1418403633 | tee k1; \

pytest $TESTS --cov --flake-finder --flake-runs=5 --randomly-seed=1452350401 | tee k2; \

diff -u k1 k2 | egrep "^(\-|\+)"

getting the same results.

Case 2. randomization issue

Here are some other tests with the same problem, but the cause is different - randomization

CUDA_VISIBLE_DEVICES="" pytest -n 3 --dist=loadfile tests/test_data_collator.py --cov | tee c1; \

CUDA_VISIBLE_DEVICES="" pytest -n 3 --dist=loadfile tests/test_data_collator.py --cov | tee c2; \

diff -u c1 c2 | egrep "^(\-|\+)"

this time w/o using flake-finder, but instead relying on -n 3 + randomly.

--- c1 2020-09-11 19:00:00.259221772 -0700

+++ c2 2020-09-11 19:00:14.103276713 -0700

-Using --randomly-seed=4211396884

+Using --randomly-seed=3270809055

-src/transformers/data/datasets/language_modeling.py 168 23 86%

+src/transformers/data/datasets/language_modeling.py 168 25 85%

-src/transformers/tokenization_utils_base.py 750 321 57%

+src/transformers/tokenization_utils_base.py 750 316 58%

-TOTAL 32479 23282 28%

+TOTAL 32479 23279 28%

-======================= 9 passed, 13 warnings in 13.10s ========================

+======================= 9 passed, 13 warnings in 13.44s ========================

a much smaller diff, but a diff nevertheless

Next is to try to resolve this or give up codecov.

The preliminary reading points the blaming finger to multiprocessing (Pool, and others).

Thank you for reading.

@stas00 this is absolutely incredible. I'll admit that I wouldn't be able to have found this myself, you've done a hell of an investigation here. How can I be useful?

Thank you for the kind words, @thomasrockhu. I have a solution for the random issue (need to set a fixed seed before the test), but not yet for the multiproc. It's all the tools that fork sub-processes that are the issue potentially, as they don't all get accounted for consistently. I need more time staring at the screen doing experiments.

But I do need your help here: https://codecov.io/gh/huggingface/transformers/pull/7067/changes

What does it mean? If you look at +X/-X - they all are identical numbers, and should add up to 0. Yet, we get 2.41% diff in coverage. How does that get calculated and why are those identical numbers but flipped up - clearly there is something off there, not sure if it's related to coverage as they are perfectly complementary.

I did see many others cases where they weren't complementary, but in this case it's 100% so. Ideas?

Or perhaps if I rephrase this: how on that screen can I see the 2.41% difference if I look at it as a black box. I imagine the numbers are the same, but perhaps they are not the same lines in the code, hence the difference. But it's impossible to see that from that presentation. Clicking on the specific diff makes no sense to me. it's just one screen of one type/color - I can't see the actual diff.

@thomasrockhu? Could you please have a look that issue mentioned in my last comment? Thank you.

@stas00 apologies will take a look today/tomorrow

@stas00, so the +X/-X are actually showing coverage change for that file. So as an example,

you see -1 -> +1. This means in total, one line that was not covered is now covered (this is not always a zero-sum game if a line is removed). You can see that two lines have added coverage (red to green) and one line has no coverage (green to red).

So taking the total over all those changes actually leads to a -777 line coverage drop. You can see that in the commits of this PR

base -> https://codecov.io/gh/huggingface/transformers/tree/8fcbe486e1592321e868f872545c8fd9d359a515

head -> https://codecov.io/gh/huggingface/transformers/tree/a4dd71ef19033ec8e059a0a76c7141a8a5840e66

Does this make more sense?

The case you're are showing makes total sense. I'm absolutely clear on that one.

But your example doesn't work for https://codecov.io/gh/huggingface/transformers/pull/7067/changes

Let's pick a small one: Changes in src/transformers/modelcard.py

As you can see there is only addition, I don't see any subtraction. i.e I only see red lines - where are the green ones? If it's +2/-2 I'd expect to see 2 in red and 2 in green. Does it make sense which parts of the reports I'm struggling to understand?

@stas00 I see the source of your confusion. This is not a code diff, it's a coverage diff. What you are seeing is two lines that were previously covered (the green 2) are now no longer covered (the red 2). The +/- signs are confusing, I'm going to bring that back to my team. Does this make sense now?

Absolutely. I know it's a coverage diff.

If I may suggest it'd be much clearer if it were to only show +1 / -1 if there was one line covered anew and another different line was not, like a normal diff would. And only +1 / 0 and 0 / -1 in the other cases.

So in the particular case of [the pull we are discussing]((https://codecov.io/gh/huggingface/transformers/pull/7067/changes) it's most likely just a bunch of +Xs/0 for most of the coverage and then a few 0/-Xs at the end.

Thank you for clarifying.

Hi @stas00, yeah you are right. I've passed along the feedback to our product team to re-think that widget in the next iteration of our UI and that page in particular. Thanks for your help here!

Thank you, @thomasrockhu