Transformers: How to load a locally saved TensorFlow DistilBERT model

I loaded the TF DistilBERT model with the following Python code:

import tensorflow as tf

from transformers import DistilBertTokenizer, TFDistilBertModel

tokenizer = DistilBertTokenizer.from_pretrained('distilbert-base-uncased')

model = TFDistilBertModel.from_pretrained('distilbert-base-uncased')

input_ids = tf.constant(tokenizer.encode("Hello, my dog is cute"), dtype="int32")[None, :]  # Batch size 1

outputs = model(input_ids)

last_hidden_states = outputs[0]

These lines run successfully, but I get errors with model.save():

model.save("DSB/DistilBERT.h5")

model.save("DSB")

model.save("DSB/")

All three of the above lines raise errors, but the following lines work:

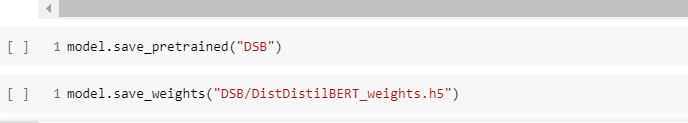

model.save_pretrained("DSB")

This saves two files, tf_model.h5 and config.json.

model.save_weights("DSB/DistDistilBERT_weights.h5")

This also saves the file.

However, I cannot reload this locally saved model in any way. Each of the following attempts raises an error:

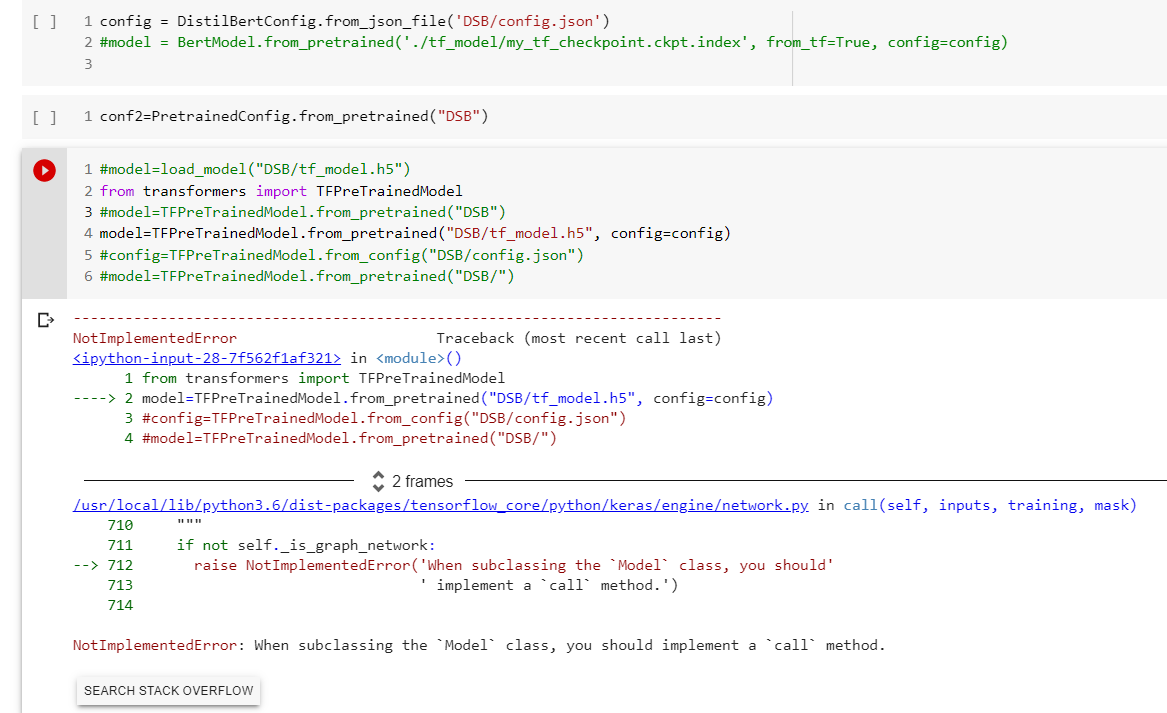

from tensorflow.keras.models import load_model

from transformers import DistilBertConfig, PretrainedConfig

from transformers import TFPreTrainedModel

config = DistilBertConfig.from_json_file('DSB/config.json')

conf2 = PretrainedConfig.from_pretrained("DSB")

config = TFPreTrainedModel.from_config("DSB/config.json")

All of these load the configuration, but I cannot load the model. I tried each of the following:

model=TFPreTrainedModel.from_pretrained("DSB")

model=PreTrainedModel.from_pretrained("DSB/tf_model.h5", from_tf=True, config=config)

model=TFPreTrainedModel.from_pretrained("DSB/")

model=TFPreTrainedModel.from_pretrained("DSB/tf_model.h5", config=config)

NotImplementedError Traceback (most recent call last)

in ()

1 from transformers import TFPreTrainedModel

----> 2 model=TFPreTrainedModel.from_pretrained("DSB/tf_model.h5", config=config)

3 #config=TFPreTrainedModel.from_config("DSB/config.json")

4 #model=TFPreTrainedModel.from_pretrained("DSB/")

2 frames

/usr/local/lib/python3.6/dist-packages/transformers/modeling_tf_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

309 return load_pytorch_checkpoint_in_tf2_model(model, resolved_archive_file, allow_missing_keys=True)

310

--> 311 ret = model(model.dummy_inputs, training=False) # build the network with dummy inputs

312

313 assert os.path.isfile(resolved_archive_file), "Error retrieving file {}".format(resolved_archive_file)

/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/engine/base_layer.py in __call__(self, inputs, *args, **kwargs)

820 with base_layer_utils.autocast_context_manager(

821 self._compute_dtype):

--> 822 outputs = self.call(cast_inputs, *args, **kwargs)

823 self._handle_activity_regularization(inputs, outputs)

824 self._set_mask_metadata(inputs, outputs, input_masks)

/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/engine/network.py in call(self, inputs, training, mask)

710 """

711 if not self._is_graph_network:

--> 712 raise NotImplementedError('When subclassing the Model class, you should'

713 ' implement a call method.')

714

NotImplementedError: When subclassing the Model class, you should implement a call method.


Please format your code correctly using code tags and not quote tags, and don't use screenshots but post your actual code so that we can copy-paste it and reproduce your errors. https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks

Thanks for your response. Here is the code as text, so that it can be copied and pasted:

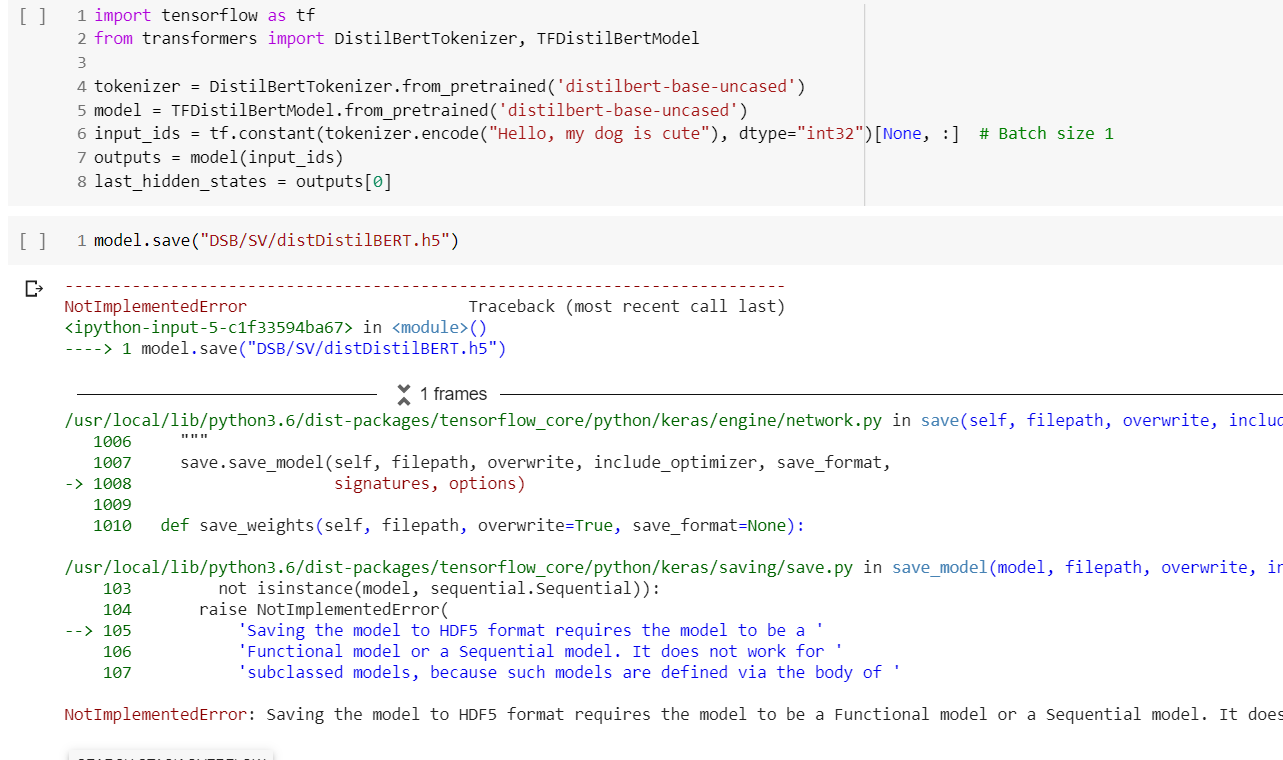

import tensorflow as tf

from transformers import DistilBertTokenizer, TFDistilBertModel

tokenizer = DistilBertTokenizer.from_pretrained('distilbert-base-uncased')

model = TFDistilBertModel.from_pretrained('distilbert-base-uncased')

input_ids = tf.constant(tokenizer.encode("Hello, my dog is cute"), dtype="int32")[None, :] # Batch size 1

outputs = model(input_ids)

last_hidden_states = outputs[0]

###################################### success

model.save("DSB/SV/distDistilBERT.h5")

NotImplementedError Traceback (most recent call last)

----> 1 model.save("DSB/SV/distDistilBERT.h5")

1 frames

/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/engine/network.py in save(self, filepath, overwrite, include_optimizer, save_format, signatures, options)

1006 """

1007 save.save_model(self, filepath, overwrite, include_optimizer, save_format,

-> 1008 signatures, options)

1009

1010 def save_weights(self, filepath, overwrite=True, save_format=None):

/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/saving/save.py in save_model(model, filepath, overwrite, include_optimizer, save_format, signatures, options)

103 not isinstance(model, sequential.Sequential)):

104 raise NotImplementedError(

--> 105 'Saving the model to HDF5 format requires the model to be a '

106 'Functional model or a Sequential model. It does not work for '

107 'subclassed models, because such models are defined via the body of '

NotImplementedError: Saving the model to HDF5 format requires the model to be a Functional model or a Sequential model. It does not work for subclassed models, because such models are defined via the body of a Python method, which isn't safely serializable. Consider saving to the Tensorflow SavedModel format (by setting save_format="tf") or using save_weights.

#

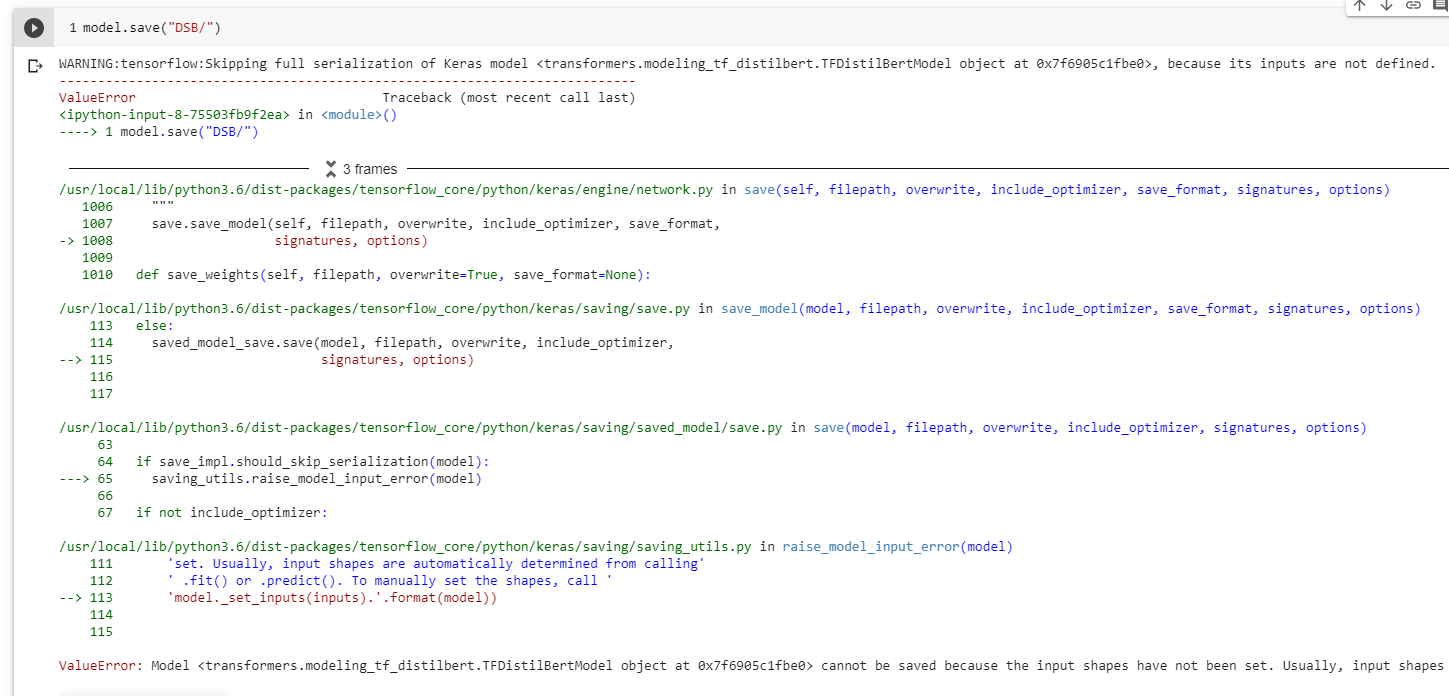

model.save("DSB/")

ValueError Traceback (most recent call last)

----> 1 model.save("DSB/")

3 frames

/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/engine/network.py in save(self, filepath, overwrite, include_optimizer, save_format, signatures, options)

1006 """

1007 save.save_model(self, filepath, overwrite, include_optimizer, save_format,

-> 1008 signatures, options)

1009

1010 def save_weights(self, filepath, overwrite=True, save_format=None):

/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/saving/save.py in save_model(model, filepath, overwrite, include_optimizer, save_format, signatures, options)

113 else:

114 saved_model_save.save(model, filepath, overwrite, include_optimizer,

--> 115 signatures, options)

116

117

/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/saving/saved_model/save.py in save(model, filepath, overwrite, include_optimizer, signatures, options)

63

64 if save_impl.should_skip_serialization(model):

---> 65 saving_utils.raise_model_input_error(model)

66

67 if not include_optimizer:

/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/saving/saving_utils.py in raise_model_input_error(model)

111 'set. Usually, input shapes are automatically determined from calling'

112 ' .fit() or .predict(). To manually set the shapes, call '

--> 113 'model._set_inputs(inputs).'.format(model))

114

115

ValueError: Model

#

model.save_pretrained("DSB")

model.save_weights("DSB/DistDistilBERT_weights.h5")

################################################### success

from transformers import DistilBertConfig, PretrainedConfig

config = DistilBertConfig.from_json_file('DSB/config.json')

conf2=PretrainedConfig.from_pretrained("DSB")

####################################################### success
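Loading the configuration works either from the JSON file or from the directory that contains it. A minimal sketch, assuming transformers 4.x and hypothetical non-default sizes so the reload can be checked; it also shows AutoConfig, which, unlike the generic PretrainedConfig, returns the DistilBertConfig subclass based on the model_type in config.json.

```python
import os
import tempfile

from transformers import AutoConfig, DistilBertConfig

# Hypothetical non-default values so that the reload can be verified.
original = DistilBertConfig(dim=32, n_layers=1, n_heads=2, hidden_dim=64)
save_dir = tempfile.mkdtemp()
original.save_pretrained(save_dir)  # writes save_dir/config.json

# Both calls read the same config.json.
from_file = DistilBertConfig.from_json_file(os.path.join(save_dir, "config.json"))
from_dir = DistilBertConfig.from_pretrained(save_dir)

# AutoConfig dispatches on "model_type" and returns a DistilBertConfig.
auto_config = AutoConfig.from_pretrained(save_dir)

print(from_file.dim, from_dir.n_layers, type(auto_config).__name__)
```

A config object only describes the architecture; the weights still have to be loaded through a concrete model class.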

#from tensorflow.keras.models import load_model

#model=load_model("DSB/tf_model.h5") # error

########## error: it looks like this is because the model was not saved with model.save("path")

from transformers import TFPreTrainedModel

#model=TFPreTrainedModel.from_pretrained("DSB") # error

model=TFPreTrainedModel.from_pretrained("DSB/tf_model.h5", config=config) # error

#config=TFPreTrainedModel.from_config("DSB/config.json") # error

#model=TFPreTrainedModel.from_pretrained("DSB/") # error

NotImplementedError Traceback (most recent call last)

1 from transformers import TFPreTrainedModel

2 #model=TFPreTrainedModel.from_pretrained("DSB") # error

----> 3 model=TFPreTrainedModel.from_pretrained("DSB/tf_model.h5", config=config)

4 #config=TFPreTrainedModel.from_config("DSB/config.json")

5 #model=TFPreTrainedModel.from_pretrained("DSB/")

2 frames

/usr/local/lib/python3.6/dist-packages/transformers/modeling_tf_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

309 return load_pytorch_checkpoint_in_tf2_model(model, resolved_archive_file, allow_missing_keys=True)

310

--> 311 ret = model(model.dummy_inputs, training=False) # build the network with dummy inputs

312

313 assert os.path.isfile(resolved_archive_file), "Error retrieving file {}".format(resolved_archive_file)

/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/engine/base_layer.py in __call__(self, inputs, *args, **kwargs)

820 with base_layer_utils.autocast_context_manager(

821 self._compute_dtype):

--> 822 outputs = self.call(cast_inputs, *args, **kwargs)

823 self._handle_activity_regularization(inputs, outputs)

824 self._set_mask_metadata(inputs, outputs, input_masks)

/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/engine/network.py in call(self, inputs, training, mask)

710 """

711 if not self._is_graph_network:

--> 712 raise NotImplementedError('When subclassing the Model class, you should'

713 ' implement a call method.')

714

NotImplementedError: When subclassing the Model class, you should implement a call method.

To save/load a model:

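from transformers import TFDistilBertModel

model = TFDistilBertModel(config)  # config: e.g. the DistilBertConfig loaded from DSB/config.json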

# Saving the model

model.save_pretrained("directory")

# Loading the model

loaded_model = TFDistilBertModel.from_pretrained("directory") # automatically loads the configuration.

Thanks @LysandreJik

It works.

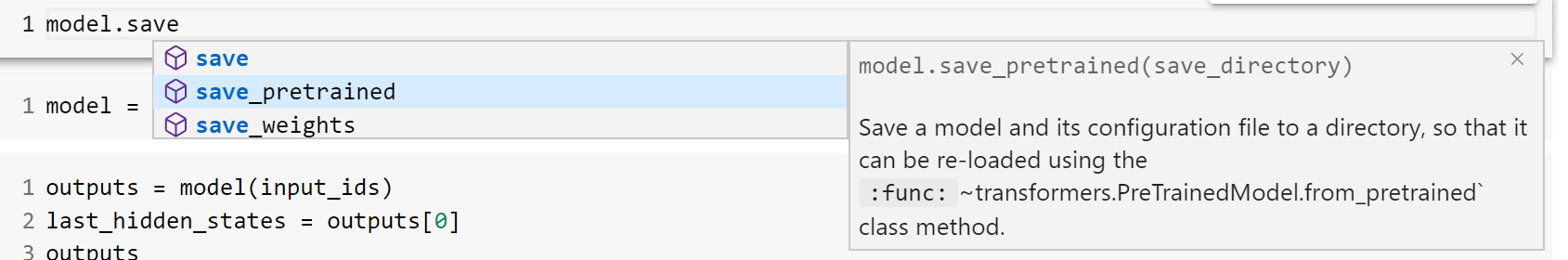

The docstring that pops up for model.save_pretrained confused me. It shows:

model.save_pretrained("directory")

save a model and its configuration file to the directory, so that it can be re-loaded using the :func:`~transformers.PreTrainedModel.from_pretrained` class method
