Transformers: token indices sequence length is longer than the specified maximum sequence length

❓ Questions & Help

When I use Bert, the "token indices sequence length is longer than the specified maximum sequence length for this model (1017 > 512)" occurs. How can I solve this error?

All 27 comments

This means you're encoding a sequence that is larger than the max sequence the model can handle (which is 512 tokens). This is not an error but a warning; if you pass that sequence to the model it will crash as it cannot handle such a long sequence.

You can truncate the sequence: seq = seq[:512] or use the max_length tokenizer parameter so that it handles it on its own.

Thank you. I truncate the sequence and it worked. But I use the parameter max_length of the method "encode" of the class of Tokenizer , it do not works.

Hi, could you show me how you're using the max_length parameter?

If you use it as such it should truncate your sequences:

from transformers import GPT2Tokenizer

text = "This is a sequence"

tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

x = tokenizer.encode(text, max_length=2)

print(len(x)) # 2

I use max_length is as follows:

model_class, tokenizer_class, pretrained_weights = BertModel, BertTokenizer, 'bert-base-uncased'

tokenizer = tokenizer_class.from_pretrained(pretrained_weights)

model = model_class.from_pretrained(pretrained_weights)

text = "After a morning of Thrift Store hunting, a friend and I were thinking of lunch, and he ... " #this sentence is very long, Its length is more than 512. In order to save space, not all of them are shown here

# text = tokenizer.tokenize(text)

# if len(text) > 512:

# text = text[:512]

#text = "After a morning of Thrift Store hunting, a friend and I were thinking of lunch"

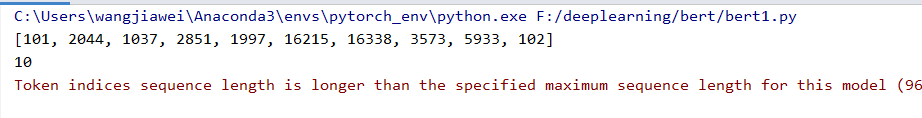

text = tokenizer.encode(text, add_special_tokens=True, max_length=10)

print(text)

print(len(text))

It works. I previously set max_length to 512, just output the encoded list, so I didn't notice that the length has changed. But the warning still occurs:

Glad it works! Indeed, we should do something about this warning, it shouldn't appear when a max length is specified.

Thank you very much!

What if I need the sequence length to be longer than 512 (e.g., to retrieve the answer in a QA model)?

Hi @LukasMut this question might be better suited to Stack Overflow.

I have the same doubt as @LukasMut . Did you open a Stack Overflow question?

did you got the solution @LukasMut @paulogaspar

Not really. All solutions point to using only the 512 tokens, and choosing what to place in those tokens (for example, picking which part of the text)

Having the same issue @paulogaspar any update on this? I'm having sequences with more than 512 tokens.

Having the same issue @paulogaspar any update on this? I'm having sequences with more than 512 tokens.

Take a look at my last answer, that's the point I'm at.

Also dealing with this issue and thought I'd post what's going through my head, correct me if I'm wrong but I think the maximum sequence length is determined when the model is first trained? In which case training a model with a larger sequence length is the solution? And I'm wondering if fine-tuning can be used to increase the sequence length.

Same question. What to do if text is long?

That's a research questions guys

This might help people looking for further details https://github.com/pytorch/fairseq/issues/1685 & https://github.com/google-research/bert/issues/27

Hi,

The question i have is almost the same.

Bert has some configuration options. As far as i know about transformers, it's not constrain by sequence length at all.

Can I change the config to have more than 512 tokens ?

Most transformers are unfortunately completely constrained, which is the case for BERT (512 tokens max).

If you want to use transformers without being limited to a sequence length, you should take a look at Transformer-XL or XLNet.

@LysandreJik

I thought XLNet has a max length of 512 as well.

Transformer-XL is still is a mystery to me because it seems like the length is still 512 for downstream tasks, unlike language modeling (pre-training).

Please let me know if my understanding is incorrect.

Thanks!

XLNet was pre-trained/fine-tuned with a maximum length of 512, indeed. However, the model is not limited to such a length:

from transformers import XLNetLMHeadModel, XLNetTokenizer

tokenizer = XLNetTokenizer.from_pretrained("xlnet-base-cased")

model = XLNetLMHeadModel.from_pretrained("xlnet-base-cased")

encoded = tokenizer.encode_plus("Alright, let's do this" * 500, return_tensors="pt")

print(encoded["input_ids"].shape) # torch.Size([1, 3503])

print(model(**encoded)[0].shape) # torch.Size([1, 3503, 32000])

The model is not limited to a specific length because it doesn't leverage absolute positional embeddings, instead leveraging the same relative positional embeddings that Transformer-XL used. Please note that since the model isn't trained on larger sequences thant 512, no results are guaranteed on larger sequences, even if the model can still handle them.

I was going to try this out, but after reading this out few times now, I still have no idea how I'm supposed to truncate the token stream for the pipeline.

I got some results by combining @cswangjiawei 's advice of running the tokenizer, but it returns a truncated sequence that is slightly longer than the limit I set.

Otherwise the results are good, although they come out slow and I may have to figure how to activate cuda on py torch.

Update: There is an article that shows how to run the summarizer on large texts, I got it to work with this one: https://www.thepythoncode.com/article/text-summarization-using-huggingface-transformers-python

What if I need the sequence length to be longer than 512 (e.g., to retrieve the answer in a QA model)?

You can go for BigBird as it takes a input token size of 4096 tokens(but can take upto 16K size)

Let me help with what I have understood. Correct me if I am wrong. The reason you can't use sequence length more than max_length is because of the positional encoding. Let's have a look at the positional encoding in the original Transformer paper

So the _pos_ in the formula is the index of the words, and they have set 10000 as the scale to cover the usual length of most of the sentences. Now, if you look at the visualization of these functions, you will notice until the _pos_ value is less than 10000 we will get a unique temporal representation of each word. But once it's length is more than 10000 representation won't be unique for each word (e.g. 1st and 10001 will have the same representation). So if max_length = scale (512 as discussed here) and sequence_length > max_length positional encoding will not work.

I didn't check what scale value (you can check it by yourself) BERT uses, but probably this may be the reason.

.

What if I need the sequence length to be longer than 512 (e.g., to retrieve the answer in a QA model)?

You can go for BigBird as it takes a input token size of 4096 tokens(but can take upto 16K size)

The code and weights for BigBird haven't been published yet, am I right?

What if I need the sequence length to be longer than 512 (e.g., to retrieve the answer in a QA model)?

You can go for BigBird as it takes a input token size of 4096 tokens(but can take upto 16K size)

The code and weights for BigBird haven't been published yet, am I right?

Yes and in that case you have Longformers, Reformers which can handle the long sequences.

My model was pretrained with max_seq_len of 128 and max_posi_embeddings of 512 using the original BERT code release.

I am having the same problem here. I have tried a couple of fixes, but none of them is working for me.

export MAX_LENGTH=120

export MODEL="./bert-1.5M"

python3 preprocess.py ./data/train.txt $MODEL $MAX_LENGTH > train.txt

python3 preprocess.py ./data/dev.txt $MODEL $MAX_LENGTH > dev.txt

python3 preprocess.py ./data/test.txt $MODEL $MAX_LENGTH > test.txt

```export MAX_LENGTH=512 # I have tried 128, 256

I am running run_ner_old.py file.

Can anyone help.

Most helpful comment

What if I need the sequence length to be longer than 512 (e.g., to retrieve the answer in a QA model)?