Transformers: Unexpectedly preprocess when multi-gpu using

When run example/run_squad with more than one gpu, the preprocessor cannot work as expected. For example, the unique_id will not be a serial numbers, then keyerror occurs when writing the result to json file.

I do not check there are others unexpectedly behavior or not yet.

I'll update the issue after checking them.

All 8 comments

Why do you think the unique_id is not serial?

Each process should convert ALL the dataset.

Only the PyTorch dataset should be split among processes.

By the way it would be cleaner if the other processes wait for the first process to pre-process the dataset before using the cache so the dataset is only converted once and not several time in parrallel (waste of compute). I'll add this option.

@thomwolf Hi, thanks for your reply

For example, the unique_id should [1000000, 1000001, 1000002, ...]

However, with multi-process I got [1000000, 100001, 1000004, ....]

I did not check what cause the error.

As a result, when predict the answer with multiple gpu, the key error happened.

Had the same problema: a KeyError 1000000 after doing a distributed training. Does anyone know how to fix it?

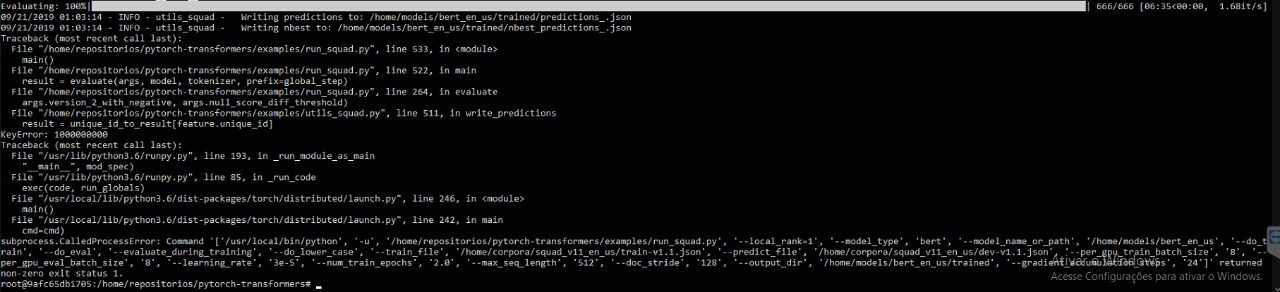

@ayrtondenner Have the same problem as you when distributed training, after evaluation completes and in writing predictions:

_" File "/media/dn/dssd/nlp/transformers/examples/utils_squad.py", line 511, in write_predictions

result = unique_id_to_result[feature.unique_id]

KeyError: 1000000000"_

Setup: transformers 2.0.0; pytorch 1.2.0; python 3.7.4; NVIDIA 1080Ti x 2

_" python -m torch.distributed.launch --nproc_per_node=2 ./run_squad.py \ "_

Data parallel with the otherwise same shell script works fine producing the results below, but of course takes longer with more limited GPU memory for batch sizes.

Results:

{

"exact": 81.06906338694418,

"f1": 88.57343698391432,

"total": 10570,

"HasAns_exact": 81.06906338694418,

"HasAns_f1": 88.57343698391432,

"HasAns_total": 10570

}

Data parallel shell script:

python ./run_squad.py \

--model_type bert \

--model_name_or_path bert-base-uncased \

--do_train \

--do_eval \

--do_lower_case \

--train_file=${SQUAD_DIR}/train-v1.1.json \

--predict_file=${SQUAD_DIR}/dev-v1.1.json \

--per_gpu_eval_batch_size=8 \

--per_gpu_train_batch_size=8 \

--gradient_accumulation_steps=1 \

--learning_rate=3e-5 \

--num_train_epochs=2 \

--max_seq_length=384 \

--doc_stride=128 \

--adam_epsilon=1e-6 \

--save_steps=2000 \

--output_dir=./runs/bert_base_squad1_ft_2

The problem is that evaluate() distributes the evaluation under DDP:

https://github.com/huggingface/transformers/blob/079bfb32fba4f2b39d344ca7af88d79a3ff27c7c/examples/run_squad.py#L216

Meaning each process collects a subset of all_results

but then write_predictions() expects all_results to have all the results 😮

Specifically, unique_id_to_result only maps a subset of ids

https://github.com/huggingface/transformers/blob/079bfb32fba4f2b39d344ca7af88d79a3ff27c7c/examples/utils_squad.py#L489-L491

but the code expects an entry for every feature

https://github.com/huggingface/transformers/blob/079bfb32fba4f2b39d344ca7af88d79a3ff27c7c/examples/utils_squad.py#L510-L511

For DDP evaluate to work all_results needs to be collected from all the threads. Otherwise don't allow args.do_eval and args.local_rank != -1 at the same time.

edit: or get rid of the DistributedSampler and use SequentialSampler in all cases.

Make sense, do you have a fix in mind @immawatson? Happy to welcome a PR that would fix that.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

The problem is that

evaluate()distributes the evaluation under DDP:

https://github.com/huggingface/transformers/blob/079bfb32fba4f2b39d344ca7af88d79a3ff27c7c/examples/run_squad.py#L216Meaning each process collects a subset of

all_results

but thenwrite_predictions()expectsall_resultsto have _all the results_ 😮

Specifically,unique_id_to_resultonly maps a subset of ids

https://github.com/huggingface/transformers/blob/079bfb32fba4f2b39d344ca7af88d79a3ff27c7c/examples/utils_squad.py#L489-L491but the code expects an entry for every feature

https://github.com/huggingface/transformers/blob/079bfb32fba4f2b39d344ca7af88d79a3ff27c7c/examples/utils_squad.py#L510-L511For DDP evaluate to work

all_resultsneeds to be collected from all the threads. Otherwise don't allowargs.do_evalandargs.local_rank != -1at the same time.

edit: or get rid of theDistributedSamplerand useSequentialSamplerin all cases.

That didn't work for me.

Most helpful comment

The problem is that

evaluate()distributes the evaluation under DDP:https://github.com/huggingface/transformers/blob/079bfb32fba4f2b39d344ca7af88d79a3ff27c7c/examples/run_squad.py#L216

Meaning each process collects a subset of

all_resultsbut then

write_predictions()expectsall_resultsto have all the results 😮Specifically,

unique_id_to_resultonly maps a subset of idshttps://github.com/huggingface/transformers/blob/079bfb32fba4f2b39d344ca7af88d79a3ff27c7c/examples/utils_squad.py#L489-L491

but the code expects an entry for every feature

https://github.com/huggingface/transformers/blob/079bfb32fba4f2b39d344ca7af88d79a3ff27c7c/examples/utils_squad.py#L510-L511

For DDP evaluate to work

all_resultsneeds to be collected from all the threads. Otherwise don't allowargs.do_evalandargs.local_rank != -1at the same time.edit: or get rid of the

DistributedSamplerand useSequentialSamplerin all cases.