Transformers: Error when using convert_tf_checkpoint_to_pytorch

Hi,

We are using your brilliant project to work on a Japanese BERT model with SentencePiece.

https://github.com/yoheikikuta/bert-japanese

We are trying to use the conversion script to convert the TF BERT model below to PyTorch.

https://drive.google.com/drive/folders/1Zsm9DD40lrUVu6iAnIuTH2ODIkh-WM-O

However, we get the following error:

```
Traceback (most recent call last):
  File "/Users/weicheng.zhu/PycharmProjects/pytorch-pretrained-BERT-master/pytorch_pretrained_bert/convert_tf_checkpoint_to_pytorch.py", line 66, in <module>
    args.pytorch_dump_path)
  File "/Users/weicheng.zhu/PycharmProjects/pytorch-pretrained-BERT-master/pytorch_pretrained_bert/convert_tf_checkpoint_to_pytorch.py", line 37, in convert_tf_checkpoint_to_pytorch
    load_tf_weights_in_bert(model, tf_checkpoint_path)
  File "/Users/weicheng.zhu/PycharmProjects/pytorch-pretrained-BERT-master/pytorch_pretrained_bert/modeling.py", line 95, in load_tf_weights_in_bert
    pointer = getattr(pointer, l[0])
  File "/usr/local/lib/python3.6/site-packages/torch/nn/modules/module.py", line 535, in __getattr__
    type(self).__name__, name))
AttributeError: 'BertForPreTraining' object has no attribute 'global_step'
```

Could you kindly help us figure out how to avoid this?

Thank you so much!


I resolved this issue by adding `global_step` to the skip list. I think `global_step` is not required for using the pretrained model. Please correct me if I am wrong.

Does PyTorch require the TF checkpoint to be converted? I am finding it hard to load the checkpoint I generated. By the way, is it safe to convert a TF checkpoint?


Can you explain what the skip list is?

In the file `modeling.py`, add it to the list in this line:

```python
if any(n in ["adam_v", "adam_m"] for n in name):
```
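To illustrate why this works, here is a simplified sketch of the name-walking step the traceback points at (`pointer = getattr(pointer, l[0])` in `load_tf_weights_in_bert`). It is not the library's code: it assumes a toy model built from `types.SimpleNamespace`, and the names `walk`, `SKIP` and `demo_model` are hypothetical. Each TF variable name is split on `/` and resolved one attribute at a time; a component such as `global_step` has no matching attribute on the model, so `getattr` raises `AttributeError` unless the name is filtered out first.

```python
from types import SimpleNamespace

# Name components to skip before walking the model. "adam_v"/"adam_m" are
# the entries already in modeling.py; "global_step" is the addition
# discussed in this thread.
SKIP = ("adam_v", "adam_m", "global_step")


def walk(model, tf_name, skip=SKIP):
    """Resolve a TF variable name such as 'bert/pooler/dense/kernel'
    to an attribute of `model`, or return None if it should be skipped.

    Simplified sketch: no 'kernel'/'gamma' -> 'weight' mapping and no
    handling of numbered scopes like 'layer_0'.
    """
    parts = tf_name.split("/")
    if any(n in skip for n in parts):
        return None  # optimizer or bookkeeping state, not a model weight
    pointer = model
    for part in parts:
        pointer = getattr(pointer, part)  # AttributeError if missing
    return pointer


# Hypothetical toy stand-in for a model; the real one is a torch.nn.Module.
demo_model = SimpleNamespace(
    bert=SimpleNamespace(pooler=SimpleNamespace(dense=SimpleNamespace(kernel="W")))
)

if __name__ == "__main__":
    for name in ["bert/pooler/dense/kernel",
                 "bert/pooler/dense/kernel/adam_m",
                 "global_step"]:
        print(name, "->", walk(demo_model, name))
    try:
        # Original skip list, without "global_step":
        walk(demo_model, "global_step", skip=("adam_v", "adam_m"))
    except AttributeError as err:
        print("AttributeError:", err)
```

The real loader does more per component, but the skip check runs before the walk in the same way, so adding `"global_step"` to the list means the failing `getattr` is never reached.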

Is it possible to load a TensorFlow checkpoint using PyTorch and fine-tune it?

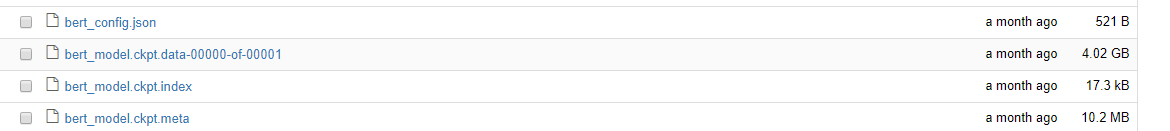

I can load pytorch_model.bin, but I am finding it hard to load my TF checkpoint. The documentation says it can load an archive with bert_config.json and model.chkpt, but I have bert_model_ckpt.data-0000-of-00001 in my TF checkpoint folder, so I am confused. Is there a specific example of how to do this?

There is a script to convert a TF checkpoint to PyTorch: https://github.com/huggingface/pytorch-pretrained-BERT/blob/master/pytorch_pretrained_bert/convert_tf_checkpoint_to_pytorch.py
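TF checkpoints are saved as several files that share a common prefix (for example `bert_model.ckpt.index`, `bert_model.ckpt.meta` and `bert_model.ckpt.data-00000-of-00001`), and TF's checkpoint readers expect that prefix rather than one of the individual files. The sketch below assumes the script takes `--tf_checkpoint_path`, `--bert_config_file` and `--pytorch_dump_path` arguments (the traceback above shows `args.pytorch_dump_path` being read) and that the package is importable as `pytorch_pretrained_bert`; the helper names and example paths are hypothetical.

```python
import re
import sys

# TF checkpoints share one prefix across several files, e.g.
#   bert_model.ckpt.index, bert_model.ckpt.meta,
#   bert_model.ckpt.data-00000-of-00001
_CKPT_SUFFIX = re.compile(r"\.(index|meta|data-\d+-of-\d+)$")


def checkpoint_prefix(path):
    """Strip a TF checkpoint file suffix, leaving the shared prefix."""
    return _CKPT_SUFFIX.sub("", path)


def build_convert_argv(ckpt_path, config_path, dump_path):
    """Hypothetical helper: build the command line for the conversion script.

    Assumes the package is importable as pytorch_pretrained_bert.
    """
    return [
        sys.executable, "-m",
        "pytorch_pretrained_bert.convert_tf_checkpoint_to_pytorch",
        "--tf_checkpoint_path", checkpoint_prefix(ckpt_path),
        "--bert_config_file", config_path,
        "--pytorch_dump_path", dump_path,
    ]


if __name__ == "__main__":
    # Placeholder paths: replace with your own checkpoint folder.
    argv = build_convert_argv(
        "model_dir/bert_model.ckpt.data-00000-of-00001",
        "model_dir/bert_config.json",
        "model_dir/pytorch_model.bin",
    )
    print(" ".join(argv))
```

The command can then be run with `subprocess.run(argv, check=True)`. Using `-m` makes Python load whichever copy of the package is installed, which matters for the installed-vs-git-checkout fix discussed later in this thread.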


I added global_step to the skip list but am still getting the same issue.

@naga-dsalgo Is it fixed? I also added "global_step" to the list, but I still get the error.

Yes, it is fixed for me. I edited the installed version, not the downloaded git version.

> On Tue, Apr 2, 2019 at 4:37 AM Shivam Akhauri wrote:
>
> @naga-dsalgo Is it fixed? I too added "global_step" to the list. But still get the error

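The fix only took effect after editing the installed copy rather than the downloaded git checkout. One way to see which `modeling.py` Python will actually load is to ask the import system for the module's origin. The sketch below uses the standard-library `importlib.util.find_spec`; the helper name `module_file` is hypothetical.

```python
import importlib.util


def module_file(name):
    """Return the file Python would import for `name`, or None if not found.

    Handy for checking whether an installed package or a git checkout
    is the copy that actually gets imported.
    """
    try:
        spec = importlib.util.find_spec(name)
    except ModuleNotFoundError:
        # Raised when a parent package of a dotted name is missing.
        return None
    return spec.origin if spec is not None else None


if __name__ == "__main__":
    # Prints None if the package is not installed in this environment.
    print(module_file("pytorch_pretrained_bert.modeling"))
```

If the printed path points into `site-packages`, edits to a separate git checkout have no effect until that checkout is installed (for example with `pip install -e .`) or placed first on `sys.path`.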

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.
