Three.js: scene.background CubeTexture gets culled by frustum

I have six identical 512x512 images, which I set as scene.background like this:

```javascript
import { Scene, CubeTextureLoader } from 'three';

const createScene = () => {
  const scene = new Scene();
  const loader = new CubeTextureLoader();

  // The same image is used for all six cube faces (+x, -x, +y, -y, +z, -z).
  const texture = loader.load([
    'space3d.jpg', 'space3d.jpg', 'space3d.jpg',
    'space3d.jpg', 'space3d.jpg', 'space3d.jpg',
  ]);

  scene.background = texture;
  return scene;
};
```

I use a PerspectiveCamera.

If I set the far plane to less than about 200, the background is shown correctly.

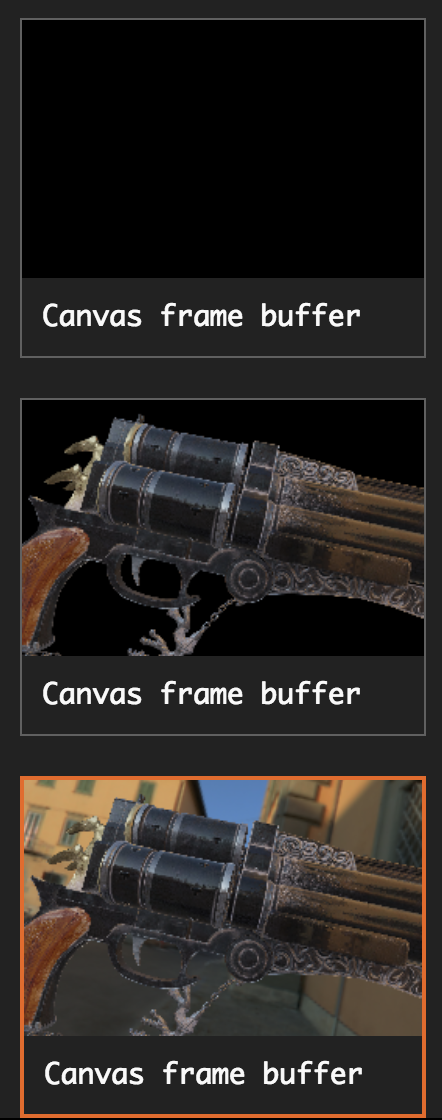

If I set the far plane between 200 and 600, the background is partially clipped by the frustum in a strange way, like this:

(black vertical part is the edge between two background planes).

With the far plane above 600, the frustum clips it completely and I see a black background.

Why is that so?


Any chance you could show the problem with a new fiddle? 😇

https://jsfiddle.net/jhd5mqvp/

The camera's far plane is set to 1300, and for some reason the frustum (?) clips the background box.

Try changing the far plane:

At 1600, for example, the background is clipped entirely.

At 600, for example, it is not clipped.

Strangely, when I open the fiddle in Chrome on my Android phone, I see no issue. Do you see the culling in Chrome on a Windows desktop?

When I use Chrome (61.0.3163.79) or Firefox (55.0.3) on my iMac, I see no errors.

Chrome 60.0.3112.101 (Official Build, 32-bit) and Chrome 61.0.3163.79 (Official Build, 32-bit) on Windows 7 (32-bit).

In this fiddle it cuts the background like this:

And when I set the camera's far plane to 600, it is not clipped.

In IE 11, Firefox 52 and Firefox 55 on Windows (32-bit), I don't see the issue.

/cc @kenrussell

One thing to note is that backgrounds now rely on polygonOffset and polygonOffsetUnits. Maybe it's not well supported?

https://github.com/mrdoob/three.js/blob/dev/src/renderers/webgl/WebGLBackground.js#L65-L74

The fiddle works fine on my Windows workstation with an AMD GPU, both in Chrome Stable and Canary, and in Stable also with --use-angle=d3d9. My about:gpu is here: https://pastebin.com/5Q2cMpxn . Please provide yours from a machine that reproduces the problem. Thanks.

polygonOffset is only lightly tested in the WebGL conformance tests, but it's ancient functionality, so I'm surprised there's a big behavioral difference on some GPUs.

As I said above, on the same machine (ATI Mobility Radeon HD 5650, Windows 7 32-bit) in IE 11 and FF 52 and FF 55 I don't see the issue.

I don't know how to produce an about:gpu report like yours.

Please just navigate to about:gpu in a new tab, copy-paste the entire page, and put in a pastebin. Thanks.

https://pastebin.com/TeUcupm3 is this enough?

Yes. Your machine is falling back to the SwiftShader software renderer. I can reproduce the behavior with --use-gl=swiftshader. @c0d1f1ed and @sugoi1 work on SwiftShader so perhaps they can help. You could also file a bug on crbug.com/ at this point, link to the test case, provide your about:gpu info from above, and email me the bug ID and I'll ask them to take a look.

Thanks for reporting this. It looks like in SwiftShader we're not properly clamping the depth values after applying the polygon offset. I'll have a fix shortly.

Filed https://bugs.chromium.org/p/swiftshader/issues/detail?id=82 to track this on our end.

Thanks @c0d1f1ed . Do you have any suggestion on how we could write a WebGL conformance test for this?

Quick update: despite now passing all of the polygon offset tests in the dEQP test suite, the sample (https://jsfiddle.net/jhd5mqvp/) is still rendered without the background in the latest Chrome Canary. Sorry for not verifying the change sooner. I'll look into what's wrong.

Finally had another look at this. I noticed that the 'units' parameter passed to polygonOffset() is 13000.0. That's an enormous value. Note that 1.0 is defined by the OpenGL spec as being sufficient to create discernible depth values, so typically that is enough.

@mrdoob, I don't understand the reasoning behind the code at https://github.com/mrdoob/three.js/blob/dev/src/renderers/webgl/WebGLBackground.js#L65-L74 . The camera's far plane position shouldn't affect the amount of polygon offset you want to apply, since the near and far planes are mapped to logical 0.0 and 1.0 values in the depth buffer, respectively.
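To make the depth-range point concrete: with a standard OpenGL-style perspective projection and the default depth range [0, 1], a point at eye-space distance z (with near <= z <= far) lands at window depth f/(f - n) * (1 - n/z). The sketch below evaluates that mapping; near = 0.1 (the three.js PerspectiveCamera default) is an assumption, since the fiddle's near value isn't stated here, and `windowDepth` is a hypothetical helper name.

```javascript
// Window-space depth for a point at eye-space distance `z` (positive, near <= z <= far),
// assuming a standard OpenGL perspective projection and a depth range of [0, 1].
function windowDepth(z, near, far) {
  return (far / (far - near)) * (1 - near / z);
}

const near = 0.1; // assumption: three.js default PerspectiveCamera near plane
const far = 1300; // far plane used in the fiddle

for (const z of [near, 1, 10, 100, far]) {
  console.log(`z = ${z}: depth = ${windowDepth(z, near, far).toFixed(6)}`);
}
```

Whatever the far value, the near plane maps to 0.0 and the far plane to 1.0; changing far only redistributes the distances in between.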

Which makes me question why polygonOffset() is used in the first place. If the intention is to use a cube map as the background, why not render it with depth testing and depth writing disabled?

I suspect that your code might only be working 'accidentally' on (most) GPUs due to either the actual depth resolution that gets selected, or because the drivers clamp the values to something smaller.

Edit: Ok, I think I see what the intent is. You want to push the depth values of the cube background far away so it gets clamped against the far frustum plane. Unfortunately I don't think polygonOffset() can be used reliably for this. The 'units' value is multiplied by a tiny epsilon to offset the depth values, intended to render coplanar primitives just in front or behind one another, without any significant gap. It is highly implementation dependent how large or small this epsilon value is. OpenGL only provides some basic guarantees for the intended use case of creating sufficiently distinguishable depth values to prevent 'z-fighting'.
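The offset described above follows the OpenGL/WebGL formula o = m * factor + r * units, where m is the polygon's maximum depth slope and r is the implementation-defined minimum resolvable depth difference; the result is added to each fragment's depth, which is then clamped to [0, 1] (the clamp SwiftShader was reported to be missing). The sketch below models this in plain JavaScript. The value r = 2^-24, as for a 24-bit fixed-point depth buffer, is an assumption for illustration only, since real r is implementation dependent.

```javascript
// Model of the polygon offset applied to a fragment's window-space depth.
// `maxSlope` stands for m and `r` for the minimum resolvable difference;
// real drivers derive both themselves, here they are plain inputs.
function offsetDepth(depth, { factor = 0, units = 0, maxSlope = 0, r }) {
  const offset = maxSlope * factor + r * units;
  // Depth is clamped to [0, 1] after the offset is added.
  return Math.min(1, Math.max(0, depth + offset));
}

const r24 = 2 ** -24; // assumption: 24-bit fixed-point depth buffer

// units = 1: a barely distinguishable nudge, the intended use (avoiding z-fighting).
console.log(offsetDepth(0.5, { units: 1, r: r24 }));
// units = 13000, the value reported in this thread: still less than 0.001 in depth.
console.log(offsetDepth(0.5, { units: 13000, r: r24 }));
// Only depths already very close to 1.0 get pushed onto the far plane.
console.log(offsetDepth(0.9999, { units: 13000, r: r24 }));
```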

Also note that the offset is added to the depth value after homogeneous division. So a lot of the camera space depth gets mapped close to 1.0. That is why 13000 epsilons, which is typically still a small value, happens to be enough on most GPU drivers to get it to be clamped against 1.0. It may not suffice when a higher depth resolution or a 'complementary' depth buffer is used though.
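A rough way to see why this works "accidentally": with window depth f/(f - n) * (1 - n/z) and offset o = r * units, solving depth(z) + o >= 1 gives z >= n*f / (n + o*(f - n)), the eye-space distance beyond which the offset pushes fragments onto 1.0. The sketch below evaluates that threshold for two illustrative values of r; near = 0.1 is an assumed value, and both r values are hypothetical stand-ins for different depth precisions.

```javascript
// Smallest eye-space distance z at which depth(z) + o reaches 1.0 (and is clamped),
// where depth(z) = f/(f - n) * (1 - n/z) and o = r * units.
// Solving depth(z) + o >= 1 for z gives z >= n*f / (n + o*(f - n)).
function clampDistance(near, far, units, r) {
  const o = r * units;
  return (near * far) / (near + o * (far - near));
}

const near = 0.1; // assumption: three.js default near plane
const far = 1300; // far plane from the fiddle
const units = 13000;

// r depends on the implementation and depth format; these two values are illustrative.
for (const [label, r] of [["r = 2^-24", 2 ** -24], ["r = 2^-32", 2 ** -32]]) {
  const z = clampDistance(near, far, units, r);
  console.log(`${label}: clamped to 1.0 beyond z = ${z.toFixed(1)}`);
}
```

With the coarser r the offset reaches 1.0 roughly 117 units away, while with the finer r it only does so about 1250 units away, close to the far plane itself, which matches the warning that higher depth resolution can break the trick.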

A better way to get your depth values to be 1.0 is to just set gl_Position.z to 1.0 at the end of your vertex shader. But I still question if you even need depth test/write enabled at all.

I agree this is likely an abuse of polygonOffset, and another solution will have to be found.

For context, see the discussion here.

@c0d1f1ed

> A better way to get your depth values to be 1.0 is to just set gl_Position.z to 1.0 at the end of your vertex shader. But I still question if you even need depth test/write enabled at all.

What I'm trying to do is render the background last and avoid painting pixels twice.
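The trade-off between the two approaches discussed here can be shown with a toy software model: drawing the background first with depth test and write disabled fills every pixel and then overdraws the covered ones, while drawing it last at depth 1.0 with a LEQUAL test only fills pixels that nothing nearer has covered. This is a simplified illustration using a hypothetical 1-D framebuffer and made-up fragments, not how three.js or a GPU is actually implemented.

```javascript
// Toy 1-D framebuffer that counts color writes for two ways of drawing a background.
// `scene` lists opaque fragments as { x, depth } with depth < 1.0 (hypothetical data).
function render(width, scene, backgroundLast) {
  const color = new Array(width).fill(null);
  const depth = new Array(width).fill(1.0); // depth buffer cleared to 1.0
  let writes = 0;

  const drawBackground = (useDepthTest) => {
    for (let x = 0; x < width; x++) {
      // Background fragments sit at depth 1.0; with LEQUAL they fail
      // wherever something nearer has already been drawn.
      if (useDepthTest && depth[x] < 1.0) continue;
      color[x] = "bg";
      writes++;
    }
  };

  if (!backgroundLast) drawBackground(false); // depth test and depth write disabled
  for (const { x, depth: z } of scene) {
    if (z <= depth[x]) { color[x] = "obj"; depth[x] = z; writes++; }
  }
  if (backgroundLast) drawBackground(true); // depth 1.0, LEQUAL, no depth write

  return { color, writes };
}

const width = 8;
const scene = [{ x: 2, depth: 0.3 }, { x: 3, depth: 0.3 }, { x: 4, depth: 0.7 }];

const first = render(width, scene, false);
const last = render(width, scene, true);
console.log("background first:", first.writes, "color writes");
console.log("background last: ", last.writes, "color writes");
```

Both orders produce the same image; drawing the background last simply skips the pixels that opaque geometry already covers.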

I agree that I'm probably abusing polygonOffset. I'll try setting gl_Position.z to 1.0. I think I tried that already though...

Doing this seems to work...

```glsl
gl_Position.z = gl_Position.w;
```
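Why `gl_Position.z = gl_Position.w` works: after the vertex shader, clip-space z is divided by w, so z = w gives NDC z = 1.0, which maps to window depth 1.0 (the far plane) with the default depth range, regardless of the far value or any polygon offset. Such vertices are not clipped, because the clip volume keeps -w <= z <= w inclusive. A literal `gl_Position.z = 1.0` would instead give NDC z = 1/w, which is generally not the far plane. The sketch below only does that arithmetic in JavaScript; the w value is an arbitrary example.

```javascript
// Perspective division: NDC z is clip-space z divided by clip-space w.
function ndcZ(clip) {
  return clip.z / clip.w;
}

// Window depth for the default depth range [0, 1].
function windowDepthFromNdc(zNdc) {
  return (zNdc + 1) / 2;
}

const w = 250; // arbitrary example clip-space w for a background vertex
const withZEqualsW = { z: w, w };     // gl_Position.z = gl_Position.w;
const withZEqualsOne = { z: 1.0, w }; // gl_Position.z = 1.0;

console.log(windowDepthFromNdc(ndcZ(withZEqualsW)));   // 1: exactly on the far plane
console.log(windowDepthFromNdc(ndcZ(withZEqualsOne))); // about 0.502, and it changes with w
```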

🤔

Looks good to me.
