Three.js: documentation on gamma correction incorrect?

Hi,

the documentation about WebGLRenderer states that:

.gammaInput If set, then it expects that all textures and colors are premultiplied gamma. Default is false.

.gammaOutput If set, then it expects that all textures and colors need to be outputted in premultiplied gamma. Default is false.

My understanding by examining the code is instead that:

- if gammaInput is true, textures in material are considered sRGB (e.g. diffuseTexture), so the fetched value is linearised before using it in calculations; however, colours (e.g. diffuse color) that are passed to the shader are considered as in linear space.

- if gammaInput is false, instead, textures and colors are considered as linear

- if gammaOutput is true, the final color computed by material shaders is gamma-encoded, otherwise not.
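
The behaviour described in the list above can be sketched in plain JavaScript for a single color channel. This is an illustration only, not the three.js shader code: `srgbToLinear`, `linearToSrgb` and `shade` are hypothetical names, and the standard sRGB transfer functions are assumed here, whereas the actual shaders may use a simpler power curve based on `renderer.gammaFactor`.

```javascript
// Standard sRGB decode for one channel in [0, 1] (assumed transfer function).
function srgbToLinear(c) {
  return c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Standard sRGB encode for one channel in [0, 1].
function linearToSrgb(c) {
  return c <= 0.0031308 ? c * 12.92 : 1.055 * Math.pow(c, 1 / 2.4) - 0.055;
}

// Hypothetical one-channel "shader": texel is the stored texture value,
// color is the material color, which is always treated as linear.
function shade(texel, color, gammaInput, gammaOutput) {
  const linearTexel = gammaInput ? srgbToLinear(texel) : texel;
  const linearResult = linearTexel * color; // the lighting maths happen in linear space
  return gammaOutput ? linearToSrgb(linearResult) : linearResult;
}

console.log(shade(0.5, 1.0, true, true));  // decode then encode: close to the input
console.log(shade(0.5, 1.0, true, false)); // decoded but never re-encoded: darker
```

With both flags set the texel survives a round trip; with only gammaInput set the result stays in linear space and looks too dark on an sRGB display.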

If my understanding is correct, I think the documentation is misleading.

In any case, using the MeshStandardMaterial, we should always use gammaOutput=true, right? Otherwise the display will show a wrong color - or are there any other gamma operations outside the shaders?

Thanks!

All 16 comments

Yes, the documentation can be improved. Thanks for tracking this down.

renderer.gammaInput and renderer.gammaOutput were implemented early on. Later, texture.encoding was added. However, for backward compatibility, gammaInput and gammaOutput remained.

I think some changes are in order. The following is my understanding of where we want to get to, based on past discussions. Most of the following is already in place.

1. renderer.gammaInput should be removed (texture.encoding is sufficient).

2. renderer.gammaOutput should be renamed to renderer.outputEncoding (and default to THREE.sRGBEncoding -- or maybe THREE.LinearEncoding -- that is debatable).

3. The RGB channels of the following maps should be assumed to be in sRGB colorspace if the encoding is undefined (texture.encoding = THREE.sRGBEncoding):

a. material.map
b. material.envMap (except HDR maps -- they need to be decoded, but are in linear space)
c. material.emissiveMap
d. future: material.specularMap (when it is changed to a 3-channel map)

4. Everything else should be assumed to be in linear-RGB colorspace:

- material.color
- material.emissive
- material.specular
- vertex colors
- ambientLight.color
- light.color for directionalLight, pointLight, spotLight, hemisphereLight, areaLight
- alpha channels of color maps
- material.lightMap
- material.specularMap (for now, only as long as it is grayscale)
- material.glossinessMap (future)
- material.alphaMap
- material.bumpMap
- material.normalMap
- material.aoMap
- material.metalnessMap
- material.roughnessMap
- material.displacementMap
- HDR environment maps
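
The proposed defaults can be summarised as a small lookup. This is a sketch only: `SRGB_MAP_SLOTS` and `assumedColorSpace` are hypothetical names, not three.js API, and the slot names are taken from the list above.

```javascript
// Map slots whose RGB channels would be assumed sRGB under the proposal.
const SRGB_MAP_SLOTS = new Set(['map', 'envMap', 'emissiveMap']);

// Hypothetical helper: returns the color space the proposal assumes for a
// material slot. HDR environment maps are the stated exception for envMap.
function assumedColorSpace(slot, { isHDR = false } = {}) {
  if (slot === 'envMap' && isHDR) return 'linear';
  return SRGB_MAP_SLOTS.has(slot) ? 'sRGB' : 'linear';
}

console.log(assumedColorSpace('map'));                     // sRGB
console.log(assumedColorSpace('normalMap'));               // linear
console.log(assumedColorSpace('envMap', { isHDR: true })); // linear
```

Data maps such as normalMap or roughnessMap fall through to linear because their values are not colors and should not be gamma-decoded.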

This is just my understanding, it is not a decree. :)

Corrections/clarifications are welcome.

I'm not sure how we should handle encoding if a texture contains per-channel maps that require different encodings -- or if that is even an issue...

/ping @bhouston

Thanks for your reply, and I fully agree with your proposed changes.

The RGB channels of [map, emissiveMap, and envMap] should be assumed to be in sRGB colorspace if the encoding is undefined: (texture.encoding = THREE.sRGBEncoding)

@WestLangley is this the case today? I assumed the default was THREE.LinearEncoding for all maps based on the THREE.Texture docs, but map_fragment.glsl contains mapTexelToLinear( texelColor ) sure enough... If by setting material.map.encoding = THREE.sRGBEncoding I'm double-converting my color space, that could explain #12554?

EDIT: Ok never mind, looking at the compiled shader it does still appear to assume THREE.LinearEncoding.

I would hope this is not broken...

In your examples, don't you need to set this?

renderer.gammaOutput = true; // should be renderer.outputEncoding = THREE.sRGBEncoding;

@donmccurdy

That's the core color encoding part (if I'm right).

mapTexelToLinear() is

vec4 mapTexelToLinear( vec4 value ) { return sRGBToLinear( value ); }

where map.encoding is sRGBEncoding.

The XXX in return XXXToLinear( ... ) is chosen according to the encoding: for example, sRGB for sRGBEncoding and Linear for LinearEncoding.
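
The switching can be imitated with a small string generator. This is a sketch, not the code three.js uses to build its shaders: `makeTexelDecoder` is a hypothetical name, and only the two encodings mentioned in this thread are handled.

```javascript
// Hypothetical generator for the GLSL wrapper shown above.
// encodingName is the prefix of the GLSL conversion function, e.g. 'sRGB' or 'Linear'.
function makeTexelDecoder(functionName, encodingName) {
  const supported = ['Linear', 'sRGB'];
  if (!supported.includes(encodingName)) {
    throw new Error('Unsupported encoding in this sketch: ' + encodingName);
  }
  return 'vec4 ' + functionName + '( vec4 value ) { return ' +
    encodingName + 'ToLinear( value ); }';
}

console.log(makeTexelDecoder('mapTexelToLinear', 'sRGB'));
console.log(makeTexelDecoder('mapTexelToLinear', 'Linear'));
```

Because the wrapper is baked into the shader source, a different encoding means a different compiled program, which is why the choice is visible in the compiled shader.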

@takahirox Ok yeah that was what confused me.

@WestLangley If I don't set any encoding in GLTFLoader and leave the defaults alone, I see this compiled shader:

vec4 mapTexelToLinear( vec4 value ) {

return LinearToLinear( value );

}

... which I assume means that map is treated as THREE.LinearEncoding by default, as opposed to the "where we want to get to" state you mention. Overriding map.encoding=THREE.sRGBEncoding in the loader changes that function as expected. renderer.gammaOutput=true is not having any effect that I can see.

@donmccurdy Right. "Where we want to get to" has not been implemented, apparently.

renderer.gammaOutput=true is not having any effect that I can see.

I'm surprised to hear that.

In webgl_loader_gltf.html, setting renderer.gammaOutput = true -- prior to rendering the scene -- brightens, as expected.

Changing that value after rendering the scene requires setting mesh.material.needsUpdate = true.

Yup, that requires a GLSL code update.
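
A sketch of flagging every material for recompilation after toggling the setting. `flagMaterialsForUpdate` is a hypothetical helper; it assumes `root` offers a `traverse( callback )` method, as three.js scene objects do, and that an object's `material` may be a single material or an array.

```javascript
// Hypothetical helper: marks every material under root as needing an update
// and returns how many materials were flagged.
function flagMaterialsForUpdate(root) {
  let count = 0;
  root.traverse(function (object) {
    if (!object.material) return; // lights, groups, cameras, etc.
    const materials = Array.isArray(object.material)
      ? object.material
      : [object.material];
    for (const material of materials) {
      material.needsUpdate = true; // ask the renderer to rebuild this material's program
      count++;
    }
  });
  return count;
}

// Usage, assuming an existing renderer and scene:
// renderer.gammaOutput = true;
// flagMaterialsForUpdate(scene);
```

Materials shared between meshes are simply flagged more than once, which is harmless.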

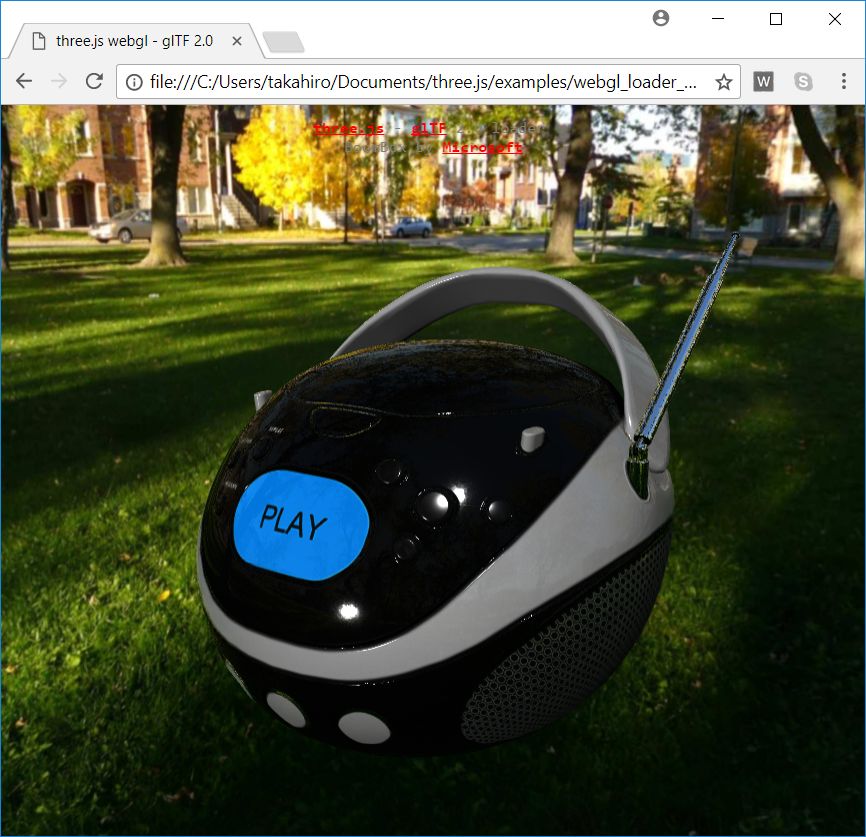

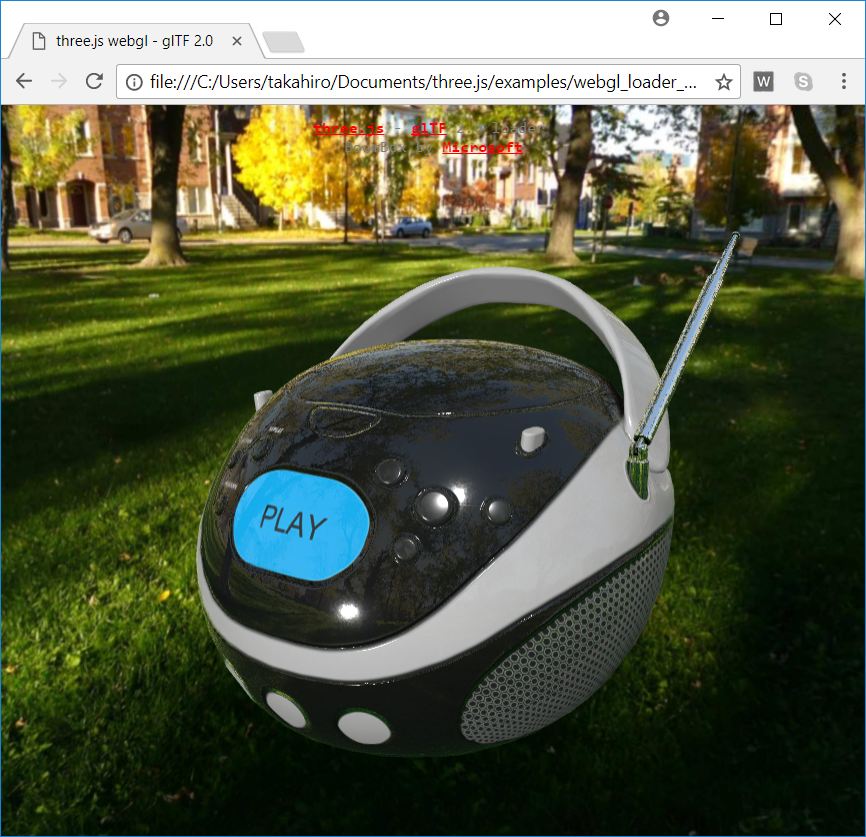

renderer.gammaOutput = false; (current)

renderer.gammaOutput = true; (current)

gammaOutput = true; is lighter.

Changing that value after rendering the scene requires setting mesh.material.needsUpdate = true.

That's exactly what I was missing, thanks!

Even with material.needsUpdate=true, though, renderer.gammaFactor cannot be changed after the scene has rendered?

For my understanding, then: setting gammaOutput=true is recommended if the input textures are in sRGB color space? And, despite the fact that you _can_ specify colorspace on a per-texture basis, it's best not to mix sRGB and linear diffuse maps, because gammaOutput applies to the final image as a whole? (a link to an article I should read would also be accepted in place of answers here... 😅)

setting gammaOutput=true is recommended if the input textures are in sRGB color space?

Those are separate issues. Theoretically, you should gamma-correct (compensate) the output to match the gamma profile of your monitor. Google 'gamma correction'.

A reason to output in linear space would be, for example, if you are rendering to a texture for further processing.

it's best not to mix sRGB and linear diffuse maps

Yes, doing so would be atypical.

Those are separate issues. Theoretically, you should gamma-correct (compensate) the output to match the gamma profile of your monitor. Google 'gamma correction'.

Ok, this makes sense. So from your comment above:

renderer.gammaOutput should be renamed to renderer.outputEncoding (and default to THREE.sRGBEncoding -- or maybe THREE.LinearEncoding -- that is debatable)

... Assuming there's no post-processing, are there other situations where I would want linear output? Or why would sRGB be debatable as the default output format, other than backwards-compatibility?

Assuming there's no post-processing, are there other situations where I would want linear output?

If you are rendering to the screen then you should probably use sRGB outputEncoding.* If you are not rendering to the screen, then you need to think through your use case and you can usually derive what the right answer is.

*The exception is that when you are writing to the screen when using post processing you may want to write with linear encoding or you may end up applying the sRGB transform multiple times.
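
To see why the transform must be applied only once, the sketch below encodes a linear value once and then a second time. `linearToSrgb` is a hypothetical helper implementing the standard sRGB encode for one channel; it is not taken from three.js.

```javascript
// Standard sRGB encode for one channel in [0, 1] (assumed transfer function).
function linearToSrgb(c) {
  return c <= 0.0031308 ? c * 12.92 : 1.055 * Math.pow(c, 1 / 2.4) - 0.055;
}

const linearValue = 0.214; // roughly the linear value of sRGB mid-grey
const encodedOnce = linearToSrgb(linearValue);  // what the screen should receive
const encodedTwice = linearToSrgb(encodedOnce); // a pass re-encoding already encoded output

console.log(encodedOnce.toFixed(3));  // about 0.5
console.log(encodedTwice.toFixed(3)); // noticeably brighter than intended
```

This is the washed-out look that appears when both an intermediate pass and the final pass write sRGB-encoded values.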

"renderer.gammaOutput" should be removed, it is legacy. I would sort of prefer that renderer.outputEncoding is not used, but (and this is sort of a weird idea) rather we introduce ScreenRenderTarget, which is a render target with a null renderTarget. We could set properties on a screenRenderTarget and pass it into the renderer and it would take those properties and apply them but then write to the null render target (the screen). It would actually unify a few things and make the code simpler, at the cost of introducing this idea of a virtual render target.

We could use this for outputEncoding, and possibly other parameters such as proxying "WebGLRenderingContext.drawingBufferWidth" and "WebGLRenderingContext.drawingBufferHeight", and "depth", "stencil", and maybe even switch between RGB and RGBA based on whether "alpha" was selected. The format should probably always be byte per element, as I do not believe WebGL exposes true HDR buffers (does OpenGL do that yet?)

Assuming there's no post-processing, are there other situations where I would want linear output?

GPGPU would be an example. Another example could be if you are rendering to a render target that will be used as a material texture.

Or why would sRGB be debatable as the default output format, other than backwards-compatibility?

Yes, maintaining backwards-compatibility would be a compelling reason.

renderer.gammaOutput should be removed, it is legacy.

Happy to move it to Three.Legacy.js. What should it look like?

gammaInput and gammaOutput have been removed via #18108 and #18127.
