Test-infra: Extract test step periodically failing

What happened:

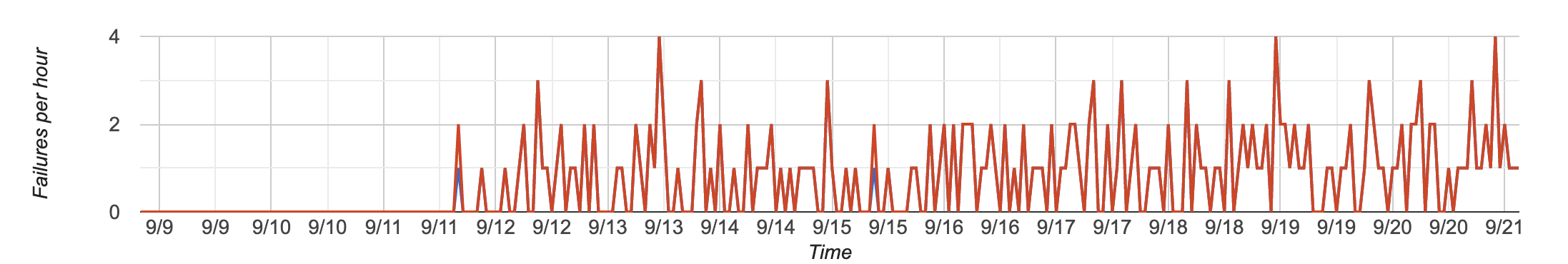

The Extract test step has been periodically failing across pipelines since 2020-09-11.

The extract step downloads binaries from https://storage.googleapis.com/kubernetes-release-dev/ci/fast/v1.xxxxxx using curl with a retry of 3. The download makes progress and then fails 3 times with:

curl: (56) OpenSSL SSL_read: SSL_ERROR_SYSCALL, errno 104
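For anyone decoding that line: curl exit code 56 is its "failure receiving network data" error, and the trailing number is the OS-level errno. A minimal sketch of pulling both out of the message; `parse_curl_error` is a hypothetical helper for illustration and not part of test-infra.

```python
import errno
import re

# Matches lines such as:
#   curl: (56) OpenSSL SSL_read: SSL_ERROR_SYSCALL, errno 104
CURL_ERROR = re.compile(r"curl: \((\d+)\).*errno (\d+)")


def parse_curl_error(line):
    """Return (curl_exit_code, os_errno) from a curl error line, or None."""
    match = CURL_ERROR.search(line)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


exit_code, os_errno = parse_curl_error(
    "curl: (56) OpenSSL SSL_read: SSL_ERROR_SYSCALL, errno 104"
)
# errno numbers are platform-specific; on Linux, 104 maps to ECONNRESET.
print(exit_code, errno.errorcode.get(os_errno, "unknown"))
```

In other words, the connection is being reset by the remote side (or something in between) partway through the transfer.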

What you expected to happen:

I expect the download to succeed.

How to reproduce it (as minimally and precisely as possible):

Still flaking as of opening this.

Please provide links to example occurrences, if any:

Timeline of aggregated failures.

The following boards run this test (still more to add - will update):

- Conformance - GCE - master

- gce-cos-master-alpha-features

- gce-cos-master-default

- gce-cos-master-reboot

- gce-cos-master-scalability-100

- gce-ubuntu-master-containerd

- gci-gce-ingress

- gce-cos-master-slow

- gce-cos-1.19-scalability-100

- gce-cos-k8sbeta-alphafeatures

Anything else we need to know?:

Does not appear to be flaking on the Windows pipelines.

Related to https://github.com/kubernetes/kubernetes/issues/95011

/cc @thejoycekung @RobertKielty @hasheddan @jeremyrickard

All 10 comments

This list of flakes is just from the release-master-blocking board for the last two weeks - I haven't had the time to reference others yet. But it gives a good idea of how much the job has been flaking.

gci-gce-alpha-features (6 flakes in the past 2 weeks):

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-alpha-features/1310522738569383936

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-alpha-features/1310262018925662208

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-alpha-features/1310099448658923520

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-alpha-features/1307267968287117312

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-alpha-features/1307221664366333952

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-alpha-features/1306502821318758400

gci-gce-ingress (9 flakes in the past 2 weeks):

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-ingress/1309651248160444416

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-ingress/1309503143930761216

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-ingress/1308705510098210816

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-ingress/1308058933184696320

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-ingress/1307937376483414016

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-ingress/1307797202462052352

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-ingress/1307635134706487296

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-ingress/1305356577426903042

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-ingress/1305303729947283456

gci-gce-reboot (18 flakes in the past 2 weeks):

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1305310020526673920

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1305524182418722817

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1305714872763289600

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1305707072419008512

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1306095291643990017

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1307369135801372672

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1307408140798529536

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1307556368672100352

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1307657784258465792

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1307791667935318016

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1307862886349017088

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1308474736468037632

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1308601575467388928

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1308782519339978752

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1309488546809122816

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1310217476864217088

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1310063965493006336

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1310526260564201472

gci-gce-scalability (8 flakes in the past 2 weeks):

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-scalability/1309778839328526336

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-scalability/1309442743126200320

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-scalability/1308759367570427904

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-scalability/1308697961852571648

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-scalability/1308208037835575296

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-scalability/1307818090645426176

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-scalability/1306286803816288256

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-scalability/1306249810809982976

ubuntu-gce-containerd:

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-ubuntu-gce-containerd/1310007341298487296

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-ubuntu-gce-containerd/1309794192108556288

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-ubuntu-gce-containerd/1307541268275924992

gce-conformance-latest:

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-gce-conformance-latest/1306898430328573952

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-gce-conformance-latest/1310268563860230144

- several others that TestGrid labeled as "flakes" but have commit unknown

@eddiezane @thejoycekung thanks for the detailed investigation here! I noticed that a number of successful runs also hit this issue, then retry and do not hit it again. An important thing to note here is that I don't think curl is retrying these ECONNRESET errors, so we are not getting the 3 retries specified by the flag in get-kube.sh. As an alternative to setting --retry-all-errors in get-kube.sh, I opened #19394 to bump the number of retries in extract_k8s.go. Long-term I think we should move away from using get-kube.sh as mentioned in this TODO :+1:
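To make the distinction concrete: curl's `--retry` only retries errors it considers transient, whereas an outer loop around the whole command retries on any failure. Below is a minimal sketch of such an outer loop, written in Python rather than the Go of extract_k8s.go. `run_with_retries` and its parameters are hypothetical and are not the actual test-infra code.

```python
import subprocess
import sys
import time


def run_with_retries(cmd, attempts=5, delay_seconds=0.0):
    """Run cmd until it exits 0 or the attempts are exhausted.

    Returns the 1-based number of the attempt that succeeded. If every
    attempt fails, re-raises the CalledProcessError from the last one.
    Any non-zero exit code triggers a retry, whatever the cause.
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            subprocess.run(cmd, check=True)
            return attempt
        except subprocess.CalledProcessError as err:
            last_error = err
            if attempt < attempts:
                time.sleep(delay_seconds)
    raise last_error
```

With a wrapper like this, a connection reset that curl itself gives up on would still be retried, at the cost of restarting the download from the beginning on each attempt.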

#19394 was merged as test-infra commit 82b41a145 yesterday, but it looks like Extract is still having problems last night/today. Interestingly, even though we bumped up the number of retries in extract_k8s.go, it still looks like we are only retrying 3 times (searching for the error message curl: (56) OpenSSL SSL_read: SSL_ERROR_SYSCALL, errno 104 returns only 3 results).
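The "search the build log" check can be scripted roughly as follows. This is only a sketch: `count_failed_attempts` is a hypothetical helper, and it assumes each failed attempt logs the error line exactly once.

```python
ERROR_LINE = "curl: (56) OpenSSL SSL_read: SSL_ERROR_SYSCALL, errno 104"


def count_failed_attempts(build_log):
    """Count the log lines that contain the curl connection-reset error."""
    return sum(1 for line in build_log.splitlines() if ERROR_LINE in line)
```

A count of 3 on a job that should retry 5 times suggests the new retry setting is not in effect for that run.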

Prow links for reference:

@thejoycekung the retry bump will go into effect when the image autobumper PR is merged today: https://github.com/kubernetes/test-infra/pull/19396 :)

FWIW 2020-09-11 is when the nodepool was upgraded from v1.14 to v1.15 (ref: https://github.com/kubernetes/k8s.io/issues/1120#issuecomment-691193351) so I wouldn't be surprised if that's the triggering event.

What specifically changed and why it's causing flakiness I don't expect I have time to figure out.

Expect at least the control plane to be migrated to v1.16 in the next couple of weeks (https://github.com/kubernetes/k8s.io/issues/1284). Whether this will fix things or make them worse, again, no idea.

Agree with @hasheddan, I would move away from curl and toward gsutil

So these jobs all use the new image gcr.io/k8s-testimages/kubekins-e2e:v20200929-82b41a1-master and looking through their build logs, it still looks like the curl is only retried 3 times. (Am I still missing something?)

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-scalability/1311467328172462080

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-scalability/1311398375270125568

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gce-device-plugin-gpu/1311599450409406464

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-alpha-features/1311549118560079872

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-ingress/1311502308328083456

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/logs/ci-kubernetes-e2e-gci-gce-reboot/1311487966039773184

@thejoycekung I'm not sure about the 3 vs 5 retries but chasing down the error reported by curl

curl: (56) OpenSSL SSL_read: SSL_ERROR_SYSCALL, errno 104

I found this issue reported on curl https://github.com/curl/curl/issues/4409 which led to this mitigation fix https://github.com/curl/curl/issues/4624

I believe upgrading curl to the 7.72 release (https://curl.haxx.se/changes.html#7_72_0), which includes "openssl: Revert to less sensitivity for SYSCALL errors", might fix our problem.

It would be good to get a second opinion on whether or not the above issue and mitigation are relevant to our incidents reported here.

@kubernetes/ci-signal @hasheddan can you please review and let me know if you think that the above issue is relevant?

If we agree that the above is relevant, the next steps would be to find out which curl version we are using and, if it is older than 7.72, try upgrading to 7.72 and see if that fixes the underlying issue.
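A rough sketch of that version check, assuming the first line of `curl --version` starts with `curl <major>.<minor>.<patch>`. The helper names are hypothetical, and 7.72.0 is used as the threshold only because that is the release named above.

```python
import re

# Release that includes the "less sensitivity for SYSCALL errors" change.
FIXED_IN = (7, 72, 0)


def parse_curl_version(version_output):
    """Extract (major, minor, patch) from the output of `curl --version`."""
    match = re.match(r"curl (\d+)\.(\d+)\.(\d+)", version_output)
    if match is None:
        raise ValueError("unrecognized curl --version output")
    return tuple(int(part) for part in match.groups())


def is_down_rev(version_output):
    """Return True if the reported curl version is older than FIXED_IN."""
    return parse_curl_version(version_output) < FIXED_IN
```

Feeding it the output of `curl --version` from inside the kubekins-e2e image would show whether an upgrade is even needed.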

@thejoycekung good catch! I had missed a check in extract_k8s.go, should be updated in #19451. Apologies for the miss there!

@RobertKielty that might be related to what we are seeing, but I think we always get the latest stable versions of dependencies like curl. As mentioned by @spiffxp, it would be good to just move towards using gsutil.

@RobertKielty I think the issue is relevant - we're still seeing lots of flakes with it. Bumping it up to 5 retries is a patch, but we haven't seen it properly in action yet (so it might not even work).

@hasheddan Thank you for fixing that - we should've caught it while reviewing 😅 How much work is involved with moving to gsutil?

k8s.io#1284 mentions that the bump to v1.16 will happen sometime after 2020-10-06, which is ... sometime after tomorrow.

Just to summarize possible fixes/tasks we've collected so far:

- Start moving to gsutil now

- Keep monitoring the Extract job once the bump to 5 retries is merged as part of the image bump

- Investigate the curl version and make sure we're using 7.72 (which is the latest stable version - so we might already be?)

- @eddiezane mentioned working on an exponential back off to that retry (but not sure if he's had the time - or what it would look like)
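For the last item, one possible shape for an exponential back off, purely as an illustration and not @eddiezane's design: the wait doubles after each failed attempt, up to a cap. The function name and default values are assumptions.

```python
def backoff_delays(retries, base_seconds=1.0, factor=2.0, max_seconds=60.0):
    """Yield the wait, in seconds, before each retry.

    The delay starts at base_seconds, is multiplied by factor after every
    retry, and never exceeds max_seconds.
    """
    for retry in range(retries):
        yield min(base_seconds * factor ** retry, max_seconds)
```

With the defaults, 5 retries would wait 1, 2, 4, 8 and 16 seconds, which gives a briefly unavailable endpoint more time to recover than retrying immediately.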

/cc @droslean @alejandrox1
