Test-infra: [prow] Job History for pull-kubernetes-e2e-gce has incorrect data

What happened:

Loaded the following URL in Chrome:

https://prow.k8s.io/job-history/gs/kubernetes-jenkins/pr-logs/directory/pull-kubernetes-e2e-gce

What you expected to happen:

I expected the page to contain job run results, but it is mostly made up of yellow rows with the following data:

Build Id : blank

Started : 0001-01-01 00:00:00 +0000 UTC

Duration : 0s

Result : blank

How to reproduce it (as minimally and precisely as possible):

Load the link in a browser, then page through Older Runs and Newer Runs.

Please provide links to example occurrences, if any:

Anything else we need to know?:

The page can take up to 40s to load.

The bottom of the page currently reports "Showing 20/40879 results".


I got there via:

- https://prow.k8s.io/view/gcs/kubernetes-jenkins/pr-logs/directory/pull-kubernetes-e2e-gce/1300326047228628993

- Click "Job History"

and I am seeing the same thing.

This job was migrated to k8s-infra-prow-build by #18916, which merged 2020-08-19 16:40 PT. I would like to understand whether this is related at all.

A known good to compare against:

- https://prow.k8s.io/job-history/gs/kubernetes-jenkins/pr-logs/directory/pull-kubernetes-e2e-gce-ubuntu-containerd

- migrated to k8s-infra-prow-build via https://github.com/kubernetes/test-infra/pull/18815 which merged 2020-08-12 20:20 PT

- Another dimension to check: this job remains always_run and merge-blocking for all branches, while pull-kubernetes-e2e-gce doesn't always run for the main branch and release-1.19.

I think the next step here is to track down the source data in the bucket that job_history reads and see why it is parsed oddly.

I'll see if I can find the data and report back.

/help

It might be worth looking at the output of gsutil ls gs://kubernetes-jenkins/pr-logs/directory/pull-kubernetes-e2e/ and comparing some of the later entries against what is visible in prow's job history view.

Is this bad data? Is this a bug in prow / spyglass?

@spiffxp:

This request has been marked as needing help from a contributor.

Please ensure the request meets the requirements listed here.

If this request no longer meets these requirements, the label can be removed by commenting with the /remove-help command.


@snowmanstark, who is new to the project and keen to contribute, pinged me about working on this.

Building on the signposting offered by @spiffxp (many thanks, much appreciated), we formulated the following plan:

The problem lies either in the Prow job artifact data we were inspecting with gsutil and/or in a bug in Spyglass, which renders that data as this HTML page.

The number of spurious rows between real job entries _varies_.

So the next step is to look at a normal job entry and count the spurious rows above or below it. Let's say we see 6 empty rows. Then look at the data for that job and see whether we can find 6 "somethings" that cause the 6 spurious rows.

It might get a little more complicated than that (we could decide to change the job configuration so it no longer produces data the way it does), and we may revise the plan as we go through the data. But this is a reasonable place to start troubleshooting.

@snowmanstark has offered to dig through the data and I will provide support as needed.
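The counting step of this plan is mechanical enough to sketch. The Go function below is hypothetical (not part of Prow): it takes the Build Id column as it appears on the page, top to bottom, treats an empty ID as a spurious row, and reports how many spurious rows sit directly above each real entry. The row values are placeholders, not real builds.

```go
package main

import "fmt"

// blankRunsBefore returns, for each non-blank build ID, how many blank rows
// immediately precede it. Rows are assumed to be in page order (top to
// bottom); a blank ID marks a spurious (yellow) row.
func blankRunsBefore(ids []string) map[string]int {
	runs := make(map[string]int)
	blank := 0
	for _, id := range ids {
		if id == "" {
			blank++
			continue
		}
		runs[id] = blank
		blank = 0
	}
	return runs
}

func main() {
	// Hypothetical page contents; the IDs are placeholders, not real builds.
	rows := []string{"", "", "build-a", "", "", "", "", "", "", "build-b", "build-c"}
	runs := blankRunsBefore(rows)
	for _, id := range []string{"build-a", "build-b", "build-c"} {
		fmt.Printf("%s: %d blank rows above\n", id, runs[id])
	}
}
```

Each count can then be compared against what is stored for that build (or its neighbours) in the bucket.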

@RobertKielty I dug through the Prow job data with gsutil and found that, as of 10/30/2020, 302 builds had no build-log.txt.

I couldn't find a connection between that and the varying row counts of the spurious job entries.

In the meantime, I will look at how Spyglass renders the Job History page and for other reasons behind the varying row counts of the spurious job entries.
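A check like the build-log.txt count above can be sketched as a small Go function over a saved object listing. The function name and the input layout (one full object path per entry, top-level objects of each build directory only, not the artifacts/ subtree) are assumptions for illustration; the thread does not say how the 302 builds were counted.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// buildsMissingLog groups object paths by their parent directory and returns
// the directories that contain no build-log.txt. It assumes only the
// top-level objects of each build directory are listed.
func buildsMissingLog(objects []string) []string {
	hasLog := make(map[string]bool)
	for _, obj := range objects {
		i := strings.LastIndex(obj, "/")
		if i < 0 {
			continue // not a path inside a directory
		}
		dir, base := obj[:i], obj[i+1:]
		if base == "build-log.txt" {
			hasLog[dir] = true
		} else if _, seen := hasLog[dir]; !seen {
			hasLog[dir] = false
		}
	}
	var missing []string
	for dir, ok := range hasLog {
		if !ok {
			missing = append(missing, dir)
		}
	}
	sort.Strings(missing)
	return missing
}

func main() {
	// Hypothetical listing; PR numbers and build IDs are placeholders.
	objects := []string{
		"gs://kubernetes-jenkins/pr-logs/pull/1/pull-kubernetes-e2e-gce/100/build-log.txt",
		"gs://kubernetes-jenkins/pr-logs/pull/1/pull-kubernetes-e2e-gce/100/finished.json",
		"gs://kubernetes-jenkins/pr-logs/pull/2/pull-kubernetes-e2e-gce/200/started.json",
	}
	missing := buildsMissingLog(objects)
	fmt.Println(len(missing), missing)
}
```

Splitting on the last "/" (instead of path.Dir) keeps the gs:// scheme intact, since path.Clean would collapse the double slash.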

So the empty records on the Job History page seem to have gotten worse:

$ gsutil ls gs://kubernetes-jenkins/pr-logs/directory/pull-kubernetes-e2e/

CommandException: One or more URLs matched no objects.

Given the absence of the pull-kubernetes-e2e directory in the bucket, I think we can call this a new Spyglass defect, since it fails to report that the path is missing from the bucket?

I will reach out to @spiffxp on Slack later today to review.

> So the empty records on the Job History page seem to have gotten worse

ref: https://github.com/kubernetes/test-infra/issues/19721

> Given the absence of the pull-kubernetes-e2e dir in the bucket

Typo in your path? The directory we care about is pull-kubernetes-e2e-gce.

$ gsutil ls gs://kubernetes-jenkins/pr-logs/directory/pull-kubernetes-e2e-gce | wc -l

953

This is going to get harder to troubleshoot soon: the kubernetes-jenkins bucket has a 90-day lifecycle.

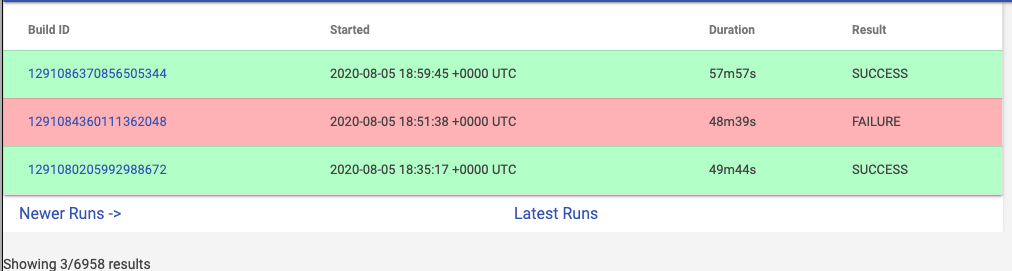

https://github.com/kubernetes/test-infra/issues/19034#issuecomment-684130355 says this job was migrated on 2020-08-19, and the known good to compare it against was migrated on 2020-08-12. Currently the earliest job result in the bucket is from 2020-08-05 (see below), which gives us a week before we start losing known goods, if this was the change that broke things.

$ echo $(gsutil cat $(gsutil ls gs://kubernetes-jenkins/pr-logs/directory/pull-kubernetes-e2e-gce 2>/dev/null | head -n1))

gs://kubernetes-jenkins/pr-logs/pull/93162/pull-kubernetes-e2e-gce/1291080206013960192

# => https://prow.k8s.io/view/gs/kubernetes-jenkins/pr-logs/pull/93162/pull-kubernetes-e2e-gce/1291080206013960192

# => Started | 2020-08-05 18:35:11 +0000 UTC
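As the gsutil cat output shows, each entry under pr-logs/directory/pull-kubernetes-e2e-gce is a small object whose content points at the real build location, which in turn maps onto a prow.k8s.io/view/gs/ URL. A minimal Go sketch of that mapping, assuming every pointer has the same shape as the one above (the function and type names are hypothetical, not Prow's):

```go
package main

import (
	"fmt"
	"strings"
)

// buildRef holds the components of a PR build path shaped like the one in the
// output above: gs://<bucket>/pr-logs/pull/<pr>/<job>/<build-id>.
type buildRef struct {
	Bucket, PR, Job, BuildID string
}

// parseBuildPath splits a PR build path. It returns ok=false for anything
// that does not match that layout, including an empty pointer.
func parseBuildPath(p string) (ref buildRef, ok bool) {
	p = strings.TrimSpace(p)
	if !strings.HasPrefix(p, "gs://") {
		return buildRef{}, false
	}
	parts := strings.Split(strings.TrimPrefix(p, "gs://"), "/")
	if len(parts) != 6 || parts[1] != "pr-logs" || parts[2] != "pull" {
		return buildRef{}, false
	}
	return buildRef{Bucket: parts[0], PR: parts[3], Job: parts[4], BuildID: parts[5]}, true
}

// viewURL mirrors the gs:// -> https://prow.k8s.io/view/gs/ mapping shown above.
func viewURL(p string) string {
	return "https://prow.k8s.io/view/gs/" + strings.TrimPrefix(strings.TrimSpace(p), "gs://")
}

func main() {
	// Pointer content from the output above; the trailing newline is added
	// only to show that surrounding whitespace is trimmed.
	ptr := "gs://kubernetes-jenkins/pr-logs/pull/93162/pull-kubernetes-e2e-gce/1291080206013960192\n"
	ref, ok := parseBuildPath(ptr)
	fmt.Println(ok, ref.PR, ref.Job, ref.BuildID)
	fmt.Println(viewURL(ptr))
}
```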

Or maybe we've already lost known goods: https://prow.k8s.io/job-history/gs/kubernetes-jenkins/pr-logs/directory/pull-kubernetes-e2e-gce?buildId=1291086874634358784 is as far back as I can go.

I noticed that if you add a trailing / to the URL, the page seems to display the data as expected:

https://prow.k8s.io/job-history/gs/kubernetes-jenkins/pr-logs/directory/pull-kubernetes-e2e-gce/

https://prow.k8s.io/job-history/gs/kubernetes-jenkins/pr-logs/directory/pull-kubernetes-e2e-gce/?buildId=1291086874634358784
