Terraform-provider-google: google_project_iam_member is slow when upgrading >= v3.14

When upgrading the google provider I noticed one of the workflow I am working one takes a lot longer to execute.

Not even the actual deployment phase, just the terraform plan.

The "refreshing state" step took ~10 minutes before and now it takes 3 hours.

I probed the different versions available and found out that this issue started for v3.14

I have reduced this issue to a single component google_project_iam_member, to make things easier to talk about and reproduce.

This is a list of ~100 members to deploy in iam.

With v3.13, terraform plan takes <1 min to execute

with v3.14 and higher, terraform plan takes 8 minutes to execute.

Community Note

- Please vote on this issue by adding a 👍 reaction to the original issue to help the community and maintainers prioritize this request.

- Please do not leave _+1_ or _me too_ comments, they generate extra noise for issue followers and do not help prioritize the request.

- If you are interested in working on this issue or have submitted a pull request, please leave a comment.

- If an issue is assigned to the

modular-magicianuser, it is either in the process of being autogenerated, or is planned to be autogenerated soon. If an issue is assigned to a user, that user is claiming responsibility for the issue. If an issue is assigned tohashibot, a community member has claimed the issue already.

Terraform Version

Terraform v0.12.28

- provider.google v3.38.0

Affected Resource(s)

- google_project_iam_member

Terraform Configuration Files

resource "google_project_iam_member" "project_members" {

count = "${length(var.members)}"

project = "${var.project}"

role = "roles/browser"

member = "${element(var.members, count.index)}"

}

Debug Output

https://gist.github.com/FrancoisPoinsot/07f597e7d3e5aef3705b31114e4b0b23

Expected Behavior

terraform plan would take <1 minute to execute

Actual Behavior

terraform plan takes 8 minutes to execute

Steps to Reproduce

- create a var

membersof ~100 members - actually deploy them

- execute

terraform plan

All 18 comments

@FrancoisPoinsot I would think this might be caused by api, as its responses did not end sooner. Can you repro the issue in different time?

The PRs that might be related to this issue are #3196 and #3410, but I can't see the reasons on code side that cause the numerous calls as you experienced.

What do you mean by "in different time"?

Do you constantly see this issue with version > v3.14? I mean you can repro the issue?

Yes, I have been able to reproduce this issue for the past week.

and I can reproduce it now

any news?

I ran a small test:

- reduced the member list in the state to 12

- reduced the member list in the variable to 0

- run

time terraform plan - remove one member from the state

repeated the last 2 step until I can plot a graph

repeated the whole process with v3.13 and v3.14.

Ok I did not plot all the point of 3.14 because I just did not want to wait the whole morning in front of my screen.

Here are the results:

member length in state v3.13 v3.14

0 1.21 1.234

1 13.499 13.707

2 13.985 19.492

3 14.53

4 14.93

5 15.142 36.684

6 15.519

7 15.999

8 16.356 54.528

9 16.306

10 17.097 1:06.31

11 17.644 1:11.65

12 18.243 1:16.88

Reminder, my test project is still this only resource:

resource "google_project_iam_member" "project_members" {

count = "${length(var.members)}"

project = "${var.project}"

role = "roles/browser"

member = "${element(var.members, count.index)}"

}

It looks to me there is an issue with how v3.14 (and higher) scales with the state size.

Test a bit again, with only 3 members.

Looking at the trace log I see parts that takes most of the time is waitings such as

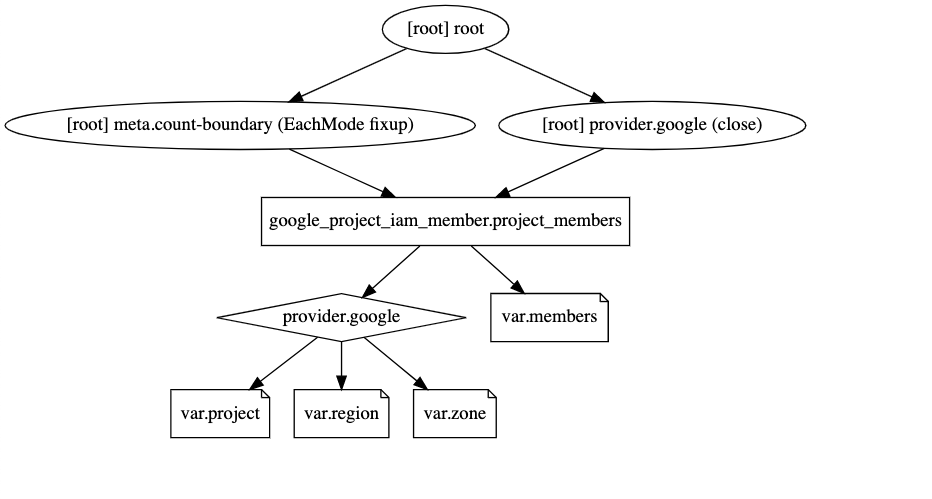

020/10/22 14:14:04 [TRACE] dag/walk: vertex "provider.google (close)" is waiting for "google_project_iam_member.project_members"

2020/10/22 14:14:04 [TRACE] dag/walk: vertex "root" is waiting for "google_project_iam_member.project_members[1]"

2020/10/22 14:14:09 [TRACE] dag/walk: vertex "provider.google (close)" is waiting for "google_project_iam_member.project_members"

2020/10/22 14:14:09 [TRACE] dag/walk: vertex "root" is waiting for "google_project_iam_member.project_members[1]"

This one above is the only waiting log done when running v3.13

When running v3.14 I have those:

2020/10/22 14:06:06 [TRACE] dag/walk: vertex "provider.google (close)" is waiting for "google_project_iam_member.project_members"

2020/10/22 14:06:06 [TRACE] dag/walk: vertex "root" is waiting for "google_project_iam_member.project_members[2]"

2020/10/22 14:06:11 [TRACE] dag/walk: vertex "provider.google (close)" is waiting for "google_project_iam_member.project_members"

2020/10/22 14:06:11 [TRACE] dag/walk: vertex "root" is waiting for "google_project_iam_member.project_members[2]"

[...] some stuff being done

2020/10/22 14:06:16 [TRACE] dag/walk: vertex "provider.google (close)" is waiting for "google_project_iam_member.project_members"

2020/10/22 14:06:16 [TRACE] dag/walk: vertex "root" is waiting for "google_project_iam_member.project_members[2]"

[...] some stuff being done

2020/10/22 14:06:21 [TRACE] dag/walk: vertex "provider.google (close)" is waiting for "google_project_iam_member.project_members"

2020/10/22 14:06:24 [TRACE] dag/walk: vertex "root" is waiting for "google_project_iam_member.project_members[1]"

full log for 3.14: https://gist.github.com/FrancoisPoinsot/3ebf48aac83a2472bdf6b641d583cb8a

So yeah, there is more waiting for the same setup

I see these waitings are wrapped by request and response object of /v1/projects/ricardo-dev-ch:getIamPolicy call.

So I guess this part is the waiting for the HTTP response.

in 3.13, the request take ~10s to run the first time and then is instantaneously answered for all following calls. As if cached.

in 3.14, the request always takes ~10s to run.

This look like an issue with the caching of the getIamPolicy request.

Thank you for that - I promise to look into this in more detail today. My preliminary investigation didn't find a culprit - I suspected it might have been an upstream issue that would resolve itself, but it's clear that it's not. I will keep looking.

I suspect https://github.com/hashicorp/terraform-provider-google/pull/5902 may have introduced this performance regression and will verify tomorrow.

To be honest, I get the feeling I am abusing google_project_iam_member.

LIke, I am not supposed to use it to handle ~100 users.

Instead of using a for or for_each inside google_project_iam_member, I can achieve a similar result using google_project_iam_binding setting the original set to the field members.

This works around the issue of this thread, but also seems to be the intended way.

meh, scratch that.

I tried to refactor my project using google_project_iam_binding.

I mean it does makes it faster.

There is still a huge difference between v3.13 (28 sec) and >=v3.14 (4min 39 sec).

But at least it is better than the hours-long deployment I originally had.

Hey - I'm sorry, but I have finally gotten around to trying to reproduce this and it just does not reproduce for me on 3.14.0. I am able to apply 5 iam members in 11 seconds using your config, the same as in 3.13.0.

There must be something else about your config that is causing this to happen - can you help me debug a little more?

Big question first: Does this happen to you on a new project?

Smaller question: Does your config include many other resources that might be batched, e.g. google_project_service resources?

The project is not new. There are ~100 users and ~50 service accounts

My test project, for which you see the number in the previous posts, only contains a single resource.

For clarity:

The GCP project is not new.

The terraform project, "test project", is new.

To give more context: I was migrating from a terraform project 0.11 to a new one 0.12.

I am starting with an empty statefile and run import commands to load only the resources I moved.

Okay, so it's neither of those. I'll have to keep asking questions to figure out how I can reproduce this.

Here are a few:

What version of terraform are you using?

What kind of iam members are you using? user:? domain:? etc.

Can you reproduce this on a fresh GCP project?

terraform 0.12.28

My iam members are user: and group:

will try to reproduce on a fresh GCP project later.

Most helpful comment

I suspect https://github.com/hashicorp/terraform-provider-google/pull/5902 may have introduced this performance regression and will verify tomorrow.