Terraform-provider-azurerm: azurerm_recovery_services_protected_vm returns lower case values of recovery_vault_name and resource_group_name

Community Note

- Please vote on this issue by adding a 👍 reaction to the original issue to help the community and maintainers prioritize this request

- Please do not leave "+1" or "me too" comments, they generate extra noise for issue followers and do not help prioritize the request

- If you are interested in working on this issue or have submitted a pull request, please leave a comment

Terraform (and AzureRM Provider) Version

terraform -version

Terraform v0.12.9

+ provider.azurerm v1.36.1

Affected Resource(s)

- azurerm_recovery_services_protection_policy_vm

- azurerm_recovery_services_protected_vm

Terraform Configuration Files

resource "azurerm_recovery_services_protection_policy_vm" "this" {

recovery_vault_name = "RSV-1"

resource_group_name = "RG-1"

...

...

}

resource "azurerm_recovery_services_protected_vm" "this" {

recovery_vault_name = "RSV-1"

resource_group_name = "RG-1"

...

...

}

Debug Output

The state file also shows that recovery_vault_name and resource_group_name are stored as lower-case values.

# azurerm_recovery_services_protection_policy_vm.this must be replaced

-/+ resource "azurerm_recovery_services_protection_policy_vm" "this" {

~ id = "/subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourcegroups/rg-1/providers/microsoft.recoveryservices/vaults/rsv-1" -> (known after apply)

name = "Default-Policy"

~ recovery_vault_name = "rsv-1" -> "RSV-1" # forces replacement

~ resource_group_name = "rg-1" -> "RG-1" # forces replacement

~ tags = {} -> (known after apply)

...

}

This also occurs for the azurerm_recovery_services_protected_vm resource.

# azurerm_recovery_services_protected_vm.protect_vm must be replaced

-/+ resource "azurerm_recovery_services_protected_vm" "protect_vm" {

backup_policy_id = "..."

~ id = "..." -> (known after apply)

~ recovery_vault_name = "rsv-1" -> "RSV-1" # forces replacement

~ resource_group_name = "rg-1" -> "RG-1" # forces replacement

source_vm_id = "..."

~ tags = {} -> (known after apply)

}

Expected Behavior

Nothing should change

Actual Behavior

Terraform wants to delete and recreate the policy

Steps to Reproduce

- terraform apply

- terraform apply

All 73 comments

Note this issue also affects the backup_policy_id - terraform tries to modify it (if you change your rg and rsv names to lower(x)) but gets stuck in a "still trying...." loop because it can't.

workaround so you can still use your terraform config to make non-backup changes to your environment:

- comment out the resource "azurerm_recovery_services_protected_vm" "vmname" {} stanza

- terraform state rm azurerm_recovery_services_protected_vm.vmname

- terraform apply

also occurs when using azurerm provider 1.32.1.

Azure ignores the case of names in fields that allow mixed case (e.g. you can't create resources a and A), so why doesn't Terraform?

the workaround, with a comment out, will it not try to delete?

check with 'terraform plan' to be on the safe side, but no, it should not delete the resource, as terraform won't know about it after you remove it from the state.

I did wonder if it would try to modify the source VM resource, but it did not.

it is Friday and I must be getting confused somewhere :-(

if I comment out the backup (recovery) section (I have done all of it), it prompts me to destroy the backup element

this is assuming we are just talking about putting '#' in front of each line of the backup resource

comment out (with #) the resource "azurerm_recovery_services_protected_vm" "vmname" { ... } stanza

Don't forget step 2, which stops terraform caring about the resource you just commented out:

- terraform state rm azurerm_recovery_services_protected_vm.vmname
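For anyone unsure what these steps look like in practice, here is a minimal sketch. The attribute values (`var.…`) are hypothetical placeholders, not anyone's actual configuration, and the commands appear as comments because they are run from a shell rather than placed in the configuration.

```hcl
# Step 1: comment out the whole stanza, every line including the braces.
# (Attribute values below are hypothetical placeholders.)
#
# resource "azurerm_recovery_services_protected_vm" "vmname" {
#   resource_group_name = var.resource_group_name
#   recovery_vault_name = var.rsv_name
#   source_vm_id        = var.vm_id
#   backup_policy_id    = var.backup_policy_id
# }

# Step 2 (shell): make Terraform forget the resource without touching Azure.
#   terraform state rm azurerm_recovery_services_protected_vm.vmname

# Step 3 (shell): confirm the plan no longer mentions the backup resource,
# then apply.
#   terraform plan
#   terraform apply
```

If step 2 is skipped, Terraform still tracks the resource in state, sees that it is gone from the configuration, and proposes to destroy it, which matches the prompt described above.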

I don't know that command.... how is it used (sorry)

https://www.terraform.io/docs/commands/state/rm.html

If you're still not confident after trying in a lab environment with a demo resource and don't have a pressing need for the workaround, then you're probably better off waiting for the fix.

@tombuildsstuff FYI, I've updated the examples to show these resources are affected:

azurerm_recovery_services_protected_vmazurerm_recovery_services_protected_vm

Our work around is to use the lower function so that the resource is not recreated.

For example:

resource "azurerm_recovery_services_protected_vm" "protect_vm" {

resource_group_name = lower(var.resource_group_name)

# lower("RG-1") = "rg-1" => value is the same, therefore no replacement

recovery_vault_name = lower(var.rsv_name)

# lower("RSV-1") = "rsv-1" => value is the same, therefore no replacement

source_vm_id = var.vm_id

backup_policy_id = var.backup_policy_id

}

hi @tombuildsstuff

i am referring to existing issue #1386

would it make sense to have all resource_id becoming case-insensitive ?

Hi @josh-barker,

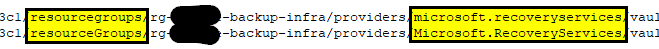

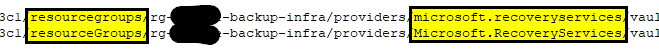

Unfortunately, using the lower function does not solve the problem in my case, as the resourcegroups and microsoft.recoveryservices segments still force an in-place update, as the screenshot illustrates.

Any other ideas? Will a bug fix be provided with 1.37.0 (@tombuildsstuff )? Thx

@stefan-rapp ,

instead of using lower() you can replace those differing API strings manually

backup_policy_id = "${replace(replace(var.backup_policy_id,"resourceGroups","resourcegroups"),"Microsoft.RecoveryServices","microsoft.recoveryservices")}"
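The same idea in Terraform 0.12 expression syntax, as a sketch. It assumes, like the line above, that the policy ID arrives in a variable named `backup_policy_id`; the local name is made up for illustration. It rewrites only the two path segments whose casing differs and leaves the rest of the ID alone.

```hcl
variable "backup_policy_id" {
  type = string
}

locals {
  # Hypothetical local name. Only the two segments that differed in the
  # plan output are rewritten; the rest of the ID is left untouched.
  backup_policy_id_api_casing = replace(
    replace(var.backup_policy_id, "resourceGroups", "resourcegroups"),
    "Microsoft.RecoveryServices",
    "microsoft.recoveryservices"
  )
}
```

The protected VM resource would then use `backup_policy_id = local.backup_policy_id_api_casing`. Like `lower()`, this is a temporary workaround that has to be removed again once the API returns the original casing.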

@ranokarno

would it make sense to have all resource_id becoming case-insensitive ?

Whilst the Azure ARM Specification says they /should/ be case insensitive (technically it says they should be returned in the casing in which users submitted them, but this isn't the case..), in practice Resource IDs can't be treated as case-insensitive, because some ARM APIs and (most) external tooling don't treat them as such. They therefore have to be treated as case-sensitive, else this'd cause other users more obtuse errors.

We can look to work around this within Terraform by checking for both versions (Title and lower case) - however ultimately the API should be returning this in the correct case (or at least all references to this ID should be in the same casing).

Thanks!
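To make the casing mismatch concrete, here is a small sketch using the two ID shapes from the plan output in the issue description, with the subscription ID masked as in the original. The local names are hypothetical. It shows only that the two strings differ as written and match once both are lower-cased; it is not how the provider compares IDs.

```hcl
locals {
  # Casing as a user would typically write it (illustrative value).
  id_as_configured = "/subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/RG-1/providers/Microsoft.RecoveryServices/vaults/RSV-1"

  # Casing as returned by the API in the plan output above.
  id_as_returned = "/subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourcegroups/rg-1/providers/microsoft.recoveryservices/vaults/rsv-1"

  # false: a case-sensitive comparison sees two different strings.
  ids_equal_exact = local.id_as_configured == local.id_as_returned

  # true: the strings differ only in letter case.
  ids_equal_ignore_case = lower(local.id_as_configured) == lower(local.id_as_returned)
}
```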

backup policy id won't be in a var, unless you manually create one. It's returned from

azurerm_recovery_services_protection_policy_POLICY.id.

lower() works fine for everything but backup policy ID, which means it's not a valid workaround (this was the first thing I tried).
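A sketch of why `lower()` on the two name arguments does not cover the policy ID: in a typical configuration the ID is an attribute reference rather than a string you typed. The resource label `this`, the variable names, and the policy settings (borrowed from a configuration posted later in this thread) are illustrative assumptions.

```hcl
variable "resource_group_name" { type = string }
variable "rsv_name" { type = string }
variable "vm_id" { type = string }

resource "azurerm_recovery_services_protection_policy_vm" "this" {
  name                = "Default-Policy"
  resource_group_name = lower(var.resource_group_name)
  recovery_vault_name = lower(var.rsv_name)

  backup {
    frequency = "Daily"
    time      = "00:00"
  }

  retention_daily {
    count = 90
  }
}

resource "azurerm_recovery_services_protected_vm" "this" {
  resource_group_name = lower(var.resource_group_name)
  recovery_vault_name = lower(var.rsv_name)
  source_vm_id        = var.vm_id

  # This value is exported by the policy resource, so lower() on the
  # name arguments above does not change its casing.
  backup_policy_id = azurerm_recovery_services_protection_policy_vm.this.id
}
```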

How responsive are MS to API inconsistency issues like this?

I'd heard they use Terraform too, so this should be burning them as well, but maybe not in the same department as those who can do something about it.

we ended up using lifecycle management on the resource to get round it for the moment - of course an issue if we need to change the resource, but that's the bigger issue for them... not sure of any update on fix

lifecycle {

ignore_changes = ["backup_policy_id"]

}
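For context, a sketch of where that block sits, written in Terraform 0.12 syntax. The variable names are placeholders. As noted above, the cost is that a genuine change to the policy is also ignored until the block is removed.

```hcl
variable "resource_group_name" { type = string }
variable "rsv_name" { type = string }
variable "vm_id" { type = string }
variable "backup_policy_id" { type = string }

resource "azurerm_recovery_services_protected_vm" "protect_vm" {
  resource_group_name = var.resource_group_name
  recovery_vault_name = var.rsv_name
  source_vm_id        = var.vm_id
  backup_policy_id    = var.backup_policy_id

  lifecycle {
    # Terraform 0.12 accepts bare attribute names here; the quoted form
    # shown above is the older style.
    ignore_changes = [backup_policy_id]
  }
}
```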

@tombuildsstuff

The Azure Recovery Services vault has a "Soft Delete" feature for Azure Virtual Machines. It is enabled by default when creating a Recovery Services vault. It would be helpful if you could include this feature in the "azurerm_recovery_services_vault" resource, because once we have added VMs for protection in the vault, it cannot be disabled unless all the backup data is deleted.

So if we try to remove a VM from backup using Terraform by commenting out the "azurerm_recovery_services_protected_vm" resource, the operation fails with the error below, as soft delete is enabled on the vault.

azurerm_recovery_services_protected_vm.VM (destroy): 1 error occurred:

* azurerm_recovery_services_protected_vm.VM: Error waiting for the Recovery Service Protected VM "VM;iaasvmcontainerv2;XXXX;VM" to be false (Resource Group "XXXX") to provision: timeout while waiting for state to become 'NotFound' (last state: 'Found', timeout: 30m0s)

@AbhishekB15 as far as I know there's no flag in the SDK/API to do so - we've raised it with the Recovery Services Team, however since there's no setting in the API to toggle Soft Delete at the moment I don't believe there's much we can do to work around this unfortunately.

Sorry for the delay in response.

We are reverting the change that converted names to lower case, and I will update the fix timelines soon. (I didn't go through all the discussion above, apologies.) In the meantime, do we have a workaround, such as converting everything to lower case?

I will also comment on the soft-delete configuration capabilities soon but now we are focusing on reverting back the translation to lower case changes.

@tombuildsstuff , @WascawyWabbit :

Just went through all comments and realized that the lower case conversion workaround is not working for PolicyID.

" lower() works fine for everything but backup policy ID, which means it's not a valid workaround (this was the first thing I tried)"

Can you please explain more on this?

@pvrk, There is no workaround possible as we case sensitively parse the resource ID, and treat all those properties in a case sensitive way. In a future release we could change this and fix users but it would only fix it for those who upgrade, while reverting the change on the API side fixes all existing terraform versions & doesn't require users to change their TF into a new casing.

@katbyte : We are looking at reverting changes so that you need not upgrade. I was trying to see if there is a workaround until we deliver the fix to you.

@pvrk: do you plan to release a new (minor) version including this fix soon? We are facing this problem in several deployments and we would like to know if you have some time estimation in order to decide if we can wait until a new version is released, or if we will need to do some workaround such as fix the version of the azure provider to 1.35.0, which is the previous one we were using and we know that is not affected by this problem.

Unfortunately, this update was made across versions, so I am not sure the previous one will run successfully. Can you please verify? We are planning to revert the changes so that no version upgrade or downgrade is needed.

@rubenmromero for clarification - @pvrk is talking about applying the fix to the Azure API, rather than fixing Terraform (since this change happened in the API) - so this should be fixed automatically once that's fixed/rolled out

@pvrk cool thanks - sounds good to us 👍

Thanks to both for your quick answers and clarifications. @pvrk : Regarding the change that you are planning to revert, could you give a time estimate for carrying that out?

Hi all,

Currently we are focusing on isolating the changes from the big payload that went and reverting only them. I will get back on the timelines.

Can you please provide an estimate of business impact? This is to justify moving currently planned items to accommodate the work required to revert these changes.

The impact for us is quite high, because we face this problem every time we try to update the Azure resources of any deployed customer, and we have a considerable number of customers whose infrastructure needs to be kept up to date.

Hi all,

I need some business numbers like X customers getting affected with these operations and the frequency of those operations. Otherwise, the fix might get delivered in the monthly train which might take until Nov end. If we want a quicker release, I need to justify this by saying X number of customers are getting affected

@pvrk from our side this affects every Terraform user using Recovery Services / Backups - I'd suggest reaching out to @grayzu (offline) who should be able to help.

Update: We are currently planning to revert the changes and provide the fix to all regions by Nov 18th/19th. As noted above, since this is a revert, there are no changes expected from your side. After Nov 18th/19th, your current templates should work as-is.

Hope this is Ok.

This does raise some questions about MS change management & advance comms processes….

@WascawyWabbit : This is an unintended bug and hence there were no planned notifications. Please don't consider this incident as an example of API update process.

This does raise some questions about MS change management & advance comms processes….

I completely agree

Also, since the topic is in context, what channel do you propose to get notified about any such planned changes? Or when you raise issues? This same GitHub Repo or anything else?

I will also update about soft delete configurability soon.

@pvrk : personally, I'm not expecting to be notified, but some advance comms to partners like Hashicorp would come in handy for them I expect. Bugs happen, but (from comments in this and other threads) the inconsistencies in MS APIs vs documented standards really shouldn't. Anyway that's off topic.

Thanks for putting wheels in motion to revert the breaking change. Please could you update tests so that this sort of thing doesn't creep in again?

@pvrk,

We have the ability to run our test suite against canary where we could catch issues like the casing & soft delete before they go live in public. Currently it runs nightly against public and some folk at msft have access to it already.

As for the best way to notify hashicorp you can email myself and we can continue that conversation there and loop in the appropriate people from hashicorp's side.

@pvrk Because of the default soft delete option for Azure Recovery Services vaults, we are facing issues while deleting backups. The deletion operation runs for 30 minutes and then fails. Because of this issue we are unable to decommission a VM from Terraform, as the backups won't get deleted.

Could you please also add this soft delete feature to the backup resource block so that we can perform the deletions smoothly?

We are facing the same problem. Combined with the problem reported in this issue, the consequences are even more painful: when Terraform tries to do the update-in-place on the protected_vm resource triggered by the conversion to lower case, the operation eventually times out, because the protected_vm resource cannot properly handle the newly released 'soft delete' feature...

Regarding the breakage caused by the 'soft delete' feature when disabling Azure Backup on VMs and decommissioning recovery services vaults from Terraform, the best answer we have obtained from Azure support after one month is this one:

As I mentioned previously, the solution provided worldwide to disable the soft delete feature is only for new vaults, and it will only be possible to deactivate the soft delete feature for new vaults that do not contain protected items.

So, for the new vaults:

Prerequisites for disabling soft delete

- Enabling or disabling soft delete for vaults (without protected items) can only be done in the Azure portal. This applies to:

  - Newly created vaults that do not contain protected items

  - Existing vaults whose protected items have been deleted and expired (beyond the fixed 14-day retention period)

- If the soft delete feature is disabled for the vault, you can re-enable it, but you cannot reverse that choice and disable it again if the vault contains protected items.

- You cannot disable soft delete for vaults that contain protected items or items in soft-deleted state. If you need to do so, then follow these steps:

  - Stop protection of deleted data for all protected items.

  - Wait for the 14 days of safety retention to expire.

  - Disable soft delete.

https://docs.microsoft.com/bs-latn-ba/azure/backup/backup-azure-security-feature-cloud

Unfortunately, it will not be possible to have the same solution for the old vaults.

For this scenario the only possibility is to involve the product team and request that they disable the soft delete feature for those vaults. You can provide us with all the affected subscriptions and the vault names and we will disable the feature.

How many vaults are still to be deleted?

But we think it should be possible to disable this feature in the existing vaults in the same way that it was automatically enabled for all vaults (existing and new ones) without any confirmation from the customer side when it was released in September. And of course, this must be able to be done from the API (and provisioning tools that integrate with the API such as Terraform), instead of being only available through the Azure Portal...

Update: With regards to the service deployment to roll back the changes done regarding the case, unfortunately, we have hit a snag and this is currently delayed by 4-5 days. We are already working with high priority on this. Will update with the expected end date of deployment soon.

With regards to soft delete, your feedback is taken into consideration. We are working to see if we can enable this for existing vaults via API as well. Will probably have an update by early next week i.e., Nov 18th/19th.

Ok Kartik, thank you very much for your update.

It is also very important for us to push the option to disable the soft delete feature in existing vaults with backup items stored on them, because this is being a painful break point for us when provisioning/destroying our infrastructure deployments through Terraform.

Update: Since the deployment to fix the case change issue got delayed, we thought it better to sneak in the soft-delete related changes as well. So, the current plan is to provide you fixes for both issues. After this deployment is done, you should be able to

- Run the same templates without any case change.

- Disable/enable soft delete flag for existing/new vaults via REST API.

I hope that with this release, the major issues in automation are taken care of. Will update the thread back when I have the date of delivery.

Update: Current plan is to complete the deployment by Nov 29th. As mentioned earlier, with this deployment both issues (case change, not able to enable/disable soft delete feature on existing vaults) will be fixed.

10 days late and keep going up... :(((

We are also affected by this issue.

Update: We were able to expedite the deployment and complete in all public geos. Now the case change should not occur. Please verify and let us know.

With regards to Soft-delete, I would like one clarification. Please excuse my ignorance of terraform. What is your requirement of updating the flag? Should I share the REST API or .NET SDK where the capability is available?

@pvrk

We were able to expedite the deployment and complete in all public geos. Now the case change should not occur. Please verify and let us know.

Awesome, thanks - we'll run our test suite to confirm and post back when that's completed.

With regards to Soft-delete, I would like one clarification. Please excuse my ignorance of terraform. What is your requirement of updating the flag? Should I share the REST API or .NET SDK where the capability is available?

Once this is available in the Swagger / REST API Specifications repository then we should be able to pick this up via the Generated Go SDK - so a link to the PR adding that would be great if you can :)

Thanks!

@pvrk from our side whilst the test still fails (due to Soft Delete - below) it appears the API is fixed:

recoveryservices.VaultsClient#Delete: Failure responding to request: StatusCode=400 -- Original Error: autorest/azure: Service returned an error. Status=400 Code="ServiceResourceNotEmptyWithContainerDetails" Message="Recovery Services vault cannot be deleted as there are backup items in soft deleted state in the vault. The soft deleted items are permanently deleted after 14 days of delete operation. Please try vault deletion after the backup items are permanently deleted and there is no item in soft deleted state left in the vault.For more information, refer https://aka.ms/SoftDeleteCloudWorkloads. The registered items are : acctestvm\nUnregister all containers from the vault and then retry to delete vault. For instructions, see https://aka.ms/AB-AA4ecq5"

So it looks like once the Soft Delete functionality is available that this should pass 👍

Thanks!

This is the REST API for soft delete:

https://docs.microsoft.com/rest/api/backup/backupresourcevaultconfigs/update

Hope this helps.

@pvrk thanks for that - I've opened this PR on the Rest API Specs Repository which should generate the associated Go SDK required to make this possible

Thanks!

Update: We were able to expedite the deployment and complete in all public geos. Now the case change should not occur. Please verify and let us know.

This doesn't seem to be resolved for me.

A terraform plan shows that for an azurerm_recovery_services_protected_vm resource, the backup_policy_id will be changed, from text containing resourceGroups/rgname/providers/Microsoft.RecoveryServices to a new object with resourcegroups/rgname/providers/microsoft.recoveryservices

Oddly, when I go and look at the object references in my state file, they are already all lowercase in this instance, instead of the expected starting value with upper case which the Plan is implying.

@jeffwmiles : Sorry to hear this. Let me take this observation back to my engg team and investigate.

Others: Any such observations? It seems to be working for some of them at least.

Update: We were able to expedite the deployment and complete in all public geos. Now the case change should not occur. Please verify and let us know.

This doesn't seem to be resolved for me.

A _terraform plan_ shows that for an azurerm_recovery_services_protected_vm resource, the backup_policy_id will be changed, from text containing resourceGroups/rgname/providers/Microsoft.RecoveryServices to a new object with resourcegroups/rgname/providers/microsoft.recoveryservices

Oddly, when I go and look at the object references in my state file, they are already all lowercase in this instance, instead of the expected starting value with upper case which the Plan is implying.

The same happens to us...

I tried it out and now everything is working fine again. No change regarding the backup_policy_id! Thx!

@rubenmromero / @jeffwmiles : Can you please re-check? We have again checked in all regions and there seems to be no case change, as indicated by @stefan-rapp. We even had other customers confirm that the fix now works. If you are still observing this issue, can you please send the x-ms-client-request ID so that we can verify?

I just executed terraform plan on my Recovery Service Vault (_Azure Backup_) and it shows me the following.

Terraform v0.12.7

+ provider.azurerm v1.36.1

No changes. Infrastructure is up-to-date.

This means that Terraform did not detect any differences between your

configuration and real physical resources that exist. As a result, no

actions need to be performed.

So I NO LONGER have the issue where the attribute backup_policy_id needs to be updated, as the screenshot from weeks ago illustrated.

So from my point of view, I do not have issues anymore!

@rubenmromero / @jeffwmiles : Do you still have any workarounds in your code, like lower(), causing trouble?

Hello @stefan-rapp and @pvrk. We have tested again and it is still trying to update in place the 'protected_vm' resources. For instance, an execution attempt done a few minutes ago:

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

~ update in-place

Terraform will perform the following actions:

~ azurerm_recovery_services_protected_vm.engine

backup_policy_id: "/subscriptions/****/resourceGroups/a_travelers/providers/Microsoft.RecoveryServices/vaults/travelers/backupPolicies/daily-policy" => "/subscriptions/****/resourcegroups/a_travelers/providers/microsoft.recoveryservices/vaults/travelers/backupPolicies/daily-policy"

~ azurerm_recovery_services_protected_vm.gateway

backup_policy_id: "/subscriptions/****/resourceGroups/a_travelers/providers/Microsoft.RecoveryServices/vaults/travelers/backupPolicies/daily-policy" => "/subscriptions/****/resourcegroups/a_travelers/providers/microsoft.recoveryservices/vaults/travelers/backupPolicies/daily-policy"

~ azurerm_recovery_services_protected_vm.portal

backup_policy_id: "/subscriptions/****/resourceGroups/a_travelers/providers/Microsoft.RecoveryServices/vaults/travelers/backupPolicies/daily-policy" => "/subscriptions/****/resourcegroups/a_travelers/providers/microsoft.recoveryservices/vaults/travelers/backupPolicies/daily-policy"

But the most unusual aspect is that Terraform keeps trying to update from camel case to lower case, in the same way that it was done before reverting the to-lower-case function... Why does this happen?

@rubenmromero I believe this is an issue with the Terraform Configuration, more than a bug in the API - mind sharing your configuration so we can take a look? Thanks!

Here you have the Terraform configuration that we are using:

+ terraform -v

Terraform v0.11.13

+ provider.azurerm v1.36.1

+ provider.null v2.1.2

We know the 0.11 version is being deprecated, and we have already planned to upgrade to 0.12 in the coming weeks. However, with this same Terraform version the updates were working properly (without requesting the update-in-place pasted above) until the lower-case change was initially made in the API.

Any update regarding soft delete feature?

@rubenmromero I meant a Terraform Configuration/Resource snippets more than the specific version :)

@AbhishekB15 not yet, we're currently waiting for the new version of the Azure SDK containing this to drop

Ups, sorry @tombuildsstuff ...

This is the implementation that we are using:

- One independent folder and state in which we define the network resources for a customer (Virtual Network, subnet, etc) and the Azure Backup vault and associated policy for it:

resource "azurerm_recovery_services_vault" "default" {

name = "${var.customer}"

location = "${azurerm_resource_group.default.location}"

resource_group_name = "${azurerm_resource_group.default.name}"

sku = "Standard"

}

resource "azurerm_recovery_services_protection_policy_vm" "default" {

name = "daily-policy"

resource_group_name = "${azurerm_resource_group.default.name}"

recovery_vault_name = "${azurerm_recovery_services_vault.default.name}"

timezone = "UTC"

backup {

frequency = "Daily"

time = "00:00"

}

retention_daily {

count = 90

}

}

- Another independent folder and state in which we define the VMs for a customer and enable the Azure Backup protection for each defined VM:

# This resource is defined to fix the timeout problem in the creation of 'azurerm_recovery_services_protected_vm.*' resources

resource "null_resource" "delay" {

provisioner "local-exec" {

command = "sleep 180"

}

depends_on = [

"azurerm_virtual_machine.portal",

"azurerm_virtual_machine.engine",

"azurerm_virtual_machine.gateway",

]

}

resource "azurerm_recovery_services_protected_vm" "portal" {

resource_group_name = "${data.azurerm_resource_group.default.name}"

recovery_vault_name = "${data.terraform_remote_state.shell.backup_vault_name}"

source_vm_id = "${azurerm_virtual_machine.portal.id}"

backup_policy_id = "${data.terraform_remote_state.shell.backup_policy_id}"

depends_on = ["null_resource.delay"]

}

resource "azurerm_recovery_services_protected_vm" "engine" {

count = "${var.number_of_engines}"

resource_group_name = "${data.azurerm_resource_group.default.name}"

recovery_vault_name = "${data.terraform_remote_state.shell.backup_vault_name}"

source_vm_id = "${element(azurerm_virtual_machine.engine.*.id, count.index)}"

backup_policy_id = "${data.terraform_remote_state.shell.backup_policy_id}"

depends_on = ["null_resource.delay"]

}

resource "azurerm_recovery_services_protected_vm" "gateway" {

count = "${var.number_of_gateways}"

resource_group_name = "${data.azurerm_resource_group.default.name}"

recovery_vault_name = "${data.terraform_remote_state.shell.backup_vault_name}"

source_vm_id = "${element(azurerm_virtual_machine.gateway.*.id, count.index)}"

backup_policy_id = "${data.terraform_remote_state.shell.backup_policy_id}"

depends_on = ["null_resource.delay"]

}
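The `data.terraform_remote_state.shell.*` references imply that the first folder exports two outputs. They were not posted, so the following is only a sketch of what they presumably look like, with output names inferred from the references above and written in the same 0.11 interpolation style.

```hcl
# In the first folder (the one holding the vault and policy state).
# Output names are assumptions inferred from the references in the
# second folder; they refer to the vault and policy resources above.
output "backup_vault_name" {
  value = "${azurerm_recovery_services_vault.default.name}"
}

output "backup_policy_id" {
  value = "${azurerm_recovery_services_protection_policy_vm.default.id}"
}
```

After the planned upgrade to Terraform 0.12, remote state outputs are read through the `outputs` attribute, for example `data.terraform_remote_state.shell.outputs.backup_policy_id`. One thing possibly worth checking (a guess, not confirmed in this thread) is whether the first folder's state has been refreshed since the API revert, because the value of `backup_policy_id` seen by the second folder is read from that stored state.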

If you need any clarification, don't hesitate to ask.

Just a comment to confirm that with a fresh creation of a Recovery Vault + policies, we no longer see the lower/upper case bug. Thanks for the API update.

Could you check the configuration sent by me to find out if we have some issue in our definitions?

Confirming that I still get the following on an existing vault and policy:

azurerm_recovery_services_protected_vm.VM will be updated in-place

~ resource "azurerm_recovery_services_protected_vm" "VM" {

~ backup_policy_id =

…

(Azure Cloud Shell, Terraform v0.12.16 (can't update it), azurerm provider v1.38)

@rnibandybooth in your Terraform Configuration are you tolower-ing the ID perchance?

spot on! I had left them as lower() from when this issue started & I'd tried that as a workaround, then left the lines disabled until now.

I can confirm that the protected items worked fine after removing lower().

Cheers!

@rnibandybooth thanks for confirming that - glad to hear that's now working for you 👍

Since there's been sufficient folks confirming this works after removing their workarounds (e.g. lower) I'm going to close this issue for the moment since I believe this has been resolved.

For the other issue mentioned in this thread - I've opened a new issue specifically to track adding Soft Delete for Recovery Services - please subscribe to that issue for updates.

Thanks!

Just for your information, we plan to release soft-delete capability for other workloads such as SQL running in Azure VMs. Can any one from the terraform community help us test this scenario before we roll-out to wider audience?

Thanks in advance.

@pvrk let's track that in #5177 - but yes - presumably that takes effect at the top-level Recovery Services Vault rather than on each individual item?

Yes, the soft-delete-capability works at a vault level. Even then, we would like to check with anybody who is using terraform for SQL backups so that we are sure that the feature can work as intended.

Hi @josh-barker,

unfortunately to use the lower function does not solve the problem in my case, as there are the resourcegroups and microsoft.recoveryservices which still force an in-place update, as the following screenshot illustrates.

Any other ideas? Will a bug fix be provided with 1.37.0 (@tombuildsstuff )? Thx

I've been debugging this issue for a while and found the cause to be the casing of the source backup_policy_id.

The way to fix this problem is to replace the strings below with their mixed-case forms in the terraform.tfstate file (I know this sounds crazy, but it does help fix the problem). Note: the italic _{values}_ are placeholders and will be unique to your environment.

Before Replace

"id": "/subscriptions/_{yoursubscriptionid}_/resourcegroups/_{yourresourcegroupname}_/providers/microsoft.recoveryservices/vaults/_{yourbackupname}_/backupPolicies/_{yourbackuppolicyname}_",

After Replace

"id": "/subscriptions/_{yoursubscriptionid}_/resourceGroups/_{yourresourcegroupname}_/providers/microsoft.RecoveryServices/vaults/_{yourbackupname}_/backupPolicies/_{yourbackuppolicyname}_",

You can find the line above under the following section of the terraform.tfstate file.

"mode": "managed",

"type": "azurerm_recovery_services_protection_policy_vm",

"name": "{yourbackuppolicyname}",

"provider": "provider.azurerm",

Conclusion: the issue occurs because the source backup policy ID is not in camel case as expected while the target backup ID (protected VM) has the lower-case pattern, so the two are flagged as a mismatch and an in-place update is required. Hence, we change the source backup policy ID to camel case as expected to put the change to rest :-)

I'm going to lock this issue because it has been closed for _30 days_ ⏳. This helps our maintainers find and focus on the active issues.

If you feel this issue should be reopened, we encourage creating a new issue linking back to this one for added context. If you feel I made an error 🤖 🙉 , please reach out to my human friends 👉 [email protected]. Thanks!
