Spacy: POS/Morph annotation empty with trained Transformer model

How to reproduce the behaviour

I have trained a Swedish Transformer model on UD-Treebank Talbanken using mainly the Quickstart Config from the Spacy website. I only added morphologizer and lemmatizer to the model. So the full pipeline looks like this:

['transformer', 'tagger', 'morphologizer', 'lemmatizer', 'parser']

With normal sentences everything seems to work fine, but when very short sequences _(such as nlp('Privat'))_ are passed through the model the POS and Morph annotation can be missing for some tokens.

Here are some examples with first the text sequence and then a list with respective POS and Morph annotations returned by token.pos_ and token.morph:

Privat [''] []

A-Ö ['PROPN', 'SYM', ''] [Case=Nom, , ]

A [''] []

B [''] []

C ['NOUN'] [Abbr=Yes]

D [''] []

E [''] []

F [''] []

G ['PROPN'] [Case=Nom]

H [''] []

I ['ADP'] []

J [''] []

K [''] []

L ['ADP'] []

M [''] []

N ['PROPN'] [Case=Nom]

O ['PUNCT'] []

P [''] []

Q [''] []

R [''] []

S ['ADP'] []

T [''] []

U [''] []

V [''] []

W [''] []

X [''] []

Y [''] []

Z [''] []

Å ['INTJ'] []

Ä [''] []

Ö [''] []

I also trained a model on both the UD-Talbanken and UD-Lines corpora. That model has the issue as well, but it seems to occur not that frequently:

Privat ['PUNCT'] []

A ['NOUN'] [Case=Nom|Definite=Ind|Gender=Neut|Number=Sing]

-Ö ['SYM', ''] [, ]

A ['ADV'] []

B ['PUNCT'] []

C ['PUNCT'] []

D ['PUNCT'] []

E ['PUNCT'] []

F ['PUNCT'] []

G ['PUNCT'] []

H ['PUNCT'] []

I ['PUNCT'] []

J ['PUNCT'] []

K ['PUNCT'] []

L ['PUNCT'] []

M [''] []

N ['PUNCT'] []

O ['PUNCT'] []

P ['PUNCT'] []

Q ['PUNCT'] []

R ['PUNCT'] []

S ['PUNCT'] []

T ['PUNCT'] []

U ['PUNCT'] []

V ['PUNCT'] []

W ['PUNCT'] []

X ['NOUN'] []

Y ['PUNCT'] []

Z ['PUNCT'] []

Å ['INTJ'] []

Ä ['ADV'] []

Ö ['PUNCT'] []

_(Please note that the list containing the morph annotation actually contains a spacy.tokens.morphanalysis.MorphAnalysis

object where the __str__/__repr__ method returns an empty string.)_

TAG and dep annotations _seem_ to be okay from what I can see.

Is there maybe some threshold for certainty/entropy that prevents predicting POS tags for uncertain cases?

If that is the case, than I would rather prefer uncertain tags over no tags, because currently I'm getting an error message from Spacy Matcher:

ValueError: [E155] The pipeline needs to include a morphologizer in order to use Matcher or PhraseMatcher with the attribute POS. Try using `nlp()` instead of `nlp.make_doc()` or `list(nlp.pipe())` instead of `list(nlp.tokenizer.pipe())`.

Please let me know if you need additional information. Thanks!

Your Environment

- Operating System:

Ubuntu 20.04.1 LTS - Python Version Used:

Python 3.8.3 (default, May 19 2020, 18:47:26)

[GCC 7.3.0] :: Anaconda, Inc. on linux

- spaCy Version Used:

3.0.0rc2

All 20 comments

Empty morphs are normal (maybe half of Talbanken tokens have an empty morph tag), but empty POS shouldn't happen when training from a UD corpus. Can you attach morphologizer/cfg from the trained model (you'll have to rename it cfg.txt to be able to attach it)?

Thanks, here comes the morphologizer/cfg: cfg.txt

Okay, the training data conversion isn't the problem. How many epochs did you train for? The colab notebook just shows an aborted example that hasn't actually trained at all? Then you might end up with the default empty morphs instead of the morphs learned from the training data, which won't have empty POS tags.

The morphologizer can be run with or without POS tags, but the default POS mapping for an empty internal POS+morph tag is only to "" rather than a POS like "X". I need to think about whether there's a good way to handle this, since I think it's where this unexpected behavior is coming from.

No, I trained until the training script stopped by itself. The notebook shows a later test that I aborted.

Here is an older training output also trained on Talbanken. The training script is the same, I only used different lemma_exc data.

The model performance itself, also on other data is also not all too bad. POS and DEP labels look reasonable.

However the UD-Talbanken treebank is not very large and the problem was less common when I added the UD-Lines treebank, which doubled the amount of training data. So there could be a correlation with the amount of training data.

I don't know how the morph model works, but could it be that even after training some morphs remained empty?

2020-11-02 16:03:39.495695: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library libcudart.so.10.1

ℹ Using GPU: 0

=========================== Initializing pipeline ===========================

Set up nlp object from config

Pipeline: ['transformer', 'tagger', 'morphologizer', 'lemmatizer', 'parser']

Created vocabulary

Finished initializing nlp object

Initialized pipeline components: ['transformer', 'tagger', 'morphologizer', 'lemmatizer', 'parser']

✔ Initialized pipeline

============================= Training pipeline =============================

ℹ Pipeline: ['transformer', 'tagger', 'morphologizer', 'lemmatizer',

'parser']

ℹ Initial learn rate: 0.0

E # LOSS TRANS... LOSS TAGGER LOSS MORPH... LOSS PARSER TAG_ACC POS_ACC MORPH_ACC LEMMA_ACC DEP_UAS DEP_LAS SENTS_F SCORE

--- ------ ------------- ----------- ------------- ----------- ------- ------- --------- --------- ------- ------- ------- ------

0 0 2649.04 1292.14 1295.62 1904.61 5.65 0.00 35.63 62.41 19.15 0.73 0.00 0.24

5 200 414165.46 349024.56 351501.46 314286.58 53.23 87.09 58.23 83.65 81.44 74.00 92.64 0.70

11 400 101147.58 163143.18 171550.76 81703.36 93.33 98.01 93.74 87.00 89.05 85.09 96.27 0.89

16 600 44678.66 13504.81 16589.89 31378.16 97.37 98.66 97.37 87.01 91.48 88.54 97.53 0.91

21 800 19743.16 3001.88 4720.89 11772.16 97.62 98.76 97.61 87.06 92.69 89.84 97.82 0.92

27 1000 8006.59 1393.63 2429.80 4405.85 97.76 98.82 97.87 87.01 92.39 89.73 97.63 0.92

32 1200 4936.63 643.29 1296.42 2246.22 97.73 98.85 97.84 87.04 92.40 89.82 97.72 0.92

38 1400 4526.94 384.91 717.74 1607.24 97.68 98.86 97.90 87.03 92.11 89.56 97.92 0.92

43 1600 2703.55 255.31 425.99 984.58 97.82 98.89 97.99 87.08 92.47 89.99 97.33 0.92

49 1800 2387.74 189.04 294.65 831.95 97.64 98.89 97.94 87.08 92.16 89.71 97.34 0.92

54 2000 2428.07 102.84 140.88 803.04 97.86 98.87 97.95 87.04 92.40 89.92 96.95 0.92

60 2200 1544.83 109.84 136.95 519.11 97.73 98.85 97.92 87.14 92.58 90.12 97.04 0.92

65 2400 1946.26 105.30 133.34 605.98 97.92 98.86 98.06 87.06 92.35 89.76 96.95 0.92

71 2600 1434.08 104.13 129.62 394.13 97.77 98.80 97.94 87.11 91.95 89.32 97.83 0.92

76 2800 1201.01 94.52 90.80 323.93 97.74 98.94 97.94 87.05 92.34 89.85 97.24 0.92

82 3000 1499.74 90.62 90.63 343.52 97.69 98.83 97.92 87.13 92.27 89.75 97.92 0.92

87 3200 1562.12 92.26 91.54 350.25 97.82 98.82 98.01 87.11 92.32 89.92 97.44 0.92

92 3400 1219.65 97.56 96.69 284.64 97.74 98.94 97.87 87.05 92.79 90.14 98.22 0.92

98 3600 1179.11 66.65 98.81 278.46 97.81 98.87 97.94 87.05 92.55 89.97 97.24 0.92

103 3800 1515.70 57.46 58.29 321.78 97.83 98.82 97.99 87.03 92.37 89.74 98.12 0.92

109 4000 1071.01 36.30 44.84 208.29 97.83 98.89 97.98 87.07 92.36 89.86 97.83 0.92

114 4200 986.62 78.65 75.49 204.65 97.81 98.84 97.97 87.04 92.41 89.90 97.83 0.92

120 4400 1073.68 58.29 70.97 205.42 97.72 98.84 97.93 87.08 92.40 90.00 97.63 0.92

125 4600 798.90 56.53 63.53 156.42 97.71 98.94 97.97 87.05 92.64 90.04 98.52 0.92

131 4800 688.09 29.01 32.98 152.94 97.75 98.86 97.91 87.09 92.63 90.16 98.22 0.92

136 5000 979.26 26.85 30.70 192.99 97.87 98.93 98.05 87.03 92.53 89.97 98.52 0.92

✔ Saved pipeline to output directory

output/model-last

Hmm, I trained the same model in colab (except with max_steps = 600 to speed things up) and I can't replicate the missing POS tags.

This is a frustrating thing for me to say, but I think you may be mixing up a trained model and an "aborted" model in your evaluation in the top report with the missing tags. The behavior in the top report is what I'd expect from a model that has barely seen any training examples and it doesn't match the training logs in the comment above. If I train a model with a very small max_steps (I tried 20), I do see missing POS tags.

I can replicate the kind of weird behavior (but with POS tags for all tokens!) for short documents:

a ADV Abbr=Yes

b PUNCT

c PUNCT

d ADV Abbr=Yes

e ADV Abbr=Yes

f PUNCT

g ADV Abbr=Yes

h ADV Abbr=Yes

i ADP

j PUNCT

k ADV Abbr=Yes

l PROPN Case=Nom

m ADV Abbr=Yes

n ADV Abbr=Yes

o CCONJ

p PUNCT

q PUNCT

r ADV Abbr=Yes

s PUNCT

t ADV

u ADV Abbr=Yes

v ADV Abbr=Yes

w ADV Abbr=Yes

x PUNCT

y PUNCT

z PUNCT

A PUNCT

B PUNCT

C PROPN Case=Nom

D PUNCT

E PUNCT

F PUNCT

G PUNCT

H PUNCT

I ADP

J PUNCT

K PUNCT

L PROPN Case=Nom

M PUNCT

N PUNCT

O PUNCT

P PUNCT

Q PUNCT

R PUNCT

S PUNCT

T PUNCT

U PUNCT

V PUNCT

W PUNCT

X PUNCT

Y PUNCT

Z PUNCT

I'm guessing this is related to what short texts look like in the training data and some strong influence (from the special boundary tokens?) that the last token is often PUNCT or a short text is often an abbreviation. I'm not extremely familiar with transformers, though, so this may not be the whole story.

God morning @adrianeboyd and thank you for testing!

This is a frustrating thing for me to say, but I think you may be mixing up a trained model and an "aborted" model in your evaluation in the top report with the missing tags.

I really wish that would be the case! But as mentioned, this did happen as well on the model with the combined corpora of Lines and Talbanken. I did also some quick testing previous models, with no much difference.

Finally I did run another training yesterday and checked it just now _(therefore I loaded it from google drive, but it's the same model)_ - unfortunately with similar issues.

Privat ['PUNCT'] []

Id-kort ['NOUN'] [Case=Nom|Definite=Ind|Gender=Neut|Number=Sing]

E ['PUNCT'] []

-Ö ['PUNCT'] []

SKV 120 ['NOUN', 'NUM'] [Abbr=Yes, Case=Nom|NumType=Card]

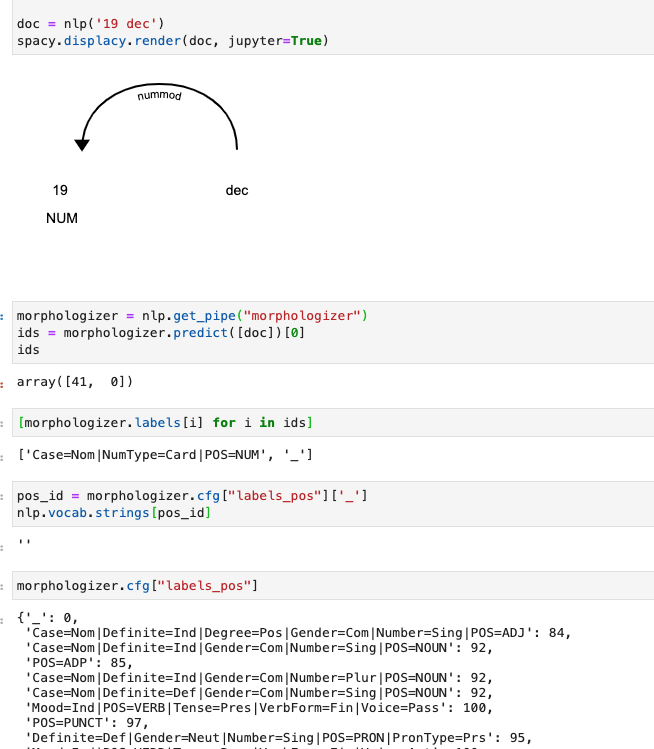

20 dec ['NUM', ''] [Case=Nom|NumType=Card, ]

19 dec ['NUM', ''] [Case=Nom|NumType=Card, ]

19 dec ['NUM', ''] [Case=Nom|NumType=Card, ]

14 dec ['NUM', ''] [Case=Nom|NumType=Card, ]

A ['PUNCT'] []

-Ö ['PUNCT'] []

A ['PUNCT'] []

B ['PUNCT'] []

C ['PROPN'] []

D [''] []

E ['PUNCT'] []

F ['PUNCT'] []

G [''] []

H [''] []

I ['PUNCT'] []

J [''] []

K [''] []

L ['PROPN'] []

M [''] []

N [''] []

O ['PUNCT'] []

P ['PUNCT'] []

Q ['PUNCT'] []

R ['PUNCT'] []

S ['ADP'] []

T ['PUNCT'] []

U ['PUNCT'] []

V ['PUNCT'] []

W ['PUNCT'] []

X ['PUNCT'] []

Y ['PUNCT'] []

Z [''] []

Å ['PUNCT'] []

Ä ['PUNCT'] []

Ö ['PUNCT'] []

Here is the respective notebook. This time with the correct respective outputs. I attached some test cases to the notebook as well.

The training script was running for quite a while 207epochs/7600steps. Though I did not check which model was actually the _best_ model, that could have been worth checking.

_Edit: The loss and accuracy scores in meta.json suggest that actually the last checkpoint at epoch 207 with "transformer_loss":235.5665145985 was taken for creating the package._

I will run another test with ~600-1000 steps. As you did not experience any issues with a low number of training steps, maybe the problem is actually less prominent with fewer steps?

An update: I did now two test trainings with both 600 and 1000 training steps and I could not reproduce this issue there with the given test cases (of course it could still be there, just less often).

Since the models where I experienced this issue all have been trained for much longer with low losses, there could be a correlation in this direction. Meaning that models with higher convergence _could_ increase the likelihood of seeing this issue, at least for Swedish.

Hmm, that would be really weird because the models should never see a missing POS during training from this data. I'll try training for longer to see if I can see the same behavior.

It's possible we'll need to add an option to the morphologizer about whether it should always assign a POS (X) or not.

Okay, I can replicate this with a model that's trained for longer. We'll look into it.

Okay, I can replicate this with a model that's trained for longer. We'll look into it.

Thanks!

_Just a quick question_:

Where is the pos attribute actually set? I couldn't find it in pipeline/tagger.

This is something that's changed in v3. The morphologizer sets both morph and POS here:

_Disclaimer: I probably know too little about how Spacy works behind the scenes._

But on a first glance it does not seem too strange that the Morphologizer predicts _ as this occurs quite often in the corpus. And _ is mapped to 0, and that in turn results in an empty string, after the lookup.

But I guess the POS=... prediction is (should be) handled somehow differently by the model since this does not appear in the original data.

_ doesn't actually appear in the training data because the model is trained on POS+morph tags, so the underlying tags learned by the model should (in theory) all contain at least POS=.... The _ label is added internally to make sure that the mapping from internal POS+morph->POS doesn't fail even if the model hasn't learned any labels, but this is probably the wrong way to handle things.

So technically _ is always a valid morphologizer label in 3.0.0rc2, but I don't understand why the transformer model in particular is predicting it when it's never seen it in the training data.

Ah, I think the logic is wrong when the gold annotation is misaligned.

_doesn't actually appear in the training data because the model is trained on POS+morph tags, so the underlying tags learned by the model should (in theory) all contain at leastPOS=.... The_label is added internally to make sure that the mapping from internal POS+morph->POS doesn't fail even if the model hasn't learned any labels, but this is probably the wrong way to handle things.So technically

_is always a valid morphologizer label in3.0.0rc2, but I don't understand why the transformer model in particular is predicting it when it's never seen it in the training data.

Thanks for the explanation!

Ah, I think the logic is wrong when the gold annotation is misaligned.

What kind of misalignment?

When the data is tokenized via wordpiece tokenizer? Is there actually a way of accessing the wordpiece-token/spacy-token alignment?

doc._.trf_data.tokens['input_texts'] only returns the whole wordpiece tokenized document.

The misalignment doesn't have to do with transformers in the end (although maybe the weird PUNCT results are from some transformer-related overfitting, I'm not sure).

A common type of misalignment is that spacy tokenizes A. into ['A.'] and the gold data annotates A. as two tokens ['A', '.']. For token-level tags like POS tags, if the model says POS=Y and the gold data says POS=Y, POS=Y we count this as aligned and let the model learn that this was correct (or not, if the model had said POS=X). If the model says POS=Y and the gold data says POS=Y, POS=Z (for this example the real case might be POS=NOUN, POS=PUNCT), then we treat it as misaligned, because we can't really know what the correct annotation would be for the single token. And the idea is that the misaligned cases should be ignored while training.

What the current code was doing wrong was learning the default empty label (no POS, no morph) for these cases rather than ignoring them when updating the model, so it did effectively have the empty label as input.

I'll remove the unneeded default empty label, so the model can only predict POS+morphs that were seen in the training data and update the code that handles misalignments so these cases are ignored.

The model trained with the modified code has these results for your test cases:

Privat ['PUNCT'] []

Id-kort ['NOUN'] [Case=Nom|Definite=Ind|Gender=Neut|Number=Sing]

E ['PUNCT'] []

-Ö ['PUNCT'] []

SKV 120 ['NOUN', 'NUM'] [Abbr=Yes, Case=Nom|NumType=Card]

20 dec ['NUM', 'NOUN'] [Case=Nom|NumType=Card, Abbr=Yes]

19 dec ['NUM', 'NOUN'] [Case=Nom|NumType=Card, Abbr=Yes]

19 dec ['NUM', 'NOUN'] [Case=Nom|NumType=Card, Abbr=Yes]

14 dec ['NUM', 'NOUN'] [Case=Nom|NumType=Card, Abbr=Yes]

A ['NUM'] [Case=Nom|NumType=Card]

-Ö ['PUNCT'] []

A ['NUM'] [Case=Nom|NumType=Card]

B ['PUNCT'] []

C ['NUM'] [Case=Nom|NumType=Card]

D ['PUNCT'] []

E ['PUNCT'] []

F ['PUNCT'] []

G ['PUNCT'] []

H ['PUNCT'] []

I ['PUNCT'] []

J ['PUNCT'] []

K ['PUNCT'] []

L ['ADJ'] [Case=Nom|Degree=Cmp]

M ['PUNCT'] []

N ['NUM'] [Case=Nom|NumType=Card]

O ['NUM'] [Case=Nom|NumType=Card]

P ['PUNCT'] []

Q ['NUM'] [Case=Nom|NumType=Card]

R ['PUNCT'] []

S ['NUM'] [Case=Nom|NumType=Card]

T ['PUNCT'] []

U ['PUNCT'] []

V ['PUNCT'] []

W ['PUNCT'] []

X ['PUNCT'] []

Y ['NUM'] [Case=Nom|NumType=Card]

Z ['PUNCT'] []

Å ['PUNCT'] []

Ä ['NUM'] [Case=Nom|NumType=Card]

Ö ['PUNCT'] []

Kind of strange still, but at least all with POS tags.

Thanks for the report and being persistent, this is a pretty bad bug...

Hi again and thank you for the explanation!

Great to hear that you already found a fix! Having these strange POS tags is still far better than having an empty field. :) But I guess it's not that surprising to have bad predictions if those kind of tokens never were seen during training time.

_Just out of curiosity: Do you know roughly how often do those token misalignments appear _(for example in your english training data)_?_

When do you expect this to be fixed in the nightly-branch?

Would it be possible to share the respective fix, so that I can fix that locally?

Look at the tokenization evaluation (token_acc/p/r/f) with nlp.evaluate or just for tokenization with scorer.score_tokenization:

https://nightly.spacy.io/api/scorer#score_tokenization

You have to do your own debugging to get it to output the misaligned cases (which you can also just do outside of spacy based on the gold vs. spacy tokenization), but one place is where it's counting the false positives here:

@adrianeboyd Thanks a lot!

I think this one can be closed, as PR #6363 fixes it?

I've noticed lately on Github that issues are not being linked properly anymore when the PR description has something like Fixes #6350 :(

Most helpful comment

This is something that's changed in v3. The morphologizer sets both morph and POS here:

https://github.com/explosion/spaCy/blob/8ef056cf984eea5db47194f2e7d805ed38b971eb/spacy/pipeline/morphologizer.pyx#L204-L205