Spacy: Segmentation fault on Ubuntu while training NER or TextCat

How to reproduce the behaviour

Hello!

I'm coming across a segmentation fault while training a model for NER and text classifier.

While I was training it exclusively on my local machine, this problem did not (and still do not) occur, it seems to appear only on my VMs.

Here is the context:

I want to train a lot of models, while making some slight variant on different parameters, as the dropout, the batch size, the number of iterations, etc. Also, for each different set of parameters, I train 10 models, in order to be sure that the good score of the model wasn't some kind of luck.

I first of all create a new blank model from a language:

nlp = spacy.blank("fr")

nlp.add_pipe(nlp.create_pipe(SENTENCIZER))

nlp.add_pipe(nlp.create_pipe(TAGGER))

nlp.add_pipe(nlp.create_pipe(PARSER))

nlp.begin_training()

I add the the newly created model some word vectors:

from gensim.models import FastText

gensim_model = FastText.load(vectors_dir)

gensim_model.init_sims(replace=True)

nr_dim = gensim_model.wv.vector_size

nlp.vocab.reset_vectors(width=nr_dim)

for word in gensim_model.wv.index2word:

vector = gensim_model.wv.get_vector(word)

nlp.vocab[word]

nlp.vocab.set_vector(word, vector)

I then call another python script to properly train the component I want, be it the NER or TextCat pipe. In this project, I have a custom "multi-ner" and "multi-textcat" to train each label as a separate submodel, as can be shown in the image:

The training is done with 5000 sentences for the NER and 2000 for the TextCat, and while it demands a bit of RAM, it's really nothing for the machines that have 16 Gigabytes.

After that, I modify the meta.json file in order to incorporate some project-related infos, and it's done.

\

As I said, I want to train a lot of models, so I train series of 10 models (each series with the same parameters). The 10 models aren't trained simultaneously but one after the other.

And here is the thing: while on my local machine I can train dozens of model without a single error, there is another behaviour on the VMs. After some training (usually I can train 2 models, so 2*20 iterations), I have a Segmentation Fault error. It's always when I try to load the model, and it can be just before a training, or before the meta file changes.

I don't really know how to investigate this error, and what I can do to solve it, any help or tip is welcome! :)

I was trying to be as exhaustive as possible, but as I am quite a newbie at writing issues I may have missed some important info, do not hesitate in asking more detail or code preview!

Your Environment

I'm using 3 different VM to train simultaneously the models, and the configuration is slightly different from one to another, but the bug is the same. Here is also my local configuration, the errorless one.

Each machine (VM or Local) has 16 Gigabytes RAM, and it appears to be more than enough.

Info about spaCy | Local | VM 1 | VM 2 & 3

--- | --- | --- | ---

spaCy version: | 2.2.4 | 2.2.4 | 2.2.4

Platform: | Windows-10-10.0.17134-SP0 | Linux-4.4.0-62-generic-x86_64-with-Ubuntu-16.04-xenial | Linux-4.15.0-106-generic-x86_64-with-Ubuntu-18.04-bionic

Python version: | 3.6.5 | 3.6.5 | 3.6.9

All 16 comments

Hi @mohateri, sorry to hear you're running into this trouble!

Can you paste an example error trace when the Segmentation fault happens?

Are you getting the segmentation fault BOTH when training NER and TextCat, or perhaps only with one of the two?

And finally, is the script you're using on local vs. VMs really truly 100% the same ?

Hi!

The segfault occurs when training the NER or the TextCat. I didn't tried yet training both of them, I'll do it and have you know.

The script is exactly the same, I'm positive because I pulled it from a git repo and there aren't any local changes on the VMs nor on my local machine.

As for the error trace, I don't really know how to do it... The only thing I get is:

[some custom printing infos throughout the training]

Segmentation fault (core dumped)

Is there a way to show the stacktrace of the error?

Unfortunately, segmentation faults are really annoying to debug. What is the last custom printing you see? Or is it always something else?

It's always when I try to load the model, whether it be before a training, or before changing the meta file.

As I have some custom components, here is the loading function that I use:

class Custom_Model(French):

def load_model(self, path, **overrides):

print("[CUSTOM_MODEL] Loading model:", path)

Language.factories["sentencizer"] = model_sbd.SentenceSegmenter

nlp = spacy.load(path, **overrides)

multi_ner = MultiNER(path, nlp)

nlp.add_pipe(multi_ner, multi_ner.name)

multi_textcat = MultiTextcat(path, nlp)

nlp.add_pipe(multi_textcat, multi_textcat.name)

if not nlp.has_pipe("sentencizer"):

nlp.add_pipe(nlp.create_pipe("sentencizer"))

nlp.tokenizer = custom_tokenizer(nlp)

nlp.clean_html = False

return nlp

def __init__(self, path, **overrides):

nlp = self.load_model(path, **overrides)

print("[CUSTOM_MODEL] Loading done")

self.meta = nlp.meta

self.vocab = nlp.vocab

self.pipeline = nlp.pipeline

self.tokenizer = nlp.tokenizer

self.clean_html = False

self.max_length = nlp.max_length

I always see the Loading model: [model_path] before the segfault, that occurs before the Loading done.

As you can see, we use our custom components in this project, and I don't know how much it can impact the loading.

Are you using custom components that were created/trained on your local Windows? If so, could you try recreating them on one of your Linux VMs, and try running again ?

Oh, I didn't think of that. Yes, they were created on my local Windows. Thank you, I'll give it a try!

Because it needs several training for the segfault to happen, I don't think I will be able to update this today.

Don't worry about that, just report back whenever you have time to look into it / run the scripts !

Hello, I've run some tests, and I hope the results will help us find the cause of the error.

First of all, these are the tests I ran:

Test | Local | VM 1 | VM 2 | VM 3

---|---|---|---|---

Components trained|NER and TextCat|NER and TextCat|NER and TextCat|NER and TextCat

Word vectors|Yes, all|No|Yes, pruned to 100000|Yes, all

The word vectors, if not pruned, have 962148 vectors. There is 947364 keys.

The models and their components were created locally on each machine.

And now for the results:

Local

It really surprised me, because for the first time I had a memory error on my local Windows.

Here is the stacktrace:

File "C:\Project\project-nlp\src\project_nlp\utils\model\model_custom.py", line 32, in load_model

multi_textcat = MultiTextcat(path, nlp)

File "C:\Project\project-nlp\src\project_nlp\utils\model\model_multi_textcat.py", line 18, in __init__

textcat.from_disk(path + TEXTCAT_MODEL_PATH + "/" + model, vocab=False)

File "pipes.pyx", line 228, in spacy.pipeline.pipes.Pipe.from_disk

File "C:\Project\v_env\lib\site-packages\spacy\util.py", line 654, in from_disk

reader(path / key)

File "pipes.pyx", line 219, in spacy.pipeline.pipes.Pipe.from_disk.load_model

File "C:\Project\v_env\lib\site-packages\thinc\neural\_classes\model.py", line 395, in from_bytes

dest = getattr(layer, name)

File "C:\Project\v_env\lib\site-packages\thinc\describe.py", line 44, in __get__

self.init(data, obj.ops)

File "C:\Project\v_env\lib\site-packages\thinc\neural\_classes\hash_embed.py", line 20, in wrapped

copy_array(W, ops.xp.random.uniform(lo, hi, W.shape))

File "mtrand.pyx", line 1307, in mtrand.RandomState.uniform

File "mtrand.pyx", line 242, in mtrand.cont2_array_sc

MemoryError

VM 1

I didn't get any errors. It seems that creating the model and the components on the VM was a good call.

VM 2

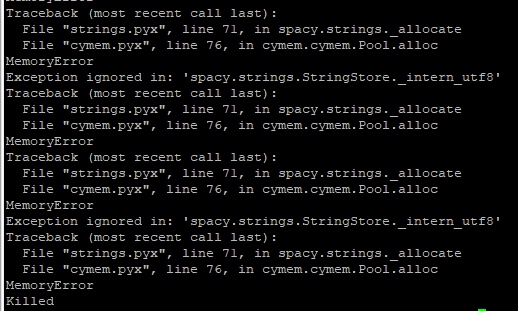

I got another memory error.

The stacktrace is full of this fragment, reiterated more than a hundred time - so much that I can't scroll up to see what started it.

VM 3

Here I didn't get Segmentation fault (core dumped) nor a Memory Error, but just

[CUSTOM_MODEL] Loading model: /path/to/model

Killed

\

For me the conclusion is that my word vectors are causing the error, because if they aren't present, all seems to work perfectly (although I'll run some other tests to be sure).

@adrianeboyd : do you see anything wrong with the way word vectors are defined here (cf original post) ?

I should add something that I didn't give enough attention before.

When I load the model with the word vectors, I have this warning message:

[CUSTOM_MODEL] Loading model: /path/to/model

src/project_nlp/training/entrainements.py:22: UserWarning: [W019] Changing vectors name from spacy_pretrained_vectors to spacy_pretrained_vectors_100000, to avoid clash with previously loaded vectors. See Issue #3853.

Naturally, when the segfault occurs, it is before the warning message appears, that's why I forgot about it until now.

Can the error come from the fact that spaCy loads all the vectors each time the model is loaded without freeing enough memory, thus ending in a memory error or a segmentation fault?

When calling the nlp.to_disk() function, is the memory used by the model and its word vectors freed?

Ok so I wanted to verify if the error came from that, so I created a new model and added to it the entire word vectors (cf code in the original post). I then just looped into loading and saving it:

for i in range(50):

nlp = model_custom.Custom_Model("path/to/model") # Loads the model and adds the custom components

nlp.remove_pipe("multi_ner")

nlp.remove_pipe("multi_textcat")

nlp.to_disk(model)

I have to add the two remove_pipe because if not I get the following error message:

KeyError: "[E002] Can't find factory for 'multi_ner'.

This usually happens when spaCy calls `nlp.create_pipe` with a component name that's not built in - for example, when constructing the pipeline from a model's meta.json.

If you're using a custom component, you can write to `Language.factories['multi_ner']` or remove it from the model meta and add it via `nlp.add_pipe` instead."

There was no error coming up, so it's my code that's doing something wrong.

I looked more deeply in code of the project, to find that we're doing something quite strange. The structure of a training, either for the NER or the TextCat, is the following:

- Loading the whole model

- Creating an EntityRecognizer or a TextCategorizer

- Training it on our corpus

- Saving it as a sub-model, in a subfolder (see the screenshot in the original post)

So we actually never save directly the whole model, and I think that's the cause of the segfault. I also think that we never save it due to the error message that we would get (cf above).

Now that I've reached this conclusion, I don't really know how to proceed, I don't know what to do in order to avoid the error while saving to whole model.

About the serialization of custom components, the documentation provides more information here:

When spaCy loads a model via its meta.json, it will iterate over the "pipeline" setting, look up every component name in the internal factories and call

nlp.create_pipeto initialize the individual components, like the tagger, parser or entity recognizer. If your model uses custom components, this won’t work – so you’ll have to tell spaCy where to find your component. You can do this by writing to the Language.factories:

from spacy.language import Language

Language.factories["my_component"] = lambda nlp, **cfg: MyComponent(nlp, **cfg)

That's also pretty much what the error message says there. Have you tried implementing this so you can just save out the entire model in one go?

Thank you, it worked!

Thanks for taking your time to help me find the problem

Great, I'm happy we got to the bottom of it!

I'll go ahead and close this issue as it seems resolved :-)